Emotion, Motivation, and Pain

Perception of Aversiveness

The perception of both pain intensity and pain aversiveness is not a simple feedforward process that reads out the amplitude of an ascending nociceptive signal to evoke a conscious unpleasant sensation (Apkarian et al 2005). A wide variety of factors influence perception, including expectation, uncertainty, multisensory input, behavioral and environmental context, emotional and motivational state, self versus externally induced pain, and controllability (Eccleston and Crombez 1999, Price 2000, Villemure and Bushnell 2002, Fields 2004, Wiech et al 2008, Ossipov et al 2010, Tracey 2010). This illustrates the complex process by which the brain constructs the sensory and emotional sensation of pain and challenges any standard “perception–action” model.

The best-studied contribution to perception comes from expectation, not least because this lies at the heart of placebo analgesia and nocebo hyperalgesia (Price et al 2008). In brief, expectation generally biases perception in the direction of that expectation: if one expects a higher degree of pain than is inflicted, the pain is typically felt as more painful. An expectation of mild or no pain similarly reduces the pain actually felt. The sources of information from which an expectation is derived are diverse and range from the implicit information inherent in pavlovian conditioning to explicitly provided verbal instruction, and a multitude of experimental manipulations attest to the ubiquity and complexity of these effects and their biological correlates (Voudouris et al 1989, Montgomery and Kirsch 1997, Price et al 1999, Benedetti et al 2005).

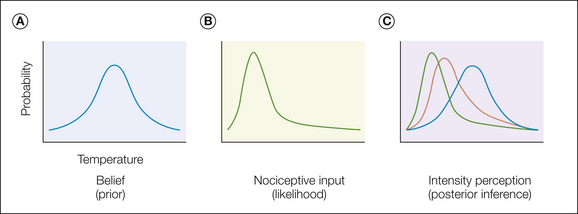

Underneath this apparent complexity may lie a relatively simple model, which we propose here. An expectation can be considered as a belief, and this in turn can be formalized as a probability distribution over possible intensities of pain (Fig. 17-2A). In the simplest case, this could be a prediction about the intensity of pain at a given point in time. Accordingly, the belief distribution incorporates the full breadth of an expectation with a mean intensity and uncertainty.

Figure 17-2 Pain affect as inference.

A, An expectation of any sort contains some sort of information about the nature of a forthcoming stimulus, for example, temperature, as shown here. This information may have variable fidelity and be represented as a probability distribution across possible events and hence captures the statistical knowledge embedded within an expectation. Narrow distributions represent more certain expectations. In principle, any aspect of pain, including intensity, timing, and duration, can be a component of an expectation. B, Ascending nociceptive information can itself be represented as a probability distribution that captures how likely a certain intensity of stimulus (temperature) is given ascending input. Again, a narrow distribution implies more certain information. C, Making an inference involves integrating the expectation (the “prior”) with the ascending nociceptive input (the “likelihood”) to estimate the most likely underlying intensity (the “posterior”) by taking all sources of information into account.

The relevance of this distribution comes from how this information is integrated with pain itself, although the exact nature of this integration has yet to be determined precisely. Possibly the most plausible way is to consider the effects of pain expectancy on a par with the effects of expectancy in other sensory modalities (e.g., Yuille and Kersten 2006, Feldman and Friston 2010), which consider the incoming sensory input as a probability distribution (in a similar fashion to expectancy) (Fig. 17-2B). In this manner, pain perception becomes a problem of inference in which one tries to infer the most likely intensity of an external nociceptive event given two sources of information, each with its own uncertainty. From a statistical perspective, the optimal way to make this inference is to use Bayes’ rule, which simply involves multiplying (and normalizing) the two distributions (Fig. 17-2C). This accounts for the observation that more certain expectancies (i.e., narrower distributions) appear to exert a stronger influence on subsequent pain (Brown et al 2008).
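The multiplication of prior and likelihood described above has a closed form when both are assumed to be Gaussian, which is an assumption made here only for illustration. The following minimal sketch shows how a more certain expectation pulls the inferred intensity further toward itself; the `Gaussian` class, the `combine` function, and the temperature values are hypothetical and are not taken from the studies cited.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gaussian:
    mean: float  # e.g., stimulus temperature in degrees Celsius (illustrative)
    sd: float    # standard deviation: larger means less certain

    @property
    def precision(self) -> float:
        # Precision is the inverse variance.
        return 1.0 / (self.sd ** 2)


def combine(prior: Gaussian, likelihood: Gaussian) -> Gaussian:
    """Posterior from multiplying two Gaussians and normalizing (Bayes' rule)."""
    total_precision = prior.precision + likelihood.precision
    # The posterior mean is the precision-weighted average of the two means.
    mean = (prior.precision * prior.mean
            + likelihood.precision * likelihood.mean) / total_precision
    return Gaussian(mean=mean, sd=total_precision ** -0.5)


# Hypothetical numbers: nociceptive input suggests 48, expectation suggests 44.
likelihood = Gaussian(mean=48.0, sd=2.0)
vague_prior = Gaussian(mean=44.0, sd=4.0)
certain_prior = Gaussian(mean=44.0, sd=1.0)

print(combine(vague_prior, likelihood).mean)    # stays close to the input
print(combine(certain_prior, likelihood).mean)  # pulled toward the expectation
```

In this sketch the posterior is always narrower than either source of information, and the narrower of the two inputs dominates the estimate.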

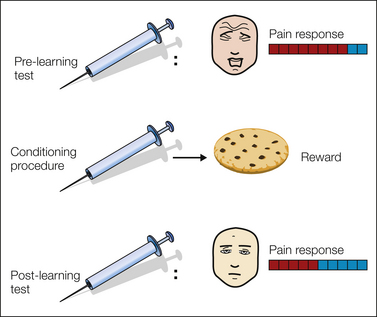

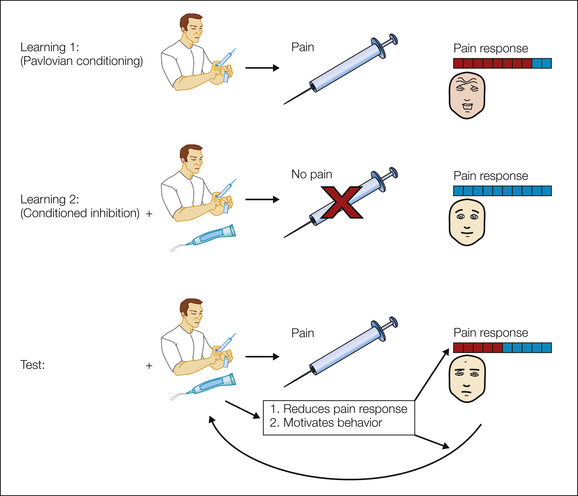

There is one further important component of pain perception that underlies the well-described distinction between the sensory perception of intensity and the emotional perception of unpleasantness. Whereas perception of intensity can be thought of as representing an accurate statistical estimate of the nature of the pain, the perception of unpleasantness incorporates the overall motivational significance of that pain to the individual. In this way it is clear that the same pain can have very different motivational significance in different physiological, behavioral, and environmental contexts. This can be illustrated in an experimental procedure called “counter-conditioning,” a pavlovian paradigm in which a painful stimulus repeatedly precedes a pleasant reward of some sort (Erofeeva 1916, 1921; Pearce and Dickinson 1975) (Fig. 17-3). As an individual learns the association between the pain and the reward, the aversiveness of the pain is diminished despite an apparently intact ability to appreciate the intensity of the pain. Indeed, this phenomenon has sometimes been used as a psychological strategy in the clinical management of pain (Turk et al 1987, Slifer et al 1995).

Figure 17-3 Counter-conditioning.

In this example, a painful injection is paired with a tasty snack in a pavlovian (i.e., classic) conditioning procedure. Before learning, the pain induces innate aversive responses, but after repeated pairing, the reward (appetitive) prediction causes appetitive responses to be elicited.

The concepts of motivational value and utility lie at the heart of emotional accounts of pain. In economic theories of value (discussed in more depth below), the overall expected value of an event is the sum, over its possible outcomes, of the product of each outcome’s probability and value. Thus, since the value function is monotonically increasing (more pain is always worse than less pain), perceptual uncertainty about pain should exert a more dominant effect when above, as opposed to when below, a mean expectation. Although behavioral data suggest that this may well be the case (Arntz and Lousberg 1990, Arntz et al 1991), well-designed studies manipulating the statistics of expectancy and painful stimulation are lacking.

Even though simple expectancy phenomena may exert an influence on pain affect, it seems unlikely that this explains other instances of modulation (Basbaum and Fields 1978, Fanselow and Baackes 1982, Willer et al 1984, Lester and Fanselow 1985, Gebhart 2004, Granot et al 2008). In particular, pain modulation often displays a sensitivity to behavioral context beyond that which an account based on the mean motivational value of pain can explain. Instead, modulation appears to be the result of a “decision” by the pain system (Fields 2006) (e.g., by reducing ascending nociceptive input), which necessitates an account of how motivation and value relate to decision making.

Motivation and Value

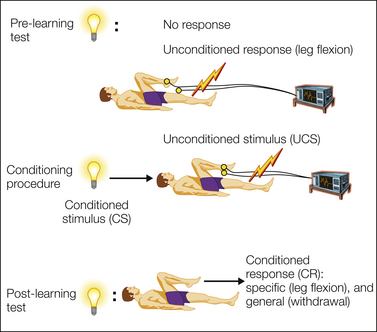

The concept of value captures the implications that a painful episode has on the overall welfare of the individual. In principle, value can be defined on a transitive scale of preferences: pain A has a smaller aversive value than pain B if it is consistently preferred in a forced choice between the two. A dominant approach to understanding motivation in animals and humans has been the study of the acquisition of value by events that predict an event of intrinsic value (such as pain). Thus not only does pain itself have aversive value, but so also do events that predict its probable occurrence; when fully predicted in this way, pain merely fulfills its expectation. The core mechanisms of prediction have been studied for decades via pavlovian conditioning, which, despite its apparent simplicity, betrays a complex and critically important set of processes that lie at the heart of animal and human motivation (Mackintosh 1983) (Fig. 17-4).

Figure 17-4 Pavlovian conditioning.

The basic pavlovian conditioning paradigm involves predictive pairing between a cue (the conditioned stimulus) and a painful stimulus (the unconditioned stimulus) and results in the acquisition of a response (the conditioned response) to the cue. The conditioned response illustrates the acquisition of value, and its magnitude correlates with the magnitude of the unconditioned stimulus. Conditioned responses can be specific to the nature of the anticipated threat, such as arm flexion, or general, such as withdrawal.

The conditioned response has two important properties. First, it is not merely a copy of the unconditioned response (stimulus substitution) but is appropriate to anticipation of the painful event. Second, it is not unitary in nature but consists of stimulus-specific and general affective components. Stimulus-specific responses reflect the precise nature of the pain being anticipated (e.g., leg flexion in anticipation of foot shock or eye blink in response to a puff of air onto the eye); general responses are specific only to valence and are shared across predictions for any aversive outcome, with the key example being a withdrawal response. Such general aversive responses seem to betray a unitary underlying aversive motivational system, and ingenious experimental designs such as trans-reinforcer blocking provide good evidence of this concept (see Dickinson and Dearing 1979).

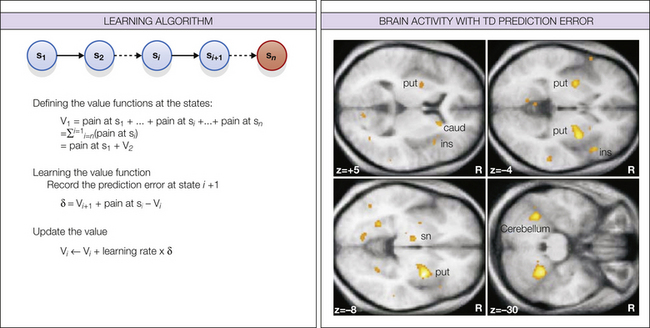

One of the challenges in the study of pavlovian motivation is to understand how value is acquired. The importance of statistical contingency is illustrated by the fact that increasing magnitude, probability, and temporal proximity of a painful stimulus increase the magnitude of the pavlovian value (Mackintosh 1983). Furthermore, it is known that learning depends on a prediction error, the difference between the expected and actual value of an outcome. Thus, if an outcome is worse (more painful) than expected, the aversive value of the preceding cue increases, and if it is better than expected, the aversive cue value diminishes. This is captured within reinforcement learning models of pain conditioning (Seymour et al 2004, Dayan and Seymour 2009), which describe in algorithmic terms what quantities the brain uses in constructing representations of aversive motivational value. Reinforcement learning models are similar to the well-known Rescorla–Wagner (1972) error-based learning rule but extend it to real-time learning in which pavlovian cues can transfer value to each other when chains of them occur in sequential relationships (Sutton and Barto 1987, 1998) (Fig. 17-5).

Figure 17-5 Temporal difference (TD) learning.

TD learning deals with the problem of how to make predictions about rewards or punishments when they occur at variable delays and with variable probabilities. It assigns all states a value that is equal to the total reward or punishment that is expected to occur if you find yourself in that state. This total reflects the sum of expected future outcomes but typically discounts outcomes that occur in the more distant future. The key feature of TD learning comes from the way in which these values are learned. Instead of waiting for the outcomes themselves, it uses the value of the next state as a surrogate estimate of the true value and thus “bootstraps” value predictions together. State values can then be learned by using a simple error-based learning rule in which the error between the expected and actual value of successive states is used to update the value of the preceding state, which to an extent depends on the learning rate. (From Seymour B, O’Doherty JP, Dayan P, et al 2004 Temporal difference models describe higher-order learning in humans. Nature 429:664–667, Fig. 2.)

In the example below, consider a sequence of states that end in a terminal state in which pain is experienced. The goal of learning is to predict the value of the pain expected to occur if one finds oneself at state 1 (i.e., to learn to make predictions as early as possible). Early in learning, the states immediately preceding the terminal pain state acquire aversive value, but with more experience, the value is transferred backward to earlier states. Beyond this simple example, the value-learning process can easily be modified to incorporate discounting of future options and probabilistic state transitions (Kaelbling et al 1996, Sutton and Barto 1998).
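The backward transfer of value along a chain of states can be sketched with a tabular TD(0) update. This is a minimal illustration under stated assumptions rather than the model fitted in the cited studies: the function name `td_chain`, the four-state chain, the pain magnitude of -1, and the learning rate of 0.5 are all hypothetical choices, and pain is assumed to be delivered on leaving the last cue state.

```python
def td_chain(n_states=4, pain=-1.0, alpha=0.5, gamma=1.0, episodes=100):
    """Run TD(0) on a chain of cue states that ends in pain.

    Returns a list holding the cue-state values after each episode.
    alpha is the learning rate; gamma is the discount factor.
    """
    # One extra entry for the terminal state, whose value stays at 0.
    values = [0.0] * (n_states + 1)
    history = []
    for _ in range(episodes):
        for s in range(n_states):
            # The painful outcome arrives on leaving the last cue state.
            outcome = pain if s == n_states - 1 else 0.0
            # Prediction error: outcome plus the next state's value,
            # minus the current estimate for this state.
            delta = outcome + gamma * values[s + 1] - values[s]
            values[s] += alpha * delta
        history.append(values[:n_states])
    return history


history = td_chain()
print(history[0])   # only the state just before pain has aversive value
print(history[1])   # value has started to move back to the preceding state
print([round(v, 3) for v in history[-1]])  # all states now predict the pain
```

With `gamma` set below 1, earlier states would converge on smaller (discounted) aversive values instead of the full value of the pain.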

Relief, Reward, and Opponency

The fact that not experiencing pain when expected can be subjectively rewarding illustrates the special relationship between pain (and punishment more generally) and reward (Cabanac 1971). Relief represents a counter-factual state and is bestowed with rewarding properties entirely on the basis of an unfulfilled prediction of aversiveness.

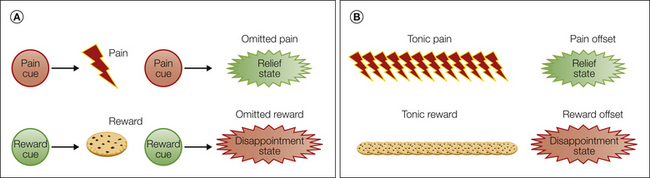

The motivational basis of relief has a long history in experimental psychology and comes in two forms. The first relates to the omission of an otherwise expected phasic punishment and is the opposite of disappointment (Fig. 17-6). Konorski (1967) first formalized this mutually inhibitory relationship in experimental paradigms such as conditioned inhibition. In this case, a cue that predicts the omission of an otherwise expected pain stimulus acquires appetitive properties, as demonstrated by the difficulty in getting such cues to acquire aversive contingencies (retardation) and their ability to reduce aversive responses when paired with an aversive cue (summation) (Rescorla 1969).

Figure 17-6 Konorskian and Solomon–Corbit opponency.

A, Konorskian opponency deals with the relationship between phasic inhibitors of excitatory aversive and appetitive states. These give rise to two opponent states, relief and disappointment, respectively. B, Solomon–Corbit opponency derives from states (and their predictors) that relate to the offset of tonically present states.

A slightly different type of relief occurs when tonically presented pain is terminated or reduced. Solomon and Corbit (1974) described how a prolonged aversive state leads to a compensatory rebound affective state when relieved: the longer and greater the magnitude of the aversive state, the more pronounced the pleasantness of the relief state. This illustrates the importance of the relationship between tonic and phasic states, with phasic events being judged not so much by their absolute value but by the relative advantage that they confer in comparison to an existing norm or baseline affective state (Seymour et al 2005, Baliki et al 2010). This is a natural way to motivate homeostatic behavior and can easily be incorporated within a learning framework by learning the value of states over two different time scales (Schwartz 1993, Mahadevan 1996): a slowly learned baseline affective state and a rapidly learned phasic affective state. In this way, a slow time scale component can act prospectively as the comparator for future expected events.
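The idea of judging an event against a slowly learned baseline can be illustrated with a deliberately simplified sketch. It models only the slow component, as a running average of recent outcomes, and scores the offset of pain relative to that baseline; the function name `relief_on_offset`, the rate of 0.05, and the pain magnitudes are assumptions made for illustration and do not reproduce the average-reward algorithms cited above.

```python
def relief_on_offset(tonic_pain: float, duration: int,
                     slow_rate: float = 0.05) -> float:
    """Relative value of pain offset after `duration` steps of tonic pain.

    A slowly updated baseline tracks the ongoing aversive state; when the
    pain stops, the pain-free outcome (value 0) is judged against it.
    """
    baseline = 0.0
    for _ in range(duration):
        outcome = -tonic_pain  # ongoing pain has negative value
        # Slow, error-driven update of the baseline affective state.
        baseline += slow_rate * (outcome - baseline)
    # Pain offset: a neutral outcome compared with an aversive baseline
    # yields a positive relative value, i.e., relief.
    return 0.0 - baseline


print(relief_on_offset(tonic_pain=1.0, duration=5))   # brief pain, small relief
print(relief_on_offset(tonic_pain=1.0, duration=50))  # long pain, larger relief
print(relief_on_offset(tonic_pain=2.0, duration=50))  # stronger pain, larger still
```

Under these assumptions relief grows with both the duration and the magnitude of the preceding tonic pain, in line with the Solomon–Corbit description.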

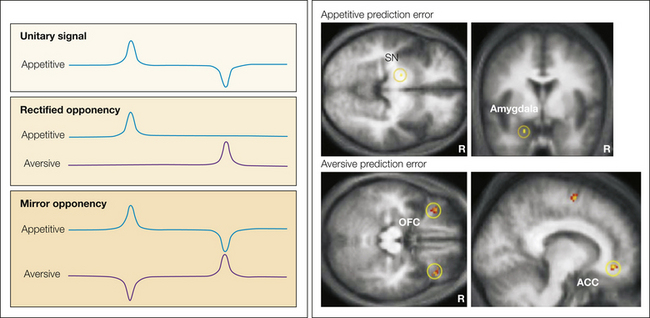

There are three possible schemes by which appetitive and aversive motivational systems might be implemented (ultimately at a neural level) (Fig. 17-7): a single system coding that spans both rewards and punishments, a rectified opponency scheme in which only positive quantities are coded by distinct reward and punishment arms, and a mirror opponency scheme in which the reward and punishment arms code the full range of positive and negative outcomes (which might incorporate a limited amount of rectification). Although studies are limited, existing neurophysiological evidence supports a mirror opponency scheme (Seymour et al 2005).

Figure 17-7 Schemes of opponency.

There are three basic ways of implementing opponent appetitive and aversive motivational representations at a neural level. A functional magnetic resonance imaging study of predictors of the phasic offset of tonic pain found evidence to support the third way, the mirror opponent pattern, with aversive coding in the lateral orbitofrontal cortex, appetitive coding in the amygdala, and mixed coding in the striatum. (From Seymour B, O’Doherty JP, Koltzenburg M, et al 2005 Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nature Neuroscience 8:1234–1240, Figs. 3 and 4.)

In real-world aversive behavior, probably the most important role of opponency lies in controlling the later stages of instrumental escape and avoidance behavior, where the rewarding property endowed on the avoided state is able to reinforce behavior (discussed below). In human pain research, opponency also arises in placebo analgesia paradigms, which typically use conditioned inhibition designs; for example, placebo analgesic treatment is associated with covert reduction in otherwise expected pain, and thus the placebo treatment becomes a conditioned inhibitor. However, motivational opponency deals with the ability of placebo treatment to motivate behavior, and this may be distinct from an effect on perception of the subsequent pain state itself (Fig. 17-8). This echoes the distinction between “liking” a relief state (conditioned analgesia: a perceptual phenomenon) and “wanting” it (conditioned reinforcement: a motivational phenomenon), both of which can be studied with pavlovian paradigms.

Figure 17-8 Relief and conditioned inhibitors.

A conditioned inhibitor of pain predicts the absence of otherwise expected pain; in this example, the image of the doctor with a needle acts as an excitatory pavlovian aversive cue, and the green topical cream acts as the conditioned inhibitor. The conditioned inhibitor has two distinct effects. First, it can modify the subsequent experience of pain (“liking” a relief state) if it occurs, and this relieving sensation may in part be opioid dependent, as in placebo analgesia (Amanzio and Benedetti 1999). Second, it is associated with the reinforcing state (“wanting”) of relief that motivates action and may in part be dopamine dependent (Beninger et al 1980a, 1980b; Moutoussis et al 2008; Bromberg-Martin et al 2010).

Action and Decision Making

Ultimately, the evolutionary justification for pain rests on its ability to engage action that reduces harm. Action is central to theories of motivation and allows an organism to control its environment. The simplest forms of action are the innate responses directly associated with pain and the acquisition of pavlovian pain responses. However, this simplicity is superficial since it conceals a complex and highly specific array of behavior (Bolles 1970, Fanselow 1980, Gray 1987, Fanselow 1994). This includes complex repertoires of aggressive (e.g., rearing, fighting) and fear-related (e.g., fleeing and freezing) responses, often with strong sensitivity to the specific nature of the environment. Despite the sophistication of these responses, they ultimately depend on a “hard-wired” innate system of action, which lacks the flexibility to deal with the diversity and uncertainty of many real-world complex environments. This shortcoming is overcome by instrumental learning (operant conditioning) (Mackintosh 1983), which combines the ability to engage in novel actions with the capacity to assess their merits based on an outcome; actions that appear to lead to beneficial outcomes are reinforced (rewards), and actions that lead to aversive outcomes such as pain are inhibited (punishments).

Instrumental learning in the face of pain can take two forms. In escape learning, actions lead to the termination of a tonically occurring pain stimulus, whereas in avoidance learning, they lead to omission of the otherwise expected onset of pain (Mowrer 1951). These distinct paradigms illustrate several important points about the relationship between pavlovian and instrumental learning and between rewards and punishments. First, instrumental learning harbors the problem of how one selects novel actions in the first place before their outcomes are known. Pavlovian learning often “primes” actions away from punishments, and indeed it might often be difficult to know when an action is fundamentally pavlovian or instrumental (Dayan et al 2006). Thus, early in learning, actions may be dominated by pavlovian responses, but control is transferred to instrumental actions once their benefit can be reinforced. Second, escape behavior often precedes avoidance; as escape from a tonic punishment is learned, this action will often be elicited earlier and earlier until it ultimately precedes onset of the stimulus (if this is predictable by some sort of cue). Finally, the nature of instrumental learning changes through the course of acquisition: as successful escape and avoidance actions are discovered, the outcome acts as a relief (i.e., a conditioned inhibitor or “safety state”) from the preceding punishment, which is thought to reinforce behavior as though it were a primary reward (Brown and Jacobs 1949, Dinsmoor 2001). The nature of relief after escape and avoidance parallels the Solomon–Corbit and Konorskian opponent motivational states, respectively, and illustrates their importance in action control.

Pavlovian responses and simple instrumental action systems (“habits”) all harbor a common property; namely, they are ultimately “reflexive” since they evoke action in response to events in the environment: either pain itself or cues that act as conditioned (for pavlovian responses) or discriminative (for instrumental actions) stimuli. However, animals and humans often engage in action spontaneously, ostensibly to honor their goals. Goal-directed action represents a fundamentally different value and action system, a central property of which is reliance on internal representation of the outcome of an action. This representation is central both to the ability to plan actions to influence the likelihood of an outcome’s occurrence (to increase or decrease it for rewards or punishments, respectively) and to experimental endeavors to differentiate goal-directed actions from stimulus-evoked habits. The latter has typically relied on the sensitivity of action to some aspect of the motivational state, such as hunger or satiety. For example, goal-directed actions for food are characteristically sensitive to satiety, with a reduced motivation for food when sated implying a reappraisal of the value of the goal in the context of the motivational state, a process that does not occur for habit-like actions. Although indirect evidence of this dissociation exists in aversive learning, for instance, by using ambient temperature to manipulate the value of a heat source (Hendersen and Graham 1979) and using emetics to manipulate the value of food (Balleine and Dickinson 1991), there has as yet been no clear demonstration in studies of pain.

Another possible source by which actions and values can be acquired is through vicarious observation, and indeed in social organisms such as humans, this may well be one of the predominant sources of information. Observational motivational learning can take two forms: imitation, in which one merely reproduces the actions of another, and emulation, in which one “reverse-engineers” the goals of the action to infer its value. It is important to note that the informational value of vicarious learning is a different construct from empathy and schadenfreude. These describe the “other-regarding” motivational value associated with pain witnessed in others and depend on the existing nature of the relationship between the two individuals: cooperative pairs share empathy, and competitive pairs exhibit positive feelings derived from the distress of others (Singer et al 2006). Thus, empathy is an aversive state because an individual would engage in actions to reduce harm to a conspecific, and this is distinct from the positive informational value associated with understanding what chain of events led to the conspecific receiving pain in the first place.

Economic Approaches to Pain

Economic approaches to pain are founded on an axiomatic treatment of value along the key dimensions of amount, probability, and time (Kahneman and Tversky 1979, Camerer 2003). In stark contrast to clinical and psychological approaches to pain, an economic approach relies little, if at all, on subjective rating of pain to infer its underlying motivational value, a quantity referred to as “experienced utility.” Rather, it relies on observing the decisions that people make regarding pain to infer a value, or “decision utility.” Behavioral economists have long recognized the dissociation between these two quantities, as manifested, for instance, by the fact that people’s subjective rating of goods is a poor predictor of their subsequent purchasing behavior.

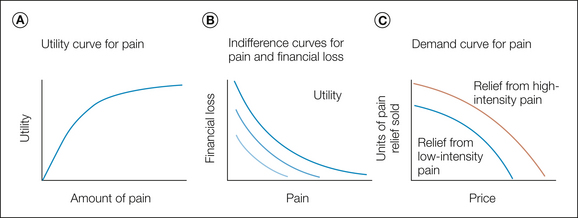

At the heart of economic theories of value is the concept of utility, which is effectively analogous to motivational value in experimental psychology. Importantly, for most quantities (including pain), utility is not linearly related to amount (Cabanac 1986, Rottenstreich and Hsee 2001, Berns et al 2008b) (the amount might refer to the intensity of pain, its duration, or the number of episodes of pain). However, utility functions are generally monotonically increasing and typically concave, as shown in Figure 17-9A.

Figure 17-9 A, Hypothetical utility function for pain. The slope of the function becomes shallower with increasing amount, thus illustrating decreasing marginal utility at greater amounts, and hence a marginal increase in pain has less additional impact as the overall amount of pain increases. B, Hypothetical indifference curves plotting the differing quantities of two amounts of a good (in this example loss of money and amount of pain) between which an individual is indifferent. The two lines represent two net amounts of utility, equivalent to contour lines of equal utility on a plane of utility. C, Demand curves illustrate the amount of pain relief that would be consumed if relief were sold at a certain price. Relief from higher pain is more leftward, thus indicating that it would sell well at higher prices.

Different approaches can be used to establish the shape of the utility function. One is to identify preference indifference between differing amounts and probabilities of a “good,” typically the certainty equivalent to a 50% chance of receiving the good, but this is complicated by the observation that people clearly overweight low probabilities of pain (Rottenstreich and Hsee 2001, Berns et al 2008a). A different approach is to assess indifference between pain and some other good, such as the loss of money (see Fig. 17-9B). Importantly, most approaches rely on trading two options, and using preference to infer utility in this way makes assessment incentive compatible; therefore, it makes submitting true judgments rational in a way that passive subjective ratings are not.
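The certainty-equivalent approach can be made concrete with a small worked sketch. It assumes a power-law disutility for pain, u(x) = x^rho with rho below 1 giving the concave shape of Figure 17-9A, and it ignores probability weighting, which the text notes is a real complication. The functional form, the names `certainty_equivalent` and `infer_exponent`, and the numbers are illustrative assumptions, not values from the cited studies.

```python
import math


def certainty_equivalent(amount: float, probability: float, rho: float) -> float:
    """Sure amount of pain judged equal to a gamble on `amount` of pain.

    Assumes disutility u(x) = x ** rho and no distortion of probabilities.
    """
    expected_disutility = probability * amount ** rho
    # Invert the utility function to return to units of pain.
    return expected_disutility ** (1.0 / rho)


def infer_exponent(amount: float, probability: float, observed_ce: float) -> float:
    """Recover rho from an observed indifference point under the same assumptions."""
    return math.log(probability) / math.log(observed_ce / amount)


# A 50% chance of 8 units of pain, with a concave disutility (rho = 0.5).
ce = certainty_equivalent(amount=8.0, probability=0.5, rho=0.5)
print(ce)                            # less than the expected amount of 4 units
print(infer_exponent(8.0, 0.5, ce))  # recovers the exponent used above
```

In practice the indifference point would be found by offering a series of forced choices, and the inferred exponent would be biased if low probabilities are overweighted.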

Relief can be addressed in a similar manner, with individuals making preference judgments between financial loss and pain by allowing them to buy relief from otherwise expected pain. This sort of behavioral task is ultimately a (goal-directed) avoidance paradigm since it requires evaluation of the net utility of an anticipated amount of pain relief and a super-added financial cost. One way of illustrating subsequent behavior is to express utility as a demand curve (Fig. 17-9C), which shows how much relief a hypothetical “shop” would sell as a function of different prices (Vlaev et al 2009).

A distinct component of economic utility is its sensitivity to time, and it is well documented that people discount rewards that occur successively further in the future. However, discounting studies of pain have revealed a surprising result: many people prefer to experience a painful stimulus now over an equivalent stimulus in the future (Berns et al 2006). This is potentially an important observation since it suggests that the actual process of anticipating pain is aversive in itself (termed “affect rich”). In other words, not only does future pain carry the aversive utility of that expectation, but there is also added aversive utility associated with the state of anticipation per se (Loewenstein 2006). This sort of inverse discounting has potentially important implications for patient decision making about their health since it suggests that people will prefer to “get pain out of the way” if they have the option. Although this seems advantageous, such as by encouraging someone to undergo a painful investigation sooner rather than later, it may mean that people avoid the actual process of thinking about possible future pain when contemplating actions with less clear-cut outcomes (Dayan and Seymour 2009, Huys and Dayan 2009).
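The way an aversive state of anticipation can reverse ordinary discounting can be shown with a toy calculation. It assumes exponential discounting and a fixed cost of anticipation for every time step spent waiting; this is a hypothetical simplification for illustration and is not the model used in the studies cited above. The function name `disutility` and all parameter values are assumptions.

```python
def disutility(pain: float, delay: int, discount: float = 0.9,
               dread_per_step: float = 0.0) -> float:
    """Total aversive utility of a pain delivered after `delay` time steps.

    Combines the discounted pain itself with a discounted cost of
    anticipation incurred on every step spent waiting for it.
    """
    waiting_cost = sum(dread_per_step * discount ** k for k in range(delay))
    return waiting_cost + pain * discount ** delay


# Without an anticipation cost, delaying the pain makes it less aversive.
print(disutility(1.0, delay=0), disutility(1.0, delay=5))

# With an anticipation cost, the delayed pain becomes the worse option.
print(disutility(1.0, delay=0, dread_per_step=0.2),
      disutility(1.0, delay=5, dread_per_step=0.2))
```

Under these assumptions, whether someone prefers pain sooner or later depends on how the per-step cost of anticipation compares with the saving from discounting.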

Conclusion

Studies of the emotional and motivational basis of pain reveal a diverse and complex set of processes by which the affective experience of pain is realized. Current research seeks a mechanistic account informed by disciplines such as engineering, computer science, and economics, and this may be critical in understanding how the emotional phenomenology of unpleasantness is generated.

We can draw several broad conclusions. First, pain cannot be explained purely by a simple perception–action model in which peripheral nociceptive signals reflexively evoke a negative emotion. Rather, pain reflects the continuous state of a system that incorporates expectations and beliefs; the behavioral, physiological, and motivational state of the self; and the goals and intentions of future action. Understanding the relationship between online learning and state representation is likely to be critical in understanding how chronic pain develops as a pathological entity (Apkarian et al 2009).

Second, pain is unlikely to be underpinned by a single unified motivational value system. Rather, evidence points to a number of distinct systems of action, including innate, habit-like, and goal-directed systems. Furthermore, these systems may be distinct from the process of pain perception, from which the human conscious judgment of unpleasantness derives. Understanding these multiple value systems, especially the interactions among them, is likely to be critical for interpreting the results from animal experiments, in which explicit judgments are not possible.

Third, understanding decision making is likely to be critical for understanding the complexity of intrinsic pain modulation pathways. It remains an important challenge to understand to what extent pain modulation can be explained by simple perceptual processes and to what extent it derives from a “decision” by the pain system. In the case of the latter, understanding the underlying control system for such decisions may hold the key to novel analgesic strategies in humans.

The references for this chapter can be found at www.expertconsult.com.

References

Amanzio M., Benedetti F. Neuropharmacological dissection of placebo analgesia: expectation-activated opioid systems versus conditioning-activated specific subsystems. Journal of Neuroscience. 1999;19:484–494.

Apkarian A.V., Baliki M.N., Geha P.Y. Towards a theory of chronic pain. Progress in Neurobiology. 2009;87:81–97.

Apkarian A., Bushnell M., Treede R., et al. Human brain mechanisms of pain perception and regulation in health and disease. European Journal of Pain. 2005;9:463–484.

Arntz A., Lousberg R. The effects of underestimated pain and their relationship to habituation. Behaviour Research and Therapy. 1990;28:15–28.

Arntz A., van den Hout M.A., van den Berg G., et al. The effects of incorrect pain expectations on acquired fear and pain responses. Behaviour Research and Therapy. 1991;29:547–560.

Baliki M.N., Geha P.Y., Fields H.L., et al. Predicting value of pain and analgesia: nucleus accumbens response to noxious stimuli changes in the presence of chronic pain. Neuron. 2010;66:149–160.

Balleine B., Dickinson A. Instrumental performance following reinforcer devaluation depends upon incentive learning. Quarterly Journal of Experimental Psychology. B, Comparative and Physiological Psychology. 1991;43:279–296.

Basbaum A.I., Bautista D.M., Scherrer G., et al. Cellular and molecular mechanisms of pain. Cell. 2009;139:267–284.

Basbaum A.I., Fields H.L. Endogenous pain control mechanisms: review and hypothesis. Annals of Neurology. 1978;4:451–462.

Benedetti F., Mayberg H.S., Wager T.D., et al. Neurobiological mechanisms of the placebo effect. Journal of Neuroscience. 2005;25:10390–10402.

Beninger R.J., Mason S.T., Phillips A.G., et al. The use of conditioned suppression to evaluate the nature of neuroleptic-induced avoidance deficits. Journal of Pharmacology and Experimental Therapeutics. 1980;213:623–627.

Beninger R.J., Mason S.T., Phillips A.G., et al. The use of extinction to investigate the nature of neuroleptic-induced avoidance deficits. Psychopharmacology. 1980;69:11–18.

Berns G.S., Capra C.M., Chappelow J., et al. Nonlinear neurobiological probability weighting functions for aversive outcomes. NeuroImage. 2008;39:2047–2057.

Berns G.S., Capra C.M., Moore S., et al. Three studies on the neuroeconomics of decision-making when payoffs are real and negative. Advances in Health Economics and Health Services Research. 2008;20:1–29.

Berns G.S., Chappelow J., Cekic M., et al. Neurobiological substrates of dread. Science. 2006;312:754–758.

Bolles R.C. Species-specific defense reactions and avoidance learning. Psychological Review. 1970;77:32–48.

Bromberg-Martin E.S., Matsumoto M., Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834.

Brown C.A., Seymour B., Boyle Y., et al. Modulation of pain ratings by expectation and uncertainty: behavioral characteristics and anticipatory neural correlates. Pain. 2008;135:240–250.

Brown J.S., Jacobs A. The role of fear in the motivation and acquisition of responses. Journal of Experimental Psychology. 1949;39:747–759.

Cabanac M. Physiological role of pleasure. Science. 1971;173:1103–1107.

Cabanac M. Money versus pain: experimental study of a conflict in humans. Journal of the Experimental Analysis of Behavior. 1986;46:37–44.

Camerer C. Behavioral game theory: experiments in strategic interaction. Princeton, NJ: Princeton University Press; 2003.

Dayan P., Niv Y., Seymour B., et al. The misbehavior of value and the discipline of the will. Neural Networks. 2006;19:1153–1160.

Dayan P., Seymour B. Values and actions in aversion. In: Glimcher P.W., Fehr E., Rangel A., et al, eds. Neuroeconomics: decision making and the brain. New York: Academic Press; 2009:175.

Dickinson A., Dearing M.F. Appetitive-aversive interactions and inhibitory processes. In: Dickinson A., Boakes R.A., eds. Mechanisms of learning and motivation: a memorial volume to Jerzy Konorski. Hillsdale, NJ: Erlbaum; 1979:203–231.

Dinsmoor J.A. Stimuli inevitably generated by behavior that avoids electric shock are inherently reinforcing. Journal of the Experimental Analysis of Behavior. 2001;75:311–333.

Eccleston C., Crombez G. Pain demands attention: a cognitive-affective model of the interruptive function of pain. Psychological Bulletin. 1999;125:356–366.

Erofeeva M.N. Contributions à l’étude des réflexes conditionnels destructifs. Compte Rendu de la Société de Biologie Paris. 1916;79:239–240.

Erofeeva M.N. Further observations upon conditioned reflexes to nocuous stimuli. Bulletin of Institute of Lesgaft. 1921;3.

Fanselow M.S. Conditional and unconditional components of post-shock freezing. Integrative Psychological & Behavioral Science. 1980;15:177–182.

Fanselow M.S. Neural organization of the defensive behavior system responsible for fear. Psychonomic Bulletin & Review. 1994;1:429–438.

Fanselow M.S., Baackes M.P. Conditioned fear–induced opiate analgesia on the formalin test: evidence for two aversive motivational systems. Learning and Motivation. 1982;13:200–221.

Feldman H., Friston K.J. Attention, uncertainty, and free-energy. Frontiers in Human Neuroscience. 2010;4:215.

Fields H. State-dependent opioid control of pain. Nature Reviews. Neuroscience. 2004;5:565–575.

Fields H.L. Pain modulation: expectation, opioid analgesia and virtual pain. Progress in Brain Research. 1999;122:245–253.

Fields H.L. A motivation-decision model of pain: the role of opioids. In: Flor H., Kalso E., Dostrovsky J.O., eds. Proceedings of the 11th World Congress on Pain. Seattle: IASP Press; 2006:449–459.

Gebhart G.F. Descending modulation of pain. Neuroscience and Biobehavioral Reviews. 2004;27:729–737.

Granot M., Weissman-Fogel I., Crispel Y., et al. Determinants of endogenous analgesia magnitude in a diffuse noxious inhibitory control (DNIC) paradigm: do conditioning stimulus painfulness, gender and personality variables matter? Pain. 2008;136:142–149.

Gray J.A. The psychology of fear and stress. London: Cambridge University Press; 1987.

Hendersen R.W., Graham J.A. Avoidance of heat by rats: effects of thermal context on rapidity of extinction. Learning and Motivation. 1979;10:351–363.

Hunt S.P., Mantyh P.W. The molecular dynamics of pain control. Nature Reviews. Neuroscience. 2001;2:83–91.

Huys Q.J.M., Dayan P. A bayesian formulation of behavioral control. Cognition. 2009;113:314–328.

Kaelbling L.P., Littman M.L., Moore A.W. Reinforcement learning: a survey. Journal of Artificial Intelligence Research. 1996;4:237–285.

Kahneman D., Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–291.

Konorski J. Integrative activity of the brain. Chicago: University of Chicago Press; 1967.

Lester L.S., Fanselow M.S. Exposure to a cat produces opioid analgesia in rats. Behavioral Neuroscience. 1985;99:756–759.

Loewenstein G. The pleasures and pains of information. Science. 2006;312:704–706.

Mackintosh N.J. Conditioning and associative learning. Oxford: Clarendon Press; 1983.

Mahadevan S. Average reward reinforcement learning: foundations, algorithms, and empirical results. Machine Learning. 1996;22:159–195.

Marr D. Vision: a computational investigation into the human representation and processing of visual information. San Francisco: W H Freeman; 1983.

Montgomery G.H., Kirsch I. Classical conditioning and the placebo effect. Pain. 1997;72:107–113.

Moutoussis M., Bentall R.P., Williams J., et al. A temporal difference account of avoidance learning. Network. 2008;19:137–160.

Mowrer O.H. Two-factor learning theory: summary and comment. Psychological Review. 1951;58:350–354.

Ossipov M.H., Dussor G.O., Porreca F. Central modulation of pain. Journal of Clinical Investigation. 2010;120:3779–3787.

Pearce J.M., Dickinson A. Pavlovian counterconditioning: changing the suppressive properties of shock by association with food. Journal of Experimental Psychology. 1975;104:170–177.

Price D.D. Psychological and neural mechanisms of the affective dimension of pain. Science. 2000;288:1769–1772.

Price D.D., Finniss D.G., Benedetti F. A comprehensive review of the placebo effect: recent advances and current thought. Annual Review of Psychology. 2008;59:565–590.

Price D.D., Milling L.S., Kirsch I., et al. An analysis of factors that contribute to the magnitude of placebo analgesia in an experimental paradigm. Pain. 1999;83:147–156.

Rescorla R., Wagner A. Variations in the effectiveness of reinforcement and nonreinforcement. In: Black A.H., Prokasy W.F., eds. Classical conditioning II: current research and theory. New York: Appleton-Century-Crofts; 1972.

Rescorla R.A. Pavlovian conditioned inhibition. Psychological Bulletin. 1969;72:77–94.

Rottenstreich Y., Hsee C.K. Money, kisses, and electric shocks: on the affective psychology of risk. Psychological Science. 2001;12:185–190.

Schwartz A. A reinforcement learning method for maximizing undiscounted rewards. In: Machine Learning: Proceedings of the Tenth International Conference, June 27–29. Amherst: University of Massachusetts; 1993:305.

Seymour B., O’Doherty J.P., Dayan P., et al. Temporal difference models describe higher-order learning in humans. Nature. 2004;429:664–667.

Seymour B., O’Doherty J.P., Koltzenburg M., et al. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nature Neuroscience. 2005;8:1234–1240.

Singer T., Seymour B., O’Doherty J.P., et al. Empathic neural responses are modulated by the perceived fairness of others. Nature. 2006;439:466–469.

Slifer K.J., Babbitt R.L., Cataldo M.D. Simulation and counterconditioning as adjuncts to pharmacotherapy for invasive pediatric procedures. Journal of Developmental and Behavioral Pediatrics. 1995;16:133–141.

Solomon R.L., Corbit J.D. An opponent-process theory of motivation: I. Temporal dynamics of affect. Psychological Review. 1974;81:119–145.

Sutton R.S., Barto A.G. A temporal-difference model of classical conditioning. In: Proceedings of the Ninth Annual Conference of the Cognitive Science Society. Seattle, WA; 1987:355–378.

Sutton R.S., Barto A.G. Reinforcement learning: an introduction. Cambridge, MA: MIT Press; 1998.

Tracey I. Getting the pain you expect: mechanisms of placebo, nocebo and reappraisal effects in humans. Nature Medicine. 2010;16:1277–1283.

Turk D.C., Meichenbaum D., Genest M. Pain and behavioral medicine: a cognitive-behavioral perspective. New York: Guilford Press; 1987.

Villemure C., Bushnell M.C. Cognitive modulation of pain: how do attention and emotion influence pain processing? Pain. 2002;95:195–199.

Vlaev I., Seymour B., Dolan R.J., et al. The price of pain and the value of suffering. Psychological Science. 2009;20:309–317.

Voudouris N.J., Peck C.L., Coleman G. Conditioned response models of placebo phenomena: further support. Pain. 1989;38:109–116.

Wiech K., Ploner M., Tracey I. Neurocognitive aspects of pain perception. Trends in Cognitive Sciences. 2008;12:306–313.

Willer J.C., Roby A., Le Bars D. Psychophysical and electrophysiological approaches to the pain-relieving effects of heterotopic nociceptive stimuli. Brain. 1984;107:1095–1112.

Woolf C.J., Ma Q. Nociceptors—noxious stimulus detectors. Neuron. 2007;55:353–364.

Yuille A., Kersten D. Vision as bayesian inference: analysis by synthesis? Trends in Cognitive Sciences. 2006;10:301–308.