Theoretical Foundations of Health Informatics

Abstract

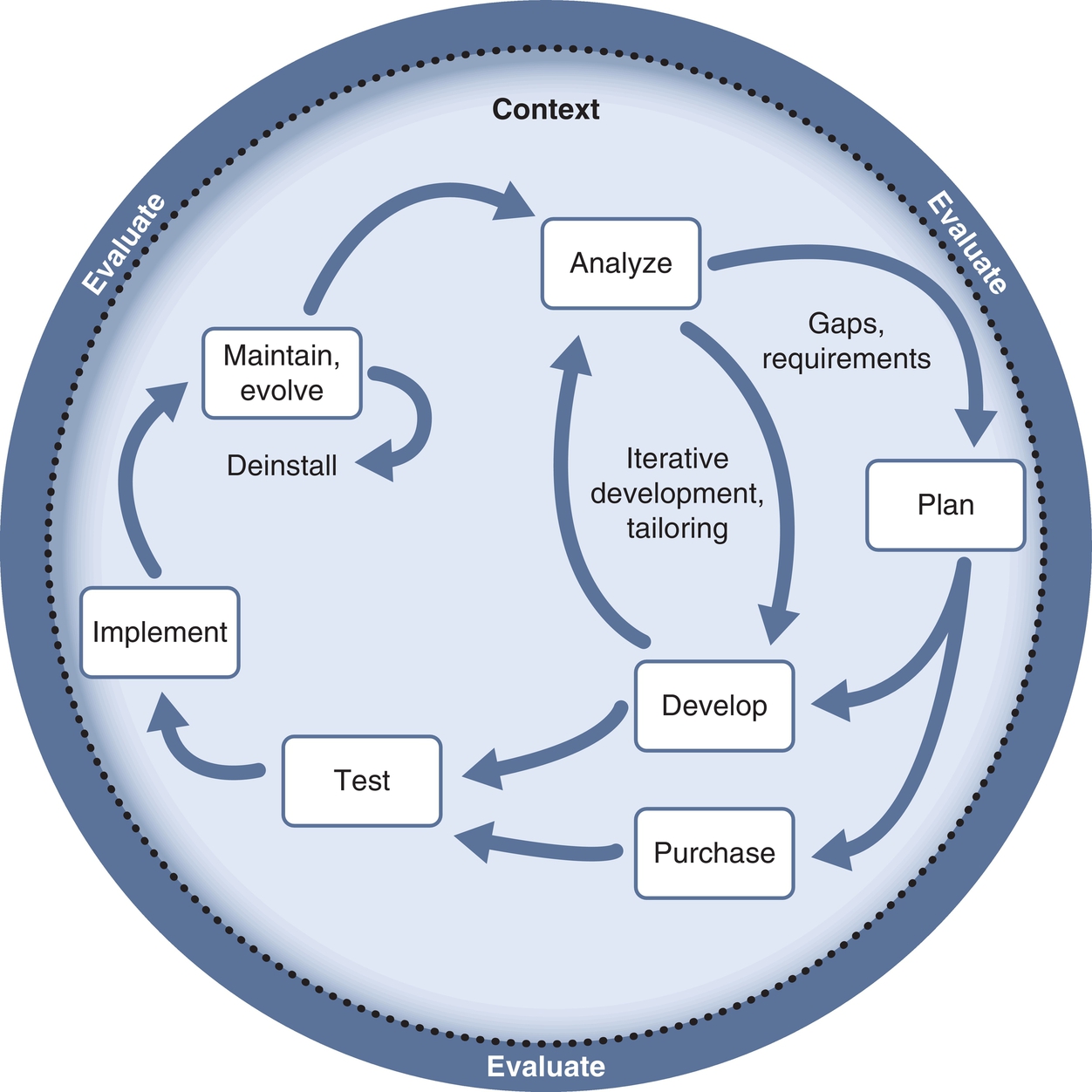

This chapter provides an overview of the technology-related literacies, theories, and models useful for guiding practice in health informatics. For both providers and patients, developing knowledge and related skills in health informatics requires first a foundation in technology-related literacies. The chapter begins by exploring these literacies and their relationship to health informatics. Next, the chapter defines and explains components of grand, middle-range, and micro theories. Whether designing effective and innovative technology-based solutions, implementing these approaches, or evaluating them, truly nothing is as useful as a good theory to guide the process. Specific theories relevant to informatics are outlined. Systems and complexity adaptation theory provide the foundation for understanding each of the theories presented. Information models from Blum, Graves, and Nelson outline the data, information, knowledge, and wisdom continuum. The next section of the chapter presents change theories including the diffusion of innovation theory. In the final section of the chapter, the Staggers and Nelson model of the systems life cycle is described, and its application is outlined.

Whether designing effective and innovative technology-based solutions, implementing these approaches, or evaluating them, truly nothing is as useful as a good theory to guide the process.

Judith Effken

At the completion of this chapter, the reader will be prepared to:

1. Explain the technology-related literacies and their relationship to health informatics.

2. Use major theories and models underpinning informatics to analyze health informatics-related phenomena.

3. Use major theories and models underpinning informatics to predict health informatics-related phenomena.

4. Use major theories and models underpinning informatics to manage health informatics-related phenomena.

Key terms

attributes

automated system

basic literacy

boundary

change theory

channel

chaos theory

closed systems

Complex Adaptive System (CAS)

complexity theory

conceptual framework

data

digital literacy

dynamic homeostasis

dynamic system

entropy

equifinality

FIT persons

fractal-type patterns

health literacy

information

information literacy

information theory

knowledge

lead part

learning theory

negentropy

noise

open systems

phenomenon

receiver

reiterative feedback loop

reverberation

sender

subsystem

supersystem

systems life cycle (SLC)

target system

theoretical model

theory

wisdom

Introduction

Health informatics is a profession. In turn, the individuals who practice this profession function as professionals. These statements may seem obvious, but they carry important implications that can be easily overlooked. The professionals who practice a profession possess a body of knowledge, as well as values and skills unique to that profession. The body of knowledge, values, and skills guide the profession as a whole, as well as the individual professional, in decisions related directly or indirectly to the services provided to society by that profession. Professional practice is not based on a set of rules that can be carefully followed. Rather, the profession, through its professional organizations, and the professional, as an individual, make decisions by applying their knowledge, values, and skills to the specific situation. The profession and the professional within that profession have a high degree of autonomy and are therefore responsible for the practice and the decisions made within that practice. This chapter provides an overview of the primary technology-related literacies, the theories, and the models useful for guiding professional practice in health informatics.

Foundational Literacies for Health Informatics

For both providers and patients, developing their knowledge and related skills in health informatics requires a foundation in the technology-related literacies.

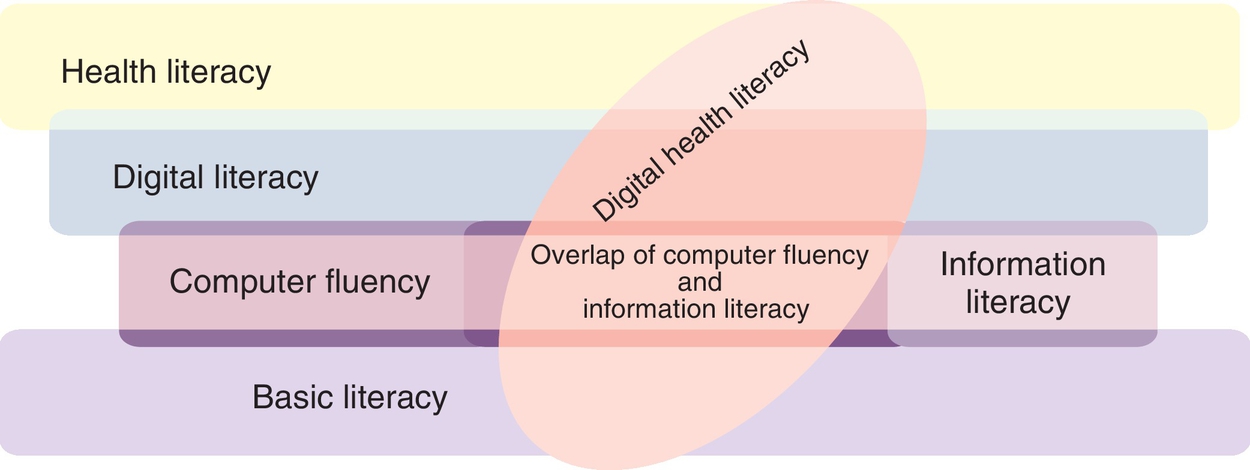

The discussion in this chapter focuses on basic literacy and technology-related literacies that relate directly to the work of patients and healthcare providers. Successful use of technology is dependent on basic literacy, computer literacy, information literacy, digital literacy, and health literacy. These specific literacies are both overlapping and interrelated as illustrated in Fig. 2.1.

Definition of Basic Literacy

As illustrated in Fig. 2.1, basic literacy is the foundational skill. Without a basic level of literacy, the other types of literacy become impossible and irrelevant. UNESCO offered one of the first definitions of literacy. “A literate person is one who can, with understanding, both read and write a short simple statement on his or her everyday life.”1, p. 12 This definition is still in frequent use today. In 2003 UNESCO proposed an operational definition that attempted to encompass several different dimensions of literacy. “Literacy is the ability to identify, understand, interpret, create, communicate, and compute, using printed and written materials associated with varying contexts. Literacy involves a continuum of learning in enabling individuals to achieve their goals, to develop their knowledge and potential, and to participate fully in their community and wider society.”1 Although other UNESCO publications have provided additional definitions of literacy, the 2003 definition continues to be the more comprehensive definition.

In the United States, the U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics conducts the National Assessment of Adult Literacy (NAAL). The NAAL definition of literacy includes both knowledge and skills. NAAL assesses three types of literacy: prose, document, and quantitative.

• Prose literacy: The knowledge and skills needed to search, comprehend, and use continuous texts such as editorials, news stories, brochures, and instructional materials.

• Document literacy: The document-related knowledge and skills needed to search, comprehend, and use noncontinuous texts in various formats such as job applications, payroll forms, transportation schedules, maps, tables, and drug or food labels.

• Quantitative literacy: The quantitative knowledge and skills required for identifying and performing computations, either alone or sequentially, using numbers embedded in printed materials such as balancing a checkbook, figuring out a tip, completing an order form, or determining the amount.2

The focus of both the national and international definitions is the ability to understand and use information in printed or written format. The assumption is that this includes the ability to understand both text and numeric information. Many people assume that if one can read and understand information in printed format, presumably that individual could read and understand the same information on a computer screen. However, computer literacy involves much more than the ability to read information from a computer screen. In fact, the term computer literacy with its limited scope is outdated.

Definition of Computer Literacy/Fluency

Over 15 years ago, the National Academy of Sciences coined the term FIT persons to describe people who are fluent with information technology. That definition remains current. FIT persons possess three types of knowledge:

• Contemporary skills are the ability to use current computer applications such as word processors, spreadsheets, or an internet search engine, using the correct tool for the right job (e.g., spreadsheets when manipulating numbers and word processors when manipulating text).

• Foundational concepts are understanding the how and why of information technology. This knowledge gives the person insight into the opportunities and limitations of social media and other information technologies.

• Intellectual capabilities are the ability to apply information technology to actual problems and challenges of everyday life. An example of this knowledge is the ability to use critical thinking when evaluating health information on a social media site.3

Definition of Information Literacy

The Association of College and Research Libraries (ACRL), a division of the American Library Association (ALA), initially defined information literacy and has led the development of information literacy standards since the 1980s. As part of this effort, they established standards of information literacy for higher education, high schools, and even elementary education. The ALA defines information literacy as a set of abilities requiring individuals to recognize when information is needed and have the ability to locate, evaluate, and effectively use the needed information.4 This definition has gained wide acceptance. However, with the extensive growth of new technologies, including different internet-based information sources and social media, there have been increasing calls to revise the definition and the established standards from over a decade ago. “Social media environments and online communities are innovative collaborative technologies that challenge the traditional definitions of information literacy…information is not a static object that is simply accessed and retrieved. It is a dynamic entity that is produced and shared collaboratively with such innovative Web 2.0 technologies as Facebook, Twitter, Delicious, Second Life, and YouTube.”5, p. 62

For example, it requires different types of knowledge and skills to evaluate information posted on Facebook, versus Wikipedia, versus an online peer-reviewed prepublished article, versus a peer-reviewed published article. Professional students need different writing skills when participating in an online dialog as opposed to preparing a term paper. There are standards that apply to text messaging, especially if the message is between healthcare colleagues or is being sent to a patient. Developing appropriate policies, procedures, and standards is the challenge facing healthcare leaders in the world of evidence-based practice, social media, and engaged patients. Recognizing the changing world of information creation, access, and use, ACRL has expanded the definition of information literacy:

Information literacy is the set of integrated abilities encompassing the reflective discovery of information, the understanding of how information is produced and valued, and the use of information in creating new knowledge and participating ethically in communities of learning.6

In line with the expanded definition, the ACRL developed a Framework for Information Literacy for Higher Education (Framework).6 The Framework grows out of their belief that information literacy as an educational reform movement will realize its potential only through a richer, more complex set of core ideas. While the Framework does not replace the standards previously developed, it does provide a less prescriptive approach for incorporating information literacy, knowledge, and skills into education, including the education of health professionals. Table 2.1 lists and gives a brief description of the six frames in the Framework. As the changing definition of information literacy shows, the internet, related apps, and other technologies are changing the concept of information literacy. Arising from this change is the concept of digital literacy.

Table 2.1

Framework for Information Literacy for Higher Education6

| Frames | Description |
| --- | --- |
| Authority Is Constructed and Contextual | Information resources reflect their creators’ expertise and credibility, and are evaluated based on the information need and the context in which the information will be used. |
| Information Creation as a Process | The iterative processes of researching, creating, revising, and disseminating information vary, and the resulting product reflects these differences. |
| Information Has Value | Information possesses several dimensions of value, including as a commodity, as a means of education, as a means to influence, and as a means of negotiating and understanding the world. |
| Research as Inquiry | Research is iterative and depends upon asking increasingly complex or new questions whose answers in turn develop additional questions or lines of inquiry in any field. |
| Scholarship as Conversation | Communities of scholars, researchers, or professionals engage in sustained discourse with new insights and discoveries occurring over time as a result of varied perspectives and interpretations. |
| Searching as Strategic Exploration | Searching for information is often nonlinear and iterative, requiring the evaluation of a range of information sources and the mental flexibility to pursue alternate avenues as new understanding develops. |

Definition of Digital Literacy

The term digital literacy first appeared in the literature in the 1990s. To date, however, there is no generally accepted definition. Even so, a number of national and international Digital Literacy Centers support the development of digital literacy. Some examples include:

• Syracuse University’s Center for Digital Literacy at http://digital-literacy.syr.edu/

• University of British Columbia, the Digital Literacy Centre at http://dlc.lled.educ.ubc.ca/

• Microsoft Digital Literacy Curriculum at www.microsoft.com/en-us/DigitalLiteracy

• National Telecommunications and Information Administration Literacy Center at www.digitalliteracy.gov/

There are also a number of books published about digital literacy. Three recognized definitions are published. The earliest of these is provided in a White Paper commissioned by the Aspen Institute Communications and Society Program and the John S. and James L. Knight Foundation, “Digital and media literacy are defined as life skills that are necessary for participation in our media-saturated, information-rich society.” These skills include:

• Making responsible choices and accessing information by locating and sharing materials and comprehending information and ideas.

• Analyzing messages in a variety of forms by identifying the author, purpose, and point of view, and evaluating the quality and credibility of the content.

• Creating content in a variety of forms, making use of language, images, sound, and new digital tools and technologies.

• Reflecting on one’s own conduct and communication behavior by applying social responsibility and ethical principles.

• Taking social action by working individually and collaboratively to share knowledge and solve problems in the family, workplace, and community, and by participating as a member of a community.7

The second, and most recognized, definition of digital literacy, provided by the ALA’s Digital Literacy Task Force, describes it as “the ability to use information and communication technologies to find, understand, evaluate, create, and communicate digital information, an ability that requires both cognitive and technical skills.”8, p. 2

A digitally literate person:

• Possesses a variety of skills—cognitive and technical—required to find, understand, evaluate, create, and communicate digital information in a wide variety of formats

• Is able to use diverse technologies appropriately and effectively to search for and retrieve information, interpret search results, and judge the quality of the information retrieved

• Understands the relationships among technology, lifelong learning, personal privacy, and appropriate stewardship of information

• Uses these skills and the appropriate technologies to communicate and collaborate with peers, colleagues, family, and, on occasion, the public

• Uses these skills to participate actively in society and contribute to a vibrant, informed, and engaged community8

The third definition, published by Springer in a book focused on social media for nurses, defined digital literacy as including:

• Competency with digital devices of all types including cameras, eReaders, smartphones, computers, tablets, and video games boards. This does not mean that one can pick up a new device and use that device without an orientation. Rather, one can use trial and error, as well as a manufacturer’s manual, to determine how to use a device effectively.

• The technical skills to operate these devices, as well as the conceptual knowledge to understand their functionality.

• The ability to creatively and critically use these devices to access, manipulate, evaluate, and apply data, information, knowledge, and wisdom in activities of daily living.

• The ability to apply basic emotional intelligence in collaborating and communicating with others.

• The ethical values and sense of community responsibility to use digital devices for the enjoyment and benefit of society.9

These three definitions have much in common, and together they demonstrate that digital literacy is a more comprehensive concept than computer or information literacy. The definition goes beyond the comfortable use of technology demonstrated by the digital native. Digital literacy is about understanding the implications of digital technology and the impact it is having, and will have, on every aspect of our lives. “The truth is, though most people think kids these days get the digital world, we are actually breeding a generation of digital illiterates. How? We are not teaching them how to really understand and use the tools. We are only teaching them how to click buttons. We need to be teaching our students, at all levels, not just how to click and poke, but how to communicate, and interact, and build relationships in a connected world.”10

Definition of Health Literacy

Although health literacy is concerned with the ability to access, evaluate, and apply information to health-related decisions, this term also lacks a consistent, generally accepted definition. In 2011, a published systematic review of the literature in Medline, PubMed, and Web of Science identified 17 definitions of health literacy and 12 conceptual models. The most frequently cited definitions of health literacy were from the American Medical Association, the Institute of Medicine, and World Health Organization (WHO).11 Current definitions from the Institute of Medicine and WHO include:

• The Institute of Medicine uses the definition of health literacy developed by Ratzan and Parker and cited in Healthy People 2010. Health literacy is “the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.”12

• The WHO has defined health literacy as “the cognitive and social skills that determine the motivation and ability of individuals to gain access to, understand, and use information in ways which promote and maintain good health.” Health literacy means more than being able to read pamphlets and successfully make appointments. By improving people’s access to health information and their capacity to use it effectively, health literacy is critical to empowerment. It is the degree to which people are able to access, understand, appraise, and communicate information to engage with the demands of different health contexts to promote and maintain good health across the life-course.13

The focus in each of these definitions is on an individual’s skill in obtaining and using the health information and services necessary to make appropriate health decisions. These definitions do not fully address the networked world of the internet. In recognition of this deficiency, Norman and Skinner introduced the concept of eHealth literacy as “the ability to seek, find, understand, and appraise health information from electronic sources and apply the knowledge gained to addressing or solving a health problem.”14, e9 This definition acknowledges the need for computer fluency and the use of information skills to obtain an effective level of health literacy. However, this definition is not especially sensitive to the impact of social media. For example, it does not address the individual as a patient/consumer collaboratively creating health-related information that others could use in making health-related decisions. There is increasing evidence that patients bring to the dialog a unique knowledge base for addressing a number of health-related problems.15 Creating a comprehensive definition and model for assessing health literacy levels that includes the social media literacy skills needed for today’s communication processes remains a challenge for health professionals. A key resource in meeting the health literacy needs within the clinical setting and the community can be found at http://nnlm.gov/outreach/consumer/hlthlit.html.

While each of the technology-related communication literacies presented here focuses on a different aspect of literacy and has a different definition, they all overlap and are interrelated. Fig. 2.1 demonstrates those interrelationships. In this figure, basic literacy is depicted as foundational to all other literacies. Digital literacy includes computer and information literacy as well as other social media–related knowledge and skills that were not initially included in the definitions of computer and information literacy. For example, playing online games is not usually considered part of information or computer literacy, but it clearly requires digital literacy. Health literacy now requires both digital literacy and a basic knowledge of health unrelated to automation. All of the literacies require the ability to evaluate online information and, especially, to pay attention to information generated on social media sites.

Understanding these technology-related communication literacies and integrating them into current policies and procedures is the challenge all healthcare providers and informaticians face.

Understanding Theories and Models

A theory explains the process by which certain phenomena occur.16 Theories vary in scope depending on the extent and complexity of the phenomenon of interest. Grand theories are wide in scope and attempt to explain a complex phenomenon within the human experience. For example, a learning theory that attempted to explain all aspects of human learning would be considered a grand theory. Because of the complexity of the theory and the number of variables interacting in dependent, independent, and interdependent ways, these theories are difficult to test. However, grand theories can be foundational within a discipline or subdiscipline. For example, learning and teaching theories are foundational theories within the discipline of education.

Middle-range theories are used to explain specific defined phenomena. They begin with an observation of the specific phenomena. For example, one might note how people react to change. However, why and how does this phenomenon occur? A theory focused on the phenomenon of change would explain the process that occurs when people experience change and predict when and how they will respond in adjusting to the change.

Micro theories are limited in scope and specific to a situation. For example, one might describe the introduction of a new electronic health record (EHR) within a large ambulatory practice and even measure the variables within that situation that could be influencing the acceptance and use of the new system. In the past, micro theories, with their limited scope, have rarely been used to test theory. However, this is changing with the development of Web 2.0 and the application of meta-analysis techniques to automated natural language processing.

The development of a theory occurs in a recursive process moving on a continuum from the initial observation of the phenomenon to the development of a theory to explain that phenomenon. The process of moving on this continuum can be divided into several stages, including the following:

1. A specific phenomenon is observed and noted.

2. An idea is proposed to explain the development of the phenomenon.

3. Key concepts used to explain the phenomenon are identified, and the processes by which the concepts interact are described.

4. A conceptual framework is developed to clarify the concepts and their relationships and interactions. Conceptual frameworks can be used to propose theories and generate research questions. The conceptual framework can also be used to develop a conceptual model. A conceptual model is a visual representation of the concepts and their relationships.

5. A theory and related hypothesis are proposed and tested.

6. Evidence accumulates, and the theory is modified, rejected, or replaced, or it gains general acceptance.

Many of the models used to guide the practice of health informatics and discussed in this chapter can be considered theoretical models or frameworks. Because theoretical frameworks explain a combination of related theories and concepts, they can be used to guide practice and generate additional research questions. With this definition, one can argue that the concept of a theoretical framework can be conceived as a bridge between a middle-range theory and a grand theory. A theoretical model is a visual representation of a theoretical framework. Many of the models in healthcare and health informatics use a combination of theories in explaining phenomena of interest within these disciplines and fit the definition of a theoretical framework.

Even though the terms theory and concept are consistently defined and used in the literature, the terms conceptual and theoretical framework, as well as the terms conceptual and theoretical models, are not. No set of consistent criteria can be applied to determine whether a model is conceptual or theoretical. As a result, researchers and informaticians will often publish models without clarifying whether the proposed model is conceptual or theoretical. In turn, one reference may refer to a model as a conceptual framework whereas another refers to the same model as a theory or a theoretical framework.

Theories and Models Underlying Health Informatics

Health informatics is an applied field of study incorporating theories from information science; computer science; the science for the specific discipline, such as medicine, nursing, or pharmacy; and the wide range of sciences used in healthcare delivery. Therefore health professionals and health informatics specialists draw on a wide range of theories to guide their practice. This chapter focuses on selected theories that are of major importance to health informatics and those that are most directly applicable. These theories are vital to understanding and managing the challenges and decisions faced by health professionals and informatics specialists. In analyzing the selected theories, the reader will discover that understanding these theories presents certain challenges. Some of the theories overlap, different theories are used to explain the same phenomena, and sometimes, different theories have the same name. The theories of information are an example of each of these challenges.

The one theory that underlies all of the theories used in health informatics is systems theory. Therefore this is the first theory discussed in this chapter.

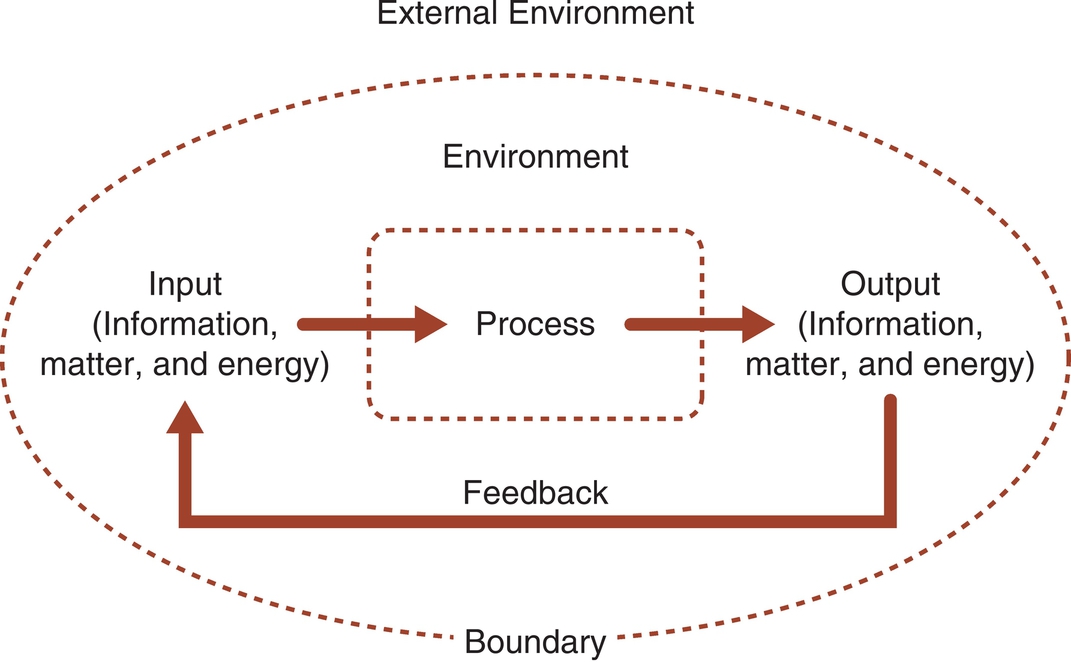

Systems Theory

A system is a set of related interacting parts enclosed in a boundary.17 Examples of systems include computer systems, school systems, the healthcare system, and a person. Systems may be living or nonliving.18 Systems may be either open or closed. Closed systems are enclosed within an impermeable boundary and do not interact with the environment. Open systems are enclosed within a semipermeable boundary and do interact with the environment. This chapter focuses on open systems, which can be used to understand technology and the people who are interacting with the technology. Fig. 2.2 demonstrates an open system interacting with the environment. Open systems take input (information, matter, and energy) from the environment, process the input, and then return output to the environment. The output then becomes feedback to the system. Concepts from systems theory can be applied in understanding the way people work with computers in a healthcare organization. These concepts can also be used to analyze individual elements such as software or the total picture of what happens when systems interact.
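The input, process, output, and feedback cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `OpenSystem` class, its `target` value, and the 0.5 feedback weight are hypothetical and do not come from systems theory itself.

```python
from dataclasses import dataclass, field


@dataclass
class OpenSystem:
    """Toy open system (hypothetical): takes input from the environment,
    processes it, returns output, and uses that output as feedback."""
    target: float                  # desired output level, assumed for illustration
    adjustment: float = 0.0        # internal state that feedback changes
    history: list = field(default_factory=list)

    def process(self, env_input: float) -> float:
        # Processing: combine the environmental input with the current adjustment.
        return env_input + self.adjustment

    def cycle(self, env_input: float) -> float:
        output = self.process(env_input)
        self.history.append(output)
        # Feedback: the gap between output and target shapes the next cycle.
        self.adjustment += 0.5 * (self.target - output)
        return output


system = OpenSystem(target=10.0)
outputs = [system.cycle(8.0) for _ in range(4)]
print(outputs)  # [8.0, 9.0, 9.5, 9.75]
```

With a constant input of 8.0, each cycle's output moves closer to the target because the previous output is fed back into the system. A closed system, by contrast, would have no `env_input` at all.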

A common expression in computer science is “garbage in, garbage out,” or GIGO. GIGO refers to the input-output process. The counter-concept implied by this expression is that quality input is required to achieve quality output. Although GIGO usually is used to refer to computer systems, it can apply to any open system. An example of this concept can be seen when informed active participants provide input for the selection of a healthcare information system. In this example, garbage in can result in garbage out, or quality input can support the potential for quality output. Not only is quality input required for quality output, but the system must also have effective procedures for processing those data. Systems theory provides a framework for looking at the inputs to a system, analyzing how the system processes those inputs, and measuring and evaluating the outputs from the system.
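A small Python sketch can make the GIGO idea concrete for a computer system. It assumes a hypothetical list of patient weight entries typed in as text. The function names and the 0.2 to 500 kg plausibility range are illustrative assumptions, not clinical standards.

```python
def validate_weight_kg(value):
    """Return the weight as a float if the entry is plausible, else None.
    The 0.2-500 kg range is an assumed illustrative limit."""
    try:
        weight = float(value)
    except (TypeError, ValueError):
        return None
    return weight if 0.2 <= weight <= 500 else None


def mean_weight(entries):
    """Process step: average only the entries that pass validation."""
    valid = [w for w in map(validate_weight_kg, entries) if w is not None]
    rejected = len(entries) - len(valid)
    mean = sum(valid) / len(valid) if valid else None
    return mean, rejected


raw_entries = ["70", "82.5", "abc", "-4", "7000"]

# Garbage in: averaging every numeric entry, plausible or not.
naive_mean = (70 + 82.5 - 4 + 7000) / 4

# Quality input: implausible or unreadable entries are rejected first.
clean_mean, rejected = mean_weight(raw_entries)
print(naive_mean, clean_mean, rejected)  # 1787.125 76.25 3
```

The validation step addresses input quality, and `mean_weight` stands in for the processing procedure. As the paragraph above notes, both must be sound for the output to be trustworthy.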

Characteristics of Systems

Open systems have three types of characteristics: purpose, structure, and functions. The purpose is the reason for the system’s existence. The purpose of an institution or program is often outlined in the mission statement. Such statements can include more than one purpose. For example, many healthcare institutions have three purposes: (1) provide patient care, (2) provide educational programs for students in the health professions, and (3) conduct health-related research. Computer systems are often referred to or classified by their purpose(s). The purpose of a radiology system is to support the radiology department. An EHR can have several different purposes. One of the purposes is to maintain a census that can be used to bill for patient care.

One of the first steps in selecting a computer system for use in a healthcare organization is to identify the purposes of that system. Having a succinct purpose answers the question “Why select a system?” Many times, there is a tendency to minimize this step with the assumption that everyone already agrees on the purposes of the system. When a system has several different purposes, it is common for individuals to focus on the purposes most directly related to their area of responsibility. Taking the time to specify and prioritize the purposes helps to ensure that the representatives from clinical, administration, and technology agree on the reasons for selecting a system and understand the full scope of the project.

Functions, in contrast, focus on the question “How will the system achieve its purpose?” Functions are sometimes mistaken for purpose. However, it is important to clarify why a system is needed, and then identify what functions the system will carry out to achieve that purpose. For example, a hospital may maintain patient census data including admissions, discharges, and transfers through a computerized registration system. Each time a department accesses the patient’s online record, the name and other identifying information are transmitted from a master file, ensuring consistency throughout the institution. When selecting a computer system, the functions for that system are carefully identified and defined in writing. These are listed as functional specifications. Specifications identify each function and describe how that function will be performed.

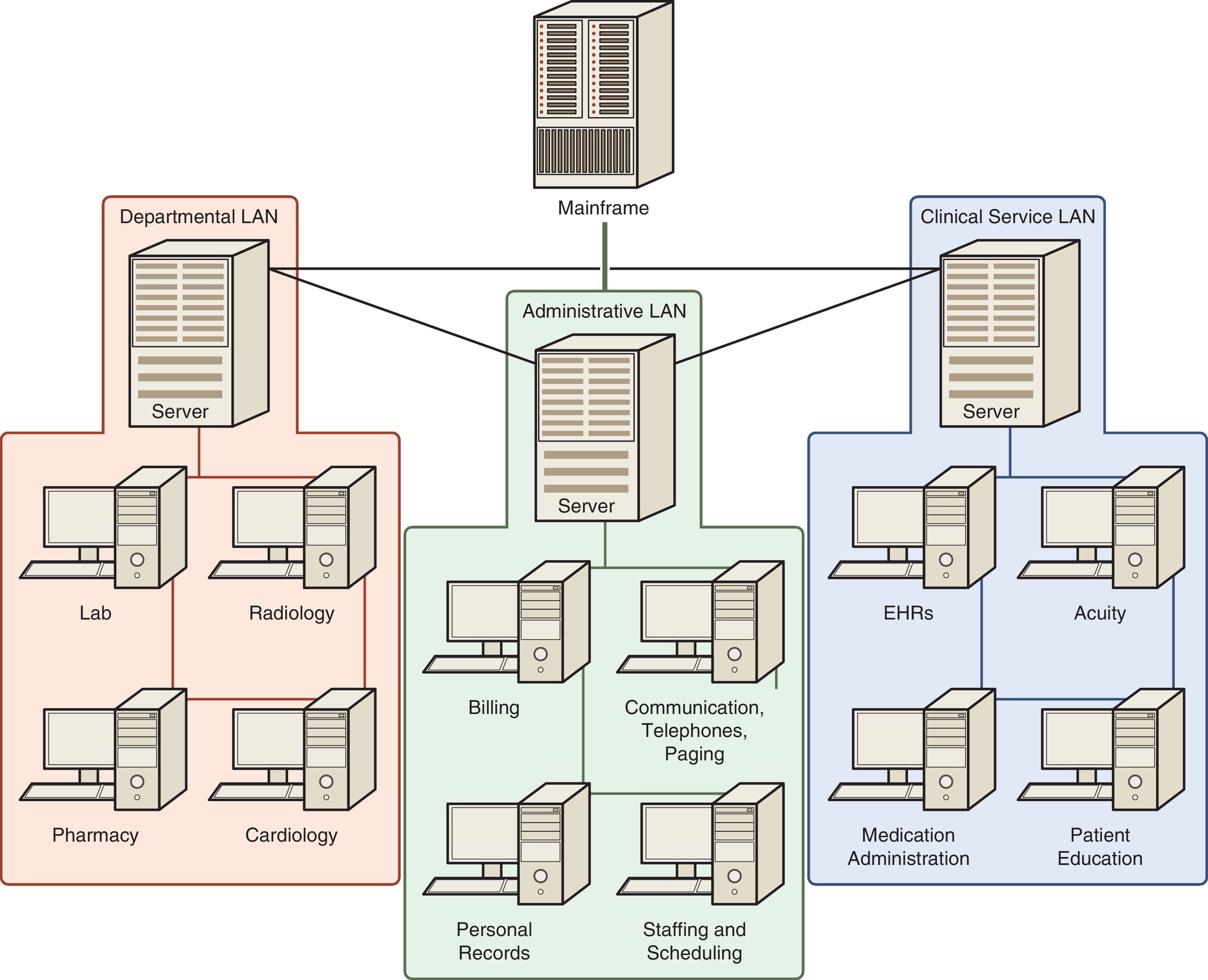

Systems are structured to perform their functions. Two different structural models operating concurrently can be used to conceptualize healthcare technical infrastructures. These are hierarchical and web. The hierarchical model is an older architectural model, and the terms, such as mainframe, that are used to describe the model reflect that reality. The location and type of hardware used within a system often follow a hierarchical model; however, as computer systems are becoming more integrated, information flow increasingly follows a web model. The hierarchical model can be used to structure the distribution of the computer processing loads at the same time as the web model is used to structure communication of health-related data throughout the institution. The hierarchical model is demonstrated in Fig. 2.3. Each individual computer is part of a local area network (LAN). The LANs join together to form a wide area network (WAN) that is connected to the mainframe computers. In Fig. 2.3 the mainframe is the lead computer or lead part. This structure demonstrates a centralized approach to managing the computer structure.

When analyzing the hierarchical model, the term system may refer to any level of the structure. In Fig. 2.3, an individual computer may be referred to as a system or the whole diagram may be considered a system. Three terms are used to indicate the level of reference. These are subsystem, target system, and supersystem. A subsystem is any system within the target system. For example, if the target system is a LAN, each computer is a subsystem. The supersystem is the overall structure in which the target system exists. If the target system is a LAN, then Fig. 2.3 represents a supersystem.
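
The three levels of reference can be made concrete with a small tree structure. The following Python sketch is illustrative only: the class name `System`, its methods, and the node names (including the "institutional network" root standing in for the whole of Fig. 2.3) are assumptions introduced for this example and are not taken from the figure.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class System:
    """One node in a hierarchical system model (hypothetical helper class)."""
    name: str
    parts: List["System"] = field(default_factory=list)
    # The parent link is excluded from repr/compare to avoid cyclic recursion.
    parent: Optional["System"] = field(default=None, repr=False, compare=False)

    def add(self, part: "System") -> "System":
        """Attach a part to this system and return the part."""
        part.parent = self
        self.parts.append(part)
        return part

    def subsystems(self) -> List[str]:
        """Names of every system nested inside this one, at any depth."""
        names: List[str] = []
        for part in self.parts:
            names.append(part.name)
            names.extend(part.subsystems())
        return names

    def supersystem(self) -> "System":
        """The overall structure in which this system exists."""
        node = self
        while node.parent is not None:
            node = node.parent
        return node


# Hypothetical structure loosely following the description of the hierarchy.
network = System("institutional network")
wan = network.add(System("WAN"))
lan = wan.add(System("pharmacy LAN"))
lan.add(System("workstation 1"))
lan.add(System("workstation 2"))

target = lan  # the level of reference chosen for analysis
print(target.subsystems())        # ['workstation 1', 'workstation 2']
print(target.supersystem().name)  # institutional network
```

Changing which node is assigned to `target` changes what counts as a subsystem and what counts as the supersystem, which is the point of naming the level of reference explicitly.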

The second model used to analyze the structure of a system is the web model. The interrelationships between the different LANs function like a web. Laboratory data may be shared with the pharmacy and the clinical units concurrently, just as the data collected by nursing, such as weight and height, may be shared with each department needing the data. The internet is an example of a complex system that demonstrates both hierarchical and web structures interacting as a cohesive unit. As these examples demonstrate, a system includes structural elements from both the web model and the hierarchical model. Complex and complicated systems discussed later in this chapter can include a number of supersystems organized using both hierarchical and web structures.

Boundary, attributes, and environment are three concepts used to characterize structure. The boundary of a system forms the demarcation between the target system and the environment of the system. Input flows into the system by moving across the boundary and output flows into the environment across this boundary. For example, with a web model, information flows across the systems. Thinking in terms of boundaries can help to distinguish information flowing into a system from information being processed within a system. Fig. 2.3 can be used to demonstrate how these concepts can establish the boundaries of a project. Each computer in the diagram represents a target system for a specific project. For example, a healthcare institution could be planning for a new pharmacy information system. The new pharmacy system becomes the target system. However, as the model demonstrates, the pharmacy system interacts with other systems within the total system. The task group selecting the new pharmacy system will need to identify the functional specifications needed to automate the pharmacy and the functional specifications needed for the pharmacy system to interact with the other systems in the environment. Clearly, specifying the target system and the other systems in the environment that must interact or interface with the target will assist in defining the scope of the project. By defining the scope of the project, it becomes possible to focus on the task while planning for the integration of the pharmacy system with other systems in the institution. A key example is planning for the impact of a new pharmacy system in terms of the activities of nurses who are administering medications.

In planning for healthcare information systems, attributes of the system are identified. Attributes are the properties of the parts or components of the system. When discussing computer hardware, these attributes are usually referred to as specifications. An example of a list of patient-related attributes can be seen on an intake or patient assessment form in a healthcare setting. Attributes and the expression of those attributes play a major role in the development of databases. Field names are a list of the attributes of interest for a specific system. The datum in each cell is the individual system’s expression of that attribute. A record lists the attributes for each individual system. The record can also be seen as a subsystem of the total database system.
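
The relationship between attributes, field names, and records can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical `PatientRecord` with four made-up fields; real intake forms and database schemas will differ.

```python
from dataclasses import dataclass, fields


@dataclass
class PatientRecord:
    """Field names are the attributes of interest (all hypothetical)."""
    patient_id: str
    name: str
    weight_kg: float
    height_cm: float


# One record: this individual's expression of each attribute.
record = PatientRecord("P001", "Example Patient", 70.0, 175.0)

# The field names are shared by every record in the database.
field_names = [f.name for f in fields(PatientRecord)]
print(field_names)

# Each datum is the value this record holds for one attribute.
for f in fields(record):
    print(f.name, "=", getattr(record, f.name))
```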

Systems and the Change Process

Both living and nonliving systems are constantly in a process of change. Six concepts are helpful in understanding the change process. These are dynamic homeostasis, equifinality, entropy, negentropy, specialization, and reverberation.

Dynamic homeostasis refers to the processes used by a system to maintain a steady state or balance. This same goal of maintaining a steady state can affect how clinical settings respond when changes are made or a new system is implemented.

Equifinality is the tendency of open systems to reach a characteristic final state from different initial conditions and in different ways. For example, two different clinics may be scheduled for the implementation of a new EHR. One unit may be using paper records and the other unit may have an outdated computer system. A year or two later, both clinical units may be at the same point, comfortably using the new system. However, the process for reaching this point may have been very different.

Entropy is the tendency of all systems to break down into their simplest parts. As it breaks down, the system becomes increasingly disorganized or random. Entropy is demonstrated in the tendency of all systems to wear out. Even with maintenance, a healthcare information system will reach a point where it must be replaced. Healthcare information that is transferred across many different systems in many different formats can also demonstrate entropy, thereby causing confusion and conflict between different entities within the healthcare system.

Negentropy is the opposite of entropy. This is the tendency of living systems to grow and become more complex. This is demonstrated in the growth and development of an infant, as well as in the increased size and complexity of today’s healthcare system. With the increased growth and complexity of the healthcare system, there has been an increase in the size and complexity of healthcare information systems. As systems grow and become more complex, they divide into subsystems and then sub-subsystems. This is the process of differentiation and specialization. Note how the human body begins as a single cell and then differentiates into different body systems, each with specialized purposes, structures, and functions. This same process occurs with healthcare. If the mainframe in Fig. 2.3 were to stop functioning, the impact would be much more significant than if an individual computer in one of the LANs were to stop functioning.

Change within any part of the system will be reflected across the total system. This is referred to as reverberation. Reverberation is reflected in the intended and unintended consequences of system change. When planning for a new healthcare system, the team will attempt to identify the intended consequences or expected benefits to be achieved. Although it is often impossible to identify a comprehensive list of unintended consequences, it is important for the team to consider the reality of unintended consequences. The potential for unintended consequences should be discussed during the planning stage; however, these will be more evident during the testing stage that precedes the implementation or “go-live.” Many times unintended consequences are not considered until after go-live, when they become obvious. For example, e-mail may be successfully introduced to improve communication in an organization. However, an unintended consequence can be the increased workload from irrelevant e-mail messages. Unintended consequences are not always negative. They can be either positive or negative.

Unintended consequences are just one example of how difficult it is to describe, explain, and predict events and maybe even control outcomes in complex systems such as a healthcare institution. Starting in the 1950s, chaos theory, followed by complexity theory, began to develop and was seen as an approach for understanding complex systems. Both chaos and complexity theory involve the study of dynamic nonlinear systems that change with time and demonstrate a variety of cause-and-effect relationships between inputs and outputs because of reiterative feedback loops. “The quantitative study of these systems is chaos theory. Complexity theory is the qualitative aspect drawing upon insights and metaphors that are derived from chaos theory.”19 Box 2.1 outlines the characteristics of chaotic systems. The characteristics of chaotic systems provide a foundation for understanding how complex systems adapt over time. Such systems are termed complex adaptive systems (CAS). There are now several examples of how the concept of CAS is being used to understand phenomena of interest in the healthcare literature.23-30

Complex Adaptive Systems

A Complex Adaptive System (CAS) is defined as an “entity consisting of many diverse and autonomous parts which are interrelated, interdependent, linked through many interconnections, and behave as a unified whole in learning from experience and in adjusting (not just reacting) to changes in the environment. Each individual agent of a CAS is itself a CAS.”31 This definition is best understood by analyzing the characteristics of a CAS.32–35

Characteristics of a CAS

Change occurs through nonlinear interdependencies. A CAS demonstrates interrelationships, interaction, and interconnectivity of the units within the system and between the system and its environment. A change in any one part of the system will influence all other related parts but not in any uniform manner. For example, a small inexpensive change on a computer screen may make that screen easier to understand and in turn prevent a number of very dangerous mistakes. Conversely, a major revision of an organization website may have minimal impact on how the user of that site sees the organization or uses the site.

Control is distributed. Within a CAS, there is no single person or group who has full control of the organization. It is the interrelationship and interdependencies that produce a level of coherence that makes it possible for the organization to function and even grow. Thus the overall behavior cannot be predicted by reviewing the activities of one section or part of the organization. Hindsight can be used to explain past events, but foresight is limited in predicting outcomes. Therefore solutions based on past practices may prove ineffective when presented with a new version of a previous problem.

Learning and behavior change are constant. Individuals and groups within a CAS are intelligent agents who learn from each other, probe their environment, and test out ideas. They are constantly learning and changing their ideas as well as their behavior.

Units or parts of a CAS are self-organizing. Self-organizing refers to the ability of a CAS to arrange workflow and patterns of interaction spontaneously in a purposeful (nonrandom) manner, without the help of an external agency. Individuals and groups within a CAS are unique. Individuals and groups within the CAS will have different and overlapping needs, desires, knowledge, and personality traits. Order results from the feedback inherent in the interactions between individuals and groups as each moves through their own activities. Behavior patterns that were not designed into the system will emerge. As these individuals and groups interact, the emerging patterns of interactions will include some degree of both cooperation and conflict. Therefore these emergent behaviors may range from valuable innovations to severe conflict issues.

The patterns of interaction are for the most part informal yet well established for the individuals functioning in the group. The patterns of behavior will have a major influence on the organization of workflow, but are often not obvious to someone new in the group. In addition, such behaviors are often not documented; for example, in a policy or procedure. However, these emergent behaviors can be key to understanding how a department or group actually functions. In addition, emergent behaviors are constantly changing as both the external and internal environment of the unit changes.

The adaptive process within complex organizations demonstrates infinitely complex, unique emerging patterns. For example, the go-live process within or across complex organizations follows a similar but unique process each time a new system is installed, even when the same software is installed in a similar type of clinical unit in a new site. Sites and units all have different workflows and organizational cultures to which processes need to be tailored.

CASs exist in co-evolution with the environment. The concept of co-evolution refers to the process whereby a CAS is continuously adapting as it responds to its environment and, simultaneously, the environment is constantly adapting as it responds to the changing CAS. They are evolving together in a co-evolutionary interactive process.

The adaptive response of a complex system to environmental change and the resulting environmental change from that adaptation includes an element of unpredictability. The overall pattern of adaptation that emerges from the adaptive changes within each unit is unique to each organization and impossible to predict completely. For example, computerized provider order entry (CPOE) changed the workflow for unit secretaries, nurses, physicians, and hospital departments. Each of these units within the system is creating adaptations at the same time as it is adjusting to changes occurring in the other units. A significant number of these changes (whether they were seen as positive or negative by individuals in the institution) are experienced as unintended consequences.

A CAS includes both order and disorder. A certain level of order is necessary for a CAS to be effective and efficient. However, the more firmly order is imposed, the less flexibility the CAS has to adjust to change. Systems that are too tightly controlled can become fragile. A CAS needs to maintain some slack and redundancy to buffer against environmental changes that are not anticipated. This balance between creating a unified whole and an institution with enough flexibility to adapt to the changing internal and external environment can be a challenge when integrated healthcare institutions attempt to merge hospitals, long-term care facilities, clinics, and other settings into one healthcare system. The larger and more diffuse the organization, the more likely it is to include a degree of order and disorder and, therefore, more potential to survive in some form. For example, individual hospitals, clinics, and third-party payers may go out of business, but the healthcare system as a societal institution will continue to exist in ever-evolving ways.

The characteristics of a CAS interact together in a synergistic manner, ensuring that a certain level of uncertainty is inherent in the management of a CAS. The natural disposition of decision makers in such an environment is to attempt to reduce the uncertainty. However, imposing order and tightly regulating a CAS does not let the system take advantage of the inherent ability to adapt, and, in the end, it creates a CAS that is less resilient and less robust. Resilience is the ability to recover from a failure, and robustness is the ability to resist failure. The more robust a system is, the better able it is to anticipate and avoid a failure. The more resilient a system is, the better able it is to recover quickly from a failure.

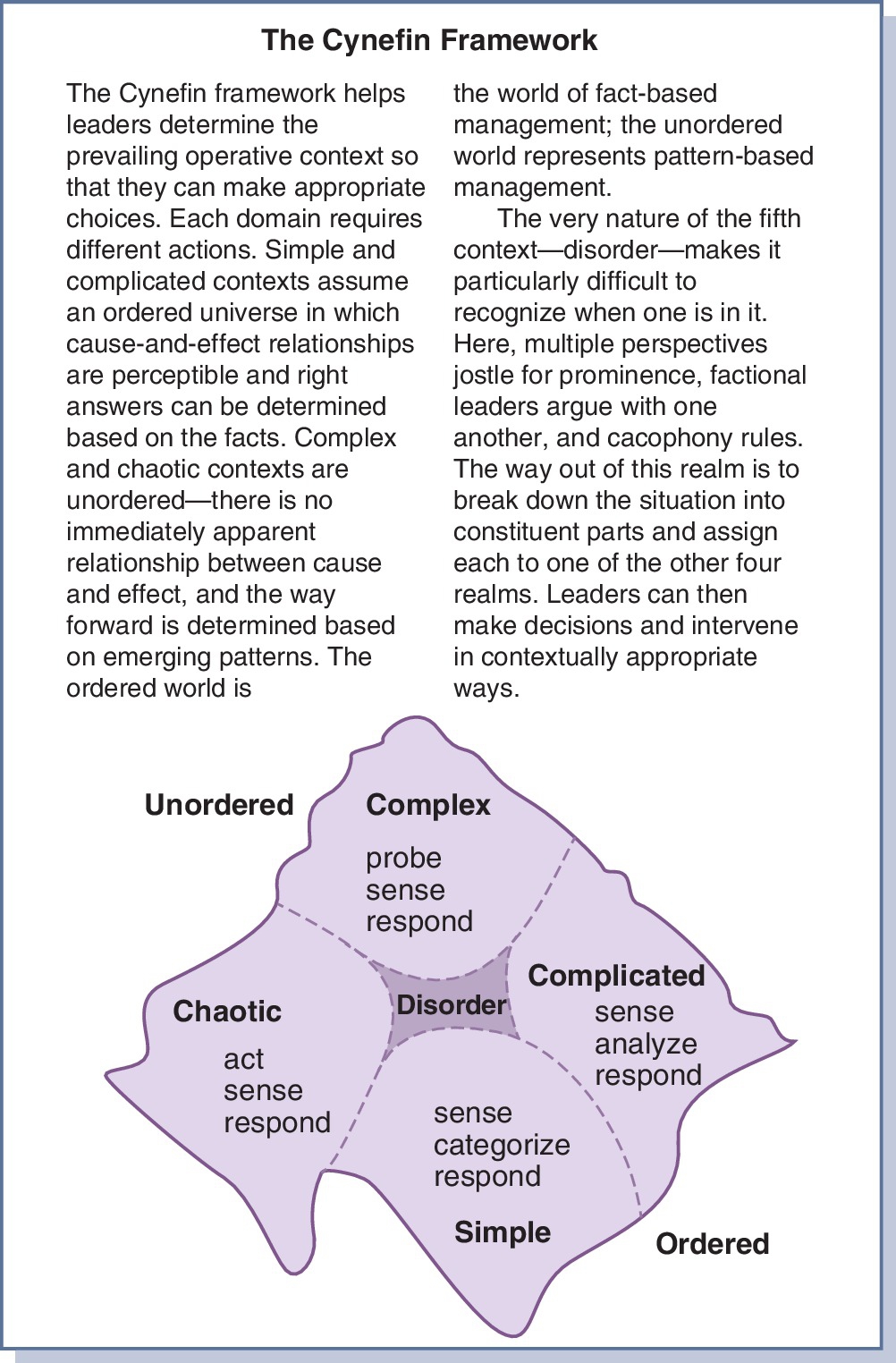

That being said, frameworks for understanding how to manage CASs such as organizations and communities continue to evolve. One such framework is the Cynefin Framework.34-39

Cynefin Framework for Managing Uncertainty in CAS

A CAS, such as a healthcare organization, will exist with a constantly fluctuating balance between order and disorder. This can be thought of as a continuum with any one system or part of the system moving between order and disorder. The Cynefin Framework was developed to guide organizational management on this continuum, and it provides a helpful perspective for managing informatics-related projects within a healthcare institution. The Framework consists of five domains, as depicted in Fig. 2.4.36 The first four domains (simple, complicated, complex, and chaotic) require the leader or manager to determine the level of order or disorder and adjust their management style to that reality. The fifth domain exists when the manager/leader is unclear which of the other four domains is predominant in a specific situation. As a situation moves toward the ordered end on the continuum, one of two domains will predominate. Those are the simple and complicated domains.

Simple Domain

A simple domain is highly ordered. Cause and effect are evident to the people involved in the setting. Processes are carried out using known procedures that are effective and efficient. This process involves (1) sensing or collecting the pertinent data, (2) categorizing the data, and (3) applying the appropriate response. An example would be administering medications on a clinical unit. The procedures are carefully designed to ensure the five rights of the patient are effectively and efficiently met. There are rules about what should be done if a patient refuses a medication, is off the clinical unit, or some other usual issue occurs. With this type of situation, everyone is expected to follow the procedure carefully. The concept of best practices is most appropriate in these types of situations. Often these types of processes or at least parts of their procedures are excellent candidates for computerization. Because these situations are highly ordered, they have little flexibility. A change in the environment of the unit can lead to chaos because there is no set procedure to be followed. For example, the procedure for administering medication may require that only registered nurses (RNs) open the medication cart. However, what happens in a disaster if no RNs are available? While micromanagement is contraindicated, the leadership in these situations should stay in the information loop, watching for early signs of change and avoiding a move to a chaotic domain.

Complicated Domain

In this domain, the relationship between cause and effect is known but it is not obvious to everyone in the domain. As a result, knowledge and expertise are very important for managing complicated problems. These types of problems are managed by collecting and analyzing data before responding. The expert will know what data are pertinent, as well as different approaches for analyzing these data and different options for responding. There is often more than one right answer. Different experts may solve the problem in different ways. In addition, experts can identify an effective solution but not be able to articulate every detail of the cause-and-effect relationship. For example, a health professional or a health informatics specialist may be planning the educational program to accompany a major system implementation in a healthcare setting. In such a situation, there is not one perfect answer but rather several excellent options that can be used to prepare users for the new work environment. In these cases, good practice is a more appropriate approach than best practice because good practice is more flexible and able to tap into the expertise of the expert. Forcing experts to use the “one right approach” usually results in strong resistance.

Because experts are the dominant players, there are problems in the complicated domain that a manager must consider. Innovative approaches offered by nonexperts can be easily dismissed. This can be a significant problem in hierarchical organizations such as healthcare. A group of experts with different approaches can disagree and become bogged down in “analysis paralysis.” In addition, all experts are limited in scope of expertise, and experts are not always able to realize when they have drifted out of their scope of expertise. As a situation moves toward the disordered end on the continuum, one of two domains will predominate. These are the complex and the chaotic domains.

Complex Domain

In the complex domain, the cause-and-effect relationship is not known. If the cause-and-effect relationship is not known, which data are pertinent is also unknown. Decisions must be based on incomplete data in an environment of unknown unknowns. The difference between a complicated situation and a complex situation can be demonstrated in analyzing the difference between a computer and a person. A computer expert can identify all of the parts of a computer and how they interact. The expert can predict with high accuracy how a computer will react in different situations. For example, a bad hard drive can prevent a computer from booting, but the response of a person to different situations cannot be predicted with the same accuracy. Because healthcare organizations are CASs and always in flux, many important situations and decisions are complex. In this domain, problems are not solved by imposing order. Rather than collecting large amounts of data, better solutions can emerge if the manager probes by trying small, fail-safe solutions. For example, a large medical center recently purchased a small rural hospital with little to no computerization in the clinical units. Imposing the information systems installed in the medical center could produce significant resistance and probably fail. Success is more likely if small pilot tests are conducted and then used to pull key leaders and users in when analyzing the results. In the analysis, patterns and potential solutions will begin to emerge. Obviously, this process is time consuming and a bit messy but not nearly as messy as failure. However, there are leadership tools to utilize in a complex domain as illustrated in Box 2.2.

Chaotic Domain

In the chaotic domain, there is no discernable relationship between cause and effect; therefore it is impossible to determine and manage the underlying problem. In healthcare, the term crash is often used for this situation. For example, a patient is crashing or a computer crashed. In this situation, the goal is to first stabilize the situation and then assess potential causes. CPR will be tried before there is any attempt to determine why the patient would have experienced a cardiac arrest. In a crisis, the manager takes charge and gives orders. In our previous example dealing with medication administration, the health professional or health informatician may decide to unlock the medication cart for all patients and determine if family or other staff may be able to give at least some of the medications. In a chaotic situation, there is no time to seek input or second-guess decisions. However, once the situation begins to stabilize, this can be a unique opportunity for innovation. Organizations and individuals are more open to change after a crisis: note, for example, the number of patients who will change their lifestyle after they have experienced a myocardial infarction. In Fig. 2.4, the fifth domain is the center domain, existing between disorder and order.

Disorder Domain

Snowden uses the term disorder to name this domain. Each of the other domains is focused on the type of situation or state of the organization. In the fifth domain, it is not the organization that is in disorder but rather the leader. The leader does not know which domain is predominant. If the leaders are aware of their confusion, they can begin by using the Framework to assess the situation. However, managers do not always realize what they do not know. If they do not realize the need to diagnose the situation, there is a tendency to continue using their preferred management style.

While CASs are a unique type of system, all systems, whether they are complex or simple, change, and in the process interact and/or co-evolve with the environment. The basic framework of this interaction is shown in Fig. 2.2. Input to the system consists of information, matter, and energy. These inputs are then processed, and the result is output. Understanding this process as it applies to informatics involves an understanding of information theory.

Information Theory

The term information has several different meanings. An example of this can be seen in Box 2.3, taken from Merriam-Webster’s Collegiate Dictionary.40 Just as the term information has more than one meaning, information theory refers to more than one theory.41 In this chapter, two theoretical models of information theory are examined: the Shannon-Weaver information-communication model and the Nelson data-information-knowledge-wisdom model (DIKW) that evolved from Blum’s and Graves’s initial work.

Shannon-Weaver Information-Communication Model

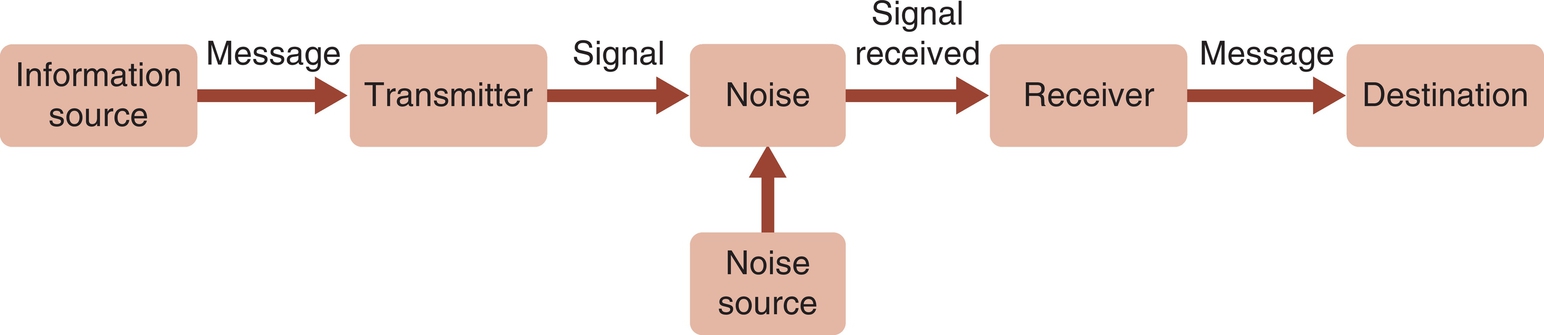

Information theory was formally established in 1948 with the publication of the landmark paper “A Mathematical Theory of Communication” by Claude Shannon.42 The concepts in this model are presented in Fig. 2.5. The sender is the originator of the message or the information source. The transmitter is the encoder that converts the content of the message to a code. The code can be letters, words, music, symbols, or a computer code. The channel is the medium used to carry the message, such as a cable. Examples of channels include satellites, cell towers, glass optical fibers, coaxial cables, ultraviolet light, radio waves, telephone lines, and paper. Each channel has its own physical limitations in terms of the size of the message that can be carried. Noise is anything that is not part of the message but occupies space on the channel and is transmitted with the message. Examples of noise include static on a telephone line and background sounds in a room. The decoder converts the message to a format that can be understood by the receiver. When listening to a phone call, the telephone is a decoder. It converts the signal back into sound waves that are understood as words by the person listening. The person listening to the words is the destination.

Shannon, one of the authors of the Shannon-Weaver Information-Communication theory, was a telephone engineer. He used the concept of entropy to explain and measure the technical amount of information in a message. The amount of information in a message is measured by the extent to which the message decreases entropy. The unit of measurement is a bit. A bit is represented by a 0 (zero) or a 1 (one). Computer codes are built on this concept. For example, how many bits are needed to code the letters of the alphabet? What other symbols are used in communication and must be included when developing a code?
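
The questions above can be explored with a short calculation. The following Python sketch is illustrative only; the function names are assumptions made for this example. It computes how many bits a fixed-length code needs for a given number of symbols, and the average information per symbol in a message using Shannon's entropy formula, H = -Σ p log2 p, where p is the relative frequency of each symbol.

```python
import math
from collections import Counter


def bits_needed(symbol_count: int) -> int:
    """Smallest fixed code length (in bits) that gives each symbol its own code."""
    return max(1, math.ceil(math.log2(symbol_count)))


def entropy_bits_per_symbol(message: str) -> float:
    """Average information per symbol, based on symbol frequencies in the message."""
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


print(bits_needed(26))           # 5 bits cover the 26 letters (2**5 = 32 codes)
print(bits_needed(26 + 26 + 10)) # 6 bits once lowercase letters and digits are added

# Two equally likely symbols carry 1 bit each; four carry 2 bits each.
print(entropy_bits_per_symbol("ABAB"))  # 1.0
print(entropy_bits_per_symbol("ABCD"))  # 2.0
```

Adding punctuation, spaces, and control characters increases the symbol count further, which is one reason practical character codes use 7 or more bits per character.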

Warren Weaver, from the Sloan-Kettering Institute for Cancer Research, provided the interpretation for understanding the semantic meaning of a message.42 He used Shannon’s work to explain the interpersonal aspects of communication. For example, if the speaker is a physician who uses medical terms that are not known to the receiver (the patient), there is a communication problem caused by the method used to code the message. However, if the patient cannot hear well, he may not hear all of the words in the message. In this case the communication problem is caused by the patient’s ear.

The communication-information model provides an excellent framework for analyzing the effectiveness and efficiency of information transfer and communication. For example, a healthcare provider may use CPOE to enter orders. Is the order-entry screen designed to capture and code all of the key elements for each order? Are all aspects of the message coded in a way that can be transmitted and decoded by the receiving computer? Does the message that is received by the receiving department include all of the key elements in the message sent? Does the screen designed at the receiver’s end make it possible for the message to be decoded or understood by the receiver?

These questions demonstrate three levels of communication that can be used in analyzing communication problems.43 The first level of communication is the technical level. Do the system hardware and software function effectively and efficiently? The second level of communication is the semantic level. Does the message convey meaning? Does the receiver understand the message that was sent by the sender? The third level of communication is the effectiveness level. Does the message produce the intended result at the receiver’s end? For example, did the provider order one medication but the patient received a different medication with a similar spelling? Some of these questions require a more in-depth look at how healthcare information is produced and used. Bruce Blum’s definition of information provides a framework for this more in-depth analysis.

Blum Model

Bruce L. Blum developed his definition of information from an analysis of the accomplishments in medical computing.44 In his analysis, he identified three types of healthcare computing applications. Blum grouped applications according to the objects they processed. The three types of objects he identified are data, information, and knowledge. Blum defined data as uninterpreted elements such as a person’s name, weight, or age. Information was defined as a collection of data that have been processed and then displayed as information, such as weight over time. Knowledge results when data and information are identified and the relationships between the data and information are formalized. A knowledge base is more than the sum of the data and information pieces in that knowledge base. A knowledge base includes the interrelationships between the data and information within the knowledge base. A textbook can be seen as containing knowledge.44 These concepts are well accepted across information science and are not limited to healthcare and health informatics.45
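
Blum's three types of objects can be illustrated with the weight example used above. The following Python sketch is a simplified illustration, not Blum's own formulation: the readings are invented, and the threshold in the rule is a hypothetical value chosen for the example rather than clinical guidance.

```python
from datetime import date

# Data: uninterpreted elements (invented readings of date and weight in kg).
weights = [
    (date(2024, 1, 1), 80.0),
    (date(2024, 1, 2), 80.4),
    (date(2024, 1, 3), 82.5),
]


def weight_change(readings):
    """Information: data processed to show weight change over time."""
    ordered = sorted(readings)  # sort by date
    return round(ordered[-1][1] - ordered[0][1], 1)


# Knowledge: a formalized relationship between data and information.
# The threshold is a hypothetical value for illustration only.
GAIN_THRESHOLD_KG = 2.0


def flag_rapid_gain(readings) -> bool:
    """Apply the formalized rule to the processed information."""
    return weight_change(readings) >= GAIN_THRESHOLD_KG


print(weight_change(weights))    # 2.5
print(flag_rapid_gain(weights))  # True
```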

Graves Model

Judy Graves and Sheila Corcoran, in their classic article “The Study of Nursing Informatics,” used the Blum concepts of data, information, and knowledge to explain the study of nursing informatics.46 They incorporated Barbara A. Carper’s four types of knowledge: empirical, ethical, personal, and aesthetic. Each of these represents a way of knowing and a structure for organizing knowledge. This article is considered the foundation for most definitions of nursing informatics.

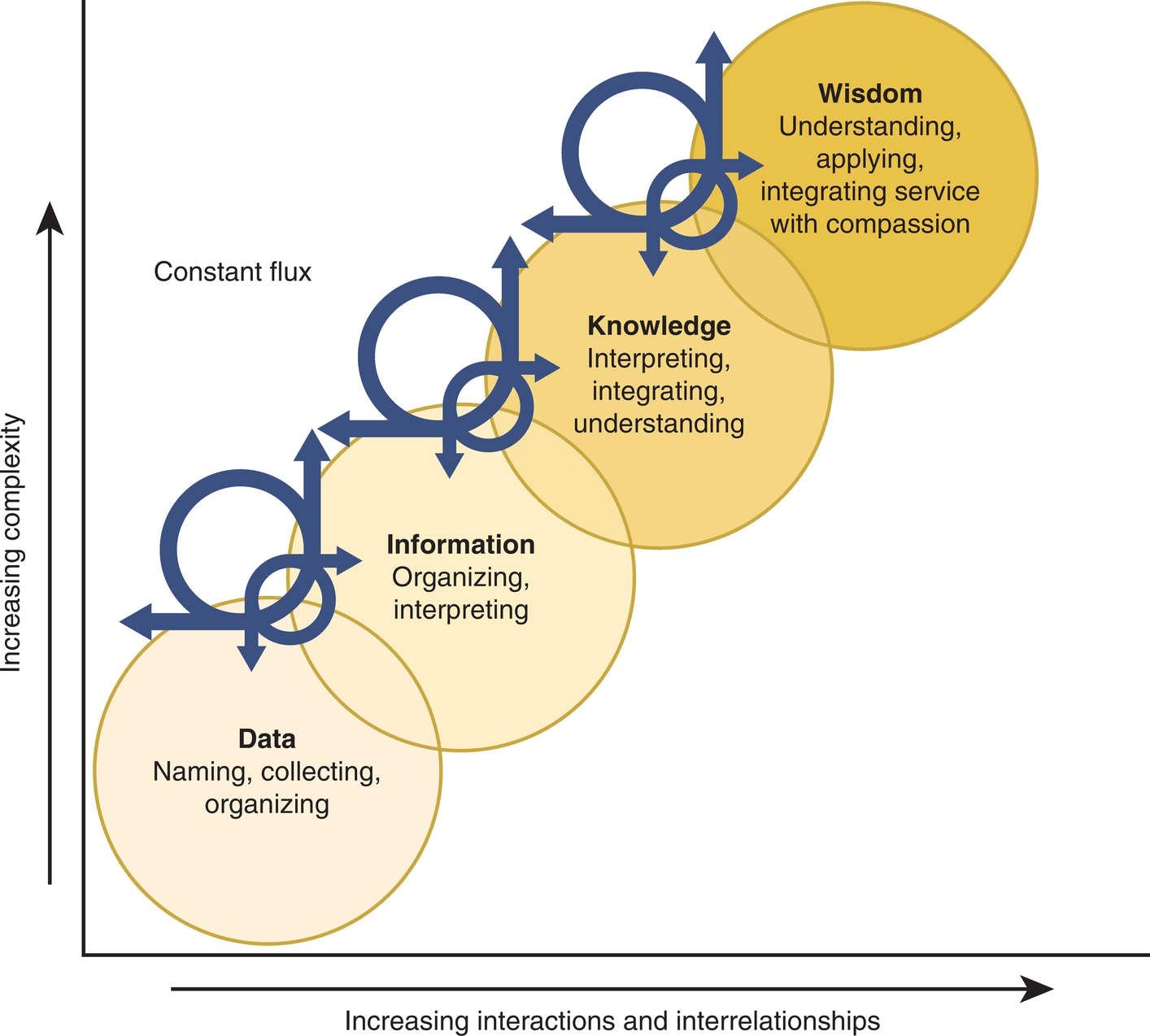

Nelson Model

In 1989 Nelson extended the Blum and Graves and Corcoran data-to-knowledge continuum by including wisdom.47 This initial publication provided only brief definitions of the concepts, but later publications included a model.48 Fig. 2.6 demonstrates the most current version of this model. Within this model, wisdom is defined as the appropriate use of knowledge in managing or solving human problems. It is knowing when and how to use knowledge in managing patient needs or problems. Effectively using wisdom in managing a patient problem requires a combination of values, experience, and knowledge. The concepts of data, information, knowledge, and wisdom overlap and interrelate as demonstrated by the overlapping circles and arrows in the model. Of note, what is information in one context may be data in another. For example, a nursing student may view the liver function tests within the blood work results reported this morning and see only data or a group of numbers related to some strange-looking tests. However, the staff nurse looking at the same results will see the information and in turn see the implications for the patient’s plan of care. The greater the knowledge base used to interpret data, the more information disclosed from that data, and in turn the more data points that may be generated. Data processed to become information can create new data items. For example, if one collects the blood sugar levels for a diabetic patient over time, patterns begin to emerge. These patterns become new data items to be interpreted. One nurse may notice and describe the pattern, but a nurse with more knowledge related to diabetes may identify a Somogyi-type pattern, with important implications for the patient’s treatment protocols. The concept of constant flux is illustrated by the curved arrows moving between the concepts. 
As one moves up the continuum, there are increasing interactions and interrelationships within and between the circles, producing increased complexity of the elements within each circle. Therefore the concept of wisdom is much more complex than the concept of data.
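
The idea that processed data can create new data items can be sketched in a few lines of Python. This is only an illustration: the readings, the time-of-day labels, and the function name `mean_by_time_of_day` are hypothetical and do not come from the model itself. The averages the function returns are new data items, and recognizing what they mean for a particular patient still depends on the knowledge of the nurse reading them.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical blood sugar readings (mg/dL), each tagged with a time of day.
readings = [
    ("morning", 180), ("evening", 110),
    ("morning", 192), ("evening", 118),
    ("morning", 177), ("evening", 105),
]

def mean_by_time_of_day(readings):
    """Group raw readings by label and return the average for each label."""
    grouped = defaultdict(list)
    for label, value in readings:
        grouped[label].append(value)
    # Each average is a derived data item that did not exist in the raw data.
    return {label: mean(values) for label, values in grouped.items()}

pattern = mean_by_time_of_day(readings)
```

In this sketch the program only describes the pattern. Interpreting it, for example as a Somogyi-type pattern, is left to the clinician.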

The introduction of the concept of wisdom gained professional acceptance in 2008 when the American Nurses Association included this concept and the related model in Nursing Informatics: Scope and Standards of Practice.49 In this document the model is used to frame the scope of practice for nursing informatics. This change meant that the scope of practice for nursing informatics was no longer fully defined by the functionality of a computer and the types of applications processed by a computer. Rather the scope of practice is now defined by the goals of nursing and nurse-computer interactions in achieving these goals.

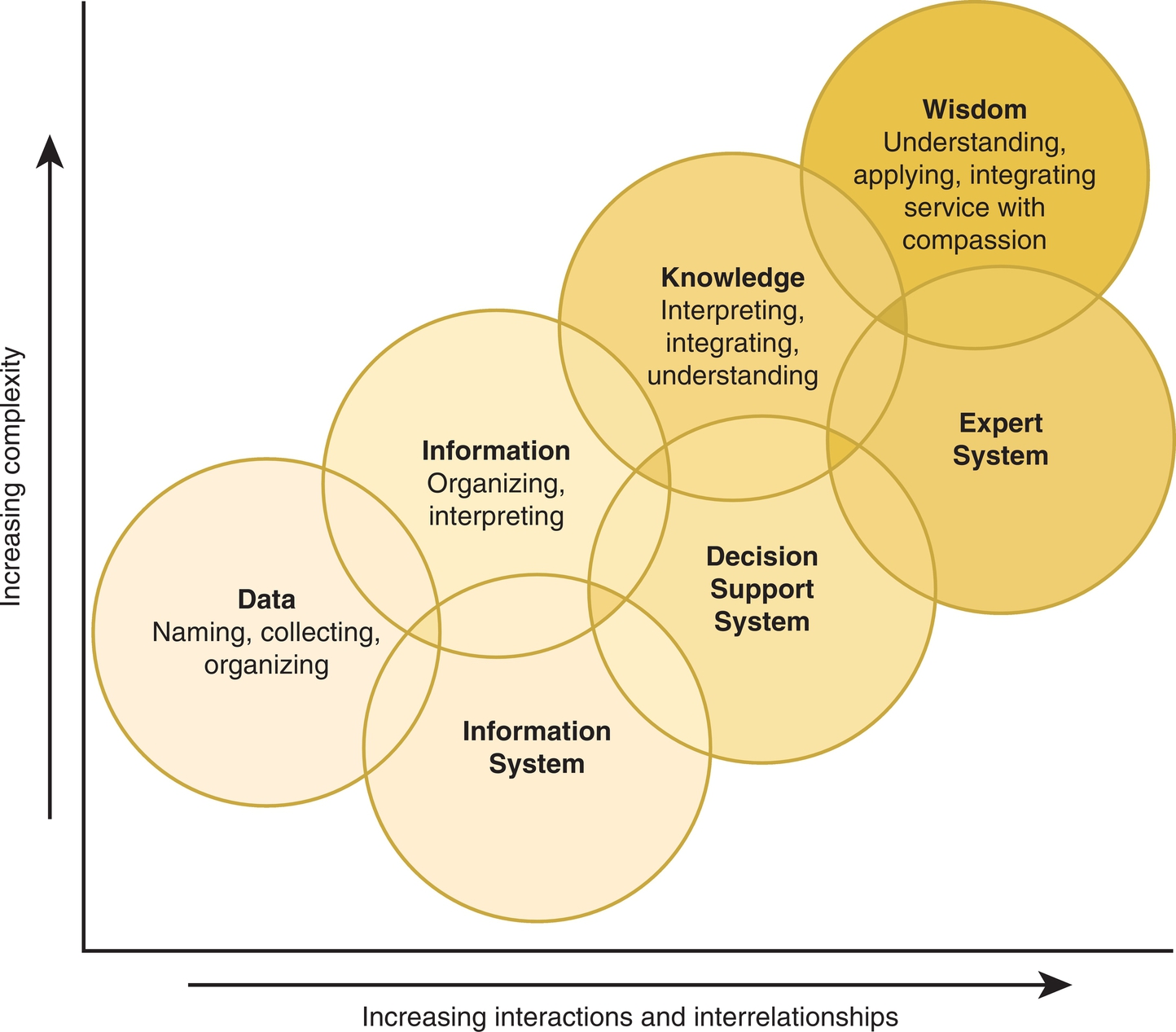

Using the concepts of data, information, knowledge, and wisdom makes it possible to classify the different levels of computing. An information system such as a pharmacy information system takes in data and information, processes the data and information, and outputs information. A computerized decision support system uses knowledge and a set of rules for using that knowledge to interpret data and information and output suggested or actual recommendations. A healthcare application may recommend additional diagnostic tests based on a pattern of abnormal test results, such as increasing creatinine levels. With a decision support system, the user decides whether the suggestion or recommendations will be implemented. A decision support system relies on the knowledge and wisdom of the user.
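
A decision support rule of the kind described above might be sketched as follows. The function names, the three-result window, and the sample values are assumptions made for illustration and are not clinical guidance. The point of the sketch is that the program returns a suggestion and takes no action on its own.

```python
from typing import List, Optional

def is_steadily_rising(values: List[float], min_points: int = 3) -> bool:
    """Return True when each of the last `min_points` results exceeds the one before it."""
    if len(values) < min_points:
        return False
    recent = values[-min_points:]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))

def suggest_follow_up(creatinine_mg_dl: List[float]) -> Optional[str]:
    """Return a suggestion for the user to consider, or None when no pattern is found."""
    if is_steadily_rising(creatinine_mg_dl):
        return ("Creatinine is rising across recent results; "
                "consider additional renal function tests.")
    return None

# Hypothetical series of creatinine results for one patient.
suggestion = suggest_follow_up([0.9, 1.1, 1.4, 1.8])
```

Whether the suggested tests are ordered remains the user's decision, which is what distinguishes this level of computing from an expert system.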

A computerized expert system goes one step further. An expert system implements the decision that has been programmed into the computer system without the intervention of the user. For example, an automated system that monitors a patient’s overall status and then uses a set of predetermined parameters to trigger and implement the decision to call a code is an expert system. In this example, the data were converted to information, a knowledge base was used to interpret that information, and the decision to implement an action based on this process has been automated. The relationships among the concepts of data, information, knowledge, and wisdom, as well as information, decision support, and expert computer systems, are demonstrated in Fig. 2.7.
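
The code-calling example can be sketched in Python to show where an expert system differs from decision support. Everything named here is hypothetical: the `VitalSigns` fields, the `meets_code_criteria` rule, and especially the threshold values, which are placeholders and not clinical parameters. What matters is that the program itself carries out the action once the predetermined parameters are met.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class VitalSigns:
    heart_rate: int        # beats per minute
    systolic_bp: int       # mm Hg
    respiratory_rate: int  # breaths per minute

def meets_code_criteria(v: VitalSigns) -> bool:
    """Apply predetermined parameters (placeholder thresholds, for illustration only)."""
    return v.heart_rate < 30 or v.systolic_bp < 60 or v.respiratory_rate < 6

def monitor(v: VitalSigns, call_code: Callable[[], None]) -> bool:
    """Implement the decision without user intervention when the criteria are met."""
    if meets_code_criteria(v):
        call_code()  # the system acts; no user confirms the decision
        return True
    return False

# A list stands in for the paging system so the sketch can run on its own.
log: List[str] = []
triggered = monitor(VitalSigns(25, 80, 12), lambda: log.append("code called"))
```

Replacing the call to `call_code()` with a returned message for the user to accept or reject would turn the same logic back into decision support.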

In the model, the three types of electronic systems overlap, reflecting how such systems are used in actual practice. For example, an electronic system recommending that a medication order be changed to decrease costs might be consistently implemented with no further thought by the provider entering the orders. In this example, an application designed as a decision support system is actually being used as an expert system by the provider. Because there are limits to the amount of data and information the human mind can remember and process, each practitioner walks a tightrope between depending on the computer to assist in the management of a situation with a high cognitive load and delegating the decision to the computer application. This reality presents interesting and important practical and research questions concerning the effective and appropriate use of computerized decision support systems in the provision of healthcare.

Effective computerized systems are dependent on the quality of data, information, and knowledge processed. Box 2.4 lists the attributes of data, information, and knowledge. These attributes provide a framework for developing evaluation forms that measure the quality of data, information, and knowledge. For example, healthcare data are presented as text, numbers, or a combination of text and numbers. Good-quality health data provide a complete description of the item being presented with accurate measurements. Using these attributes, an evaluation form can be developed for judging the quality (including completeness) of a completed patient assessment form or for judging the quality of a healthcare website. The same process can be used with the attributes of knowledge. Think about the books or online references one might access in developing a treatment plan for a patient or consider a knowledge base that is built into a decision support system. What would result if the knowledge was incomplete or inaccurate or did not apply to the patient’s specific problem or if suggested approaches were out of date and no longer considered effective in treating the patient’s problem? What if the knowledge was not presented in the appropriate format for use?

Although this section has focused on computer systems, humans are also open systems that take in data, information, knowledge, and wisdom. Learning theory provides a framework for understanding how patients and healthcare providers, as open learning systems, take in, process, and output data, information, knowledge, and wisdom.

Learning Theory

Learning theory attempts to determine how people learn and to identify the factors that influence that process. Learning has been defined in a variety of ways, but for the purpose of this text, learning is defined as an increase in knowledge, a change in attitude or values, or the development of new skills. Several different learning theories have been developed. Each theory reflects a different paradigm and approach to understanding and explaining the learning process. Box 2.5 provides links to three sites that demonstrate the wide range of theories and the various approaches to classifying these theories.

These databases of different learning theories cannot be used to create a comprehensive theory of the learning process. The theories are not mutually exclusive. They often overlap and interrelate, yet they cannot be combined to create a total theory of learning.

Learning theories are important to the practice of health informatics for a variety of reasons. Health professionals and health informatics specialists plan and implement educational programs to teach healthcare providers to use new and updated applications and systems. A well-designed and well-implemented educational program can result in competent healthcare providers when it is able to satisfy all learning styles. Understanding how people learn is especially helpful in developing computer-related procedures that are safe and effective for healthcare providers and in building decision support systems that provide effective and appropriate support for healthcare providers who deal with a multitude of complex problems. Adult learning theories are often used when planning for a system implementation, whereas constructivist theories are often used in planning for a distance-education program. Specific learning theories as they relate to specific topics are presented in other chapters. For a listing of the learning theories included in this book, please check the index under the term learning theories. We will discuss four types of learning theories used to demonstrate the major approaches to learning theory.

Cognitive and Constructionist Learning Theories

Learning theories that are included under the heading of information processing theories divide learning into four steps:

1. How the learner takes input into the system

2. How that input is processed and constructed

3. What type of learned behaviors are exhibited as output

4. How feedback to the system is used to change or correct behavior

Data are taken into the system through the senses. First, if there is a sensory organ defect, such as hearing loss, data can be distorted or excluded. Second, data are moving across the semipermeable boundary of the system. There are limits to how much data can enter at one time. For example, if one is listening to a person who is talking too fast, some of the words will be missed. In addition, the learner will screen out data that are considered irrelevant or meaningless, such as background noise. These limits become more restrictive when the learner is under stress. Individuals who are anxious about learning to use a computer program can take in less data at one time and therefore learn less.

If new information is presented using several senses simultaneously, it is more likely to be taken in. For example, if a new concept is presented using slides that are explained by a speaker, the combination of both verbal and visual input makes it more likely that the learner will grasp the concept. As data enter the system, the learner structures and interprets these data, producing meaningful information. Previous learning has a major effect on how the data are structured and interpreted. For example, if a healthcare provider is already comfortable using Windows and is now learning a new software program based on Windows, he or she will be able to structure and interpret the new information quickly using previously developed cognitive structures. This is one reason why consistency in screen development can be very important for patient safety. In contrast, if the new information cannot be related to previous learning, the learner will need to build interpreting structures as he or she takes in the new information. For example, if a person is reading new information, she may stop at the end of each sentence and think about the content in that sentence. She is building interpretive cognitive structures while importing the data. If this same learner is hearing the new information at the same time she is taking notes, she may have difficulty capturing the content she is trying to record. The more time that is needed to interpret and structure data, the slower the learner will be able to import data. Assessment of the learner’s previous knowledge can help the instructor to identify these potential problems. Relating new information to previously learned information will help the learner to develop interpreting structures and in turn learn the information more effectively. Describing learning as a process of building interpretive cognitive structures while learning new content is consistent with constructionist theory explained below. 
By combining what is known with the new information the learner goes beyond the information that was provided in the learning experience. Using organizing structures such as outlines, providing examples, and explaining how new information relates to previously learned concepts encourages the learner to develop chunks and increases retention. An “aha moment” is the sudden understanding that occurs when the new information fits with previous learning and the student gains a new insight on the discussion.

Social constructivism focuses on how group interaction can be used to build new knowledge.50 Group discussion in which learners share their perceptions and understanding through peer learning encourages new insight, as well as retention of the newly constructed knowledge. There is limited research on how groups such as interprofessional health teams actually learn via the group construction process. Two studies at the Massachusetts Institute of Technology and Carnegie Mellon University found converging evidence that groups participating in problem-solving activities demonstrate a general collective intelligence factor that explains a group’s performance on a wide variety of tasks. “This ‘c factor’ is not strongly correlated with the average or maximum individual intelligence of group members but instead with the average social sensitivity of group members, the equality in distribution of conversational turn-taking and the proportion of females in the group.”51, p. 686 Certain characteristics of the individuals within the group and the group’s ability to work together as a whole can influence the effectiveness of the group. “A group’s interactions drive its intelligence more than the brain power of individual members.”52 Approaches to assessing and measuring the c factor within a group are now being explored with hopes that this measure could be used to support the effectiveness of groups or teams.53,54

Information, once taken into the system, is retained in several different formats. The three most common formats are episodic order, hierarchical order, and linked. For example, life events are often retained in episodic order. A list of computer commands is also retained in episodic order. Psychomotor commands learned episodically can become automatic. An example of this can be seen in the simple behavior of typing or the more complex behavior of driving a car. Cognitive learning tends to be retained in hierarchical order. For example, penicillin is an antibiotic. An antibiotic is a medication. Finally, information is retained because it is linked or related to other information. For example, the concept “paper” is related to a printer. The process by which information is retained in long-term memory (LTM) can be reinforced by a variety of teaching techniques. Providing the student with an outline when presenting cognitive information helps the learner retain the information in hierarchical order. Telling stories or jokes can be used to reinforce links between concepts. Practice exercises that encourage repeated use of specific keystroke sequences or computer commands assist with long-term retention of psychomotor episodic learning, including muscle memory.
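
For readers who think in terms of data structures, the three retention formats loosely resemble an ordered list, a tree, and a graph. The sketch below is an analogy only and makes no claim about how memory is actually implemented. The command sequence is invented for illustration, and the function name `ancestors` is hypothetical; the penicillin and paper examples come from the text.

```python
# Episodic order: items are kept in the sequence in which they occur.
episodic = ["open chart", "select patient", "enter order", "sign order"]

# Hierarchical order: each concept points to the broader concept it belongs to.
hierarchy = {"penicillin": "antibiotic", "antibiotic": "medication"}

# Linked: concepts are connected to related concepts with no ranking implied.
links = {"paper": {"printer"}, "printer": {"paper"}}

def ancestors(concept, parent_of):
    """Walk up the hierarchy and return every broader concept, nearest first."""
    chain = []
    while concept in parent_of:
        concept = parent_of[concept]
        chain.append(concept)
    return chain

broader = ancestors("penicillin", hierarchy)
```

The teaching techniques described above map onto these structures: an outline reinforces the hierarchy, a story reinforces the links, and repeated practice reinforces the sequence.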