CHAPTER 82 Assessing Evidence

Between 1965 and 2009, 1663 scientific articles were published on periodontal diseases and antibacterial agents. Which of these articles provide information that is clinically relevant? Are these 1663 articles accurately summarized in educational courses, textbooks, or systematic reviews? Relying on authority to provide answers to such questions is dangerous.101

Einstein purportedly said that “his own major scientific talent was his ability to look at an enormous number of experiments and journal articles, select the very few that were both correct and important, ignore the rest, and build a theory on the right ones.”13 Most evidence-based clinicians probably aspire toward the same goal in the evaluation of clinical evidence. In this search for good evidence, a “baloney detection kit”54 is needed to separate salesmanship from science and suggestive hints from unequivocal evidence. This chapter discusses 12 tools that may be useful in assessing causality in clinical sciences.

Twelve Tools for Assessing Evidence

1 Be Skeptical.

By 1990, based on the results of a dozen studies, it was concluded that “available data thus strongly support the hypothesis that dietary carotenoids reduce the risk of lung cancer.”121 Beta carotene (β-carotene) was hypothesized to interfere passively with oxidative damage to deoxyribonucleic acid (DNA) and lipoproteins,63 and these beliefs in part translated into $210 million sales of β-carotene in 1997 in the United States. Was this convincing evidence or should it be evaluated skeptically? Two large, randomized controlled trials (RCTs) were initiated, and both were stopped prematurely because they indicated that β-carotene increased lung cancer risk, cardiovascular disease risk, and overall mortality risk.11,92 In 2005, the primary investigator of one of the trials reported that “beta-carotene should be regulated as a human carcinogen.”92

Evidence on how to cure, manage, or prevent chronic diseases is notoriously contradictory, inconsistent, and unreliable. Mark Twain reminded people to be careful when reading health books because one may die of a misprint.100 The following powerful forces, in addition to misprints, conspire to deliver a preponderance of misleading results:

Several observations suggest that skepticism is required in the evaluation of periodontal evidence. First, the large number of “effective” periodontal treatments may be a telltale sign of a challenging chronic disease. Before 1917, there were hundreds of pneumonia treatments, none of which worked. Before the advent of antibiotics in the 1940s, the wealth of available tuberculosis treatments was misleading in the sense that none really worked. The current “therapeutic wealth” for periodontal diseases may well mean poverty (an indication of the absence of truly effective treatments) and a suggestion that we are dealing with a challenging chronic disease.47 Second, periodontal diseases are no longer regarded by many as the simple, plaque-related diseases they were thought to be in the mid-twentieth century but rather as complex diseases. Complex diseases are challenging to diagnose, treat, and investigate. Third, the scientific quality of periodontal studies has been rated as low.8-9 Major landmark trials were analyzed using wrong statistics,57 most randomized studies were not properly randomized,83 and the primary drivers of the periodontitis epidemic may have been misunderstood because of the lack of properly controlled epidemiologic studies.59,112 The chances that periodontal research somehow managed to escape the scientific challenges and hurdles that were present in research in other chronic diseases appear slim.

2 Don’t Trust Biologic Plausibility.

If an irregular heartbeat increases mortality risk and if encainide can turn an irregular heartbeat into a normal heartbeat, then encainide should improve survival.23 If high lipid levels increase myocardial infarction risk and if clofibrate can successfully decrease lipid levels, clofibrate should improve survival.97 Such “causal chain thinking” (A causes B, B causes C, therefore A causes C) is common and dangerous. These examples of treatment rationales, although seemingly reasonable and biologically plausible, turned out to harm patients. Causal chain thinking is sometimes referred to as “deductive inference,” “deductive reasoning,” or a “logical system.”

In mathematics, “once the Greeks had developed the deductive method, they were correct in what they did, correct for all time.”10 In medicine or dentistry, decisions based on deductive reasoning have not been “correct for all time” and are certainly not universal. Because of an incomplete understanding of biology, the use of deductive reasoning for clinical decisions may be dangerous, and it largely failed for thousands of years to lead to medical breakthroughs. In evidence-based medicine, evidence that is based on deductive inference is classified as level 5, which is the lowest level of evidence available.

Unfortunately, much of our knowledge on how to prevent, manage, and treat chronic periodontitis depends largely on deductive reasoning. Small, short-term changes in pocket depth or attachment levels have been assumed to translate into tangible, long-term patient benefits, but minimal evidence to support this deductive inference leap is available. Plaque was related to experimental gingivitis in a small uncontrolled study,72 and a “leap of faith” was made that plaque caused almost all periodontal diseases. Evidence that plaque control affects the most common forms of periodontal diseases is still weak58 and largely based on “biologic plausibility” arguments. The use of antibiotics for painful periodontal abscesses102 is similarly rationalized on deductive inference, a worrisome thought given the concerns about antibiotic resistance87,113 and the increasing evidence that no antibiotics are needed for self-limiting infections.2, 34, 49, 95 A move toward a higher level of evidence (higher than biologic plausibility) is needed to put periodontics on a firmer scientific footing.

3 What Level of Controlled Evidence Is Available?

Development of Western Science is based on two great achievements: the invention of a formal logical system (in Euclidean geometry) by the Greek philosophers, and the discovery of the possibility to find out causal relationships by systematic experiment (during the Renaissance).

Rational thought requires reliance on either deductive reasoning (biologic plausibility) or on systematic experiments (sometimes referred to as inductive reasoning). Galileo is typically credited with the start of systematic experimentation in physics. Puzzlingly, it took until the latter half of the twentieth century before systematic experiments became part of clinical thinking. Three systematic experiments are now routine in clinical research: the case-control study, the cohort study, and the randomized controlled trial (RCT). In the following brief descriptions of these three systematic experimental designs, the term exposure refers to a suspected etiologic factor or an intervention, such as a treatment or a diagnostic test, and the term endpoint refers to the outcome of disease, quality-of-life measures, or any type of condition that may be of interest in clinical studies.

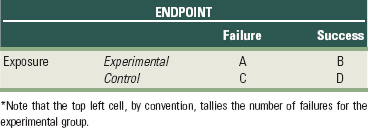

All three study designs permit us to study the association between the exposure and the endpoint. This association can be represented schematically as follows:

An important challenge in the assessment of controlled evidence is determining whether the association identified (→) is causal. Criteria used to assess causality include factors such as the assessment of temporality, the presence of a pretrial hypothesis, and the size or strength of the reported association. Unlike deductive reasoning, in which associations are either true or false, such absolute truths cannot be achieved with systematic experiments. Conclusions based on controlled study designs are always surrounded with a degree of uncertainty, a frustrating limitation to real-world clinicians who have to make yes/no decisions.

4 Did the Cause Precede the Effect?

In 2001 a study published in the British Medical Journal suggested that retroactive prayer shortened hospital stay in patients with bloodstream infection.70 The only problem was that patients were already dismissed from the hospital when the nonspecified prayer to the nonspecified deity was made. To most scientists, findings in which the effect (shorter hospital stay) precedes the cause (the prayer) are impossible, and this provides an unequivocal example of a violation of correct temporality; the effect preceded the hypothesized cause. In chronic disease research, it is often challenging to disentangle temporality, and fundamental questions regarding temporality often remain disputed. For example, in Alzheimer’s research the amyloid in the senile plaques in the brain is often considered to be the cause of Alzheimer’s disease, but some researchers suggested that amyloid may be the result rather than the cause of Alzheimer’s disease and that the amyloid actually may be protective.69 Vigorous investigation of temporality is a key aspect in scientific investigation.

Science Transfer

Clinicians can use these twelve rules as a foundation for determining clinical relevance of published reports of treatment outcomes, which will allow each doctor to make scientifically based treatment decisions without having to rely on authoritarian interpretations that can be biased. These rules can also be used as a foundation for planning clinical trials, and as the demands for more stringent approaches to clinical testing are stressed by research funding agencies and editorial decision makers, the library of reliable databases to be used for metaanalyses will grow. This will give increased emphasis to the evidence-based decision-making process so that it is incorporated into day-to-day periodontal practice.

Temporality is the only criterion that must always be satisfied before causality can be claimed: the cause needs to precede the effect. In periodontal research, many studies relating plaque or specific infections to periodontal diseases suffer from unclear temporality. Are observed microbial profiles the result or the cause of periodontitis? Do individuals with periodontitis have more plaque because they have more root surface areas to clean, or do they have poorer oral hygiene? Similarly, studies on the potential association between so-called chronic periodontitis and systemic diseases may not have adequately addressed the issue of temporality. Is chronic periodontitis preceding the systemic disease, or are chronic periodontitis and systemic disease comorbid conditions caused by a common causal factor, such as sucrose? Unequivocal establishment of temporality is an essential element of causality and can be difficult to establish for chronic diseases, including periodontal diseases.

5 No Betting on the Horse after the Race Is Over

An acquired immunodeficiency syndrome (AIDS) researcher at an international AIDS conference was jeered when she claimed that AIDS therapy provided a significant benefit for a subgroup of trial participants.89 A study published in the New England Journal of Medicine74 was taken as a textbook example of poor science37 when it claimed that coffee drinking was responsible for more than 50% of the pancreatic cancers in the United States. Results of a large collaborative study demonstrating that aspirin use after myocardial infarction increased mortality risk in patients born under Gemini or Libra provided a comical example of an important scientific principle: data-generated ideas are unreliable.

An essence of science is that hypotheses or ideas predict observations, not that hypotheses or ideas can be fitted to observed data. This essential characteristic of scientific enterprise—prediction—is often lost in medical and dental research when poorly defined prestudy hypotheses result in convoluted data-generated ideas or hypotheses that fit the observed data. It has been reported that even for well-organized studies with carefully written protocols, investigators often do not remember which hypotheses were defined in advance, which hypotheses were data derived, which hypotheses were “a priori” considered plausible, and which were unlikely.123 A wealth of data-generated ideas can be created by exploring patient subgroups, exposures, and endpoints, as shown by the following:

Deviating from the pretrial hypothesis is often compared to data torturing.82 Detecting the presence of data torturing in a published article is often challenging; just as the talented torturer leaves no scars on the victim’s body, the talented data torturer leaves no marks on the published study. Two types of torturing are recognized. Opportunistic data torturing refers to exploring data without the goal of “proving” a particular point of view. Opportunistic data torturing is an essential aspect of scientific activity and hypothesis generation. Procrustean data torturing refers to exploring data with the goal of proving a particular point of view. Just as the Greek mortal Procrustes fitted guests perfectly to his guest bed, either by stretching their bodies or by chopping off their legs to ensure correspondence between body height and bed length, so can data be fitted to the pretrial hypothesis by Procrustean means.

6 What Is a Clinically Relevant Pretrial Hypothesis?

Far better an approximate answer to the right question, which is often vague, than the exact answer to the wrong question, which can always be made precise.

—John Tukey116

When alendronate was shown to lower fracture rates (a tangible benefit),31 it became the leading worldwide treatment for postmenopausal osteoporosis, and its use is expected to continue to grow.43 When a randomized trial showed that simvastatin saved lives of patients with prior heart disease (a tangible benefit),68 sales increased by 80% in the first 9 months after the study’s publication. A pivotal trial on hormone replacement therapy122 turned thriving drug sales into a major decline.42 A pivotal trial found a routine eye surgery to be harmful, prompting the National Institutes of Health to send a clinical trial alert61 to 25,000 ophthalmologists and neurologists.6

Clinically relevant questions are designed to have an impact on clinical practice, and increasingly, trials on clinically relevant questions succeed in doing exactly that: dramatically changing clinical practice. Usually, clinically relevant questions share four important characteristics of the pretrial hypothesis: (1) a clinically relevant endpoint (referred to as the Outcome in the PICO question), (2) relevant exposure comparisons (referred to as the Intervention and the Control in the PICO question), (3) a study sample representative of real-world clinical patients (should be representative of the Patient defined in the PICO question), and (4) small error rates.

Clinically Relevant Endpoint.

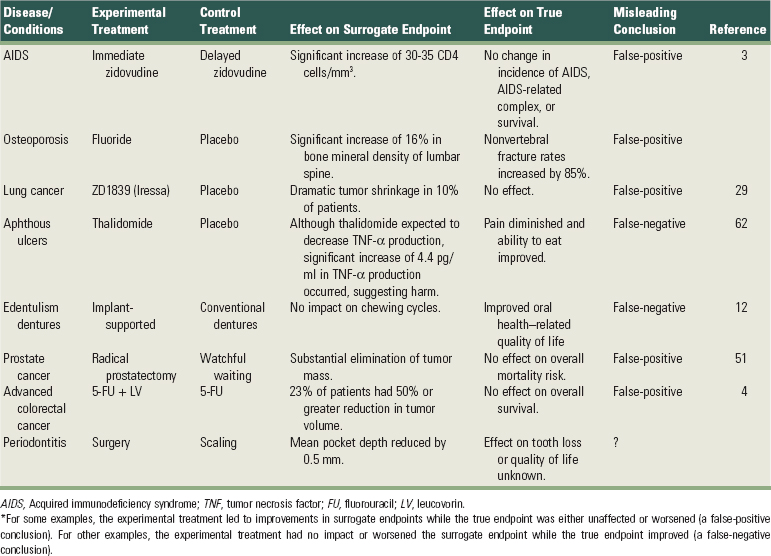

An endpoint is a measurement related to a disease process or a condition and used to assess the exposure effect. Two different types of endpoints are recognized. True endpoints are tangible outcomes that directly measure how a patient feels, functions, or survives40; examples include tooth loss, death, and pain. Surrogate endpoints are intangible outcomes used as a substitute for true endpoints109; examples include blood pressure and probing depths of periodontal pockets. Treatment effects on surrogates do not necessarily translate into real clinical benefit (Table 82-1). Use of surrogate endpoints has led to widespread use of deadly medications, and it has been suggested that such disasters should prompt policy changes in drug approval.96 Conversely, most major causes of human disease (e.g., cigarette smoking) were identified through studies using true endpoints. A first requirement for a clinically relevant study is the pretrial specification of a true endpoint.

Common and Relevant Exposure Comparisons.

The more prevalent a studied exposure, the more relevant is the clinical question. A clinically relevant exposure comparison implies (1) the absence of contrived control groups and (2) the use of a placebo control group when appropriate. Providing the control subjects with less than the standard dose of the standard treatment75,93 or providing a control therapy that avoids the real clinical questions30 are examples of clinically irrelevant research. In case-control or cohort studies the measurement and characterization of exposures (e.g., mercury, fluoride, chewing tobacco) can be difficult and imprecise, making answers imprecise. Moreover, a conclusion regarding the safety or efficacy of one particular type of exposure does not necessarily translate into safety or efficacy of apparently closely related exposures. This last consideration is important in the assessment of the “me-too” drugs or “me-too” implant systems.

Representative Study Sample.

When cholesterol-lowering drugs provided a small benefit in middle-aged men with abnormally high cholesterol levels, it was concluded that those benefits “could and should be extended” to other age groups and women with “more modest elevations” of cholesterol levels.71 Findings on blood lipids and heart disease derived mostly from Polish immigrants in the Framingham Study were generalized to a much more diverse population.46 An antidepressant, which was approved for use in adults, was widely prescribed for children with unexpected, serious consequences.1 Drugs primarily used by elderly individuals who take many drugs are often evaluated in younger individuals who take only one drug.7 The larger the discrepancy between the study sample and the patient you seek to treat, the more questionable the applicability of the study’s conclusions becomes.

Ideally, clinical trials should use simple entry criteria in which the enrolled patients reflect as closely as possible the real-world clinical practice situation.45 Legislation has been enacted to reach this goal. In 1993, U.S. policy ensured the recruitment of women and minority groups in clinical trials.18 A U.S. policy for the inclusion of children in clinical studies was then set into law in 1998. Experiments with long lists of inclusion and exclusion criteria can be expensive recipes for failure because they can lead to study subjects who are unrepresentative of most real-world clinical patients.

Small Type I and Type II Error Rates.

The type I error rate is the likelihood of concluding there is an effect, when in truth there is no effect. The type I error rate is set by the investigator, and common values are 1% or 5%. The type II error rate is the likelihood of concluding there is no effect, when in truth there is an effect. The type II error rate is typically set by the investigator at 10% or 20%. The complement of the type II error rate (i.e., 1 – type II error rate) is referred to as the power of the study. The likelihood of a false-positive or false-negative result depends not only on the type I and type II error rates but also on the likelihood that a real effect exists to be found, which is not under the investigator’s control. For chronic diseases, in which the likelihood for identifying effective treatments or true causes is low, the false-positive rate can be high even when the type I error rate is low.39 Clinically relevant studies require small type I and type II error rates to minimize false-positive and false-negative conclusions.
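The dependence of the false-positive rate on how often real effects exist can be made concrete with a short calculation. The following is a minimal sketch in Python, not a method taken from the cited work: the function name `false_positive_share` and the three prior probabilities are illustrative assumptions, and the type I error rate (5%) and power (80%) are chosen from the common values mentioned above.

```python
def false_positive_share(prior: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Share of 'significant' findings that are false positives.

    prior : assumed probability that a tested hypothesis is true (hypothetical input)
    alpha : type I error rate
    power : 1 - type II error rate
    """
    true_positives = prior * power            # real effects that are detected
    false_positives = (1.0 - prior) * alpha   # null effects declared significant
    return false_positives / (true_positives + false_positives)


# Hypothetical priors: from a coin-flip hypothesis to a long shot.
for prior in (0.50, 0.10, 0.01):
    share = false_positive_share(prior)
    print(f"prior = {prior:.2f}  ->  false-positive share = {share:.2f}")
```

Under these assumed inputs, the share of significant findings that are false rises steeply as true effects become rarer, even though the type I error rate stays fixed at 5%.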

7 Size Does Matter

Chronic hepatitis B infection increased the chances for liver cancer by more than 23,000%.14 Proximity to electromagnetic radiation increased the chance for leukemia in children by 49%.120 Periodontitis in populations with smokers increased the chance for coronary heart disease by 12%.60 No one doubts the causality of the association between chronic hepatitis B infection and liver cancer, but the role of periodontitis in coronary heart disease or electromagnetic radiation in childhood leukemia remains controversial. Why? To a large extent, the size of the association drives the interpretation of causality.

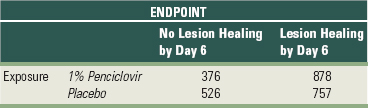

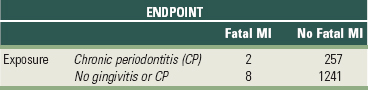

The larger an association, the less likely it is caused by bias, and the more likely it is causal. One simple way to calculate the size of the association is to calculate an odds ratio. The odds for an event is the probability that an event happens divided by the probability that an event does not happen. An odds ratio is a ratio of odds. To calculate an odds ratio, a two-by-two (2 × 2) table is constructed in which the outcome is cross-tabulated with the exposure (Table 82-2). Odds ratios can be calculated for data from RCTs, cohort studies, and case-control studies.

The odds ratio is the ratio of the cross-products (ad/bc). The odds ratio associated with penciclovir use for lesion healing is (376 × 757)/(526 × 878) = 0.62 (Table 82-3). The odds ratio associated with chronic periodontitis for a fatal myocardial infarction is (2 × 1241)/(8 × 257) = 1.21 (Table 82-4). The 95% confidence interval can be approximated by exp[ln(odds ratio) ± 1.96 √(1/a + 1/b + 1/c + 1/d)]. The 95% confidence intervals of the odds ratio for lesion healing and fatal myocardial infarction are, respectively, exp(–0.48 ± 1.96 √0.007), or 0.52 to 0.73, and exp(0.19 ± 1.96 √0.63), or 0.25 to 5.72.
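The arithmetic above can be reproduced with a few lines of Python. This is a sketch under stated assumptions: the function name `odds_ratio_ci` is hypothetical, and the cell labels a, b, c, d are assigned from the order in which the counts appear in the cross-products quoted in the text, since the tables themselves are not reproduced here.

```python
import math


def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Return (odds ratio, lower limit, upper limit) for a 2 x 2 table.

    The odds ratio is the cross-product ratio ad/bc; the interval uses the
    log-based approximation exp[ln(OR) +/- z * sqrt(1/a + 1/b + 1/c + 1/d)].
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Counts as quoted in the text; b and c are interchangeable in the formula.
penciclovir = odds_ratio_ci(376, 526, 878, 757)
fatal_mi = odds_ratio_ci(2, 8, 257, 1241)

for label, (estimate, low, high) in (("penciclovir", penciclovir), ("fatal MI", fatal_mi)):
    print(f"{label}: OR = {estimate:.2f}, 95% CI {low:.2f} to {high:.2f}")
```

The very wide interval for the myocardial infarction example is driven almost entirely by the two smallest cells (2 and 8), whose reciprocals dominate the sum under the square root.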

TABLE 82-4 Two-by-Two Table on Association between Chronic Periodontitis and Fatal Myocardial Infarction (MI)

The size of the odds ratio ranges between 0 and infinity. An odds ratio of 1 indicates the absence of an association, and if the two-by-two table is set up with the reference cell (poor outcome-exposure of interest) in the left-hand side of the top data row, an odds ratio larger than 1 means a harmful effect (e.g., periodontitis increases the odds for a fatal myocardial infarction by 20%), and an odds ratio smaller than 1 means a protective effect (e.g., penciclovir decreases the odds for failed lesion healing by day 6 by 38%).

The confidence interval is the range of numbers between the upper confidence limit and the lower confidence limit. The confidence interval contains the true odds ratio with a certain predetermined probability (e.g., 95%). In a properly executed randomized trial, a conclusion of causality is typically made if the 95% confidence interval does not include the possibility of “no association” (e.g., odds ratio = 1). For example, since the 95% confidence interval for the odds ratio associated with penciclovir use is 0.52 to 0.73 and does not include 1, the effect can be referred to as “statistically significant.” For the chronic periodontitis–myocardial infarction example, the 95% confidence interval ranges from 0.25 to 5.72, includes 1, and is therefore referred to as “statistically insignificant.”

In epidemiology, where there is no randomization of individuals to exposures, the interpretation of a confidence interval is challenging because no probabilistic basis (in the form of randomization) exists for making causal inference. A pessimist will claim that since no randomization was present, no statistical interpretations are allowed.46 The emphasis should be on visual display of the identified associations and on sensitivity analyses where the results are interpreted under “what if” assumptions.115 An optimist will argue that the absence of randomization does not preclude the making of statistical inferences, and that one always starts from the assumption that “assignments were random” (even when they were not).124

When individuals are randomly assigned to exposures, very small associations (i.e., associations very close to 1, such as 1.1) can reliably be identified. When individuals are not randomly assigned to exposures, as is the case in cohort studies and case-control studies, the size of the reported association (e.g., the odds ratio) becomes key in the interpretation of the findings. Because of the inherent biases in epidemiologic research, small odds ratios cannot be reliably identified. But what is small? Leading epidemiologists provide some guidelines on how to interpret the size of an association with respect to possible causality. Richard Doll, one of the founders of epidemiology, said, “No single epidemiological study is persuasive by itself unless the lower limit of its 95% confidence level falls above a threefold (200%) increased risk.” Trichopoulos, past chairperson of epidemiology at Harvard University, opts “for a fourfold (300%) increase at the lower limit (of the 95% confidence interval).” Marcia Angell, former editor of the New England Journal of Medicine, reported: “As a general rule of thumb we are looking for an odds ratio of 3 or more (≥200% increased odds) [before accepting a paper for publication].” Robert Temple, Director of the Food and Drug Administration, stated: “My basic rule is if the odds ratio isn’t at least 3 or 4 (a 200% or 300% increased risk), forget it.”108 These opinions provide some guidelines on what size of odds ratio to look for when determining causality.

8 Is There “Even One Different Explanation that Works as Well or Better?”55

No amount of experimentation can ever prove me right; a single experiment can prove me wrong.

When you have eliminated the impossible, whatever remains, however improbable, must be the truth.

Dozens of epidemiologic studies appeared to support the hypothesis that β-carotene intake lowered lung cancer risk. However, two RCTs provided unequivocal evidence to the contrary.11,92 What went wrong? Different explanations that worked as well or better may have been inadequately explored. Possibly, smoking was not adequately considered as an alternative explanation and led to a misunderstanding on the health effects of β-carotene.38,107 Similarly, epidemiology appeared to support the hypothesis that Chlamydia pneumoniae caused myocardial infarctions.48 Again, however, a systematic review of RCTs suggested that the C. pneumoniae theory may be dead.90 Why was epidemiology misleading? Again, different explanations may have been inadequately explored. Analyses restricted to “never-smokers” indicated that C. pneumoniae infection was not associated with an increased coronary heart disease risk,110 a finding consistent with RCT results. The highest goal of a scientist is the attempt to refute, disprove, and vigorously explore factors and alternative hypotheses that may “explain away” the observed association.21 Not vigorously exploring smoking as a potential confounder may have led to a significant waste of clinical research resources and a lost opportunity to explore more solid epidemiologic leads for lung cancer and coronary heart disease. Is it possible that similar mistakes are occurring in periodontics-systemic disease research?

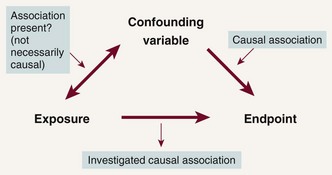

For a factor to explain away an observed association, two criteria need to be fulfilled. First, the factor must be related to the exposure, but not necessarily in a causal way. Second, the factor must be causally related to the outcome and must not be in the causal pathway. If both criteria are satisfied, the factor is referred to as a confounder, and confounding is said to be present. For example, smoking satisfied the criteria for a confounder in the β-carotene–lung cancer association because (1) cigarette smokers consumed less β-carotene than nonsmokers and (2) smoking caused lung cancer. Confounding is often represented schematically (Figure 82-1).

Figure 82-1 Schematic representation of the two necessary criteria for a variable to induce spurious associations (i.e., to be a confounding variable). The confounding variable has to be (1) associated with the exposure and (2) causally linked to the outcome. When both criteria are satisfied, confounding is said to be present.

In randomized studies, confounding is not an issue because randomization balances known and unknown confounders across the compared groups with a high degree of certainty. In epidemiologic studies, in which no randomization is present, three questions related to confounding need to be considered in the assessment of the causality, as addressed next.

First, were all important confounders identified? Complex diseases have multiple risk factors, which may act as confounders in the reported association. The multiple confounders need to be included in the statistical analyses. An association unadjusted for any potential confounders is sometimes referred to as the crude association. When this crude association is adjusted for potential confounders, it is referred to as an adjusted association. Typically, crude associations are adjusted for multiple confounders, and both crude and adjusted odds ratios are presented.
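The difference between a crude and an adjusted odds ratio can be illustrated with invented numbers. The sketch below is an assumption-laden example, not an analysis from the chapter: the counts are hypothetical, and the adjustment uses the Mantel-Haenszel pooled odds ratio, one standard stratified estimator that the text itself does not name. Smoking is constructed to be related both to the exposure and to the outcome, while the exposure has no effect within either smoking stratum.

```python
# Each stratum is a 2 x 2 table (a, b, c, d):
#   a = exposed cases,   b = exposed non-cases,
#   c = unexposed cases, d = unexposed non-cases.
# Hypothetical counts: within each stratum the odds ratio is exactly 1.
strata = {
    "smokers": (80, 320, 20, 80),
    "never-smokers": (5, 95, 20, 380),
}


def crude_odds_ratio(tables):
    """Collapse all strata into one table and take the cross-product ratio."""
    a, b, c, d = (sum(cells) for cells in zip(*tables))
    return (a * d) / (b * c)


def mantel_haenszel_odds_ratio(tables):
    """Pooled odds ratio: sum(a*d/n) / sum(b*c/n) over the strata."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in tables)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in tables)
    return numerator / denominator


crude = crude_odds_ratio(strata.values())
adjusted = mantel_haenszel_odds_ratio(strata.values())
print(f"crude OR = {crude:.2f}, smoking-adjusted OR = {adjusted:.2f}")
```

In this constructed example the crude odds ratio is well above 1 and the adjusted odds ratio is 1, so the entire crude association is explained away by smoking. Real data rarely separate this cleanly, which is why both crude and adjusted estimates are usually reported.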

Second, how accurately were confounders measured? Some potential confounders, such as age, gender, and race, can be measured relatively accurately. Other potential confounders, such as smoking or lifestyle, are notoriously more difficult to measure. For instance, an accurate summary of smoking history over a person’s lifetime may be impossible.105 A discrepancy between what is measured and what is the truth will result in the incomplete removal of bias and lead to spurious associations. The remaining bias is sometimes referred to as residual confounding. Residual confounding is common in epidemiology and is one of the reasons why case-control and cohort studies are less effective research tools than randomized trials in identifying small effects.

Third, was the statistical modeling of the confounders appropriate? Any misspecification of the functional relationships causes bias. For example, assuming a linear relationship between a confounder and an endpoint, while in truth the relationship is quadratic, will cause bias.

Evaluating the impact of confounding is a complex challenge. The goal of an epidemiologist is to come up with the best possible defense why an identified association is spurious.73 All possible efforts should be spent identifying known confounders, obtaining accurate measurements on the confounders, and exploring different analytic approaches to refute the observed association. Smoking, a potential confounder in many studies, has been found to be such a strong confounder that several leading epidemiologists have suggested that restriction to never-smokers is required to eliminate the potential for residual confounding by smoking.94 Control for confounding is the major methodological challenge in epidemiology, and randomization is the only tool available to eliminate confounding reliably.

9 Was the Study Properly Randomized?

Attempts by physicians to circumvent randomization are not isolated events; they’re part of an endemic problem stemming from ignorance.89

Randomization is a counterintuitive process because it (1) creates heterogeneity, (2) takes control over treatment assignment away from the physician, and (3) leads to apparently illogical situations in which patients randomly assigned to a treatment, but refusing it, still are analyzed as if they received the treatment. Although randomization was a radical innovation introduced for agriculture, it is doubtful whether it would have ever been introduced into medicine (and subsequently dentistry) were it not for a confluence of factors surrounding the end of World War II in Great Britain. Because of the revolutionary nature of randomization, fundamental misunderstandings of this process remain. About one third of the clinical trials published in elite medical journals apparently do not ensure that patients are assigned to different treatments by chance.5 The majority of reported periodontal trials fail to convince reviewers that (1) the studies were properly randomized, (2) randomization was concealed, or (3) randomized patients were accounted for.83 Tampering with the delicate process of randomization can quickly, using the words of Ronald Fisher, a statistician and geneticist, turn an “experiment into an experience.”

Several studies have shown how an inadequate randomization process will bias study findings. In one review study, the ability to reject patients from the study after random treatment assignment tripled the likelihood of finding significant results and doubled the likelihood that confounders were unequally distributed among the compared groups.24 Trials where clinicians can break the randomization code reported treatment effects that averaged 30% larger than effects in trials where the randomization could not be broken.65 The common desire to eliminate noncompliant patients can similarly lead to biases, as shown by the following two examples. First, in one cardiovascular disease trial, patients compliant to a placebo pill had a 10% reduction in mortality risk compared with patients noncompliant to the placebo.28 Second, in a caries trial, adolescents compliant to a placebo varnish had on average 2.2 fewer new caries lesions than adolescents noncompliant to a placebo varnish.41 Clearly, factors related to compliance and unrelated to the treatments under investigation have a powerful influence on the outcome measured. Deleting such noncompliant patients may lead to biases.
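The bias introduced by deleting noncompliant patients can be illustrated with a little arithmetic. The sketch below is hypothetical: the counts and event risks are invented, the test treatment is assumed to have no effect at all, and poor-prognosis patients are assumed to comply less often in the test arm than in the placebo arm. It compares an analysis of all randomized patients (as assigned) with an analysis restricted to compliant patients.

```python
# Invented numbers for illustration; the treatment is assumed to do nothing,
# so the event risk depends only on the patient's prognosis.
EVENT_RISK = {"good": 0.10, "poor": 0.30}

TRIAL = {
    "test": {
        "good": {"randomized": 500, "compliant": 500},
        "poor": {"randomized": 500, "compliant": 100},
    },
    "placebo": {
        "good": {"randomized": 500, "compliant": 500},
        "poor": {"randomized": 500, "compliant": 300},
    },
}

def event_rate(arm, population):
    """Expected event rate in one arm for 'randomized' or 'compliant' patients."""
    strata = TRIAL[arm]
    subjects = sum(counts[population] for counts in strata.values())
    events = sum(
        counts[population] * EVENT_RISK[prognosis]
        for prognosis, counts in strata.items()
    )
    return events / subjects

for population in ("randomized", "compliant"):
    test_rate = event_rate("test", population)
    placebo_rate = event_rate("placebo", population)
    print(f"{population:>10}: test {test_rate:.3f} vs placebo {placebo_rate:.3f}")
```

Under these assumptions the as-randomized comparison shows identical event rates in the two arms, whereas the compliers-only comparison favors the test treatment even though it is inert. The apparent benefit arises only because the compliant subgroup of the test arm contains fewer poor-prognosis patients.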

Proper randomization ideally includes the following elements. First, subjects are enrolled into a study before randomization: important baseline disease characteristics are recorded and provided to an independent person or organization. This step ensures that baseline information is available for every patient who will be randomized. Without this step, randomized patients can be “lost,” leading to biases. Subsequently, an independent person or organization randomly assigns subjects to treatments and informs the clinician regarding the treatment assignment. This randomization process needs to be auditable, making unverifiable processes such as coin tosses unacceptable. The concealment of the randomization process ensures that clinicians cannot crack the code and therefore cannot enroll only those patients they think are suited for the treatment that will be assigned. Finally, the outcome in the subject is evaluated regardless of follow-up time or compliance and according to the treatment assigned, not the treatment received. Imputation is used in sensitivity analyses to determine the extent to which subjects with missing information can bias the conclusions. The whole process of randomization is complex, and deviations from it are common and lead to unreliable results.
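The elements listed above can be sketched in a few lines of code. The example below is a minimal illustration under stated assumptions, not a description of any trial's actual system: the class name, the treatment labels, the baseline field, and the use of permuted blocks of four are all hypothetical. It shows an allocation service that refuses to reveal an assignment until baseline data are on file, keeps a log of every randomized subject, and draws from a seeded generator so that, unlike a coin toss, the sequence can be regenerated and checked by an auditor.

```python
import random

class IndependentAllocator:
    """Hypothetical allocation service run by someone other than the treating clinician."""

    def __init__(self, seed, treatments=("test", "control"), block_size=4):
        if block_size % len(treatments) != 0:
            raise ValueError("block_size must be a multiple of the number of treatments")
        self._rng = random.Random(seed)   # seed is held by the independent party
        self._treatments = tuple(treatments)
        self._block_size = block_size
        self._pending = []                # assignments not yet revealed
        self.audit_log = []               # one entry per randomized subject

    def randomize(self, subject_id, baseline):
        """Reveal the next assignment only after baseline data are recorded."""
        if not baseline:
            raise ValueError("baseline characteristics must be recorded first")
        if any(entry["subject_id"] == subject_id for entry in self.audit_log):
            raise ValueError("subject has already been randomized")
        if not self._pending:
            # Start a new permuted block with equal numbers of each treatment.
            repeats = self._block_size // len(self._treatments)
            self._pending = list(self._treatments) * repeats
            self._rng.shuffle(self._pending)
        arm = self._pending.pop()
        self.audit_log.append(
            {"subject_id": subject_id, "baseline": dict(baseline), "assigned": arm}
        )
        return arm


allocator = IndependentAllocator(seed=12345)
for number in range(1, 9):
    allocator.randomize(f"S{number:03d}", {"mean_probing_depth_mm": 4.0})

for entry in allocator.audit_log:
    print(entry["subject_id"], entry["assigned"])
```

Because every randomized subject appears in the log with the assigned treatment, the final analysis can be run according to assignment, and no randomized patient can silently disappear. A real system would need more than this sketch provides; for example, a fixed and known block size can make the last assignment in a block predictable.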

10 When to Rely on Nonrandomized Evidence?

—Thomas Chalmers25

To tell the truth, all of the discussion today about the patient’s informed consent still strikes me as absolute rubbish.

More than 50 epidemiologic studies reported evidence that hormone replacement therapy provided benefits to postmenopausal women.36 Despite this “strong” evidence from “leading” researchers, and despite the opposition on ethical grounds to initiating a placebo-controlled trial, the Women’s Health Initiative trial was launched. The “miracle” of hormone replacement therapy was shown to lead to increases in breast cancer risk, dementia, myocardial infarction, and stroke. This example illustrates well the need for randomized studies and for questioning well-established and widely accepted beliefs based on numerous epidemiologic studies. Nonetheless, the initiation of randomized trials can become difficult because of ethical considerations and unaffordably large sample sizes.

Ethical principles dictate that proposed interventions do more good than harm, that the populations in whom the study will be conducted will benefit from the findings, that informed consent is obtained from enrolled subjects, and that a genuine uncertainty exists with respect to treatment efficacy. The interpretation of these ethical principles is largely determined by culture and era. Ethical principles also play an important role in determining which clinical questions are sufficiently important to warrant the conduct of a randomized controlled trial (RCT).

Sample size considerations may prevent the conduct of RCTs. The smaller the rate at which endpoints occur in an RCT, the larger the required sample size will be. For rare events such as bacterial endocarditis subsequent to a dental procedure or HIV conversion after exposure to an HIV-contaminated dental needle, RCTs may never be possible because the required sample sizes are in the 100,000s or millions of subjects.
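The relationship between event rate and sample size can be made explicit with the usual normal-approximation formula for comparing two proportions, n per group = (z for alpha/2 + z for power)^2 × [p1(1 − p1) + p2(1 − p2)] / (p1 − p2)^2. The sketch below applies it to a range of control-group event rates. The rates, the 50% relative risk reduction, the two-sided alpha of 0.05, and the 80% power are assumptions chosen for illustration and do not describe any particular condition or trial.

```python
from math import ceil
from statistics import NormalDist

def subjects_per_group(control_rate, relative_reduction, alpha=0.05, power=0.80):
    """Approximate subjects per arm for a two-arm trial comparing two proportions."""
    p1 = control_rate
    p2 = control_rate * (1.0 - relative_reduction)
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1.0 - p1) + p2 * (1.0 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)

# Hypothetical control-group event rates, from common to very rare.
for rate in (0.10, 0.01, 0.001, 0.0001):
    needed = subjects_per_group(rate, relative_reduction=0.5)
    print(f"control event rate {rate:.4f}: about {needed:,} subjects per group")
```

Each tenfold drop in the event rate raises the required sample size roughly tenfold, which is why trials of very rare endpoints quickly reach the hundreds of thousands of subjects.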

In addition to ethical and practical reasons, there can be powerful political issues surrounding the decision to initiate clinical trials. Nonetheless, unequivocal evidence requires the conduct of rigorously designed and executed RCTs. The smaller the beneficial effect identified in observational studies and the more prevalent the exposure, the larger the need for RCT evidence. Although certain important clinical questions may never have reliable answers, the absence of RCT evidence for important and answerable clinical questions can be frustrating to those who seek reliable evidence-based practice guidelines.

11 Placebo Effects: Real or Sham?

I never knew any advantage from electricity in (the treatment of) palsies that was permanent. And how far the apparent temporary advantage might arise from the exercise in the patient’s journey, and coming daily to my house, or from the spirits given by the hope of success, enabling them to exert more strength in moving their limbs, I will not pretend to say.

—Benjamin Franklin117

Sham or mock surgeries have been used to evaluate whether implanting human fetal tissue in the brain decreases symptoms of Parkinson’s disease,91 whether surgical lavage and débridement decreases pain in arthritic knee joints,84 whether mammary artery ligation improves heart disease outcomes, and whether alveolar trephination relieves the pain of acute apical periodontitis.52,88

What motivates clinical investigators to subject patients to surgical risks, and yet knowingly provide no hypothesized benefits to these patients? A partial answer to this question lies in a phenomenon known as placebo effects: the beneficial effects some patients experience by simply participating in a study, by patient-physician interaction, by the patient’s anticipation for improvement, or by the patient’s desire to please the physician. A small, controlled study in 11 patients showed that placebo effects can cause changes in brain function,17 providing plausibility to the argument that placebo effects may have biologic effects. Because of such placebo effects, without mock surgeries it would be impossible to tell whether the improvements observed in clinical trials are caused by the placebo effects associated with the surgical procedures or by the hypothesized active ingredient of the surgery itself.

Two studies have quantified the placebo effect. In the first study the magnitude of the placebo effect was estimated by evaluating patient responses to ineffective treatments.98 Five treatments were identified as ineffective if the treatment had been abandoned by the medical profession and if at least one controlled study confirmed its ineffectiveness. With these ineffective treatments, good to excellent treatment responses were observed in 45% to 90% of the patients, a powerful placebo effect indeed. In the second study, placebo interventions (pharmacologic placebo, physical or psychologic intervention) were compared to true “no-treatment” interventions.53 A significant placebo effect was observed for pain, the condition that was evaluated in the largest number of trials and that had the largest number of evaluated participants. No significant placebo effects were detected for other outcomes such as weight loss.

In dentistry, sound systematic reviews may provide some ability to evaluate placebo effects. One systematic review of 133 studies reported that the fluoride effect on caries was significantly larger when a no-treatment control group was used versus a placebo–control group.77 One possible interpretation for these differences is a placebo effect: the placebo lowered caries rates. Other explanations, such as the scientific quality of the studies, may of course also be responsible for such observed differences. Overall, sufficient evidence is available to suggest that placebo effects can be real and measurable, especially for pain, and that the magnitude of the placebo effect may depend on the treatment under study and the type of outcome evaluated.

12 Was There Protection Against Conflict of Interest?

—A phrase coined by cardiac surgeons to refer to patients who died or were injured using Heartport equipment

Can one trust clinical recommendations regarding a novel, noninvasive cardiac bypass surgery by a physician who has a $100 million stake in the procedure he is recommending? Is it possible that financial stakes are preventing physicians from disclosing a tenfold increased mortality risk? Can one trust guidelines establishing sharply lowered lipid levels knowing that eight of the nine panel experts have financial connections to the manufacturers of lipid-lowering drugs?64 Is it possible that science panelists are picked for ideology?15 The answers to these questions are not straightforward and in general are discussed under the heading of “conflicts of interest.”

Conflict of interest has been defined as “a set of conditions in which professional judgment concerning a primary interest (such as patient’s welfare or validity of research) tends to be unduly influenced by a secondary interest.”111 A common secondary interest is financial, but others include religious or scientific beliefs, ideologic or political beliefs, and academic interests (e.g., promotion). Some examples of how conflicts of interest can bias evidence are now given:

The potential for conflict of interest has increased over the past 20 years. The prevalence of industry-funded trials increased from 32% to 62% between 1980 and 2000,85-86 and two thirds of universities hold stock in start-up companies that support clinical trials within that institution.16 Such connections can be viewed with a skeptical eye: industry-sponsored studies are 3.6 times as likely to have pro-industry conclusions as non–industry-sponsored studies.16 Even if industry-funded trials are executed with the highest degree of ethics and patient benefit in mind, the decrease of public trust caused by apparent conflict of interest can be damaging to the scientific reputation of journals, academic institutions, and science itself.32

Because of these issues, the proper handling of potential conflict-of-interest issues is an important aspect of clinical research. During the conduct of the research itself, independent data and safety monitoring boards provide protection against such biases. Policy regulations established by journals, academic institutions, and governments can further decrease the impact of perceived conflicts of interest. For instance, work is currently being done to eliminate the “file-drawer problem” by establishing a registry of RCTs in which investigators need to deposit the RCT protocols before their initiation.33

Conflict-of-interest issues may be just as prevalent in dental research as in other medical areas dealing with chronic disease. In 2002 an article published in a leading dental journal ended up on the front page of the New York Times.93 In part, the reason was a perceived conflict of interest; the article did not disclose that funding for the study came from an advertising company. Disclosure of conflict of interest is often poorly enforced, and some dental journals do not have regulations in place to reveal potential conflicts of interest of authors. Such situations (1) make it challenging for clinicians to recognize the potential for conflicts of interest, (2) may reduce trust in dental journals, and (3) may affect the scientific integrity of dental research.

Conclusion

Lessons learned from other chronic disease areas do apply to the evaluation of evidence in the periodontal disease research arena. Randomization or confounding is as important in periodontal research as it is in cancer research. Work remains to be done to integrate evidence-based thinking into clinical practice. The most challenging task may be to lessen the excessive reliance on biologic plausibility in determining both research priorities and patient management and to transition to clinical thinking that is based on controlled clinical observations, rather than biologic plausibility. Although the 12 proposed tools for assessing evidence do not cover all necessary tools, or even the most important tools, it is hoped that they provide a useful starting point for the further exploration of the issues and principles involved in the conduct of systematic experiments in periodontal disease research.

1 Abbott A. British panel bans use of antidepressant to treat children. Nature. 2003;423:792.

2 Abbott AA, Koren LZ, Morse DR, et al. A prospective randomized trial on efficacy of antibiotic prophylaxis in asymptomatic teeth with pulpal necrosis and associated periapical pathosis. Oral Surg Oral Med Oral Pathol. 1988;66:722.

3 Aboulker JP, Swart AM. Preliminary analysis of the Concorde trial, Concorde Coordinating Committee (see comments). Lancet. 1993;341:889. (letter)

4 Advanced Colorectal Cancer Meta-Analysis Project. Modulation of fluorouracil by leucovorin in patients with advanced colorectal cancer: evidence in terms of response rate. J Clin Oncol. 1992;10:896.

5 Altman DG, Dore CJ. Randomisation and baseline comparisons in clinical trials. Lancet. 1990;335:149.

6 Altman LK: Study prompts call to halt a routine eye operation, New York Times, Feb 22, 1995, p C100.

7 Angell M. The truth about the drug companies: how they deceive us and what to do about it. New York: Random House; 2004.

8 Antczak AA, Tang J, Chalmers TC. Quality assessment of randomized control trials in dental research. I. Methods. J Periodontal Res. 1986;21:305.

9 Antczak AA, Tang J, Chalmers TC. Quality assessment of randomized control trials in dental research. II. Results: periodontal research. J Periodontal Res. 1986;21:315.

10 Asimov I. A history of mathematics, ed 2. New York: Wiley & Sons; 1991. p vii

11 ATBC Cancer Prevention Study Group. The Alpha-Tocopherol, Beta-Carotene Lung Cancer Prevention Study: design, methods, participant characteristics, and compliance. Ann Epidemiol. 1994;4:1.

12 Awad MA, Locker D, Korner-Bitensky N, Feine JS. Measuring the effect of intra-oral implant rehabilitation on health-related quality of life in a randomized controlled clinical trial. J Dent Res. 2000;79:1659.

13 Barry JM. The great influenza: the epic story of the deadliest plague in history. New York: Viking; 2004. p 61

14 Beasley RP, Hwang LY, Lin CC, Chien CS. Hepatocellular carcinoma and hepatitis B virus: a prospective study of 22,707 men in Taiwan. Lancet. 1981;2:1129.

15 Begley S: Now, science panelists are picked for ideology rather than expertise, Wall Street Journal, Dec 6, 2002, p B1.

16 Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;289:454.

17 Benedetti F, Colloca L, Torre E, Lanotte M, et al. Placebo-responsive Parkinson patients show decreased activity in single neurons of subthalamic nucleus. Nat Neurosci. 2004;7:587.

18 Bennett JC. Inclusion of women in clinical trials: policies for population subgroups (see comments). N Engl J Med. 1993;329:288.

19 Bodenheimer T, Collins R: The ethical dilemmas of drug, money, and medicine, Seattle Times, 2001.

20 Brody BA. Ethical issues in drug testing, approval, and pricing: the clot dissolving drugs. New York: Oxford University Press; 1995.

21 Buck C. Popper’s philosophy for epidemiologists. Int J Epidemiol. 1975;4:159.

22 Burton TM: Why cheap drugs that appear to halt fatal sepsis go unused, New York Times, May 17, 2002, p A1.

23 Cardiac Arrhythmia Suppression Trial (CAST) investigators. Preliminary report: effect of encainide and flecainide on mortality in randomized trial of arrhythmia suppression after myocardial infarction. N Engl J Med. 1989;321:406.

24 Chalmers T, Celano P, Sacks H, Smith H. Bias in treatment assignment in controlled clinical trials. N Engl J Med. 1983;309:1358.

25 Chalmers TC. Randomization of the first patient. Med Clin North Am. 1975;59:1035.

26 Cobb LA, Thomas GI, Dillard DH. An evaluation of internal mammary artery ligation by a double-blind technique. N Engl J Med. 1959;260:1115.

27 Cohen J. Clinical research: AIDS vaccine results draw investor lawsuits. Science. 2003;299:1965.

28 Cohen J. Public health: AIDS vaccine trial produces disappointment and confusion. Science. 2003;299:1290.

29 Coronary Drug Project Research Group. Influence of adherence to treatment and response of cholesterol on mortality in the Coronary Drug Project. N Engl J Med. 1980;303:1038.

30 Couzin J. Cancer drugs: smart weapons prove tough to design. Science. 2002;298:522.

31 Crossen C: The treatment: a medical researcher pays for challenging drug-industry funding, Wall Street Journal, Aug 6, 2001, p A1.

32 Cummings SR, Black DM, Thompson DE, et al. Effect of alendronate on risk of fracture in women with low bone density but without vertebral fractures: results from the Fracture Intervention Trial. JAMA. 1998;280:2077.

33 DeAngelis CD. Conflict of interest and the public trust. JAMA. 2000;284:2237.

34 DeAngelis CD, Drazen JM, Frizelle FA, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. JAMA. 2004.

35 Del Mar C, Glasziou P, Hayem M. Are antibiotics indicated as initial treatment for children with acute otitis media? A meta-analysis. BMJ. 1997;314:1526.

36 Ehrlich R. Can a sugar pill kill you? In: 8 preposterous propositions. Princeton, NJ: Princeton University Press; 2003.

37 Enserink M. Women’s health: The vanishing promises of hormone replacement. Science. 2002;297:325.

38 Feinstein AR. Scientific standards in epidemiologic studies of the menace of daily life. Science. 1988;242:1257.

39 Feskanich D, Ziegler RG, Michaud DS, et al. Prospective study of fruit and vegetable consumption and risk of lung cancer among men and women. J Natl Cancer Inst. 2000;92:1812.

40 Fleming TR. Historical controls, data banks, and randomized trials in clinical research: a review. Cancer Treat Rep. 1982;66:1101.

41 Fleming TR, DeMets DL. Surrogate end points in clinical trials: are we being misled? Ann Intern Med. 1996;125:605.

42 Forgie AH, Paterson M, Pine CM, et al. A randomised controlled trial of the caries-preventive efficacy of a chlorhexidine-containing varnish in high-caries-risk adolescents. Caries Res. 2000;34:432.

43 Fuhrmans V: HRT worries give headaches to drug makers, Wall Street Journal, Oct 25, 2002, p B1.

44 Gilmartin RV: Chairman’s report, Annual Meeting of Stockholders, 2002, Merck & Co.

45 Grady D: Breast cancer researcher admits falsifying data, New York Times, Feb 2000, p A7.

46 Gray R, Clarke M, Collins R, Peto R. Making randomised trials larger: a simple solution? Eur J Surg Oncol. 1995;21:137.

47 Greenland S. Randomization, statistics, and causal inference. Epidemiology. 1990;1:21.

48 Guerini V. History of dentistry. New York: Lea & Febiger; 1909.

49 Gupta S, Camm AJ. Chlamydia pneumoniae, antimicrobial therapy and coronary heart disease: a critical overview. Coron Artery Dis. 1998;9:339.

50 Henry M, Reader A, Beck M. Effect of penicillin on postoperative endodontic pain and swelling in symptomatic necrotic teeth. J Endod. 2001;27:117.

51 Hensley S, Abboud L: Medical research has “black hole,” Wall Street Journal, June 4, 2004, p B3.

52 Holmberg L, Bill-Axelson A, Helgesen F, et al. A randomized trial comparing radical prostatectomy with watchful waiting in early prostate cancer. N Engl J Med. 2002;347:781.

53 Houck V, Reader A, Beck M, Nist R, Weaver J. Effect of trephination on postoperative pain and swelling in symptomatic necrotic teeth. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 2000;90:507.

54 Hrobjartsson A, Gotzsche PC. Is the placebo powerless? An analysis of clinical trials comparing placebo with no treatment. N Engl J Med. 2001;344:1594.

55 http://www1.tpgi.com.au/users/tps-seti/baloney.html

56 http://www.improbable.com/airchives/paperair/air-teachers-guide.html

57 Hujoel PP, DeRouen TA. A survey of endpoint characteristics in periodontal clinical trials published 1988-1992, and implications for future studies. J Clin Periodontol. 1995;22:397.

58 Hujoel PP, Moulton LH. Evaluation of test statistics in split-mouth clinical trials. J Periodontal Res. 1988;23:378.

59 Hujoel PP, Cunha-Cruz J, Loesche WJ, Robertson PR. Personal oral hygiene and chronic periodontitis: a systematic review. Periodontology 2000. 2005;37:29.

60 Hujoel PP, del Aguila MA, DeRouen TA, Bergstrom J. A hidden periodontitis epidemic during the 20th century? Community Dent Oral Epidemiol. 2003;31:1.

61 Hujoel PP, Drangsholt M, Spiekerman C, Derouen TA. Examining the link between coronary heart disease and the elimination of chronic dental infections. J Am Dent Assoc. 2001;132:883.

62 Ischemic Optic Neuropathy Decompression Trial Research Group. Optic nerve decompression surgery for nonarteritic anterior ischemic optic neuropathy (NAION) is not effective and may be harmful. JAMA. 1995;273:625.

63 Jacobson JM, Greenspan JS, Spritzler J, et al. Thalidomide for the treatment of oral aphthous ulcers in patients with human immunodeficiency virus infection, National Institute of Allergy and Infectious Diseases, AIDS Clinical Trials Group. N Engl J Med. 1997;336:1487.

64 Jha P, Flather M, Lonn E, et al. The antioxidant vitamins and cardiovascular disease: a critical review of epidemiologic and clinical trial data. Ann Intern Med. 1995;123:860.

65 Johnson LA: Group assails cholesterol advice over experts’ ties to drugmakers, online, July 17, Associated Press.

66 Juni P, Altman DG, Egger M. Systematic reviews in health care: assessing the quality of controlled clinical trials. BMJ. 2001;323:42.

67 Kahn JO, Cherng DW, Mayer K, et al. Evaluation of HIV-1 immunogen, an immunologic modifier, administered to patients infected with HIV having 300 to 549 × 10⁶/L CD4 cell counts: a randomized controlled trial. JAMA. 2000;284:2193.

68 Kennedy D. The old file-drawer problem. Science. 2004;305:451.

69 King RJ: Keyhole heart surgery started fast: some say too fast, Wall Street Journal, May 5, 1999, p A1.

70 Kjekshus J, Pedersen TR. Reducing the risk of coronary events: evidence from the Scandinavian Simvastatin Survival Study (4S). Am J Cardiol. 1995;76:64C.

71 Lee HG, Casadesus G, Zhu X, et al. Perspectives on the amyloid-beta cascade hypothesis. J Alzheimer Dis. 2004;6:137.

72 Leibovici L. Effects of remote, retroactive intercessory prayer on outcomes in patients with bloodstream infection: randomised controlled trial. BMJ. 2001;323:1450.

73 Lipid Research Clinics Coronary Primary Prevention Trial. Results. I. Reduction in incidence of coronary heart disease. JAMA. 1984;251:351.

74 Löe H, Theilade E, Jensen SB. Experimental gingivitis in man. J Periodontol. 1965;36:177.

75 Maclure M. Multivariate refutation of aetiological hypotheses in non-experimental epidemiology. Int J Epidemiol. 1990;19:782.

76 MacMahon B, Yen S, Trichopoulos D, et al. Coffee and cancer of the pancreas. N Engl J Med. 1981;304:630.

77 Malakoff D. Human research: Nigerian families sue Pfizer, testing the reach of U.S. law. Science. 2001;293:1742.

78 Mandel EM, Rockette HE, Bluestone CD, et al. Efficacy of amoxicillin with and without decongestant-antihistamine for otitis media with effusion in children: results of a double-blind, randomized trial. N Engl J Med. 1987;316:432.

79 Marinho VC, Higgins JP, Logan S, Sheiham A: Topical fluoride (toothpastes, mouthrinses, gels or varnishes) for preventing dental caries in children and adolescents, Cochrane Database Syst Rev CD002782, 2003.

80 Marshall E. Breast cancer: reanalysis confirms results of “tainted” study. Science. 1995;270:1562.

81 Mathews A, Burton TM: Invasive procedure; After Medtronic lobbying push, the FDA had change of heart; Agency squelches an article raising doubts on safety of device to repair artery; Threat of ‘criminal sanction,’ Wall Street Journal, June 9, 2004, p A1.

82 Matthews R: Statistics: Undermined by an error of significance, Financial Times, 2004, p 9.

83 Merikangas KR, Risch N. Genomic priorities and public health. Science. 2003;302:599.

84 Mills JL. Data torturing. N Engl J Med. 1993;329:1196.

85 Montenegro R, Needleman I, Moles D, Tonetti M. Quality of RCTs in periodontology: a systematic review. J Dent Res. 2002;81:866.

86 Moseley JB, O’Malley K, Petersen NJ, et al. A controlled trial of arthroscopic surgery for osteoarthritis of the knee. N Engl J Med. 2002;347:81.

87 Moses H III, Martin JB. Academic relationships with industry: a new model for biomedical research. JAMA. 2001;285:933.

88 National Institutes of Health. Extramural data and trends. Bethesda, Md: NIH; 2000.

89 Neu HC. The crisis in antibiotic resistance. Science. 1992;257:1064.

90 Nist E, Reader A, Beck M. Effect of apical trephination on postoperative pain and swelling in symptomatic necrotic teeth. J Endod. 2001;27:415.

91 Nowak R. Problems in clinical trials go far beyond misconduct. Science. 1994;264:1538.

92 O’Connor CM, Dunne MW, Pfeffer MA, et al. Azithromycin for the secondary prevention of coronary heart disease events: the WIZARD study—a randomized controlled trial. JAMA. 2003;290:1459.

93 Olanow CW, Goetz CG, Kordower JH, et al. A double-blind controlled trial of bilateral fetal nigral transplantation in Parkinson’s disease. Ann Neurol. 2003;54:403.

94 Omenn GS, Goodman GE, Thornquist MD, et al. Effects of a combination of beta carotene and vitamin A on lung cancer and cardiovascular disease. N Engl J Med. 1996;334:1150.

95 Peterson M: Madison Ave. has growing role in the business of drug research, New York Times, Nov 22, 2002, p A1.

96 Peto J. Cancer epidemiology in the last century and the next decade. Nature. 2001;411:390.

97 Pickenpaugh L, Reader A, Beck M, et al. Effect of prophylactic amoxicillin on endodontic flare-up in asymptomatic, necrotic teeth. J Endod. 2001;27:53.

98 Psaty BM, Weiss NS, Furberg CD, et al. Surrogate end points, health outcomes, and the drug-approval process for the treatment of risk factors for cardiovascular disease. JAMA. 1999;282:786.

99 Ramfjord SP. Surgical periodontal pocket elimination: still a justifiable objective? J Am Dent Assoc. 1987;114:37.

100 Report of the Committee of Principal Investigators. WHO cooperative trial on primary prevention of ischaemic heart disease with clofibrate to lower serum cholesterol: final mortality follow-up. Lancet. 1984;2:600.

101 Riggs BL, Hodgson SF, O’Fallon WM, et al. Effect of fluoride treatment on the fracture rate in postmenopausal women with osteoporosis. N Engl J Med. 1990;322:802.

102 Roberts AH, Kewman DG, Mercier L, Hovell MF. The power of nonspecific effects in healing: implications for psychosocial and biological treatments. Clin Psychol Rev. 1993;13:375.

103 Ross PE: Lies, damned lies and medical statistics, Forbes, 1995, p 130.

104 Ryan M. Who really said? The book that tests your “quote” IQ. Hong Kong: Great Quotations Publishing Company; 1967.

105 Sagan C. The demon-haunted world. New York: Ballantine Books; 1996.

106 Schluger S, Yuodelis RA, Page RC. Periodontal disease: basic phenomena, clinical management, and occlusal and restorative interrelationships. Philadelphia: Lea & Febiger; 1977.

107 Silverstein FE, Faich G, Goldstein JL, et al. Gastrointestinal toxicity with celecoxib vs nonsteroidal anti-inflammatory drugs for osteoarthritis and rheumatoid arthritis: the CLASS study—a randomized controlled trial, Celecoxib Long-term Arthritis Safety Study. JAMA. 2000;284:1247.

108 Sloppy stats shame science, Economist, 2004.

109 Spiekerman CF, Hujoel PP, DeRouen TA. Bias induced by self-reported smoking on periodontitis–systemic disease associations. J Dent Res. 2003;82:345.

110 Staquet MJ, Rozencweig M, von Hoff DD, Muggia FM. The delta and epsilon errors in the assessment of cancer clinical trials. Cancer Treat Rep. 1979;63:1917.

111 Steinbrook R. Public registration of clinical trials. N Engl J Med. 2004;351:315.

112 Stram DO, Huberman M, Wu AH. Is residual confounding a reasonable explanation for the apparent protective effects of beta-carotene found in epidemiologic studies of lung cancer in smokers? Am J Epidemiol. 2002;155:622.

113 Taubes G. News: Epidemiology faces its limits (see comments). Science. 1995;269:164.

114 Temple RJ. A regulatory authority’s opinion about surrogate endpoints. In: Tucker GT, editor. Clinical measurement in drug evaluation. New York: Wiley, 1995.

115 Thom DH, Grayston JT, Siscovick DS, et al. Association of prior infection with Chlamydia pneumoniae and angiographically demonstrated coronary artery disease. JAMA. 1992;268:68.

116 Thompson DF. Understanding financial conflicts of interest. N Engl J Med. 1993;329:573.

117 Tomar SL, Asma S. Smoking-attributable periodontitis in the United States: findings from NHANES III, National Health and Nutrition Examination Survey. J Periodontol. 2000;71:743.

118 Tomasz A. Multiple-antibiotic-resistant pathogenic bacteria: a report on the Rockefeller University Workshop. N Engl J Med. 1994;330:1247.

119 Tsintis P, La Mache E. CIOMS and ICH initiatives in pharmacovigilance and risk management: overview and implications. Drug Saf. 2004;27:509.

120 Tufte ER. The visual display of quantitative information. Cheshire, Conn: Graphics Press; 1983.

121 Tukey J. The future of data analysis. Ann Math Stat. 1962;33:1.

122 Van Doren C. Benjamin Franklin. New York: Viking; 1938.

123 Wadman M. Scientists cry foul as Elsevier axes paper on cancer mortality. Nature. 2004;429:687.

124 Wadman M. Spitzer sues drug giant for deceiving doctors. Nature. 2004;429:589.

125 Washburn EP, Orza MJ, Berlin JA, et al. Residential proximity to electricity transmission and distribution equipment and risk of childhood leukemia, childhood lymphoma, and childhood nervous system tumors: systematic review, evaluation, and meta-analysis. Cancer Causes Control. 1994;5:299.

126 Willett WC. Vitamin A and lung cancer. Nutr Rev. 1990;48:201.

127 Women’s Health Initiative. Risks and benefits of estrogen plus progestin in healthy postmenopausal women: principal results from the Women’s Health Initiative randomized controlled trial. JAMA. 2002;288:321.

128 Yusuf S, Wittes J, Probstfield J, Tyroler HA. Analyses and interpretation of treatment effects in subgroups of patients in randomized controlled trials. JAMA. 1991;266:93.

129 Zeger SL. RE: “Statistical reasoning in epidemiology,” the author replies. Epidemiology. 1992;135:1187.