Assistive Technology

After studying this chapter, the student or practitioner will be able to do the following:

1 Describe the range of assistive technology options currently available for persons with physical disabilities.

2 Explain the human interface assessment (HIA) model.

3 Identify common solutions for enabling control of daily living devices through technology.

4 Discuss options for augmentative and alternative communications.

5 Analyze input and output options for assistive technologies and match them to the needs of consumers.

What Is Assistive Technology?

A discussion of assistive technology should begin with a description of the scope of the topic. This is difficult because the legal definitions of assistive technology are not uniform. Assistive technologies are sometimes included in the category of rehabilitation technology.12 In other cases, rehabilitation technology is considered an aspect of assistive technologies.8 A third category, universally designed technologies, does not seem to fit into either category.11 For purposes of this discussion, the author will present a set of definitions that fall within current usage but are not necessarily congruent with any particular statute.

Rehabilitative/Assistive/Universal Technologies

The category into which an enabling technology falls depends largely on its application, not on the specific device. What is for some people a convenience may be an assistive technology for another.

Rehabilitation Technology

To rehabilitate is to restore to a previous level of function. To be consistent with general usage, therefore, the term rehabilitation technology should be used to describe technologies that are intended to restore an individual to a previous level of function following the onset of pathology. When an occupational therapist (OT) uses a technological device to establish, restore, or modify function in a client, he or she is using a rehabilitative technology. Physical agent modalities such as ultrasound, diathermy, paraffin, and functional electrical stimulation are examples of rehabilitative technology. Broadly, whenever an OT uses a technology, be it diathermy or a traditional occupational therapy activity such as leather working, with the primary goal of restoring strength, mobility, or function, the technology is rehabilitative. When these technologies have done their job, the client will have improved intrinsic function, and the technology will be removed.

Assistive Technology

Central to occupational therapy is the belief that active engagement in meaningful activity supports the health and well-being of the individual. When an individual has functional limitations secondary to some pathology, he or she may not have the cognitive, motor, or psychologic skills necessary to engage in a meaningful activity and may require assistance to participate in the desired task.

To assist is to help, aid, or support. There is no implication of restoration in the concept of assistance. Assistive technologies, therefore, are technologies that assist a person with a disability to perform tasks. More specifically, assistive technologies are technologies, whether designed for a person with a disability or designed for mass market and used by a person with a disability, that allow that person to perform tasks that an able-bodied person can do without technological assistance. It may be that an able-bodied person would prefer to use a technology to perform a task (using a television remote control, for example), but it does not rise to the level of assistive technology as long as it is possible to perform the task without the technology.

Assistive technologies replace or support an impaired function of the user without being expected to change the native functioning of the individual. A wheelchair, for example, replaces the function of walking but is not expected to teach the user to walk. Similarly, forearm crutches support independent standing but do not, of themselves, improve strength or bony integrity and thus will not change the ability of the user to stand without them.

Because they are not expected to change the native ability of the user, assistive technologies have different design considerations. They are expected to be used over prolonged periods by individuals with limited training and possibly with limited cognitive skills. The technology, therefore, must be designed so that it will not inflict harm on the user through casual misuse. The controls of the device must be readily understood such that although some training may be required to use the device, constant retraining will not be. The device should not require deep understanding of its principles and functions to be useful.

One significant difference between rehabilitation technology and assistive technology occurs at the end of the rehabilitation process. At this point the client no longer uses rehabilitation technologies but may have just completed training in the use of assistive technologies. The assistive technologies go home with the client; the rehabilitation technologies generally remain in the clinic. Some technologies do not fit neatly into these categories since they may be used differently with different clients. Some clinicians use assisted communication as a tool to train unassisted speech for their clients. For other clients, assisted communication may be used to support or replace speech. In the first case, the technology is rehabilitative. In the second, the same technology may be assistive.

Universal Design

Universal design is a very new category of technology. The principles of universal design were published by the Center for Universal Design at North Carolina State University in 1997,11 and their application is still very limited. The concept of universal design is very simple: if devices are designed with the needs of people with a wide range of abilities in mind, they will be more usable for all users, with and without disabilities.

This design philosophy could, in some cases, make assistive technology unnecessary. A can opener that has been designed for one-handed use by a busy housewife will also be usable by a cook who has suffered a cerebrovascular accident and now has the use of just one hand. Since both individuals are using the same product for the same purpose, it is just technology, not assistive technology. Electronic books on devices such as the Kindle or iPad include features to allow them to be used as “talking books.” The goal here is to provide a “hands-free, eyes-free” interface so that the books can be used by commuters while driving. However, the same interface will meet the needs of an individual who is blind and cannot see the screen or who has limitations in mobility and cannot operate the manual controls. No adaptation is necessary because the special needs of the person with a disability have already been designed into the product.

The Role of Assistive Technology in Occupational Participation

The Occupational Therapy Practice Framework7 defines the appropriate domain of occupational therapy as including analysis of the performance skills and patterns of the individual and the activity demands of the occupation that the individual is attempting to perform.

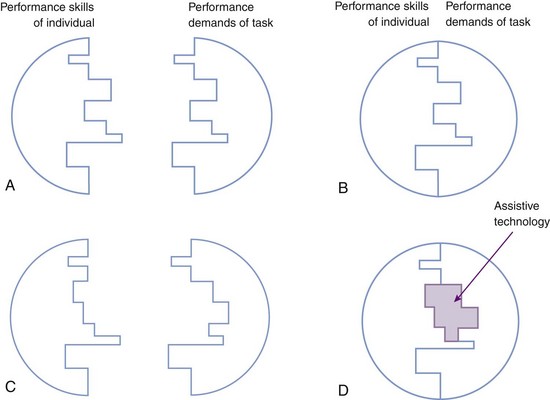

Human Interface Assessment

Anson’s human interface assessment (HIA) model provides a detailed look at the skills and abilities of humans in the skill areas of motor, process, and communication/interaction, as well as the demands of an activity (Figure 17-1, A). The HIA model suggests that when the demands of a task do not exceed the skills and abilities of an individual, no assistive technology is required, even when a functional limitation exists (Figure 17-1, B). Conversely, when a task makes demands that exceed the native abilities of the individual, the individual will not be able to perform the task in the prescribed manner (Figure 17-1, C). In these cases an assistive technology device may be used to bridge the gap between demands and abilities (Figure 17-1, D).

FIGURE 17-1 A, Skills of the individual and demands of the task. B, Match of the skills of the individual with the demands of the task. C, Mismatch of the skills of the individual and demands of the task. D, Assistive technology used to bridge the gap between the skills of the individual and the demands of the task.
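The comparison at the heart of the HIA model can be illustrated with a short sketch. The 0-to-10 rating scale, the `Profile` class, and the function names below are assumptions made for illustration only; the HIA model as described here does not prescribe numeric scores.

```python
from dataclasses import dataclass

# The three skill areas named in the HIA description.
SKILL_AREAS = ("motor", "process", "communication")


@dataclass
class Profile:
    """Hypothetical 0-10 ratings, used both for a person's abilities
    and for the demands a task places on each skill area."""
    motor: int
    process: int
    communication: int


def gaps(abilities: Profile, demands: Profile) -> dict:
    """Return the skill areas in which task demands exceed abilities,
    mapped to the size of the shortfall."""
    shortfalls = {}
    for area in SKILL_AREAS:
        shortfall = getattr(demands, area) - getattr(abilities, area)
        if shortfall > 0:
            shortfalls[area] = shortfall
    return shortfalls


def needs_assistive_technology(abilities: Profile, demands: Profile) -> bool:
    """No gap means no assistive technology is required,
    even when a functional limitation exists."""
    return bool(gaps(abilities, demands))


# Illustrative values only: a client with limited motor skill and a task
# (operating a standard television remote) with moderate motor demands.
client = Profile(motor=3, process=8, communication=7)
tv_remote = Profile(motor=6, process=4, communication=1)

print(gaps(client, tv_remote))                        # {'motor': 3}
print(needs_assistive_technology(client, tv_remote))  # True
```

In this sketch, an assistive device would be chosen to cover the reported shortfall (here, motor), which corresponds to bridging the gap in panel D of the figure.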

Although an assistive technology must be able to assist in performing the desired task, it also presents an interface to the individual that must match the needs of the client. A careful match between the abilities of the human in sensory perception, cognitive processing, and motor output and the input and output capabilities of assistive technologies is necessary for assistive technologies to provide effective interventions.

Types of Electronic Enabling Technologies

Even though modern technology can blur some of the distinctions presented in this section, it is useful to consider assistive technologies in categories by the application for which they are used. This chapter deals only with electronic assistive technologies, which in terms of their primary application may be considered to fall into three categories: electronic aids to daily living (EADLs), augmentative and alternative communications (AACs), and general computer applications.

Electronic Aids to Daily Living

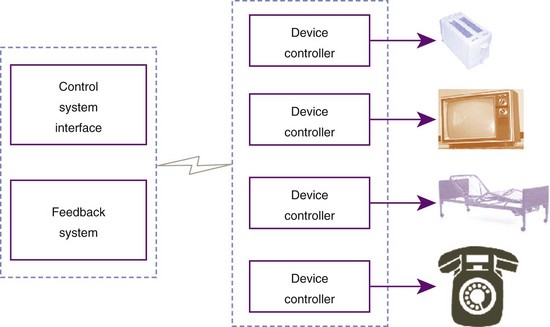

Electronic aids to daily living (EADLs) are devices that can be used to control electrical devices in the client’s environment. Before 1998,31 this category of device was generally known as an environmental control unit, although technically, this terminology should be reserved for furnace thermostats and similar controls. The more generic EADL applies to control of lighting and temperature, in addition to control of radios, televisions, telephones, and other electrical and electronic devices in the environment of the client (Figure 17-2).9,10,13

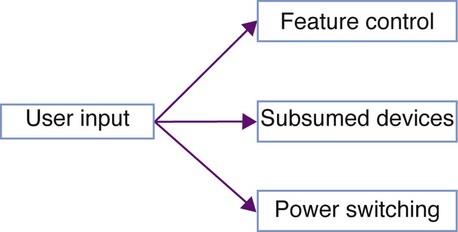

As described by the HIA model, EADLs bridge the gap between the skills and abilities of the user and the demands of the device to be controlled. Within this context, EADL systems may be thought of in terms of the degree and types of control over the environment that they provide to the user. These levels of control are simple power switching, control of device features, and subsumed devices (Figure 17-3).

Power Switching

The simplest EADLs provide simple switching of the electrical supply for devices in a room. Though not typically considered EADLs, the switch adaptations for switch-adapted toys provided to severely disabled children would formally be included in this category of device. Primitive EADL systems consisted of little more than a set of electrical switches and outlets in a box that connected to devices within a room via extension cords (e.g., Ablenet PowerLink 3). These devices are limited in their utility and safety since extension cords pose safety hazards to people in the environment through risks of both falls (tripping over the extension cords) and fires (overheated or worn cords). Because of the limitations posed by extension cords, EADL technology was driven to use remote switching technologies.

Second-generation EADL systems used various technologies to switch power remotely to electrical devices in the environment. These strategies include the use of ultrasonic pulses (e.g., TASH Ultra 4), infrared light (e.g., infrared remote control), and electrical signals propagated through the electrical circuitry of the home (e.g., X-10). All these switching technologies remain in use, and some are used for much more elaborate control systems. Here, however, we are considering only power switching.

The most prevalent power-switching EADL control system is that produced by the X-10 Corporation. The X-10 system uses electrical signals sent over the wiring of a home to control power modules that are plugged into wall sockets in series with the device to be controlled. (In a series connection, the power module is plugged into the wall, and the remotely controlled device is plugged into the power module.) X-10 supports up to 16 channels of control, with as many as 16 modules on each, for a total of up to 256 devices controlled by a single system. The signals used to control X-10 modules will not travel through the home’s power transformer, so in single-family dwellings, there is no risk of interfering with devices in a neighbor’s home.

This is not necessarily true, however, in an apartment setting, where it is possible for two X-10 users to inadvertently control each other’s devices. Early X-10 set-ups typically confined a user’s devices (up to 16) to a single available channel so that such interference would not occur.

In some apartments, the power within a single unit may be on different “phases” of the power supplied to the building. (These phases are required to provide 220-V power for some appliances.) If this is the case, the X-10 signals from a controller plugged into one phase will not cross to the second phase of the installation. A special “phase crossover” is available from X-10 to correct this problem.

X-10 modules, in addition to switching power on and off, can be used via special lighting modules to dim and brighten room lighting. These modules work only with incandescent lighting but add a degree of control beyond simple switching. For permanent installations, the wall switches and receptacles of the home may be replaced with X-10–controlled units. Because X-10 modules do not prevent local control, these receptacles and switches will work like standard units, with the added advantage of remote control.
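The channel-and-module addressing described above can be sketched in a few lines. X-10 labels its 16 channels with the house codes A through P and its modules with unit codes 1 through 16; the function names and the neighbor scenario below are illustrative assumptions, not part of any X-10 software.

```python
import string

# X-10 addressing: 16 house codes (channels) and 16 unit codes (modules).
HOUSE_CODES = string.ascii_uppercase[:16]   # 'A' through 'P'
UNIT_CODES = range(1, 17)                   # 1 through 16


def all_addresses():
    """Enumerate every house/unit combination a single system can address."""
    return [f"{house}{unit}" for house in HOUSE_CODES for unit in UNIT_CODES]


def shared_house_codes(mine, neighbors):
    """Hypothetical helper: house codes used by both households.
    Any shared code means the two users could switch each other's devices."""
    return sorted(set(mine) & set(neighbors))


addresses = all_addresses()
print(len(addresses))                 # 256
print(addresses[0], addresses[-1])    # A1 P16

# Two apartments on the same wiring: the overlap on 'A' is the
# interference case, and moving to an unused code removes it.
print(shared_house_codes(["A"], ["A", "B"]))   # ['A']
print(shared_house_codes(["C"], ["A", "B"]))   # []
```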

When they were introduced in the late 1970s, X-10 modules revolutionized the field of EADLs. Before X-10, remote switching was a difficult and expensive endeavor restricted largely to applications for people with disabilities and to industry. The X-10 system, however, was intended as a convenience for able-bodied people who did not want to walk across a room to turn on a light. Because the target audience was able to perform the task without remote switching, the technology had to be inexpensive enough that it was easier to pay the cost than to get out of a chair. X-10 made it possible for an able-bodied person to remotely control electrical devices for under $100, whereas most disability-related devices cost several thousand dollars. Interestingly, the almost universal adoption of X-10 protocols by disability-related EADLs did not result in sudden price drops in the disability field, so many clinicians continue to adapt mass-market devices for individuals with disabilities.

Feature Control

As electronic systems became more pervasive in the home, simple switching of lights and coffee pots failed to meet the needs of individuals with a disability who wanted to control the immediate environment. With wall current control, a person with a disability might be able to turn radios and televisions on and off but would have no control beyond that. A person with a disability might want to be able to surf cable channels as much as an able-bodied person with a television remote control. When advertisements are blaring from the speakers, a person with a disability might want to be able to turn down the sound or tune to another radio station. Nearly all home electronic devices are now delivered with a remote control, generally using infrared signals. Most of these remote controls are not usable by a person with a disability, however, because of dependence on fine motor control and good sensory discrimination.

EADL systems designed to provide access to the home environment of a person with a disability should provide more than on/off control of home electronics. They should also provide control of the features of home electronic devices. Because of this need, EADL systems frequently have hybrid capabilities. They will incorporate a means of directly switching power to remote devices, often using X-10 technology. This allows control of devices such as lights, fans, and coffee pots, as well as electrical door openers and other specialty devices.37 They will also typically incorporate some form of infrared remote control, which will allow them to mimic the signals of standard remote control devices. This control will be provided either by programming in the standard sequences for all commercially available DVD players, televisions, and satellite decoders or by teaching systems, in which the EADL learns the codes beamed at it by the conventional remote. The advantage of this latter approach is that it can learn any codes, even those that have not yet been invented. The disadvantage is that the controls must be taught, which requires more set-up and configuration time for the user and caregivers. Because the infrared codes have been standardized, it is entirely possible for an EADL to include the codes for the vast majority of home electronics. As with “universal remote controls” for able-bodied users, the set-up process then requires only that the individual enter simple codes for each device to be controlled.
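The two approaches to infrared feature control, a preprogrammed code library and a teaching (learning) mode, can be contrasted in a toy model. Everything in the sketch is hypothetical: the class name, the set-up code "0042", and the signal strings are placeholders rather than real manufacturer codes or the interface of any actual EADL.

```python
class InfraredEadl:
    """Toy model of an EADL that can use preprogrammed or learned codes."""

    def __init__(self, code_library):
        # code_library: set-up code -> {command name: signal}, shipped with the EADL.
        self._library = code_library
        self._assigned = {}   # device name -> set-up code entered during set-up
        self._learned = {}    # (device name, command) -> signal captured from a remote

    def set_up(self, device, setup_code):
        """Preprogrammed approach: the user only enters a simple code."""
        if setup_code not in self._library:
            raise KeyError(f"no preprogrammed codes for {setup_code!r}")
        self._assigned[device] = setup_code

    def learn(self, device, command, captured_signal):
        """Teaching approach: store whatever the conventional remote beamed."""
        self._learned[(device, command)] = captured_signal

    def signal_for(self, device, command):
        """Prefer a learned signal, then fall back to the preprogrammed library."""
        if (device, command) in self._learned:
            return self._learned[(device, command)]
        setup_code = self._assigned.get(device)
        if setup_code is not None and command in self._library[setup_code]:
            return self._library[setup_code][command]
        raise LookupError(f"no signal known for {device!r} / {command!r}")


# Made-up library entry and signals, for illustration only.
LIBRARY = {"0042": {"power": "sig-tv-power", "volume_down": "sig-tv-vol-down"}}

eadl = InfraredEadl(LIBRARY)
eadl.set_up("television", "0042")                           # quick: one code
eadl.learn("new media player", "play", "sig-captured-play")  # slower: taught per command

print(eadl.signal_for("television", "volume_down"))   # sig-tv-vol-down
print(eadl.signal_for("new media player", "play"))    # sig-captured-play
```

The trade-off shows up in the set-up calls: the library route needs one entry per device, whereas the teaching route needs one `learn` call per command but works for devices the library has never heard of.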

Infrared remote control, as adopted by most entertainment systems controllers, is limited to approximate line-of-sight control. Unless the controller is aimed in the general direction of the device to be controlled (most have wide dispersion patterns), the signals will not be received. This means that an EADL cannot directly control, via infrared, any device not located in the same room. However, infrared repeaters such as the X-10 Powermid can overcome this limitation by using radio signals to send the control signals received in one room to a transmitter in the room with the device to be controlled. With a collection of repeaters, a person would be able to control any infrared device in the home from anywhere else in the home.

One problem that is shared by EADL users and able-bodied consumers is the proliferation of remote control devices. Many homes are now plagued with a remote control for the television, the cable/satellite receiver, the DVD player/VHS recorder (either one or two devices), the home stereo/multimedia center, and other devices, all in the same room. Universal remote controls can be switched from controlling one device to another but are often cumbersome to operate. Some hope is on the horizon for simpler control of home audiovisual devices. In November 1999, a consortium of eight home electronics manufacturers released a set of guidelines for home electronics called HAVi. The HAVi specification would allow compliant home electronics to communicate so that any HAVi device can control the operation of all of the HAVi devices sharing the standard. A single remote control could control all of the audiovisual devices in the home through a single interface. (As of summer 2010, however, the HAVi site lists six products, from two manufacturers, that actually use the HAVi standard, and none appears to be in production.)

The Infrared Data Association (IrDA) is performing similar specifications work focusing purely on infrared controls. The IrDA standard will allow an infrared remote control to control features of computers, home audiovisual equipment, and appliances with a single standard protocol. In addition to allowing a single remote control to control a wide range of devices, IrDA standards will allow other IrDA devices, such as personal digital assistants (PDAs), personal computers, and augmentative communications systems, to control home electronics. Having a single standard for home electronics will allow much easier design of EADL systems for people with disabilities.

A more recent standard, V2,23 offers a much greater level of control. If fully implemented, V2 will allow a single EADL device to control all of the features of all electronic devices in its vicinity, such as the volume of the radio, the setting of the thermostat in the hall, or the “push to walk” button on the crosswalk.

One interesting aspect of feature control by EADLs is the relationship between EADLs and computers. Some EADL systems, such as the Quartet Simplicity, include features to allow the user to control a personal computer through the EADL. In general, this is little more than a pass-through of the control system of the EADL to a computer access system. Other EADLs, such as the PROXi, were designed to accept control input from a personal computer. The goal in both cases is to use the same input method to control a personal computer as to control the EADL. In general, the control demands for an EADL system are much less stringent than those for a computer. An input method that is adequate for EADL control may be very tedious for general computer controls. On the other hand, a system that allows fluid control of a computer will not be strained by the need to also control an EADL. The “proper” source of control will probably have to be decided on a case-by-case basis, but the issue will appear again in dealing with augmentative communication systems.

Subsumed Devices

Finally, modern EADLs frequently incorporate some common devices that are more easily replicated than controlled remotely. Some devices, such as the telephone, are so pervasive that an EADL system can assume that a telephone will be required. Incorporating telephone electronics into the EADL is actually less expensive, because of the standardization of telephone electronics, than inventing special systems to control a conventional telephone. Other systems designed for individuals with disabilities are so difficult to control remotely that the EADL must generate an entire control system. Hospital bed controls, for example, have no provisions for remote control, but they should be usable by a person with a disability.

Many EADL systems include a speakerphone, which will allow the user to originate and answer telephone calls by using the electronics of the EADL as the telephone. Because of the existing standards, these systems are generally analog, single-line telephones, electronically similar to those found in the typical home. Many business settings use multiline sets, which are not compatible with home telephones. Some businesses are converting to digital interchanges or “Voice over IP” (VoIP), which are also not compatible with conventional telephones. As a result, the telephone built into a standard EADL may not meet the needs of a disabled client in an office setting. Before recommending an EADL as an access solution for a client’s office, therapists should check that the system is compatible with the telecommunications systems in place in that office.

Because the target consumer for an EADL will often have severe restrictions in mobility, the manufacturers of many of these systems believe that a significant portion of the customer’s day will be spent in bed and thus include some sort of control system for standard hospital beds. These systems commonly allow the user to adjust head and foot height independently, thereby extending the time that the individual can be independent of assistance for positioning. As with telephone systems, different brands of hospital beds use different styles of control. It is essential that the clinician match the controls provided by the EADL with the input required by the bed to be controlled.

Controlling EADLs

EADL systems are designed to allow individuals with limited physical capability to control devices in the immediate environment. Consequently, the method used to control the EADL must be within the capability of the client. Since these controls share many features in common with other forms of electronic enablers, the control strategies will be discussed below.

Augmentative and Alternative Communications

The phrase augmentative and alternative communications is used to describe systems that supplement (augment) or replace (alternative) communication by voice or gestures between people.30 Formally, AACs incorporate all assisted communication, including tools such as pencils and typewriters as used to communicate either over time (as in leaving a message for someone who will arrive at a location after you leave) or over distance (sending a letter to Aunt May). However, as used in assistive technology, AAC is considered the use of technology to allow communication in ways that an able-bodied individual would be able to accomplish without assistance. Thus, using a pencil to write a letter to Aunt May would not be an example of an AAC for a person who is unable to speak since an able-bodied correspondent would be using the same technology (pencil and paper) for the same purpose (social communication). However, when a person who is nonvocal uses the same pencil to explain to the doctor that she has sharp pains in her right leg, it becomes an AAC device since an able-bodied person would communicate this by voice.

There are two very different reasons why an individual might have a communication disorder. These can be grouped as language disorders and speech disorders. Following a brain injury that affects Wernicke’s area, an individual may exhibit a language disorder. An individual with a language disorder has difficulty understanding and/or formulating messages (receptive or expressive aphasia), regardless of the means of production. Although there are degrees of aphasia from mild to severe/profound, a person with a language disorder, in most cases, will not benefit from AAC. In contrast, with a brain injury (from birth, trauma, or disease) that affects the speech motor cortex (Broca’s area), an individual may be perfectly able to understand and formulate messages but not be able to speak intelligibly because of difficulty controlling the oral musculature. Such a person has no difficulty with language composition, only with language transmission. (In cases of apraxia, the motor control of writing can be as impaired as that of speaking, without affecting language.) In other cases, the physical structures used for speech generation (the jaw, tongue, larynx, etc.) may be damaged by disease or trauma. Although the person has intact neural control, the muscles to be controlled may not function properly. This person may well benefit from an AAC device.

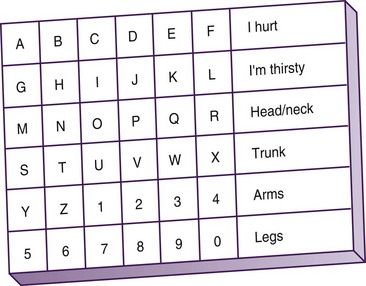

AAC devices range from extremely low technology to extremely high technology. In hospital intensive care units, low-tech communication boards can allow a person maintained on a respirator to communicate basic needs (Figure 17-4). A low-tech communication board can allow a client to deliver basic messages or spell out more involved messages in a fashion that can be learned quickly. For a person with only a yes/no response, the communication partner can indicate the rows of the aid one at a time and ask whether the desired letter or word is in the row. When the correct row is selected, the partner can move across that row until the communicator indicates the correct letter. This type of communication is inexpensive and quick to teach but very slow to use. In settings in which there are limited communication needs, it is adequate but will not serve for long-term or fluent communication needs.
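The row-then-letter procedure described above, and the reason it is slow, can be made concrete by counting the yes/no questions the partner must ask. The alphabetical board layout and the function names are assumptions for illustration; real boards are often arranged differently (for example, by letter frequency).

```python
# Hypothetical board layout: letters in alphabetical order, six per row.
BOARD = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ",
]


def questions_to_select(target, board=BOARD):
    """Count yes/no questions needed to reach one letter: one question per
    row offered ("Is it in this row?"), then one per letter offered within
    the chosen row ("Is it this letter?")."""
    for row_index, row in enumerate(board):
        if target in row:
            column_index = row.index(target)
            return (row_index + 1) + (column_index + 1)
    raise ValueError(f"{target!r} is not on the board")


def questions_for_message(message, board=BOARD):
    """Total questions needed to spell a message, ignoring spaces and punctuation."""
    return sum(
        questions_to_select(letter, board)
        for letter in message.upper()
        if letter.isalpha()
    )


print(questions_to_select("A"))       # 2
print(questions_for_message("pain"))  # 19
```

Even a four-letter word takes 19 question-and-answer exchanges on this layout, which is consistent with the observation that the method is adequate for limited needs but not for fluent communication.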

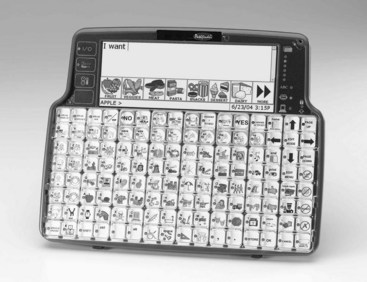

To meet the communication needs of a person who will be nonvocal over a longer period, electronic AAC devices are frequently recommended by clinicians (Figure 17-5).

FIGURE 17-5 High-tech AAC system (Pathfinder Plus). (Courtesy Prentke Romich Company, Wooster, Ohio.)

Light29 described four types of communication that we participate in: (1) expression of needs and wants, (2) transfer of information, (3) social closeness, and (4) social etiquette. In the intensive care setting noted earlier, most communication will occur at the first two levels. The communicator will want to express the basic needs of hunger, thirst, and relief of pain. He or she will wish to communicate with the doctor providing care, convey information about where it hurts, and determine whether treatment seems to be working. In work or school settings, communication is often intended to convey information.

When participating in a classroom discussion, a student may want to be able to describe the troop movements in the Battle of Gettysburg, for example. In math class, the student may need to present a proof involving oblique angles and parallel lines. Such exchange of information may be spontaneous, as when called on in class, or may be planned, as in a formal presentation.

In a social or interpersonal setting, communication has a markedly different flavor. Teenagers may spend hours on the telephone with very little “information” exchanged but communicate shared feelings and concerns. At a faculty tea, much of the communication is very formulaic, such as “How are you today?” Such queries are not intended as questions regarding medical status, but simply recognition of your presence and an indication of wishing you well. (Historically, this query was originally expressed as “I hope I see you well today.” This phrasing more accurately expresses the sentiment but is somewhat longer.) The planning and fluency of communication in each domain are substantially different, and the demands on AAC systems in each type of communication are likewise very different.

An AAC system used solely for expression of needs and wants can be fairly basic. The vocabulary used in this type of communication is limited, and because the expressions tend to be fairly short, the communication rate is not of paramount importance. In some cases the entire communication system may be an alerting buzzer to summon a caregiver because the individual is in need. Low-tech communication systems such as that just described can meet basic communication needs for individuals whose physical skills are limited to eye blinks or directed eye movement.

Low-tech devices may enable expression of more complex ideas. For example, a therapist became aware that his client with aphasia wished to communicate something. Since no AAC was available to this client, the therapist began attempting to guess what he wanted to communicate. After exhausting basic needs (“Do you need a drink? Do you need to use the bathroom?”), the therapist was floundering. Over the next 20 minutes, the client, in response to a conversation between a therapist and client at another treatment table, was able to communicate that his ears were the same shape as Cary Grant’s! Even a basic communication aid, such as the intensive care unit aid described earlier, would have allowed much faster transfer of this information.

Most development in AAC seems to be focused on the level of communicating basic needs and transfer of information. Information transfer presents some of the most difficult technological problems because the content of information to be communicated cannot be predicted. The designer of an AAC device would probably not be able to anticipate the need to discuss the shape of Cary Grant’s ears during the selection of vocabulary. To meet such needs, AAC devices must have the ability to generate any concept possible in the language being used. Making these concepts available for fluent communication is the ongoing challenge of AAC development.

Social communication and social etiquette present significant challenges for users of AAC devices. Although the information content of these messages tends to be low, since the communication is based on convention, the dialog should be both varied and spontaneous. AAC systems, such as the Dynavox, have provisions for preprogrammed messages that can be retrieved for social conversation, but providing both fluency and variability in social discourse through AAC remains a challenge. Current devices allow somewhat effective communication of wants. They are not nearly as effective in discussing dreams.

Parts of AAC Systems

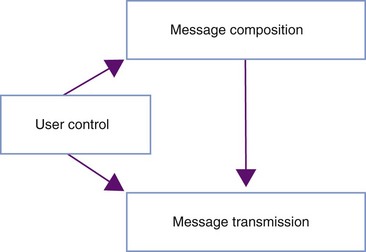

In general, electronic AAC systems have three components (Figure 17-6): a user control system, which allows the user to generate messages and control the device; a message composition system, which allows the user to construct messages to be communicated to others; and a message transmission system, which allows the communication partner to receive the message from the user. The issues of user control of AAC devices are essentially the same as those for other electronic assistive technologies and will be discussed with access systems in general.

Message Composition: Most of the time, able-bodied people, as well as individuals with disabilities, plan their messages before speaking. (Many of us can remember the “taste of foot” when we have neglected this process.) An AAC device should allow the user to construct, preview, and edit communication utterances before they become apparent to the communication partner. This gives the user of an AAC device the ability to think before speaking. It also allows compensation for the difference in rate between message composition via AAC and communication between able-bodied individuals.

Able-bodied individuals typically speak between 150 and 175 words per minute.35 Augmentative communication rates are more typically on the order of 10 to 15 words per minute, thus resulting in a severe disparity between the rate of communication construction and the expected rate of reception. Although input techniques (discussed later) offer some improvement in message construction rates, the rate of message assembly achieved with AAC is such that many listeners will lose interest before an utterance can be delivered. If words are spaced too far apart, an able-bodied listener may not even be able to assemble them into a coherent message!
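The size of this disparity is easier to appreciate with a short calculation. The sketch below uses the rates cited above; the 12-word sentence length is an assumption chosen only for illustration.

```python
def seconds_to_deliver(word_count: int, words_per_minute: float) -> float:
    """Time in seconds needed to produce word_count words at the given rate."""
    return word_count * 60.0 / words_per_minute


SENTENCE_WORDS = 12  # assumed sentence length, for illustration only

# Rates taken from the text: 150-175 wpm for speech, 10-15 wpm for AAC.
speech_fast = seconds_to_deliver(SENTENCE_WORDS, 175)
speech_slow = seconds_to_deliver(SENTENCE_WORDS, 150)
aac_fast = seconds_to_deliver(SENTENCE_WORDS, 15)
aac_slow = seconds_to_deliver(SENTENCE_WORDS, 10)

print(f"speech: {speech_fast:.1f} to {speech_slow:.1f} s")
print(f"AAC:    {aac_fast:.0f} to {aac_slow:.0f} s")
```

Under these assumptions, a sentence that is spoken in under 5 seconds takes roughly 48 to 72 seconds to assemble with AAC, which is at least ten times longer.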

The message construction area of an AAC device allows the individual to assemble a complete thought and then transmit it as a unit. A typical AAC device will include a display in which messages can be viewed before transmission. This area allows the communicator to review and edit the message that is being composed prior to transmitting it to the communication partner. This has two beneficial effects: the communicator can select words with care before communicating them, and it relieves the communication partner from the need for constant vigilance in conversation. The Atkinson-Shiffrin model of human memory suggests that human sensory memory will retain spoken information for only 4 or 5 seconds before it fades.1 Communication between able-bodied people generally takes place quickly enough for a complete sentence to be held in sensory memory at once. When an able-bodied person is communicating with a person using an AAC device, the time between utterances may be too long to remain in memory. The able-bodied person loses focus and may not be able to maintain attention to the conversation. If messages come as units, the communication partner can respond to a query and then busy himself/herself in another activity while the communicator composes the next message. This is not unlike having a conversation via e-mail.

Message Transmission: When the communicator has finished composing a message, it can be transmitted to the communication partner. The means of transmission varies with the device and the setting. Some AAC devices use exclusively printed transmission. One of the first true AAC devices, the Canon Communicator, was a small box that included an alphanumeric keyboard. The box was strapped to the user’s wrist, and the user tapped out messages that were printed on paper tape (message composition). When the message was completed, it was removed from the communicator and passed to the communication partner (message transmission).20 On devices such as the Zygo LightWRITER, the message may be displayed on an electronic display that is made visible to the communication partner. Other systems use auditory communication in which the message is spoken out loud via speech synthesis. There is a tendency to think of voice output as more appropriate than text since able-bodied people generally communicate by voice.20 In activities such as classroom discussion, voice communication may be the most appropriate method of communication. In other settings, such as a busy sidewalk or a noisy shop, voice output may be drowned out or unintelligible, and printed output may result in more effective communication. In a setting where verbalization may disturb others, printed output may, again, be the transmission method of choice.

In settings in which voicing is the preferred method of communication, voice quality must be considered. Early AAC devices used voices that to novice listeners were only slightly more understandable than the communicator’s unassisted voice. As speech synthesis technology has improved, AAC voices have generally become more intelligible. The high-quality voices of modern speech synthesizers have vastly improved intelligibility but continue to provide only a narrow range of variation and vocal expression. It is a mark of the improved state of speech synthesis that AAC users can now discuss who they would like to sound like rather than what they would like to sound like.

Communication Structure

Communications to be augmented may be categorized in terms of their intent, as well as in terms of their content, as proposed by Light.29 At the top level, communication may be divided, with the conditions discussed earlier, into primarily verbal and primarily written. In these cases the categorization would be based on the mode of communication that would typically be used by an able-bodied person, not on the form that is being used by the augmented communicator.

Verbal Communications: One category of verbal communication is conversation. Conversation implies a two-way exchange of information. This includes face-to-face communication with a friend, oral presentation when question-and-answer sessions are included, small group discussions, and a conversation over a telephone. In all of these cases, rapid communication is required and the user is expected to compose and respond immediately. If the composition rate is too slow, communication will break down, and the conversation will cease. The augmented communicator may use “telegraphic” speech styles, but this results in a primitive style of language (“want food”) that may be taken to indicate poor cognition.

Another form of verbal communication is an oral presentation in which no question-and-answer component is included or cases in which such a component is considered separately. In these cases the augmented communicator has ample preparation time to generate communications before delivery, and an entire presentation may be stored in a communication device before the time of delivery. In such cases, even though the time taken to prepare the message may be long, delivery of the message is not inhibited as long as the device has adequate storage for the entire presentation. Stephen Hawking, through the use of an AAC device, is able to orally present formal papers at conferences just as well as his colleagues. His ability to respond to questions, however, is severely constrained. Nonetheless, because he is Stephen Hawking, colleagues will wait as long as necessary for his answers to questions. Less eminent augmentative communicators may find this more constraining.

Graphic Communications: The category of graphic communications includes all forms of communication that are mediated by graphic symbols. This includes writing using paper and pencil, typewriter, computer/word processor, calculator, or a drawing program. In this form of communication the expectation is that there is a difference between the time to create the message and the time to receive it. Within the realm of graphic communications there is a wide range of conditions and intents of communication that may influence the devices selected for the user.

Historically, graphic communication did not allow “conversation” in the traditional sense, other than the note passing that friends sometimes engage in when not attending to a presentation. Today, however, technologies such as “instant messaging” allow real-time conversations via written (typed) communication. The same need for fluency and immediacy of communication applies as for verbal conversations.

One specialized type of graphic communication is note taking. Note taking is a method of recording information as it is being transmitted from a speaker so that the listener can recall it later. The intended recipient of this form of communication is the person who is recording the notes. Because it is a violation of social convention to ask the speaker to speak more slowly to accommodate the note taker, a note-taking system must allow rapid recording of information. Note taking differs from merely recording a presentation in that it is a cognitively active process. Because spoken language is typically highly redundant, the note taker must attend to the speaker and listen for ideas that should be recalled later. Only key points need be recorded. However, since the listener is also the intended recipient, notes can be very cryptic and may be meaningless to anyone other than the note taker. The special case in which notes are taken for a student with a disability changes the process to one of recording a transcript rather than simply taking notes, which is a different type of communication.

Messaging is a form of graphic communication that shares many characteristics with note taking. Although the intended recipient is another person, shared abbreviations and nongrammatic language are common in messaging. The language that is common in adolescent e-mail is very cryptic and only barely recognizable as English (Figure 17-7), but it is a form of graphic messaging that communicates to its intended audience. In general, messaging does not demand the speed of input of note taking since encoding and receiving are not linked in time. However, instant messaging, which has become nearly ubiquitous, blurs the lines between messaging and conversation. The expectation is generally of an immediate response, as in conversation. Social networking tools such as Twitter apply a different set of constraints. Because a Twitter feed may be “followed” by many people, obscure abbreviations cannot be used. However, because a single tweet can be no more than 140 characters long, messages must balance between being cryptic and informative.

The most language-intensive form of graphic communication is formal writing. This includes writing essays for school, writing for publication, and writing business letters or contracts. Formal writing differs from the previously discussed forms of graphic communication in that it must follow the rules of written grammar. It is expected that the communicator will spend significant time and effort in preparing a formal written document, and the abbreviations used in note taking and messaging are not allowed.

The most difficult form of formal communication may be mathematic notation. The early target of AAC devices was narrative text as used in messaging and written prose. Such language is commonly linear and can be composed in the same order that it is to be read. Mathematic expressions, on the other hand, may be two-dimensional and nonlinear. Simple arithmetic, such as 2 + 2 = 4, is not excessively difficult. However, algebraic expressions such as

can be much more difficult for an AAC device to create. A relatively basic calculus equation such as

can be an impossible barrier to write, much less solve for the user of an AAC device. Although current technology allows prose construction with some facility, an AAC user will have marked difficulty with higher mathematics. Because similar issues are experienced on the World Wide Web, new communication protocols such as MathML17 are being developed that may, if incorporated into augmentative communication devices, provide improved access to higher mathematics for AAC users.

General Computer Access

Computers have an interesting double role in the field of assistive technology. In some cases, using the computer is the intended activity. “Browsing” on the World Wide Web, for example, does not imply looking for specific information. It is a process of following links of immediate interest to see what is “out there.” The “Stumble-Upon” extension for the Firefox browser, for example, takes the browser to a random page that fits the interests declared by the user. Hobby computer programmers may use the computer simply to find what they can make it do. Using a computer can provide an individual with a disability a degree of control that is missing in much of the rest of life. In other cases, the computer is a means of accessing activities that would not be possible without it, so the computer can be considered in the same category as any other assistive technology. A student with a high-level spinal cord injury may need a computer to take notes in class. A business person with mild cognitive limitations may use computer technologies to organize and record information. A person with severe limitation in mobility may use social networks to remain in contact with friends and family. In these cases and many others, the computer is the path, not the destination.

As with the television remote control, the status of the computer (vis-à-vis assistive technology) depends on the status of the user, not on the type of computer. If a person with a disability is using a computer in the same way and for the same purposes as an able-bodied person would, it is not assistive technology. When a person with paraplegia accesses an online database of movies being shown at local theaters, this is not an assistive technology application because the same information is available without the computer. However, when a blind individual accesses the same database using the installed screen reader, it is an assistive technology since the printed schedule is not accessible. For a person with a print impairment, the computer can provide access to printed information either through electronic documents or through optical character recognition of printed documents, which can convert the printed page into an electronic document. Once a document is stored electronically, it can be presented as large text for a person with limited visual acuity or be read aloud for a person who is blind or profoundly learning disabled. Computers can allow the manipulation of “virtual objects” to teach mathematic concepts and constancy of form and develop spatial relationship skills that are commonly learned by manipulation of physical objects.22 Smart phones and PDAs may be useful for a busy executive but may be the only means available for a person with attention-deficit/hyperactivity disorder (ADHD) to get to meetings on time.39 For the executive, they are conveniences, but for the person with ADHD, they can be assistive technologies.

Individuals using computers can locate, organize, and present information at levels of complexity that are not possible without electronic aids. Through the emerging area of cognitive prosthetics, computers can be used to augment attention and thinking skills in people with cognitive limitations. Computer-based biofeedback can monitor and enhance attention to task. Research in individuals with temporal processing deficits has led to the development of computer-modified speech programs that can be used to enhance language learning and temporal processing skills.33,38

Beyond such rehabilitative applications, the performance-enhancing characteristics of the conventional computer can allow a person with physical or performance limitations to participate in activities that would be too demanding without the assistance of the computer. An able-bodied person would find having to retype a document to accommodate editing changes frustrating and annoying. A person with a disability may lack the physical stamina to complete the task without the cut-and-paste abilities of the computer. For the able-bodied person, the computer is a convenience. For the person with a disability, it is an assistive technology because the task is impossible without it. The applications of the computer for a person with a disability include all of the applications of the computer for an able-bodied person.

Control Technologies

All of these electronic enabling technologies depend on the ability of the individual to control them. Although the functions of the various devices differ, the control strategies for all of them share common characteristics. Since the majority of electronic devices were designed for use by able-bodied persons, the controls of assistive technologies may be categorized in the ways that they are adapted from the standard controls. Electronic control may be divided into three broad categories: (1) input adaptations, (2) performance enhancements, and (3) output adaptations.

Input to Assistive Technologies

Although there is a wide range of input strategies available to control electronic enablers, they can be more easily understood by considering them in subcategories. Different authors have created different taxonomies for the categorization of input strategies, and some techniques are classified differently in these taxonomies. The categorization presented here should not be considered as being uniquely correct, but merely as a convenience. Input strategies may be classified as those using physical keyboards, those using virtual keyboards, and those using scanning techniques.

Physical Keyboards

Physical keyboards generally supply an array of switches, with each switch having a unique function. On more complex keyboards, modifier keys may change the base function of a key, usually to a related function.2 Physical keyboards are found in a wide range of electronic technologies, including typewriters/computers, calculators, telephones, and microwave ovens. In these applications, sequences of keys are used to generate meaningful units such as words, checkbook balances, telephone numbers, or the cooking time for a baked potato. Other keyboards may have immediate action when a key is pressed. For example, the television remote control has keys that switch power or raise volume when pressed. Other keys on the remote control may, like other keyboards, be used in combinations. Switching to channel 152 requires sequential activation of the “1,” “5,” and “2” keys.

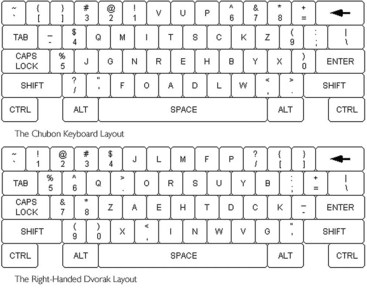

Physical keyboards can be adapted to the needs of an individual with a disability in a number of ways (Figure 17-8). Most alphanumeric keyboards, for example, are arranged in the pattern of the conventional typewriter. This pattern was intentionally designed, for reasons related to mechanical limitations, to slow the user down. Most individuals with disabilities do not require artificial restraints to slow them down, so this pattern of keys is seldom optimal for assistive technologies. Alternative keyboard patterns include the Dvorak Two Handed, Dvorak One Handed, and Chubon (Figure 17-9).2 These patterns offer improvement in the efficiency of typing that may allow a person with a disability to perform for functional periods of time.4

The standard keyboard is designed to respond immediately when a key is pressed and, in the case of computer keyboards, to repeat when held depressed. This behavior benefits individuals with rapid fine motor control but penalizes individuals with delayed motor response. Fortunately, on many devices the response of the keyboard can be modified. Delayed acceptance provides a pause between the instant that a key is pressed and the instant that the key press takes effect. Releasing the key during this pause will prevent the key from taking effect. This adaptation, if carefully calibrated, can allow a person to type with fewer mistakes, thereby resulting in higher accuracy and sometimes higher productivity. If not calibrated carefully, it will slow the typist without advantage.
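The logic of delayed acceptance can be sketched in a few lines. This is a minimal illustration, not the implementation used by any particular device: the event format, the function name `accepted_keys`, and the 0.30-second delay are all assumptions.

```python
from typing import List, Tuple

# Hypothetical event format: (key, press_time_s, release_time_s)
KeyEvent = Tuple[str, float, float]


def accepted_keys(events: List[KeyEvent], acceptance_delay_s: float) -> List[str]:
    """Return the keys that were held at least as long as the acceptance delay.

    A key released before the delay elapses is treated as accidental
    and is ignored.
    """
    accepted = []
    for key, pressed_at, released_at in events:
        held_for = released_at - pressed_at
        if held_for >= acceptance_delay_s:
            accepted.append(key)
    return accepted


# "j" is brushed for only 0.05 s on the way from "h" to "i".
events = [("h", 0.00, 0.40), ("j", 0.45, 0.50), ("i", 1.00, 1.35)]
print(accepted_keys(events, acceptance_delay_s=0.30))
```

The calibration trade-off described above is visible in the single parameter: a delay that is too short lets accidental presses through, and one that is too long rejects intended presses and slows the typist.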

The scale of the standard keyboard provides a balance between the fine motor control and range of motion (ROM) of an able-bodied individual. The “optimum keyboard,” modeled after the IBM Selectric typewriters of the 1960s, was scaled to match the hands of women typists. However, a client with limitations in either ROM or motor control may find the conventional keyboard difficult to use. If the client has limitations in motor control, a keyboard with larger keys and/or additional space between the keys may allow independent control of the device. This adaptation may also assist people with a visual limitation. This applies to computer keyboards, but it also applies to such suboptimal keyboards as those of television remote controls, which are available in large scale from a number of vendors. However, to provide an equivalent number of options with larger keys increases the size of the keyboard, which may make it unusable for a person with limitations in ROM.

To accommodate limitations in ROM, the keyboard controls can be reduced in size. Smaller controls, placed closer together, will allow selection of the full range of options with less demand for joint movement. However, the smaller controls will be more difficult to target for people with limited motor control. A mini-keyboard is usable only by a person with good fine motor control and may be the only scale of keyboard usable by a person with limited ROM. However, as many users of “netbooks” have discovered, smaller keyboards are often not as usable for able-bodied typists.

To accommodate both limited ROM and limited fine motor control, a keyboard can be designed with fewer options. Many augmentative communication devices can be configured with 4, 8, 32, or 64 keys on a keyboard of a single size. Modifier or “paging” keys can allow access to the full range of options for the keyboard, but with an attendant reduction in efficiency. In this approach the person uses one or more keys of the keyboard to shift the meanings of all of the other keys on the keyboard. Unless this approach is combined with a dynamic display, the user must remember the meanings of the keys.
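A paging keyboard can be pictured as a set of lookup tables, with one key on each page reserved for switching tables. The sketch below assumes a four-key keyboard; the vocabulary, the page names, and the `PAGE:` prefix are hypothetical and chosen only to show the mechanism.

```python
# Each page assigns a meaning to the same four physical keys (indexes 0-3).
# A meaning that starts with "PAGE:" switches pages instead of adding a word.
PAGES = {
    "main": ["yes", "no", "help", "PAGE:food"],
    "food": ["drink", "snack", "meal", "PAGE:main"],
}


def run_keys(presses, start_page="main"):
    """Interpret a sequence of key indexes; return (message words, final page)."""
    page = start_page
    message = []
    for index in presses:
        meaning = PAGES[page][index]
        if meaning.startswith("PAGE:"):
            page = meaning.split(":", 1)[1]
        else:
            message.append(meaning)
    return message, page


# Four presses are needed to say two words, because two presses change pages.
print(run_keys([3, 0, 3, 1]))
```

The example shows the reduction in efficiency noted above: half of the key presses carry no message content. It also shows why a static keyboard taxes memory, since key 0 means "yes" on one page and "drink" on the other.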

Virtual Input Techniques

When an individual lacks the motor control to use an array of physical switches, a virtual keyboard may be used in its place. Virtual keyboards provide the functionality of a physical keyboard system by allowing the user to directly select from the array of options through a unique action, but they may not have physical switches for the actions to be performed. Instead, the meaning of the “selection” action may be encoded spatially, via pointing, or temporally, by sequenced actions.

Pointing Systems

Pointing systems are analogous to using a physical keyboard with a physical pointer, such as a head or mouth stick.15 In these systems a graphic representation of a keyboard is presented to the user, and the user is able to make selections from it by pointing to a region of the graphic keyboard and performing a selection action. The selection action is typically either the operation of a single switch (e.g., clicking the mouse) or the act of holding the pointer steady for a certain period.
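Selection by holding the pointer steady is often called dwell selection. A minimal sketch follows, assuming the pointing system reports periodic samples of which on-screen key lies under the pointer; the sample format and the 0.5-second dwell time are illustrative assumptions.

```python
def dwell_selections(samples, dwell_time_s):
    """Return the keys selected by dwelling.

    samples: list of (timestamp_s, target), where target is the key under
    the pointer at that moment, or None when the pointer is over no key.
    A key is selected once per visit, when the pointer has stayed on it
    for at least dwell_time_s.
    """
    selections = []
    current = None      # key the pointer is currently resting on
    entered_at = 0.0    # time the pointer arrived on that key
    fired = False       # whether this visit has already produced a selection
    for timestamp, target in samples:
        if target != current:
            current = target
            entered_at = timestamp
            fired = False
        elif target is not None and not fired and timestamp - entered_at >= dwell_time_s:
            selections.append(target)
            fired = True
    return selections


samples = [
    (0.0, "A"), (0.2, "A"),                          # passes over A briefly
    (0.4, "B"), (0.6, "B"), (0.8, "B"), (1.0, "B"),  # rests on B
    (1.2, "B"), (1.4, None), (1.6, "C"),
]
print(dwell_selections(samples, dwell_time_s=0.5))
```

Only "B" is selected here, because the pointer merely crossed "A" and had just arrived at "C". The `fired` flag prevents a key from repeating while the pointer continues to rest on it.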

The pointer used for these systems may be a beam of light projected by the user, the reflection of a light source from the user, or the position of a “solid-state gyroscope” worn by the user.6 Changing the orientation or position of the sensor moves an indicator over the graphic image of the keyboard to inform the user of the current meaning of the selection action.

Several augmentative communication systems now use a dynamic display in which the graphic keyboard can be changed as the user makes selections so that the meaning of each location of the keyboard changes as a message is composed. Dynamic displays free the user from having to either remember the current meaning of a key or decode a key with multiple images on it.

Pointing systems behave very much like physical keyboard systems, and many of the considerations of physical keyboards apply. The key size must balance the demands for fine motor control with the ROM available to the client. The keyboard pattern should be selected to enhance, not hinder function. The selection technique should facilitate intentional selections while minimizing accidental actions.

The ultimate pointing system to date is eye-tracking input. Eye-tracking systems are generally based on reflected infrared light from the surface of the eye. In general, these systems require extreme stability of the physical location of the eye and the camera observing the reflections.28 Traditionally, this has meant that the user must hold his/her head extremely still for the system to be usable. Because of cost considerations, this method of input has not been a reasonable option for a person who can produce head movements, so the requirement for head stabilization has not been a major issue. However, as eye gaze moves into mainstream technologies, there may be changes in these requirements.

The first mainstream products that incorporated eye tracking were hand-held camcorders, which had eye tracking built into the viewfinder. By tracking the portion of the screen being focused on, this eye tracking allowed the camera to focus on the part of the display that was of special interest to the person taking the video.

The demonstrated utility of these products has led to two divergent approaches to eye tracking in mainstream products. In the first approach, personal computer developers are exploring eye tracking as a means of detecting the action that the user would like to perform.34 This tracking will allow the computer to anticipate the needs of the user. To work as a mainstream product, the system must be able to track the user’s gaze anywhere in front of the computer monitor. Challenges encountered in developing adequate technology to allow free movement while tracking eye gaze are delaying the introduction of such products. Efforts to achieve mainstream gaze tracking are built around the low-cost webcams included in many modern computers. At current camera resolutions, the task is quite difficult, but research is progressing. Increasing detail by zooming in on the eye allows commercial eye-tracking systems to work, but it requires that the eye remain in a very narrow field of view.

The second approach to using eye-tracking devices is similar to video cameras with built-in eye tracking; these systems work because the camera is held to the eye and moved with the eye. If an eye-tracking system were small enough to be mounted on the head, it would be much easier to use because the system would remain in a fixed relationship to the eyes. Currently, systems that combine head-mounted displays with eye tracking are being made available for the development of future products. A number of possibilities are opened by this combination. For example, a head-mounted display might project a control system that the user looks at to control devices and looks through for other activities. With binocular eye tracking combined with head tracking, an EADL system might be constructed that would allow the user to control devices simply by looking at them. There is currently no affordable, easy-to-use, and effective eye gaze input system. There are systems that are effective, easy to use, or affordable, but not all three. Although eye gaze is available for those who have no other option, it is not currently competitive as an input method for those who do have other options to control their computer. However, now that mainstream engineers have discovered this technology, it will probably become less costly and more effective.

Switch-Encoding Input

An individual who lacks the ROM or fine motor control necessary to use a physical or graphic keyboard may be able to use a switch-encoding input method. In switch encoding, a small set of switches (from one to nine) is used to directly access the functionality of the device. The meaning of the switch may depend on the length of time that it is held closed, as in Morse code, or on the immediate history of the switch set, as in the Tongue Touch Keypad (TTK).

In Morse code, a very small set of switches is used to type. In single-switch Morse, a short switch closure produces an element called a “dit,” which is typically written as “*.” A long switch closure produces the “dah” element, which is written as “−.” Formally, a “long switch closure” is three times longer than a short switch closure, which can be adjusted to the needs of the individual. Patterns of switch closures produce the letters of the alphabet, numbers, and punctuation. Pauses longer than five times the short switch closure indicate the end of a character. Two-switch Morse is similar, except that two switches are used: one to produce the “dit” element and a second to produce the “dah.” Because the meaning of the switches is unambiguous, it is possible for the dit and dah to be the same length, thereby potentially doubling typing speed. Three-switch Morse breaks the time dependence of Morse by using a third switch to indicate that the generated set of dits and dahs constitutes a single letter.
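The timing rules for single-switch Morse can be sketched as a small decoder. This is an illustration under stated assumptions, not a vendor's implementation: the input format (closure duration and the pause that follows it), the 0.2-second dit length, and the dit/dah boundary at twice the dit length are assumptions. The five-unit character gap follows the description above and is exposed as a parameter. Only the letters A to Z are included.

```python
import string

# International Morse patterns for A-Z, in alphabetical order.
_CODES = (".- -... -.-. -.. . ..-. --. .... .. .--- -.- .-.. -- "
          "-. --- .--. --.- .-. ... - ..- ...- .-- -..- -.-- --..").split()
MORSE_TABLE = dict(zip(_CODES, string.ascii_uppercase))


def decode_single_switch(closures, dit_s=0.2, char_gap_units=5.0):
    """Decode a list of (held_s, pause_after_s) switch closures into text.

    A closure of at least 2 * dit_s is read as a dah, anything shorter as
    a dit (assumed boundary, halfway between 1 and 3 units). A pause longer
    than char_gap_units * dit_s ends the current character.
    """
    dah_threshold = 2.0 * dit_s
    char_gap = char_gap_units * dit_s
    text = []
    pattern = ""
    for held_s, pause_after_s in closures:
        pattern += "-" if held_s >= dah_threshold else "."
        if pause_after_s > char_gap:
            text.append(MORSE_TABLE.get(pattern, "?"))
            pattern = ""
    if pattern:  # flush a final character that had no trailing pause
        text.append(MORSE_TABLE.get(pattern, "?"))
    return "".join(text)


# "H" is four dits and "I" is two dits; a 1.2 s pause separates the letters.
hi_closures = [(0.2, 0.2), (0.2, 0.2), (0.2, 0.2), (0.2, 1.2),
               (0.2, 0.2), (0.2, 1.2)]
print(decode_single_switch(hi_closures))
```

Because every threshold is derived from `dit_s`, slowing the whole system for an individual user means changing one value, which is the adjustment to individual needs described above.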

Morse code is a highly efficient method of typing for a person with severe limitations in motor control and has the advantage over other virtual keyboard techniques of eventually becoming completely automatic.5,32 Many Morse code users indicate that they do not “know” Morse code. They think in words, and the words appear on the screen, just as takes place in touch typing. Many Morse code users type at speeds approaching 25 words per minute, thus making this a means of functional writing. The historical weakness of Morse code is that each company creating a Morse interface for assistive technology has used slightly different definitions of many of the characters. To address this issue and to promote the application of Morse code, the Morse 2000 organization has created a standard for Morse code development.36

Another variety of switch encoding involves switches monitoring their immediate history for selection. The TTK, from newAbilities Systems, uses a set of nine switches on a keypad built into a mouthpiece that resembles a dental orthotic.

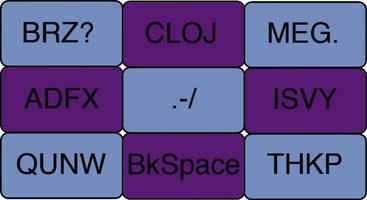

Early versions of the product used an “on-screen keyboard” called MiracleTyper (Figure 17-10). When using this input method, the first switch selection selects a group of nine possible characters, and the second switch action selects a specific character. This approach to typing is somewhat more physically efficient than Morse code but does require the user to observe the screen to know the current switch meaning. Later versions of the TTK used the keypad only as a mouse emulator to allow the user to select any on-screen keyboard desired for text entry.
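The two-step selection described above can be sketched as follows. The character layout here is hypothetical and is not the actual MiracleTyper arrangement; it simply fills groups of nine from a fixed string, and switches are numbered 1 through 9.

```python
# Hypothetical symbol set: 45 characters, giving five groups of nine.
SYMBOLS = "abcdefghijklmnopqrstuvwxyz 0123456789.,?!'-:;"
GROUPS = [SYMBOLS[i:i + 9] for i in range(0, len(SYMBOLS), 9)]


def decode_presses(presses):
    """Decode switch presses taken in pairs: (group switch, character switch)."""
    if len(presses) % 2 != 0:
        raise ValueError("presses must come in pairs")
    characters = []
    for group_switch, char_switch in zip(presses[0::2], presses[1::2]):
        characters.append(GROUPS[group_switch - 1][char_switch - 1])
    return "".join(characters)


# Group 1 is "abcdefghi": switch 8 gives "h" and switch 9 gives "i".
print(decode_presses([1, 8, 1, 9]))
```

Every character costs exactly two switch actions, but the meaning of the second action depends on the first, which is why the user must watch the screen.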

A different approach to switch encoding is provided by the T9 keyboard (Figure 17-11), which is included in most cell phones with standard keypads. In this novel interface, each key of the keyboard has several letters on it, but the user types as though only the desired character were present. The keyboard software determines from the user’s input what word might have been intended. The disambiguation process used in the T9 keyboard allows a high degree of accuracy in determining which character the user intended, as well as rapid learning of the keyboard. This input technology is potentially compatible with pointing systems, described earlier, and can provide an excellent balance of target size and available options.
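The idea behind disambiguation can be shown with a simplified dictionary lookup. This sketch is not the T9 algorithm itself, which is a commercial product that also ranks candidates; the tiny vocabulary and the function names are assumptions, and only the standard telephone keypad letter assignment is taken as given.

```python
# Standard telephone keypad letter assignment.
KEYPAD = {"2": "abc", "3": "def", "4": "ghi", "5": "jkl",
          "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz"}
LETTER_TO_KEY = {letter: key for key, letters in KEYPAD.items() for letter in letters}


def key_sequence(word):
    """Return the digit sequence typed when each letter is pressed once."""
    return "".join(LETTER_TO_KEY[ch] for ch in word.lower())


def candidates(keys, vocabulary):
    """Return every vocabulary word whose key sequence matches keys."""
    return [word for word in vocabulary if key_sequence(word) == keys]


VOCABULARY = ["home", "good", "gone", "hello", "water", "help"]  # illustrative

print(candidates("43556", VOCABULARY))  # one match
print(candidates("4663", VOCABULARY))   # several words share this sequence
```

The second lookup returns three words, which shows that one key press per letter is not always enough: when several words share a sequence, the user must still choose among them.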

Speech Input

For many, speech input has a magical allure. What could be more natural than to speak to an EADL or computer and have one’s wishes carried out? When first introduced by Dragon Systems in 1990, large-vocabulary speech input systems were enormously expensive and, for most, of limited utility. Although highly dedicated users were able to type via voice in 1990, almost no one with the option of the keyboard would choose to use voice for daily work. These early systems required the user to pause between each word so that the input system could recognize the units of speech. Today,24 the technology has evolved to allow continuous speech, with recognition accuracy greater than 90%.26 (The companies producing speech products claim accuracy greater than 95%.) Even though this advance in speech technology is remarkable, it does not mean that speech input is the control of choice for people with disabilities, for a number of reasons.

Speech recognition requires consistent speech. Although it is not necessary that the speech be absolutely clear, the user must say words the same way each time for an input system to recognize them. Consequently, the majority of people with speech impairments cannot use a speech input system effectively. Slurring and variability in pronunciation will result in a low recognition rate.

Speech input requires a high degree of vigilance during training and use. Before current speech technology can be used, it must be “trained” to understand the voice of the intended user. To do this, the system presents text to the potential user, which must be read into the microphone of the recognition system. In Windows 7, the included speech system uses the user’s responses to a tutorial to learn the individual user’s voice, which disguises the training process and gives the user an overview of the system at the same time. If the user lacks the cognitive skills to respond appropriately to the cues presented, the training process will be very difficult. A few clinicians have reported success in training students with learning disabilities or other cognitive limitations to use speech input systems, but in general, the success rate is poor. Even after training, the user must watch carefully for misrecognized words and correct them at the time that the error is made. Modern speech recognition systems depend on context for their recognition. Each uncorrected error slightly changes the context, and eventually the system can no longer recognize the words being spoken. Spell checking a document will not find misrecognized words, since each word on the screen is a correctly spelled word. It just may not be the word that the user intended!

Speech input is intrusive. One person in a shared office space talking to a computer will reduce the productivity of every other person in the office. If everyone in the office were talking to their computers, the resulting noise levels would be chaotic. Speech input is effective for a person who works or lives alone, but it is not a good input method for most office or classroom settings.25

The type of speech system used depends on the device being controlled. For EADL systems, discrete speech (short, specific phrases such as “light on”) provides an acceptable level of control. The number of options is relatively small, and there is seldom a need for split-second control. Misrecognized words are unlikely to cause difficulty. Text generation for narrative description, however, places higher demands for input speed and transparency and may call for a continuous input method. Other computer applications may work better with discrete than with continuous input methods. Databases and spreadsheets typically have many small input areas, with limited information in each. These applications are much better suited to discrete speech than to continuous speech.

The optimum speech recognition program would be a system that would recognize any speaker with an accuracy of better than 99%. Developers of current speech systems say that based on advances in processor speed and speech technologies, this level of usability should be possible within about 5 years. However, they have been making the same prediction (within 5 years) for the past 20 years! Modern speech recognition systems are vastly better than those available 10 years ago and are also available at just over 1% of the cost of the early systems. However, even with these improvements, they are still not preferable to the conventional keyboard for most users.

Scanning Input Methods

For an individual with very limited cognition and/or motor control, a variation of row-column scanning is sometimes used.2,14,20 In scanning input, the system to be controlled sequentially offers choices to the user, and the user indicates affirmation when the correct choice is offered. Typically, such systems first offer groups of choices and, when a group is selected, offer the items in the group sequentially. Because the items were, in early systems, presented as a grid being offered a row at a time, such systems are commonly referred to as “row-column” scanning even when no rows or columns are present.

Scanning input allows the selection of specific choices with very limited physical effort. Generally, the majority of the user’s time is spent waiting for the desired choice to be offered, so the energy expenditure is relatively small. Unfortunately, the overall time expenditure is generally large. When the system has only a few choices to select among, as in most EADL systems, scanning is a viable input method. The time spent waiting while the system scans may be a minor annoyance, but the difference between turning on a light now versus a few moments from now is relatively small. EADL systems are used intermittently throughout the day rather than continuously, so the delays over the course of the day are acceptable in most cases. For AAC or computer systems, however, the picture is very different. In either application, the process of composing thoughts may require making hundreds or thousands of selections in sequence. The cumulative effect of the pauses in row-column scanning will slow productivity to the point that functional communication is very difficult and may be impossible. Certainly, when productivity levels are mandated, the communication rate available via scanning input will not be adequate.
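
The time cost of scanning can be made concrete with a rough sketch. The layout, the one-second scan interval, and the function names `scan_steps` and `message_time` are all assumptions made for illustration; the model also ignores the time for the switch press itself and for correcting errors.

```python
# Hypothetical alphabetical 5-row layout (an assumption; real layouts are
# often arranged so that frequently used characters are reached sooner).
GRID = [
    "abcdef",
    "ghijkl",
    "mnopqr",
    "stuvwx",
    "yz .,?",
]


def scan_steps(char):
    """Count highlight steps until `char` is offered: rows first, then items in the row."""
    for row_index, row in enumerate(GRID):
        col_index = row.find(char)
        if col_index != -1:
            return (row_index + 1) + (col_index + 1)
    raise ValueError(f"{char!r} is not in the scanning grid")


def message_time(message, scan_interval=1.0):
    """Estimate seconds to enter `message` at `scan_interval` seconds per highlight step."""
    return sum(scan_steps(ch) for ch in message) * scan_interval
```

Under these assumptions, spelling the eight characters of "light on" takes 45 highlight steps, or 45 seconds at one step per second. A delay of that size is tolerable for an occasional EADL command but accumulates quickly over a long message.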

Integrated Controls

One area of current development is the long-advocated idea of integrated controls.16 An individual with a profound disability is likely to require more than one type of control device. A person with severe motor involvement from cerebral palsy, for example, may require an augmentative communication system for interpersonal communication, an EADL for control of the local environment, a computer access system to complete job-related tasks, and a powered mobility device to navigate the community. Historically, each of these systems had its own control system, with a slightly different control strategy. The user of these multiple systems would have to move from control system to control system and learn how to control each one.

With an integrated control system, the user would have a single interface that could control each assistive technology device. Since both AAC and computer access commonly involve language, the ability to use an AAC device to control a computer was an early goal. The 1994 standard for the general input device emulating interface (GIDEI)18 defined characters to be sent from an AAC device to a supporting computer to provide control equivalent to the keyboard and mouse. With GIDEI, an AAC user could use the same control interface to communicate with friends in the same room or to write a business proposal.

Since many devices can be used with a moving pointer, the joystick control of a powered wheelchair may offer another avenue to integrated controls. The same joystick could be used to move a selection icon on the display of an EADL or AAC device or the mouse pointer of a computer screen. Ideally, the communication between devices would be wireless, and the current Bluetooth standard for short-range wireless communications offers to make such controls possible. (The Bluetooth standard is maintained by a consortium of electronics companies whose goal is to develop a flexible, low-cost wireless platform capable of short-distance communication.)

The question, for the clinician, will be whether the advantages of a single, generic control that is not optimal for any particular device would outweigh the costs of learning individual, optimal control strategies for each device. Because the demands of controlling a powered wheelchair are significantly different from those for writing a formal proposal, changing channels on a television, or answering a question in a classroom, different control strategies might be necessary for each device. Whether a single control interface can support the optimal strategy for each device remains to be seen.

Rate Enhancement Options

For EADL systems, the rate of control input is relatively unimportant. As noted earlier, the number of control options is relatively limited, and selections are rarely severely time constrained. However, for AAC and computer control systems, the number of selections to be made in sequence is high, and the rate is frequently very important. Because a person with a disability cannot generally make selections at the same rate as an able-bodied person, rate enhancement technologies may increase the information transmitted by each selection. In general, language can be expressed in one of three ways: letter-by-letter spelling, prediction, and compression/expansion. The latter two options allow enhancement of language generation rates.

Letter-by-Letter Spelling

Typical typing is an example of letter-by-letter representation and is relatively inefficient. In all languages and alphabets, there is a balance between the number of characters used to represent a language and the number of elements in a message. English, using the conventional alphabet, averages about six letters (selections) per word (including the spaces between words). When represented in Morse code, the same text will require roughly 18 selections per word. By comparison, the basic Chinese vocabulary can be produced by selecting a single ideogram per word; however, thousands of ideograms exist. In general, having a larger number of characters in an alphabet allows each character to convey more meaning but may make selection of each specific character more difficult.

Many AAC systems use an expanded set of characters in the form of pictograms or icons to represent entire words that may be selected by the user. Such semantic compaction allows a large vocabulary to be stored within a device but requires a system of selection that may add complexity to the device.13 For example, a device may require the user to select a word group (e.g., food) before selecting a specific word (e.g., hamburger) from the group. Using subcategories, it is theoretically possible to represent a vocabulary of more than 2 million words on a 128-key keypad with just three selections.
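
The arithmetic behind the 2-million-word figure can be checked with a short sketch. It assumes that every selection offers the full set of keys and that every combination of keys maps to a distinct word; the function names are hypothetical.

```python
def reachable_items(keys, selections):
    """Distinct items addressable when each selection picks one of `keys` options."""
    return keys ** selections


def selections_needed(keys, vocabulary_size):
    """Fewest selections per word needed to address `vocabulary_size` distinct words."""
    count = 0
    reachable = 1
    while reachable < vocabulary_size:
        reachable *= keys
        count += 1
    return count
```

With 128 keys and three selections, `reachable_items(128, 3)` gives 2,097,152 distinct combinations, which is consistent with the figure of more than 2 million words.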

Prediction

Because messages in a language tend to follow similar patterns, it is possible to produce significant savings of effort with prediction technology. Two types of prediction are used in language: (1) word completion and (2) word/phrase prediction.

In word completion, a communication system (AAC or computer based) will, after each keystroke, present a number of options to the user representing words that might be typed. When the appropriate word is presented by the prediction system, the user may select that word directly rather than continuing to type the entire word out. Overall, this strategy may reduce the number of selections required to complete a message.21 However, it may not improve typing speed.27 Anson3 demonstrated that when typing from copy using the keyboard, typing speed was reduced in direct proportion to the frequency of using word prediction. The burden of constantly scanning the prediction list overwhelms the potential speed savings of word completion systems under these conditions. However, when typing using an on-screen keyboard or scanning system, where the user must scan the input array in any case, word completion does appear to increase typing speed, as well as reduce the number of selections made.
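
Word completion as described above can be sketched in a few lines. The word list, the usage counts, and the names `complete` and `selections_saved` are hypothetical; commercial products use much larger vocabularies and usually adapt to the individual user.

```python
# Hypothetical vocabulary with made-up usage counts (assumptions for this sketch).
WORD_COUNTS = {
    "the": 50, "there": 20, "therapy": 15,
    "therapist": 12, "them": 10, "technology": 8,
}


def complete(prefix, limit=3):
    """Return up to `limit` words starting with `prefix`, most frequently used first."""
    matches = [word for word in WORD_COUNTS if word.startswith(prefix)]
    matches.sort(key=lambda word: (-WORD_COUNTS[word], word))
    return matches[:limit]


def selections_saved(word, prefix):
    """Selections saved by picking `word` from the list after typing `prefix`.

    Typing the remaining letters costs one selection each; picking the word
    from the list costs a single selection.
    """
    return (len(word) - len(prefix)) - 1
```

In this sketch, typing "thera" offers "therapy" and "therapist," and choosing "therapist" at that point saves three selections. The sketch counts selections only; it does not model the time spent reading the list, which is the cost identified in the studies cited above.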

Because most language is similar in structure, it is also possible, in some cases, to predict the word that will be used following a specific word. For example, after a person’s first name is typed, the surname will frequently be typed next. When this prediction is possible, the next word may be generated with a single selection. When combined with word completion, next-word prediction has the potential to decrease the effort of typing substantially. However, in many cases this potential is unrealized. Many users, though provided with next-word prediction, become so involved in spelling words that they ignore the predictions even when they are accurate. The cognitive effort of switching between “typing mode” and “list scanning” mode may be greater than the cognitive benefit of not having to spell the word out.19
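
One simple way to illustrate next-word prediction is to count which words have followed each word in earlier text. This is only a sketch of the general idea, not the method used by any particular product; the sample text and the names `train` and `predict_next` are assumptions.

```python
from collections import Counter, defaultdict


def train(text):
    """Count, for each word in `text`, the words that immediately follow it."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows


def predict_next(follows, word, limit=3):
    """Return up to `limit` words that most often followed `word` in the training text."""
    counts = follows.get(word.lower())
    if not counts:
        return []
    return [candidate for candidate, _ in counts.most_common(limit)]


# Hypothetical sample of a user's earlier messages.
MODEL = train("turn the light on turn the light off turn the fan on")
```

Here, after the word "the," the sketch offers "light" first and "fan" second, because "light" followed "the" more often in the sample text.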

Compression/Expansion

Compression/expansion strategies allow limited sets of commonly used words to be stored as unambiguous abbreviations. When these abbreviations are selected, either letter by letter or through word completion, the abbreviation is dynamically replaced by the expanded form of the word or phrase.2,14

Because the expansion can be many selections long, this technology offers an enormous potential for energy and time savings. However, the potential savings are available only when the user remembers to use the abbreviation rather than the expanded form of a word. Because of this limitation, abbreviations must be selected carefully. Many abbreviations are already in common use and can be stored conveniently. Most people will refer to the television by the abbreviation TV, which requires just 20% of the selections needed to spell out the full word. With an expansion system, each use of TV can automatically be converted to “television” with no additional effort on the part of the user. Similarly, TTFN might be used to store the social message “Ta-ta for now.”
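
A minimal sketch of abbreviation expansion, using the two examples from the text, might look like the following. The table and the names `expand` and `selection_ratio` are hypothetical, and matching is assumed to be case sensitive so that ordinary lowercase words are left alone.

```python
import re

# Abbreviation table built from the examples in the text.
EXPANSIONS = {
    "TV": "television",
    "TTFN": "Ta-ta for now",
}


def expand(text):
    """Replace each whole-word abbreviation in `text` with its stored expansion."""
    def replace(match):
        token = match.group(0)
        # Tokens that are not stored abbreviations are returned unchanged.
        return EXPANSIONS.get(token, token)
    return re.sub(r"[A-Za-z]+", replace, text)


def selection_ratio(abbreviation):
    """Fraction of the expansion's selections needed to type the abbreviation."""
    return len(abbreviation) / len(EXPANSIONS[abbreviation])
```

For example, `expand("Turn on the TV")` produces "Turn on the television," and `selection_ratio("TV")` is 0.2, which matches the 20% figure given above.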

Effective abbreviations will be unique to the user rather than general. An example of an effective form of abbreviations would be the language shortcuts commonly used in note taking. These abbreviations form a shorthand generally unique to the individual and allow complex thoughts to be represented on the page quickly in the course of a lecture. A clinician should work carefully with the client to develop abbreviations that will be useful and easily remembered.

Another form of “abbreviations” that is less demanding to create involves corrections of common spelling errors. For persons, either students or adult writers, who have cognitive deficits that influence spelling skills, expansions can be created to automatically correct misspelled words. In these cases the “abbreviation” is the way that the client generally misspells the word, and the “expansion” is the correct spelling of the word. Once a library of misspelled words is created, the individual is relieved of the need to worry about the correct spelling. There are those who maintain that this form of adaptation will prevent the individual from ever learning the correct spelling. In cases in which the client is still developing spelling skills, this is probably a valid concern, and the adaptation should not be used. However, for individuals with a cognitive deficit in which remediation is not possible, accommodation through compression/expansion technology is a desirable choice.