DEFINITION OF EVIDENCE-BASED PRACTICE

PROCESS OF EVIDENCE-BASED PRACTICE

▪ Evidence-based practice (EBP) is an approach to using the best quality evidence from clinical research, integrated with patient values and clinical experience as an ongoing process in the provision of high-quality hand therapy to individual patients.

▪ There are five sequential steps to the process.

1. Defining a specific clinical question

2. Finding the best evidence that relates to the question

3. Determining if the study results are true and applicable to the patient

4. Integrating patient values and clinical experience with the evidence to make conclusions

5. Evaluating the impact of decisions through determination of patient outcomes

▪ Specific skills are needed and challenges are presented when integrating EBP, but the learning curve can be decreased through the use of presynthesized evidence, EBP training opportunities, journal clubs, and other support systems.

▪ Hand therapy recognizes the importance of EBP and continues to develop the training, expertise, emphasis, and resources required to support the use of EBP in practice.

EBP is now universally recognized as an advancement in the process of providing high-quality clinical care. When the British Medical Journal invited readers to submit nominations for the top medical breakthrough since 1840, evidence-based medicine was in the top 10 (www.bmj.com/cgi/content/full/334/suppl_1/DC3). Although EBP originally focused on medical practice, it has transferred substantially into other health fields and even beyond health care. Hand therapy first focused on this topic with a special issue on EBP in the Journal of Hand Therapy in 2004.1,2 In 2009, Hand Clinics did so as well.3 This chapter provides an overview of evidence-based hand therapy. More detail on evidence-based rehabilitation can be found in a full textbook devoted to this topic.4

McMaster University is usually recognized as the birthplace of EBP, due to leadership provided by David Sackett, who led the development of this unique approach, while establishing the first clinical epidemiology department at McMaster University and contributing to the development of the Oxford Centre for Evidence-Based Medicine. Both remain leaders in teaching others to use and teach evidence-based medicine and continue to develop innovative concepts and approaches in EBP. However, EBP would not have flourished without a variety of similar-minded individuals in other countries who also led efforts that enriched the concepts and sustained their international acceptance. As recognized by David Sackett, the field of clinical epidemiology predated evidence-based medicine, but provides a significant conceptual basis.5-7

Evidence-based medicine became integrated in the training of physicians at McMaster University in Canada in the early 1970s within a new medical school that used innovative “problem-based” methods to train lifelong learners who could adapt to rapidly changing information.8 This training method was shared with other medical faculty and students in Sackett’s landmark and now widely used textbooks.9,10 The need for new practitioners to be able to access, evaluate, and apply new research knowledge is even more pressing today with the investment in health research and resultant explosion in availability of scientific reports in journals and other types of information resources. The information therapists learned during their training eventually becomes obsolete and must be replenished with the best new knowledge so as to be useful in optimizing patient care and outcomes. EBP provides a theoretical foundation, process, and associated tools to assist with making the best possible clinical decisions for specific clinical situations.

The following one-sentence (first, bolded) definition of EBP has become widely known, but we find the longer version more explicit.

Evidence-based medicine is the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients. The practice of evidence-based medicine means integrating individual clinical expertise with the best available external clinical evidence from systematic research. By individual clinical expertise, we mean the proficiency and judgment that individual clinicians acquire through clinical experience and clinical practice. Increased expertise is reflected in many ways, but especially in more effective and efficient diagnosis and in the more thoughtful identification and compassionate use of individual patients’ predicaments, rights, and preferences in making clinical decisions about their care. By best available external clinical evidence we mean clinically relevant research, often from the basic sciences of medicine, but especially from patient-centered clinical research into the accuracy and precision of diagnostic tests (including the clinical examination), the power of prognostic markers, and the efficacy and safety of therapeutic, rehabilitative, and preventive regimens. External clinical evidence both invalidates previously accepted diagnostic tests and treatments and replaces them with new ones that are more powerful, more accurate, more efficacious, and safer (www.cebm.net/index.aspx?O-1914).

EBP is based on a consistent five-step process (Box 143-1) that is used to make different types of clinical decisions. It provides a powerful framework for answering clinical questions, for ongoing personal growth as a therapist, and for better management of health-care resources. By continuing to practice these five simple steps throughout your career, you can become a lifelong learner and maintain the highest quality of care for your patients.

Box 143-1 Five Steps of EBP

1. Ask a specific clinical question.

2. Find the best evidence to answer the question.

3. Critically appraise the evidence for its validity and usefulness.

4. Integrate appraisal results with clinical expertise and patient values.

5. Evaluate the impact of the decision by determining patient outcomes.

The unique piece of EBP is the assumption that clinical research based on unbiased observations of groups of patients observed within high-quality research studies should be predominant in the decision-making process for individual patients. The importance of clinical expertise and patients’ values in the process is also prominent. However, neither is a replacement for finding, then evaluating the validity and relevance of, clinical research. Rather, three components must be integrated when making clinical decisions: the best research evidence, clinical expertise, and patient values.

The first step in EBP is defining the clinical question. Many clinical questions arise during a hand therapist’s clinical practice. One of the key elements in EBP is defining specific and answerable clinical questions. The PICO(T) approach (Box 143-2) is commonly used to define answerable clinical questions. “P” stands for the important characteristics of the patient. This is where information from clinical experience and knowledge of basic science is integrated to help define logical, meaningful questions. Thus, clinical experience is the starting point of EBP. Typical questions asked by therapists include: What are the most important aspects of the disease or disability that must be addressed? What are the most important pathologic and prognostic features of the problem? What are realistic outcomes? What are potential longer-term risks? Therapists can make their EBP more patient-centered by integrating patient’s needs and values in the development and definition of specific questions.

Box 143-2 The PICO(T) Approach Is Commonly Used to Define Answerable Clinical Questions

P: Important characteristics of the patient

I: Specific intervention that might potentially be used in managing a patient

C: The comparative option

O: Outcome(s) of interest

T: Time (that the outcome of interest is determined)

“I” refers to the specific intervention (diagnostic test, prognostic feature, surgical, or rehabilitative treatment) that might potentially be used in treating a patient. “C” refers to the comparative option (if indicated). This might be a control option (no intervention) or a standard currently accepted therapy. “O” refers to the outcome(s) of interest. This might include specific impairments like hand strength, range of motion, and activity or participation measures that address concepts such as disability or quality of life (see Chapter 16 on measuring patient outcomes). Patient values, goals, and priorities should also be reflected in the outcomes that are measured. Outcomes are often time-dependent (the “T” in PICO[T]), so it is important to define meaningful time points for clinical outcomes to be evaluated. This is particularly true in surgical interventions for which postoperative discomforts, complications, short-term recovery, and longer-term maintenance of functionality are all important considerations.

A number of different types of clinical questions are recognized within the EBP framework. It is important to be familiar with these different types of questions because there are different types of optimal research designs to answer these questions, different threats to validity, and, hence, different levels of evidence classifications for these varying types of questions. The types of clinical questions include

2. Differential diagnosis and symptom prevalence

4. Treatment selection and effectiveness

5. Prevention (diseases, disorders, and complications, etc.)/Harm

8. Costs: Economic and decision analysis

Let us take a patient example to illustrate how these questions might evolve.

Your patient is a 62-year-old female who presents with painful thumbs that are limiting her ability to perform her usual tasks of everyday life. Her symptoms include aching pain, which becomes worse with activity, and difficulty grasping objects. On examination she has a positive grind test and a positive Finkelstein’s test, localized swelling at the carpometacarpal (CMC) joint, and reduced grip and pinch strength compared with normative values.

Hand therapy (and surgery) has a tradition of reliance on expert opinion. Can you think of a therapist whose name is associated with a particularly useful CMC joint orthosis? Now name the author of a randomized controlled trial evaluating CMC orthotic positioning. If you could do the first, but had difficulty with the latter, then you may be practicing “eminence-based practice,” instead of EBP. Although experts are often thought of as having an elite level of knowledge or skills, how can we evaluate this? Unfortunately, there are many reasons why clinicians can make faulty assumptions based on their clinical observations. Patients may improve due to natural history, their own actions, or report improvements for socially desirable reasons. All of these tend to confirm for clinicians that what they are doing is effective—even when it is not.

If we wish to use an evidence-based approach to treating the patient described earlier, we can start by asking a specific clinical question. Example questions in each of the categories are

1. Diagnosis: What are the false positive and negative rates for grind test and Finkelstein’s test in patients with thumb pain?

2. Differential diagnosis: In a patient presenting with these symptoms, which diagnosis is more likely?

3. Symptom prevalence study: What is the rate of CMC osteoarthritis in women 60 to 65 years of age?

4. Treatment selection/effectiveness (therapy): Is conservative management or surgical management optimal for this patient in terms of symptom relief?

5. Harm: Which approach (surgical or conservative) is more likely to result in complications?

6. Prevention: Would a joint protection program reduce progression of the disease process?

7. Etiology: Does metacarpophalangeal (MCP) joint mobility or laxity contribute to development of CMC joint arthritis?

8. Prognosis: Does self-efficacy affect the likelihood of success with an orthotic positioning and joint protection program?

9. Cost-effectiveness: Which is more cost-effective: tendon interposition arthroplasty or titanium implant for CMC joint reconstruction?

It is unrealistic to expect that we might be able to research every single clinical question with every single patient.11 However, if we use the five-step EBP approach to answer patient-specific questions, we can often generalize our analysis to other similar patients and be more aware of when exceptions apply. Defining new EBP questions based on our clinical encounters on an ongoing basis allows us to deepen our understanding of how to treat patients according to best evidence and evolve into more evidence-based practitioners. So a specific clinical question for our scenario might be: In women older than 60 years of age with mild osteoarthritis, does an orthotic combined with a strengthening program result in better pain relief, grip strength, and functional ability than orthotic use alone?
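The structure of this question can be made explicit with a short sketch. The class name `PicotQuestion` and its fields are our own illustrative labels, not part of any standard tool; they simply record the PICO(T) components of the scenario question so that a missing component, such as the time point, is easy to see.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PicotQuestion:
    """One answerable clinical question in PICO(T) form (hypothetical helper)."""
    patient: str                 # P: important characteristics of the patient
    intervention: str            # I: intervention being considered
    comparison: Optional[str]    # C: comparative option, if indicated
    outcomes: List[str]          # O: outcomes of interest
    time: Optional[str] = None   # T: when the outcome is determined

    def as_sentence(self) -> str:
        """Render the components as a single question."""
        text = f"In {self.patient}, does {self.intervention}"
        if self.comparison:
            text += f" compared with {self.comparison}"
        text += f" improve {', '.join(self.outcomes)}"
        if self.time:
            text += f" at {self.time}"
        return text + "?"


question = PicotQuestion(
    patient="women older than 60 years of age with mild osteoarthritis",
    intervention="an orthotic combined with a strengthening program",
    comparison="orthotic use alone",
    outcomes=["pain relief", "grip strength", "functional ability"],
    time=None,  # the scenario question does not state a follow-up time point
)
print(question.as_sentence())
```

Writing the question this way shows that the scenario leaves the "T" undefined, which is a prompt to decide on a meaningful time point before searching.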

The next step in EBP is to search the literature for specific studies or evidence resources that answer our specific clinical question. This typically requires searching electronic databases to find the best studies that match the specifics of the clinical question. This is an area where EBP and improvements in technology have supported innovation. The exponential increase in information has been partially offset by increased ways to identify, sort, and evaluate clinical evidence through electronic databases, search engines, and other tools. In fact, research now focuses on optimizing search strategies,12-17 and significant efforts have been invested in providing database access to health professionals.18,19 Informatics has become a large area of inquiry and development, with the end result that there is now much better information management and more usable information resources than ever existed in the past.20 Despite this, locating high-quality research studies is commonly reported as a barrier to practicing EBP.21-28

Hand therapists need to know how to search for research studies using PubMed/Medline. PubMed is an easily accessible free search engine (www.ncbi.nlm.nih.gov/pubmed/) that is the most comprehensive source of medical research. It provides the abstract and in some situations a link to the full-text article. However, only a small proportion of journals provide free open access to their full text. Therefore, in the majority of cases, therapists will need to purchase a copy or gain access through a local university library. Because many rehabilitation journals are not indexed in Medline, it is important to search additional databases. CINAHL, the Cumulative Index to Nursing and Allied Health Literature, is the most comprehensive resource for nursing and allied health literature (www.ebscohost.com/cinahl/). It is available through universities and to individuals at a subscription rate. PEDro is the Physiotherapy Evidence Database (www.pedro.org.au). It was developed to give free access to bibliographic details and abstracts of randomized controlled trials, systematic reviews, and evidence-based clinical practice guidelines in physiotherapy. Most trials on the database have been rated for quality using the PEDro quality-rating scale. OTseeker is a similar database that contains abstracts of systematic reviews and randomized controlled trials relevant to occupational therapy (www.otseeker.com/).

A natural outgrowth of EBP has been the proliferation of an entire discipline around the best methods for synthesizing evidence.29,30 Systematic reviews (SRs), meta-analyses, and evidence-based clinical practice guidelines are the most common evidence syntheses used in clinical practice. When properly performed, evidence syntheses are valuable to clinicians because they bring together the best information across multiple studies and reduce the burden on the individual to do so. The Cochrane Collaboration31-35 has been a leader in the development of these methods.36 In addition, the Database of Abstracts of Reviews of Effects (DARE) (www.york.ac.uk/inst/crd/) is a searchable database of SRs that includes both a summary and critical appraisal of each SR. This can be a valuable resource for hand therapists. Unfortunately, few hand therapy issues have enough studies for definitive evidence syntheses to be performed. However, The Journal of Hand Therapy was one of the first rehabilitation journals to highlight EBP and published a series of systematic reviews on hand therapy topics in 2004.1,37

Because finding evidence has been a persistent barrier for clinicians, a potential solution would be to remove the burden of searching and appraising evidence from individual therapists and place it on skilled “extractors” who would find and classify the best evidence as it emerges. This has encouraged the move from “pull-out” toward “push-out” of evidence within EBP. An example of rudimentary push-out is a journal sending out the electronic table of contents of its latest issues to those who sign up for this service. Push-out customized alerting services are a more advanced form of pushing out high-quality evidence. Using technology, users can sign up for information on their clinical interests and receive alerts of evidence pushed out to them based on those needs or clinical interests and preferences for frequency of alerts and quality/relevance cut-offs. Customized user push-out of evidence through an alerting service is currently available through MacPLUS/BMJ Updates (plus.mcmaster.ca/EvidenceUpdates), although it primarily focuses on medical journals and areas of expertise. It is possible to sign up for both orthopedic surgery and plastic surgery clinical specialties to receive literature relevant to hand therapy, but less relevant studies from other areas of the specialties can also be forwarded. The author and colleagues have developed and pilot-tested a rehabilitation version called MacPLUS Rehab. Hand therapy is one of the subspecialties that can be selected, allowing users to filter for this type of evidence. It is now accessible for public use (plus.mcmaster.ca/rehab/Default.aspx).

Regardless of these advances, for some clinical questions primary searching is still required. The clinician who is not familiar with electronic searching may find this first step a barrier. Fortunately, there are many excellent ways to gain these skills. A variety of written texts4,10 and articles38,39 are helpful. PubMed offers excellent online tutorials (www.nlm.nih.gov/bsd/disted/pubmed.html). Most university libraries offer (usually free) courses in searching the larger databases. Searching the literature is a skill, and like most skills, you will become more proficient with practice. You will quickly learn that overly broad search terms yield too many articles to review and will therefore need to build more efficient searches. Some databases have tools such as Clinical Queries (www.ncbi.nlm.nih.gov/entrez/query/static/clinical.shtml) in MEDLINE that help you run a more efficient search. Clinical Queries allows you to pick the specific type of clinical question you are asking and creates filters to make your search more efficient.

In our case scenario, we first searched PubMed using Clinical Queries with the key words splinting† and arthritis. From this, we were able to locate a systematic review on orthotic positioning for osteoarthritis of the CMC joint.40 The authors anticipated a small number of studies and, therefore, included all identified studies. From this approach, seven studies on orthotic positioning of the CMC joint were identified and appraised. The authors concluded that positioning does decrease pain for many patients, that it does decrease subluxation on pinch for individuals with stage I and stage II CMC joint osteoarthritis, and that it does not appear to decrease the eventual need for surgery for persons with advanced disease or for those in whom immediate pain relief is not achieved. They also concluded that there do not appear to be any clear indications for any specific type of orthosis, other than patient preference. They noted the different types of orthoses appear to have characteristics that make them more or less attractive to patients depending on the types of activities they routinely perform. No studies specifically addressed the role of exercise in conjunction with orthotic positioning. A second search for primary studies using search terms of splinting AND exercise AND arthritis did not locate any studies that were relevant to the topic.
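The two searches in this scenario differ only in how many terms are joined with the Boolean AND operator, and a small sketch makes that narrowing explicit. The helper names below are hypothetical. The web address is the NCBI E-utilities "esearch" endpoint as we understand it, and it should be checked against current NCBI documentation before use. The code only builds the search string and address; it does not send a request.

```python
from urllib.parse import urlencode

# Assumed endpoint for programmatic PubMed searches (verify before relying on it).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_query(terms):
    """Join search terms with the Boolean AND operator."""
    return " AND ".join(terms)


def build_search_url(terms, max_results=20):
    """Return a PubMed search address for the terms; no request is sent."""
    params = {"db": "pubmed", "term": build_query(terms), "retmax": max_results}
    return f"{ESEARCH_URL}?{urlencode(params)}"


# First search from the scenario: two key words.
broad = build_query(["splinting", "arthritis"])
# Second search: adding a term narrows the results.
narrow = build_query(["splinting", "exercise", "arthritis"])

print(broad)
print(narrow)
print(build_search_url(["splinting", "exercise", "arthritis"]))
```

Each added AND term can only reduce the number of records returned, which is why the second search is the more specific of the two.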

The next step involves appraising the evidence to determine whether it is true (valid) and applies to your individual patient (useful). EBP has led to the proliferation of different critical appraisal tools and critical appraisal.41 This can make the transition into EBP easier because different tools are available for different study designs or depths of appraisal. Although hand therapists can, and should, take advantage of critical appraisal done by others, it is not advisable to abdicate all responsibility for critical appraisal to experts because they may lack content knowledge of hand therapy. It is often during the critical appraisal process that flaws in the clinical logic of a study are revealed. Even a single (critical) design flaw may prevent a study from providing a valid clinical conclusion or may mean that the conclusion does not pertain to the patient of interest. For example, suppose a study compared patients who had one surgical procedure that allowed early mobilization with a comparison group who received hand therapy but could not be mobilized because of a different surgical technique. Any conclusions about the role of hand therapy would be flawed. Clinical sensibility (researchers call this external validity) and quality of the research design (researchers call this internal validity) must be considered simultaneously when determining whether to believe and apply the results of any individual research study.

These skills can be acquired in a number of ways. The classic EBP text written by Sackett10 is highly readable and has assisted many clinicians to gain these skills (a sample chapter of the third edition of the EBP text can be accessed at www.elsevierhealth.com/product.jsp?isbn=9780443074448#samplechaptertext). Law and MacDermid recently published the second edition of Evidence-Based Rehabilitation, which provides detailed information and tools within a rehabilitation context.4 Exemplars of critical appraisal and use of evidence-based practice methods are also available on the web (http://www.fetchbook.info/Evidence_Based_Medicine.html).

Perhaps the best method to develop critical appraisal skills is through participation in journal clubs, where both the merits of the research design and the application of the evidence can be discussed with other practitioners.42-44 Critical appraisal forms (for evaluation of effectiveness studies, diagnostic test studies, and psychometric evaluations of outcome measures) are available from the author and are also published in the textbook Evidence-Based Rehabilitation.4 The Centre for Evidence-Based Medicine (www.cebm.net) also provides excellent guidance, forms, and tools to assist with critical appraisal. In general, critical appraisal focuses on making decisions about the extent to which the study design provides confidence that the results are true. Key issues are random sampling and allocation to groups, blinding raters and patients, valid outcome measures, techniques to assess statistical significance (e.g., sample size and power, analysis methods), and clinical importance (size of the effect).

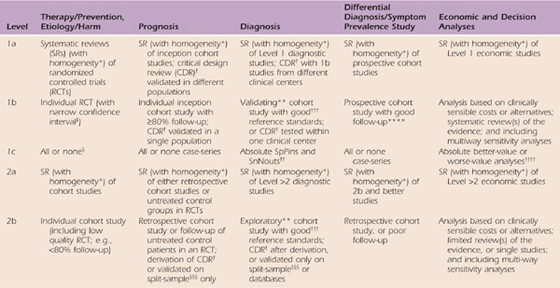

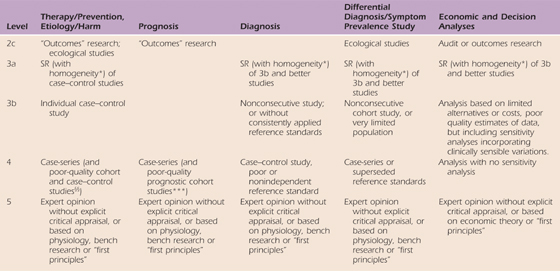

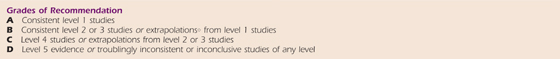

One of the most recognizable features of EBP is the “levels of evidence.” Basically, levels of evidence are simple ordinal scales that create hierarchical categories based on key elements of study design. By nature these are gross divisions, but they have proved to be very useful in increasing awareness of the importance of design quality and in prioritizing and sorting the volume of information available. For example, Cochrane reviews commonly limit themselves to the highest level of evidence as a means of managing the volume of information and ensuring the best evidence is emphasized. The most consistently used and most accessible levels rating scale is available on the Oxford Centre for Evidence-Based Medicine website (Table 143-1). The hierarchy of evidence consists of five levels of evidence with number one being the highest level of evidence.

Table 143-1 Oxford Centre for Evidence-Based Medicine Levels of Evidence

These levels were produced by Phillips R, Ball C, Sackett D, Badenoch D, Straus S, Haynes B, Dawes M since November 1998. Recent update 2001; available from CEBM: www.cebm.net/index.aspx?o=1025.

Notes for Table 143-1

Users can add a minus-sign (-) to denote the level that fails to provide a conclusive answer because of:

• EITHER a single result with a wide confidence interval (such that, for example, an absolute risk reduction in an RCT is not statistically significant but whose confidence intervals fail to exclude clinically important benefit or harm)

• OR a systematic review with troublesome (and statistically significant) heterogeneity.

• Such evidence is inconclusive, and therefore can only generate grade D recommendations.
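The first situation in this note, a wide confidence interval around an absolute risk reduction, can be illustrated with a short calculation. This is a sketch under stated assumptions: the counts are hypothetical, the function name is our own, and the interval uses the simple normal-approximation (Wald) method rather than any method prescribed by the table.

```python
import math


def absolute_risk_reduction(events_control, n_control,
                            events_treated, n_treated, z=1.96):
    """Return (ARR, lower, upper) with a normal-approximation 95% interval."""
    risk_control = events_control / n_control
    risk_treated = events_treated / n_treated
    arr = risk_control - risk_treated
    standard_error = math.sqrt(
        risk_control * (1 - risk_control) / n_control
        + risk_treated * (1 - risk_treated) / n_treated
    )
    return arr, arr - z * standard_error, arr + z * standard_error


# Hypothetical trial: 12 of 40 control patients and 8 of 40 treated
# patients have a poor outcome.
arr, lower, upper = absolute_risk_reduction(12, 40, 8, 40)

# The interval includes zero, so the result is not statistically significant,
# yet its upper end is still compatible with a sizeable benefit.
crosses_zero = lower < 0 < upper
print(round(arr, 3), round(lower, 3), round(upper, 3), crosses_zero)
```

A result like this neither demonstrates nor rules out a clinically important effect, which is the kind of finding the minus sign is meant to flag.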

*By homogeneity we mean a systematic review that is free of worrisome variations (heterogeneity) in the directions and degrees of results between individual studies. Not all systematic reviews with statistically significant heterogeneity need be worrisome, and not all worrisome heterogeneity need be statistically significant. As noted above, studies displaying worrisome heterogeneity should be tagged with a “-” at the end of their designated level.

†Clinical decision rule. (There are algorithms or scoring systems which lead to a prognostic estimation or a diagnostic category.)

‡See reference 2 for advice on how to understand, rate, and use trials or other studies with wide confidence intervals.

§Met when all patients died before the Rx became available, but some now survive on it; or when some patients died before the Rx became available, but none now die on it.

§§By poor quality cohort study we mean one that failed to clearly define comparison groups or failed to measure exposures and outcomes in the same (preferably blinded), objective way in both exposed and nonexposed individuals and/or failed to identify or appropriately control known confounders and/or failed to carry out a sufficiently long and complete follow-up of patients. By poor quality case–control study we mean one that failed to clearly define comparison groups and/or failed to measure exposures and outcomes in the same (preferably blinded), objective way in both cases and controls and/or failed to identify or appropriately control known confounders.

§§§Split-sample validation is achieved by collecting all the information in a single tranche, then artificially dividing this into “derivation” and “validation” samples.

††An “absolute SpPin” is a diagnostic finding whose Specificity is so high that a Positive result rules-in the diagnosis. An “absolute SnNout” is a diagnostic finding whose Sensitivity is so high that a Negative result rules-out the diagnosis.

‡‡Good, better, bad, and worse refer to the comparisons between treatments in terms of their clinical risks and benefits.

†††Good reference standards are independent of the test, and applied blindly or objectively to all patients. Poor reference standards are haphazardly applied, but still independent of the test. Use of a nonindependent reference standard (where the “test” is included in the “reference” or where the “testing” affects the “reference”) implies a level-4 study.

††††Better-value treatments are clearly as good but cheaper, or better at the same or reduced cost. Worse-value treatments are as good and more expensive, or worse and equally or more expensive.

**Validating studies test the quality of a specific diagnostic test, based on prior evidence. An exploratory study collects information and trawls the data (e.g., using a regression analysis) to find which factors are “significant.”

***By poor-quality prognostic cohort study we mean one in which sampling was biased in favor of patients who already had the target outcome, or the measurement of outcomes was accomplished in <80% of study patients, or outcomes were determined in an unblinded, nonobjective way, or there was no correction for confounding factors.

****Good follow-up in a differential diagnosis study is >80%, with adequate time for alternative diagnoses to emerge (e.g., 1–6 months acute, 1–5 years chronic)

○Extrapolations are data used in a situation that has potentially clinically important differences from the original study situation.
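The sensitivity and specificity referred to in the SpPin and SnNout note, and the false positive and false negative rates asked about in a diagnosis question, all come from the same two-by-two table of test results against a reference standard. The sketch below uses hypothetical counts and a function name of our own choosing; it does not report data for any actual clinical test.

```python
def diagnostic_accuracy(true_pos, false_pos, false_neg, true_neg):
    """Summarize a two-by-two table of test result versus reference standard."""
    with_condition = true_pos + false_neg
    without_condition = false_pos + true_neg
    return {
        # Proportion of people with the condition who test positive.
        "sensitivity": true_pos / with_condition,
        # Proportion of people without the condition who test negative.
        "specificity": true_neg / without_condition,
        # Proportion of people without the condition who test positive.
        "false_positive_rate": false_pos / without_condition,
        # Proportion of people with the condition who test negative.
        "false_negative_rate": false_neg / with_condition,
    }


# Hypothetical counts: 50 patients with the condition, 50 without.
result = diagnostic_accuracy(true_pos=45, false_pos=10, false_neg=5, true_neg=40)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

A test with very high specificity produces few false positives, so a positive result helps rule the diagnosis in; a test with very high sensitivity produces few false negatives, so a negative result helps rule it out.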

Level 1 evidence about treatment effectiveness comes from individual high-quality randomized clinical trials (RCTs), or systematic reviews (SRs) of randomized trials. Level 2 evidence comes from low-quality randomized trials or from high-quality prospective cohort studies (or from systematic reviews of these studies). Level 3 evidence comes from case–control studies or systematic reviews of case–control studies. Level 4 evidence comes from case series with no controls. Level 5 evidence comes from expert opinion without critical appraisal or information that is based on physiology or bench research.
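Because the levels form a simple ordinal scale, sorting a set of search results by level is straightforward. The sketch below is a simplification of the paragraph above for treatment questions only; the design labels and function name are our own shorthand, and the full Oxford table contains sublevels and quality criteria that are not represented here.

```python
# Simplified lookup for treatment-effectiveness questions (1 is the highest level).
TREATMENT_LEVELS = {
    "systematic review of randomized trials": 1,
    "high-quality randomized trial": 1,
    "low-quality randomized trial": 2,
    "high-quality prospective cohort study": 2,
    "case-control study": 3,
    "case series": 4,
    "expert opinion": 5,
}


def rank_by_level(designs):
    """Order study designs from the highest level of evidence to the lowest."""
    return sorted(designs, key=lambda design: TREATMENT_LEVELS[design])


# Hypothetical set of sources found for one clinical question.
found = ["expert opinion", "case series", "low-quality randomized trial"]
ranked = rank_by_level(found)
print(ranked[0], "is level", TREATMENT_LEVELS[ranked[0]])
```

A lookup like this only prioritizes reading; the appraisal of validity and relevance still has to be done for each study.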

Prospective randomized clinical trials have been set as the gold standard because the RCT is the only experimental design. That is, through randomization we randomly distribute factors that might influence outcomes across comparison groups, leaving only the intervention of interest as the differentiating factor. In this way, we are confident that observed group differences can be attributed to the intervention’s effects. All other research designs are observational, and despite the strategies available to limit bias, we can never be truly confident that factors other than treatment are not the reason for observed group differences. Despite this, high-quality observational studies have been incredibly important in health care and should not be dismissed. EBP is not restricted to randomized trials and meta-analyses, but rather it involves tracking down the best external evidence to answer our clinical questions. It is important to note that the optimal study design differs by type of question. Thus, when looking at other types of questions like prognosis or diagnosis you should consult the appropriate level of evidence table.

Relevance is the process of deciding whether the results of individual clinical studies, or literature syntheses, can be generalized to your specific patient. Basically you must make the decision about whether the patients evaluated within the clinical research are similar enough to your patient that you might expect to achieve a similar result to that reported in the study. It is important to look at studies and consider subgroups and potential factors that might moderate or change the effect of the intervention. Matching aspects of the PICO(T) question to the specific demographics (and subgroup analyses) presented within a given trial is critical to evaluating the extent to which evidence can be applied to individual patients.

In examining our case scenario we are confident that the results apply to our patient because she is a typical participant in the published studies. That is, as an older female with relatively mild arthritis affecting the CMC joint presenting with pain and reduced functional ability, she is likely to benefit from orthotic positioning as did the majority of participants in the available studies. The research suggests that 3 to 4 weeks of more intensive use followed by use during strenuous activities, particularly those that might involve subluxation of the CMC joint, is advisable. We would discuss with her which activities are difficult in order to determine which orthosis might be the best match for her needs. We anticipate that a trial of different orthoses would be needed to determine which is the best orthosis or combination of orthotic usage. There is little evidence to guide us with respect to a strengthening program. A systematic review of exercise for rheumatoid arthritis indicated improved strength with exercise.45 Based on limited evidence and theoretical knowledge, we would assume that some generic strengthening with a greater focus on muscles to counteract the deforming forces at the CMC joint combined with education on joint protection and pacing would have the best overall effect.

The next step of the process involves integrating the research evidence with your clinical expertise and the patient’s values. Values implies that patient goals, expectations, needs, ethics, culture, religion, and other factors may affect the interpretation of the applicability of the evidence or how it would be applied. Sackett termed this “thoughtful identification and compassionate use of individual patients’ predicaments, rights, and preferences in making clinical decisions about their care.” Even the developers of EBP have acknowledged that the specifics of how to do this step are less clear than are some of the more procedural elements.46 Sackett also said, “External clinical evidence can inform, but never replace individual clinical expertise. It is this expertise that decides which external evidence applies to the individual patient at all and if so how it should be integrated into a clinical decision.” Hand therapists tend to view themselves as being patient-centered and respectful of patient values. However, patient centeredness can be difficult in practice as it involves real shared decision making and time to understand the individual at many different levels and how he or she fits into the social context. We may need to accept interventions or outcomes that do not match our expectations or those achieved within the published studies if patients do not choose that path. We may need to advocate for interventions that exceed reimbursements. Both can be difficult within a health-care environment.

In our scenario we evaluate our personal expertise to determine which types of orthosis we are most skilled at making and also use our experience to match characteristics of different options to the patient’s needs. We consider the specific activities that are limited for the individual and their relative importance and how the patient reacts to an orthosis in terms of appearance and role identity. Integrating this information with evidence of effectiveness allows us to provide effective patient-centered care.

Evaluating the outcomes of the evidence-based decisions made for an individual patient is the fifth, and an important, step in EBP. Rigorous evaluation of the treatment outcomes in individual patients is the basis for an ongoing feedback loop that helps us evolve and learn from our clinical experience. To better understand the effect of our clinical decisions, we must evaluate the results of objective tests, physical performance tests, and patient reports. Studies confirm that practitioners who experience improvements in patient outcomes are more likely to continue with evidence searching.47 It is important when selecting outcomes to consider both the impairments or disabilities being treated and the interventions being used. Short-term outcome measures help us determine whether a specific intervention “worked.” For example, short-term pain relief can be assessed using a numeric pain rating scale before and after intervention. A strengthening program can be assessed using strength measures. If pain relief is an important consideration for the patient, it should be measured. If the therapist felt that strength was limiting function, and the patient was concerned about her functional limitations, then hand strength should be measured. However, it is also important to assess more global effects of hand therapy. Self-report measures of functional disability and ability to achieve specific participation outcomes (e.g., return to work or a specific recreational activity) should also be measured to determine whether the treatment program achieved a meaningful outcome. This textbook has an entire chapter (Chapter 16) on measuring outcomes that can provide further information on how to do this.

With respect to our scenario, we note a previous study that compared the use of the Disabilities of the Arm, Shoulder, and Hand (DASH), Australian/Canadian Hand Osteoarthritis Index (AusCAN), and Patient-Rated Wrist/Hand Evaluation (PRWHE) in patients with CMC arthritis.48 The study suggested that all three measures were valid, providing options for measuring self-reported pain and disability. Because we are interested in pain relief, we decide to choose one of the two scales that have a subscale for pain (PRWHE or AusCAN). Since the latter would cost $400, and the former is free, we choose the PRWHE.
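The selection reasoning in this scenario can be written out as a short sketch: keep only the valid measures that have a pain subscale, then prefer the least costly one. The class and function names below are hypothetical and exist only for illustration; the pain-subscale flags and the two costs come from the scenario, and the cost of the DASH is not stated here, so it is left unknown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class OutcomeMeasure:
    """A self-report measure considered for the CMC arthritis scenario."""
    name: str
    has_pain_subscale: bool
    cost_usd: Optional[float]  # None means the cost is not stated in the text


# Values taken from the scenario; the DASH cost is deliberately left unknown.
CANDIDATES = [
    OutcomeMeasure("DASH", has_pain_subscale=False, cost_usd=None),
    OutcomeMeasure("AusCAN", has_pain_subscale=True, cost_usd=400.0),
    OutcomeMeasure("PRWHE", has_pain_subscale=True, cost_usd=0.0),
]


def choose_measure(candidates: List[OutcomeMeasure]) -> OutcomeMeasure:
    """Pick the cheapest measure that has a pain subscale and a known cost."""
    eligible = [
        m for m in candidates
        if m.has_pain_subscale and m.cost_usd is not None
    ]
    if not eligible:
        raise ValueError("No candidate has both a pain subscale and a known cost")
    return min(eligible, key=lambda m: m.cost_usd)


if __name__ == "__main__":
    print(choose_measure(CANDIDATES).name)
```

In practice the criteria would be set by the patient's priorities and the clinic's resources; cost is used here only because it is the deciding factor named in the scenario.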

It is not uncommon to face misconceptions and even mistrust about EBP.49 Debate over these misconceptions and controversies includes both clinicians and academics and is a common source of editorial comment. Unfortunately, misinformation persists and is most often propagated by those who are reluctant to adopt the principles or change their practice.

One misconception is that practitioners are already using evidence as a basis for their practice. In fact, clinicians often receive more information from industry than from independent research. It is not surprising that businesses should market their products through advertisements or by offering “educational sessions,” but hand therapists should be aware of the vested interests of those businesses and seek independent evidence. It is important to note that studies show that industry-funded research in orthopedics tends to be biased in favor of new industry-supported products;50-52 thus, even research funded by industries should be considered suspect, unless substantiated by independent researchers.

Hand therapists by tradition, and by influence of certification, are highly motivated to attend continuing education courses. In most surveys, therapists report that continuing education is their primary mode of getting new information about clinical practice. Unfortunately, the effect of continuing education on the quality of care provided has been questioned.53 Another common source of information for therapists is their colleagues. Thus, the primary source of information for hand therapists is not research evidence. To ensure a more evidence-based approach to information gathering, therapists should move toward using research evidence, attending continuing education courses in which research evidence is fully integrated, and discussing research evidence with their colleagues.

A second misconception is that EBP takes too much time. Therapists often feel they do not have time to read research articles, or even to try new techniques. While in the long run an evidence-based approach should save time by eliminating ineffective practices, it is realistic to assume that some time is required to find and integrate new evidence. A learning curve should be expected. However, the frequency of searching diminishes over time as the therapist becomes more aware of current best evidence. In addition, the time required can be lessened by the use of literature synthesis resources and point-of-care decision support aids.

Another misconception is that EBP only values RCTs. This is not true. Although EBP values RCTs as the highest level of evidence for treatment effectiveness, it only requires that clinicians search out and apply the best available evidence. There will always be cases for which a weak study or knowledge of anatomy and pathology is the best available evidence. EBP merely requires that hand therapists continue to search for the best evidence and be willing to change their practice when higher quality studies emerge.

Finally, hand therapists are often concerned that the underlying reason for EBP is cutting costs. This is a legitimate concern as hand therapists have experienced some cases in which treatment or individual interventions have been denied with the rationale “lack of evidence.” It is possible to abuse the principles of EBP this way. Too often, people confuse a lack of evidence with a lack of effect. In some cases, funding agencies may be motivated to refuse payment of services when they feel there is insufficient evidence. The best defense against this form of abuse is for hand therapists to be armed with studies supporting the interventions they provide.

EBP is well recognized within the practice of hand therapy; however, resources to assist hand therapists with the process, such as special journal issues and training workshops, have only recently become available. Hand therapists are encouraged to contribute to the profession of hand rehabilitation by conducting and disseminating the results of original clinical research, critically appraising existing evidence, and volunteering in the development of EBP clinical guidelines through professional organizations (Box 143-3).

Box 143-3 Recent EBP Initiatives in Hand Rehabilitation

• The Journal of Hand Therapy now includes levels of evidence in the abstract.

• MacPLUS Rehab has been developed to provide a push-out customized service where hand therapists can be notified about the most recent high-quality evidence in hand therapy (quality rating and clinical relevance ratings performed by experts).

• The textbook, Evidence-Based Rehabilitation, 2nd edition, provides additional detail on EBP specific to rehabilitation practice.

• The American Society of Hand Therapists provides an online journal club.

• The American Society of Hand Therapists is developing evidence-based clinical practice guidelines.

• The Journal of Hand Therapy and Hand Clinics have both dedicated special issues to evidence-based practice providing information specific to the field.

1. MacDermid JC. Evidence-based practice. J Hand Ther. 2004;17:103–104.

2. MacDermid JC. An introduction to evidence-based practice for hand therapists. J Hand Ther. 2004;17:105–117.

3. Szabo RM, MacDermid JC. Introduction to evidence-based practice for hand surgeons and therapists. Hand Clin. 2009;25(1):1–14.

4. Law M, MacDermid JC. Evidence-Based Rehabilitation. 2nd ed. Thorofare, NJ: Slack Incorporated; 2008.

5. Sackett DL. Clinical epidemiology: what, who, and whither. J Clin Epidemiol. 2002;55:1161–1166.

6. Sackett DL. Evidence-based medicine. Semin Perinatol. 1997;21:3–5.

7. Sackett DL. Clinical epidemiology. Am J Epidemiol. 1969;89:125–128.

8. Leeder SR, Sackett DL. The medical undergraduate programme at McMaster University: learning epidemiology and biostatistics in an integrated curriculum. Med J Aust. 1976;2:875, 878–880.

9. Sackett DL, Haynes RB, Tugwell P. Clinical epidemiology. A Basic Science for Clinical Medicine. 1985;1:1–370.

10. Sackett DL, Straus SE, Richardson WS, et al. Evidence-based medicine. How to practice and teach. EBM. 2000;2nd:1–280.

11. Gosling AS, Westbrook JI, Coiera EW. Variation in the use of online clinical evidence: a qualitative analysis. Int J Med Inform. 2003;69:1–16.

12. Haynes RB, Wilczynski N, McKibbon KA, et al. Developing optimal search strategies for detecting clinically sound studies in MEDLINE. J Am Med Inform Assoc. 1994;1:447–458.

13. Haynes RB, Kastner M, Wilczynski NL. Hedges Team. Developing optimal search strategies for detecting clinically sound and relevant causation studies in EMBASE. BMC Med Inform Decis Mak. 2005;5:8.

14. Haynes RB, Wilczynski NL. Optimal search strategies for retrieving scientifically strong studies of diagnosis from Medline: analytical survey. BMJ. 2004;328:1040.

15. Montori VM, Wilczynski NL, Morgan D, Haynes RB. Optimal search strategies for retrieving systematic reviews from Medline: analytical survey. BMJ. 2005;330(7501):1162–1163.

16. Wilczynski NL, Haynes RB. Optimal search strategies for detecting clinically sound prognostic studies in EMBASE: an analytic survey. J Am Med Inform Assoc. 2005 Jul-Aug;12(4):481–485.

17. Wong SS, Wilczynski NL, Haynes RB. Developing optimal search strategies for detecting clinically relevant qualitative studies in MEDLINE. Medinfo. 2004;2004:311–316.

18. Herbert R, Moseley A, Sherrington C. PEDro: a database of randomised controlled trials in physiotherapy. Health Inf Manag. 1998;28:186–188.

19. Sherrington C, Herbert RD, Maher CG, Moseley AM. PEDro. A database of randomized trials and systematic reviews in physiotherapy. Man Ther. 2000;5:223–226.

20. Stefanelli M. Knowledge management to support performance-based medicine. Methods Inf Med. 2002;41:36–43.

21. Bennett S, Tooth L, McKenna K, et al. Perceptions of evidence-based practice: a survey of Australian occupational therapists. Aust Occ Ther J. 2003;50:13–22.

22. Dysart AM, Tomlin GS. Factors related to evidence-based practice among U.S. occupational therapy clinicians. Am J Occup Ther. 2002;56:275–284.

23. Jette DU, Bacon K, Batty C, et al. Evidence-based practice: beliefs, attitudes, knowledge, and behaviors of physical therapists. Phys Ther. 2003;83:786–805.

24. Kamwendo K. What do Swedish physiotherapists feel about research? A survey of perceptions, attitudes, intentions and engagement. Physiother Res Int. 2002;7:23–34.

25. Laupacis A. The future of evidence-based medicine. Can J Clin Pharmacol. 2001;8(Suppl A):6A–9A.

26. Newman M, Papadopoulos I, Sigsworth J. Barriers to evidence-based practice. Intensive Crit Care Nurs. 1998;14:231–238.

27. Palfreyman S, Tod A, Doyle J. Comparing evidence-based practice of nurses and physiotherapists. Br J Nurs. 2003;12:246–253.

28. Young JM, Ward JE. Evidence-based medicine in general practice: beliefs and barriers among Australian GPs. J Eval Clin Pract. 2001;7:201–210.

29. Greenhalgh T. Papers that summarise other papers (systematic reviews and meta-analyses). BMJ. 1997;315:672–675.

30. Hatala R, Keitz S, Wyer P, Guyatt G. Tips for learners of evidence-based medicine: 4. Assessing heterogeneity of primary studies in systematic reviews and whether to combine their results. CMAJ. 2005;172:661–665.

31. The Cochrane Centre—Cochrane Collaboration Handbook Issue 1. 1997

32. Jadad AR, Cook DJ, Jones A, et al. Methodology and reports of systematic reviews and meta-analyses: a comparison of Cochrane reviews with articles published in paper-based journals. JAMA. 1998;280:278–280.

33. Olsen O, Middleton P, Ezzo J, et al. Quality of Cochrane reviews: assessment of sample from 1998. BMJ. 2001;323:829–832.

34. Sackett DL. Cochrane collaboration. BMJ. 1994;309:1514–1515.

35. Shea B, Moher D, Graham I, et al. A comparison of the quality of Cochrane reviews and systematic reviews published in paper-based journals. Eval Health Prof. 2002;25:116–129.

36. Sackett DL. The Cochrane collaboration. ACP J Club. 1994;120(Suppl 3):A11.

37. MacDermid JC. Evidence-based practice. J Hand Ther. 2004;17:103–104.

38. Haynes RB. Of studies, summaries, synopses, and systems: the “4S” evolution of services for finding current best evidence. Evid Based Nurs. 2005;8:4–6.

39. Sackett DL, Straus SE. Finding and applying evidence during clinical rounds: the “evidence cart.” JAMA. 1998;280:1336–1338.

40. Egan MY, Brousseau L. Splinting for osteoarthritis of the carpometacarpal joint: a review of the evidence. Am J Occup Ther. 2007;61:70–78.

41. Massy-Westropp N, Grimmer K, Bain G. A systematic review of the clinical diagnostic tests for carpal tunnel syndrome. J Hand Surg [Am]. 2000;25:120–127.

42. Dirschl DR, Tornetta P III, Bhandari M. Designing, conducting, and evaluating journal clubs in orthopaedic surgery. Clin Orthop Relat Res. 2003.146–157.

43. Kirchhoff KT, Beck SL. Using the journal club as a component of the research utilization process. Heart Lung. 1995;24:246–250.

44. Turner P, Mjolne I. Journal provision and the prevalence of journal clubs: a survey of physiotherapy departments in England and Australia. Physiother Res Int. 2001;6:157–169.

45. Wessel J. The effectiveness of hand exercises for persons with rheumatoid arthritis: a systematic review. J Hand Ther. 2004;17:174–180.

46. Guyatt GH, Haynes RB, Jaeschke RZ, et al. Users’ Guides to the Medical Literature: XXV. Evidence-based medicine: principles for applying the Users’ Guides to patient care. Evidence-Based Medicine Working Group. JAMA. 2000;284:1290–1296.

47. Magrabi F, Westbrook JI, Coiera EW. What factors are associated with the integration of evidence retrieval technology into routine general practice settings? Int J Med Inform. 2007;76:701–709.

48. MacDermid JC, Wessel J, Humphrey R, Ross D, Roth JH. Validity of self-report measures of pain and disability for persons who have undergone arthroplasty for osteoarthritis of the carpometacarpal joint of the hand. Osteoarthritis Cartilage. 2007;15:524–530.

49. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312:71–72.

50. Cunningham MR, Warme WJ, Schaad DC, et al. Industry-funded positive studies not associated with better design or larger size. Clin Orthop Relat Res. 2007;457:235–241.

51. Leopold SS, Warme WJ, Fritz BE, Shott S. Association between funding source and study outcome in orthopaedic research. Clin Orthop Relat Res. 2003.293–301.

52. Shah RV, Albert TJ, Bruegel-Sanchez V, et al. Industry support and correlation to study outcome for papers published in Spine. Spine. 2005;30:1099–1104.

53. Sibley JC, Sackett DL, Neufeld V, et al. A randomized trial of continuing medical education. N Engl J Med. 1982;306:511–515.

* Supported by a New Investigator Award, Canadian Institutes of Health Research.

† Although hand therapists prefer the term orthotic, splinting is more commonly used in the current literature and, thus, more likely to reveal relevant studies; as an alternative you could search (splinting OR orthotic) AND arthritis.
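The search string in the footnote above follows a common pattern: synonyms for one concept are joined with OR inside parentheses, and the result is joined to the next concept with AND. A minimal sketch of that pattern is shown here; the function name is hypothetical, and the output is a plain string that would still need to be adapted to the syntax of whichever database is searched.

```python
from typing import List


def build_query(synonyms: List[str], condition: str) -> str:
    """Join synonyms with OR, then combine the group with a condition using AND."""
    if not synonyms:
        raise ValueError("At least one search term is required")
    or_group = " OR ".join(synonyms)
    return f"({or_group}) AND {condition}"


if __name__ == "__main__":
    # Reproduces the search suggested in the footnote.
    print(build_query(["splinting", "orthotic"], "arthritis"))
```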