Chapter Five Experimental designs and intervention studies

Introduction

Experiments are an important form of intervention studies. A well-designed experiment enables researchers to demonstrate how manipulating one set of variables (independent variables) produces systematic changes in another set of variables (outcome or dependent variables). Experimental designs attempt to ensure that all variables other than the independent variable(s) are controlled; that is, that there are no uncontrolled extraneous variables that might systematically influence or bias changes in the outcome variable(s). Control is most readily exercised in the sheltered environment of research laboratories and this is one of the reasons why these settings are the preferred habitats of experimentalists. Much of clinical experimentation, however, takes place in field settings (e.g. hospitals and clinics), where the phenomena of health, illness and treatment are naturally located. Even in these natural settings researchers can exercise control over extraneous variables. The randomized controlled trial (RCT) is an experimental research design aimed at assessing the effectiveness of a clinical intervention. The experimental approach has also been used more broadly in the ‘hard’ sciences.

Experimental research

Experimental studies and RCTs involve the following steps:

Thus, in an experimental study the researcher actively manipulates the independent variable(s) and monitors outcomes through measurement of the outcome or dependent variable(s).

Assignment of participants into groups

Random assignment

The simplest approach is to assign the participants randomly to independent groups. Each intervention group represents a ‘level’ of the independent variable. Say, for instance, we were interested in the effects of a new drug (we will call this drug A) in helping to relieve the symptoms of depression. We also decide to have a placebo control group, which involves giving patients a capsule identical to A, but not containing the active ingredient.

Given a sample size of 20, we would assign each participant randomly to either the experimental (drug A) or the control (placebo control) group. Random assignment could involve encoding all the 20 names into a computer program and using random numbers to generate two groups of 10. In this case, we would end up with the two equivalent groups:

| Levels of the independent variables | ||

|---|---|---|

| Control group (Placebo) | Experimental group (Drug A) | |

| Number of participants | nc = 10 | ne = 10 |

Here nc and ne refer to the number of participants in each of the groups, given that the total sample size (n) was 20. We can have more than two groups if we want. For example, if we also included another drug ‘B’, the independent variables would have three levels. We would require a total of 30 participants if the group sizes remained at 10.

Matched groups

Random assignment does not guarantee that the two groups will be equivalent. Rather, the argument is that there is no reason that the groups should be different. While this is true in the long run, with small sample sizes chance differences among the groups may distort the results of an experiment or RCT. Matched assignment of the participants into groups minimizes group differences caused by chance variation.

Using the hypothetical example discussed previously, say that the researcher required that the two groups should be equivalent at the start of the study on the measure of depression used in the experiment. Using a matched-groups design the participants would be assessed for level of depression before the treatment and paired for scores from highest to lowest. Subsequently, the two participants in each pair would be randomly assigned to either the experimental group or the placebo control group. In this way, it would be likely that the two groups would have similar average pre-test depression scores.

Different experimental designs

Four types of experimental design will be discussed. These are the post-test only, pre-test/post-test, repeated measures and factorial designs.

Post-test only design

At first it may appear that this would make measurement of change impossible. At an individual level this is certainly true. However, if we assume that the control and experimental groups were initially identical and that no change had occurred in the controls, direct comparison of the post-test scores will indicate the extent of the change. This type of design is fraught with danger in clinical research and should only be used in special circumstances, such as when pre-test measures are impossible or unethical to carry out. The assumptions of initial equivalence and of no change in the control group often may not be supported and, in such cases, interpretation of group differences is difficult and ambiguous.

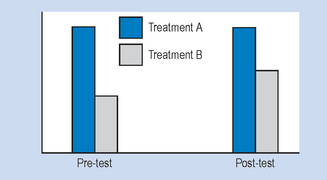

Pre-test/post-test design

In this design, measurements of the outcome or dependent variables are taken both before and after intervention. This allows the measurement of actual changes for individual cases. However, the measurement process itself may produce change, thereby introducing difficulties in the clear attribution of change to the intervention on its own. For example, in an experimental study of weight loss, simply administering a questionnaire concerning dietary habits may lead to changes in those habits by encouraging people to scrutinize their own behaviour and hence modify it. Alternatively, in measures of skill, there may be a practice effect such that, without any intervention, the performance on a second test will improve. In order to overcome these difficulties, many researchers turn to the post-test only design.

Repeated measures

In order to economize with the number of participants required in an experimental design, the researchers will sometimes re-use participants in the design. Thus, at different times the participants may receive, say, drug A or drug B. If it were the case that every subject encountered more than one level of the drug variable or factor, then ‘drug’ would be termed a repeated measures factor. An important consideration is using a ‘counterbalanced’ design to avoid series effects. For example, half the participants should receive drug A first, and half drug B. If all the participants received drug A first and then drug B, the study would not be counterbalanced and we would not be able to determine whether the order of administration of the drugs was important. Time is a common repeated measures factor in many studies. A pre-test/post-test design involves the measurement of the same participants twice. If ‘time’ is included in the analysis of the study, then this is a repeated measures factor. In statistical analysis, repeated measures factors are treated differently from factors where each level is represented by a separate, independent group. This is true both for matched groups, discussed earlier, as well as for repeated measures, discussed above.

Factorial designs

A researcher will often not be content with the manipulation of one intervention factor or variable in isolation. For example, a clinical psychologist may wish to investigate the effectiveness of both the type of psychological therapy and the use of drug therapy for a group of patients. Let us assume that the psychologist was interested in the effects of therapy versus no psychological treatment, and of drug A versus no treatment. These two variables lead to four possible combinations of treatment (see Table 5.1).

Table 5.1 Examples of a factorial design

| Drug A | No drug | |

|---|---|---|

| Rogerian therapy | 1 | 2 |

| No psychological therapy | 3 | 4 |

This design enables us to investigate the separate and combined effects of both independent variables upon the outcome measure(s). In other words, we are looking for interactions among the two (or more) factors. If all possible combinations of the values or levels of the independent variables are included in the study, the design is said to be fully crossed. If one or more is left out, it is said to be incomplete. With an incomplete factorial design, there are some difficulties in determining the complete joint and separate effects of the independent variables.

In order that the terminology in experimental designs is clear, it is instructive to consider the way in which research methodologists would typically describe the example design in Table 5.1. This is a study with two independent variables (sometimes called factors), namely, type of psychological therapy and drug treatment. Each independent variable or factor has two levels or values. In the case of psychological therapy, the two levels are Rogerian therapy and no psychological therapy. This would commonly be described as a 2 by 2 design (each factor having two levels). There are four groups (2 × 2) in the design.

If a third level, drug B, was added to the drug factor, then it would become a 2 by 3 design with six groups required. Two groups, drug B with Rogerian therapy and drug B with no psychological therapy, would be added. It is possible to overdo the number of factors and levels in experimental studies. A 4 × 4 × 4 design would require 64 groups: that is a lot of research participants to find for a study. It is relevant to note that when we evaluate two or more groups over a period of time we are also using a factorial design.

Multiple dependent variables

Just as it is possible to have multiple independent variables in an experimental study, it is also sometimes appropriate to have multiple outcome or dependent measures. For example, in order to assess the effectiveness of an intervention such as icing of an injury, factors such as extent of oedema and area of bruising are both important outcome measures. In this instance, there would be two outcome measures. The use of multiple dependent variables is very common in health research. The outcomes measured are usually evaluated individually, although there are more complex statistical techniques which enable the simultaneous analysis of multiple dependent variables.

External validity of experiments and RCTs

We have already discussed external validity in Chapter 3. There are further criteria for ensuring the generalizability of an investigation, depending on the procedures used and the interaction between the patients and the therapist.

The Rosenthal effect

A series of classic experiments by Rosenthal (1976) and other researchers have shown the importance of expectancy effects, where the expectations of the experimenter are conveyed to the experimental subject. This type of expectancy effect has been termed the Rosenthal effect and is best explained by consideration of some of the original literature in this area.

Rosenthal and his colleagues performed an experiment involving the training of two groups of rats in a maze-learning task. A bright strain and a dull strain of rats, specially bred for the purpose, were trained by undergraduate student experimenters to negotiate the maze. After a suitable training interval, the relative performances of the two groups were compared. Not surprisingly, the ‘bright’ strain significantly outperformed the ‘dull’ strain.

What was surprising is that the two groups of rats were actually genetically identical. The researchers had deceived the student experimenters for the purposes of the study. The students’ expectations of the rats likely had resulted in different handling and recording which had apparently affected the rats’ measured learning outcomes. Rosenthal’s results have been confirmed time and time again in a variety of experimental settings, and with a variety of participants.

If the Rosenthal effect is so pervasive, how can we control for its effects? One method of control is to ensure that the ‘experimenters’ do not know the true purpose of the study; that is, the experimental hypothesis. This can be done by withholding information – just not telling people what you are doing – or by deception.

Deception is riskier and less ethically acceptable. Most organizations engaged in research activity have ethics committees that carefully monitor and limit the use of deception in research. If the people carrying out the data collection are unaware of the research aims being tested we say that they were ‘blind’ to the research aims.

The Hawthorne effect

As well as the impact of the expectations of participants in experimental studies, there is also the issue of whether attention paid to participants in the experimental setting alters the results.

In the late 1920s, a group of researchers at the Western Electric Hawthorne Works in Chicago investigated the effects of lighting, heating and other physical conditions upon the productivity of production workers. Much to the surprise of the researchers, the productivity of the workers kept improving independently of the actual physical conditions. Even with dim lighting, productivity reached new highs. It was obvious that the improvements observed were not due to the mani-pulations of the independent variables themselves, but some other aspect of the research situation. The researchers concluded that there was a facilitation effect caused by the presence of the researchers. This type of effect has been labelled the Hawthorne effect and has been found to be prevalent in many settings. Of particular interest to us is the Hawthorne effect in clinical research settings. It must be considered that even ‘inert’ or useless treatments might result in significant improvements in the patients’ condition under certain circumstances. The existence of the placebo effect reinforces the importance of having adequate controls in applied clinical research. Although we cannot eliminate it, we can at least measure the size of it through observation of the control group, and evaluate the experimental results accordingly.

Controlled research involving human participants

Investigations involving human participants require that researchers should consider both psychological and ethical issues when designing experiments. Human beings respond actively to being studied. When recruited as a research participant, a person might formulate a set of beliefs about the aims of the study and will have expectations about the outcomes. In health research, placebo effects are positive changes in signs and symptoms in people who believe that they are being offered effective treatment by health professionals. These improvements in signs and symptoms are in fact elicited by inert treatments probably mediated by the patients’ expectations. Health professionals who hold strong beliefs about the effectiveness of their treatments communicate this attitude to their patients and possibly increase the placebo effects.

Placebo responses as such are not a problem in everyday health care. Skilled therapists utilize this effect to the patients’ benefit. Of course, charlatans exploit this phenomenon, masking the poor efficacy of their interventions. In health research, however, we need to demonstrate that an intervention has therapeutic effects greater than a placebo, hence the need for a placebo control group. The ideal standard for an experiment is a double-blind design, in which neither the research participants nor the health providers/experimenters know which participants are receiving the active form of the treatment.

It is not always possible, however, to form placebo control groups. In the area of drug research and some other physical treatments it can be possible; but with certain behaviourally-based interventions, in areas such as psychotherapy, physiotherapy or occupational therapy, double-blind placebo interventions may be impossible to implement. For instance, how could a physiotherapist offering a complex and intensive exercise programme be ‘blind’ to the treatment programme being offered? In these situations researchers employ no treatment or traditional intervention control groups when evaluating the safety and effectiveness of a novel intervention.

Also, there are ethical issues that need to be taken into account when using placebo or non-intervention control groups. Where people are suffering from acute or life-threatening conditions, assignment into a placebo or no-treatment control group could have serious consequences. This is particularly true for illnesses where prolonged participation in a placebo control group could have irreversible consequences for the sufferers. Under such circumstances, placebo or no-treatment controls might well be unethical and the selection of a traditional treatment group would be preferred.

Summary

The experimental (RCT) approach to research design involves the active manipulation of the independent variable(s) through the administration of an intervention often with a nonintervention control group and the measurement of outcome through the dependent variable(s). Good experimental design requires careful sampling, assignment and measurement procedures to maximize both internal and external validity.

The Hawthorne and Rosenthal effects are important factors affecting the validity of experimental studies, and we attempt to control for these by ‘blinding’ when ethically possible.

Common experimental designs include the pre-test/post-test, post-test only, repeated measures and factorial approaches. These designs ensure that investigations can show causal effects. More recent approaches to evidence-based health care have emphasized the importance of employing experimental trials wherever possible.

Self-assessment

Explain the meaning of the following terms: