Computers in Medical Imaging

At the completion of this chapter, the student should be able to do the following:

1 Discuss the history of computers and the role of the transistor and microprocessor.

2 Define bit, byte, and word as used in computer terminology.

3 List and explain various computer languages.

4 Contrast the two classifications of computer programs, systems software and applications programs.

5 List and define the components of computer hardware.

Today, the word computer refers to the personal computer, which is primarily responsible for the explosion in computer applications. In addition to scientific, engineering, and business applications, the computer has become evident in everyday life. For example, we know that computers are involved in video games, automatic teller machines (ATMs), and highway toll systems. Other everyday uses include supermarket checkouts, ticket reservation centers, industrial processes, touch-tone telephone systems, traffic lights, and automobile ignition systems.

Computer applications in radiology also continue to grow. The first large-scale radiology application was computed tomography (CT). Magnetic resonance imaging and diagnostic ultrasonography use computers similarly to the way CT imaging systems do. Computers control high-voltage x-ray generators and radiographic control panels, making digital fluoroscopy and digital radiography routine. Telecommunication systems have provided for the development of teleradiology, which is the transfer of images and patient data to remote locations for interpretation and filing. Teleradiology has changed the way human resources are allocated for these tasks.

History of Computers

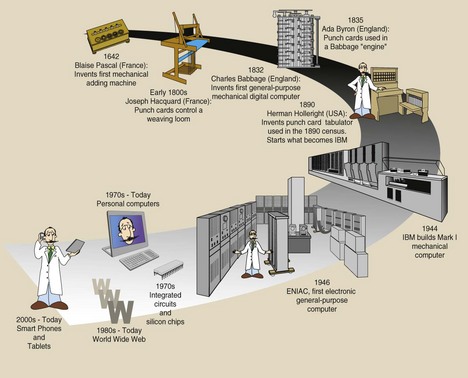

The earliest calculating tool, the abacus (Figure 14-1), was invented thousands of years ago in China and is still used in some parts of Asia. In the 17th century, two mathematicians, Blaise Pascal and Gottfried Leibniz, built mechanical calculators using pegged wheels that could perform the four basic arithmetic functions of addition, subtraction, multiplication, and division.

FIGURE 14-1 The abacus was the earliest calculating tool. (Courtesy Robert J. Wilson, University of Tennessee.)

In 1842, Charles Babbage designed an analytical engine that performed general calculations automatically. Herman Hollerith designed a tabulating machine to record census data in 1890. The tabulating machine stored information as holes on cards that were interpreted by machines with electrical sensors. Hollerith’s company later grew to become IBM.

In 1939, John Atanasoff and Clifford Berry designed and built the first electronic digital computer.

In December 1943, the British built the first fully operational working computer, called Colossus, which was designed to crack encrypted German military codes. Colossus was very successful, but because of its military significance, it was given the highest of all security classifications, and its existence was known only to relatively few people. That classification remained until 1976, which is why it is rarely acknowledged.

The first general-purpose modern computer was developed in 1944 at Harvard University. Originally called the Automatic Sequence Controlled Calculator (ASCC), it is now known simply as the Mark I. It was an electromechanical device that was exceedingly slow and was prone to malfunction.

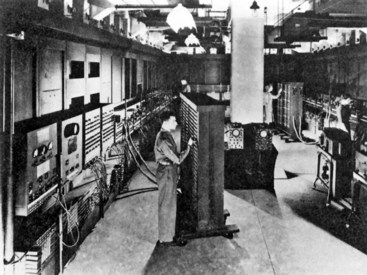

The first general-purpose electronic computer was developed in 1946 at the University of Pennsylvania by J. Presper Eckert and John Mauchly at a cost of $500,000. This computer, called ENIAC (Electronic Numerical Integrator And Computer), contained more than 18,000 vacuum tubes that failed at an average rate of one every 7 minutes (Figure 14-2). Neither the Mark I nor the ENIAC had instructions stored in a memory device.

FIGURE 14-2 The ENIAC (Electronic Numerical Integrator And Computer) occupied an entire room. It was completed in 1946 and is recognized as the first all-electronic, general-purpose digital computer. (Courtesy Sperry-Rand Corporation.)

In 1948, scientists led by William Shockley at the Bell Telephone Laboratories developed the transistor. A transistor is an electronic switch that alternately allows or does not allow electronic signals to pass. It made possible the development of the “stored program” computer and thus the continuing explosion in computer science.

The transistor allowed Eckert and Mauchly of the Sperry-Rand Corporation to develop UNIVAC (UNIVersal Automatic Computer), which appeared in 1951 as the first commercially successful general-purpose, stored program electronic digital computer.

Computers have undergone four generations of development distinguished by the technology of their electronic devices. First-generation computers were vacuum tube devices (1939–1958). Second-generation computers, which became generally available in about 1958, were based on individually packaged transistors.

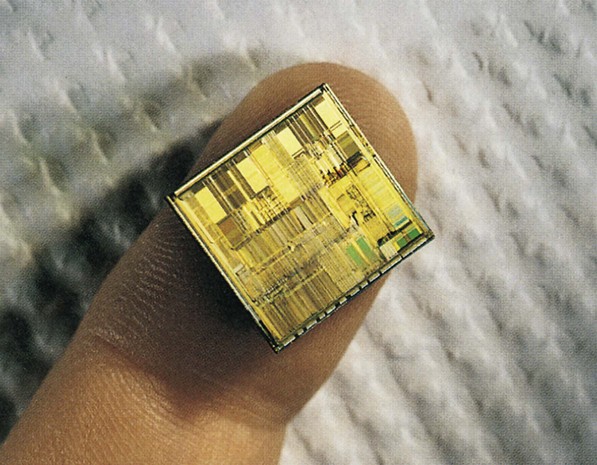

Third-generation computers used integrated circuits (ICs), which consist of many transistors and other electronic elements fused onto a chip—a tiny piece of semiconductor material, usually silicon. These were introduced in 1964. The microprocessor was developed in 1971 by Ted Hoff of Intel Corporation.

The fourth generation of computers, which first appeared in 1975, was an extension of the third generation and incorporated large-scale integration (LSI); this has now been replaced by very large-scale integration (VLSI), which places millions of circuit elements on a chip that measures less than 1 cm (Figure 14-3).

FIGURE 14-3 This Celeron microprocessor incorporates more than 1 million transistors on a chip of silicon that measures less than 1 cm on a side. (Courtesy Intel.)

To most of us today, the word computer means the personal computer (PC), which is configured as a desktop, laptop, or notebook (Figure 14-4).

FIGURE 14-4 Today’s personal computer has exceptional speed, capacity, and flexibility and is used for numerous applications in radiology. (Courtesy Dell Computer Corporation.)

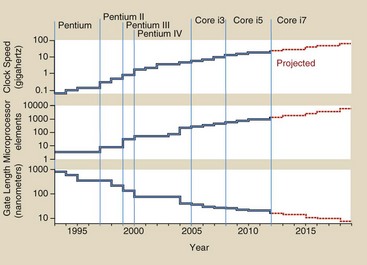

Decades ago, digital computers replaced analog computers, and the word digital is now almost synonymous with computer. A timeline showing the evolution of computers shows how rapidly this technology is advancing (Figure 14-5).

The difference between analog and digital is illustrated in Figure 14-6, which shows two types of watches. An analog watch is mechanical and has hands that move continuously around a dial face. A digital watch contains a computer chip and indicates time with numbers.

Analog and digital meters are used in many commercial and scientific applications. Digital meters are easier to read and can be more precise.

Computer Architecture

A computer has two principal parts: hardware and software. The hardware is everything about the computer that is visible, that is, the physical components of the system, including the various input and output devices. Hardware usually is categorized according to which operation it performs. Operations include input, processing, memory, storage, output, and communications. The software consists of the computer programs that tell the hardware what to do and how to store and manipulate data.

Computer Language

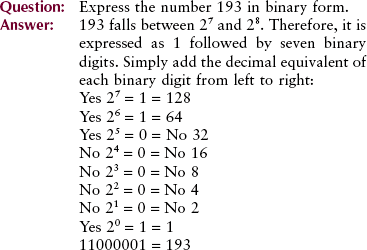

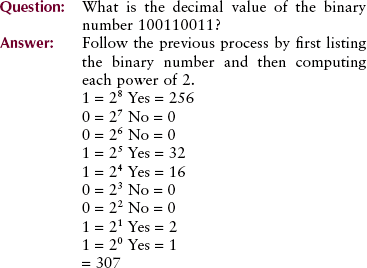

To give a computer instructions on how to store and manipulate data, thousands of computer languages have been developed. Higher level languages typically allow users to input short English-based instructions. All computer languages translate what the user inputs into a series of 1s and 0s that the computer can understand. Although the computer can accept and report alphabetic characters and numeric information in the decimal system, it operates in the binary system. In the decimal system, the system we normally use, 10 digits (0–9) are used. The word digit comes from the Latin for “finger” or “toe”. The origin of the decimal system is obvious (Figure 14-7).

Other number systems have been formulated to many other base values. The duodecimal system, for instance, has 12 digits. It is used to describe the months of the year and the hours in a day and night. Computers operate on the simplest number system of all—the binary number system. It has only two digits, 0 and 1.

Binary Number System

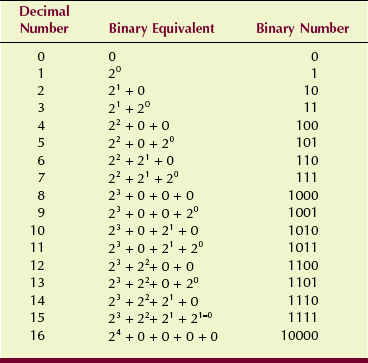

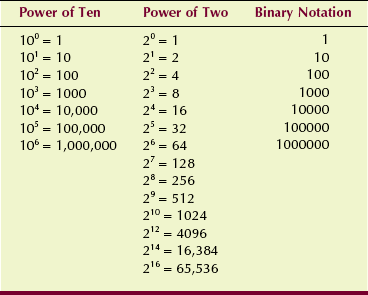

Counting in the binary number system goes from 0 to 1 and then starts over with an additional digit (Table 14-1). The system includes only two digits, 0 and 1, and the computer performs all operations by converting alphabetic characters, decimal values, and logic functions to binary values.

Even the computer’s instructions are stored in binary form. In this way, although binary numbers may become exceedingly long, computation can be handled by properly adjusting the thousands of flip-flop circuits in the computer.

In the binary number system, 0 is 0 and 1 is 1, but there the direct relationship with the decimal number system ends. It ends at 1 because the 1 in binary notation comes from $2^0$. Recall that any number raised to the zero power is 1; therefore, $2^0$ is 1.

In binary notation, the decimal number 2 is equal to $2^1$ plus 0. This is expressed as 10. The decimal number 3 is equal to $2^1$ plus $2^0$, or 11 in binary form; 4 is $2^2$ plus no $2^1$ plus no $2^0$, or 100 in binary form. Each time it is necessary to raise 2 to an additional power to express a number, the number of binary digits increases by one.
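The conversion described above can be sketched in a few lines of Python. This is only an illustration: the function name `to_binary` is hypothetical, and the repeated-division method is one common way to find which powers of 2 make up a number.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative decimal integer to its binary digits."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        # The remainder tells us whether the current power of 2 is present.
        digits.append(str(n % 2))
        n //= 2
    # Remainders come out lowest power first, so reverse them.
    return "".join(reversed(digits))


for value in (0, 1, 2, 3, 4):
    print(value, "->", to_binary(value))
```

Running the loop shows 2 as 10, 3 as 11, and 4 as 100, matching the values worked out in the text.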

Just as we know the meaning of the powers of 10, it is necessary to recognize the powers of 2. Power of 2 notation is used in radiologic imaging to describe image size, image dynamic range (shades of gray), and image storage capacity. Table 14-2 reviews these power notations. Note the following similarity. In both power notations, the number of 0s to the right of 1 equals the value of the exponent.

Digital images are made of discrete picture elements, pixels, arranged in a matrix. The size of the image is described in the binary number system by power of 2 equivalents. Most images measure 256 × 256 ($2^8$) to 1024 × 1024 ($2^{10}$) for computed tomography (CT) and magnetic resonance imaging (MRI). The 1024 × 1024 matrix is used in digital fluoroscopy. Matrix sizes of 2048 × 2048 ($2^{11}$) and 4096 × 4096 ($2^{12}$) are used in digital radiography.
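As a rough illustration of how matrix size relates to powers of 2, the following Python sketch reports the exponent and the total number of pixels for a square matrix. The function name `matrix_info` is an assumption made for this example.

```python
from typing import Tuple


def matrix_info(side: int) -> Tuple[int, int]:
    """Return (exponent, pixel_count) for a square matrix whose side is a power of 2."""
    if side <= 0 or side & (side - 1) != 0:
        raise ValueError("side must be a positive power of 2")
    exponent = side.bit_length() - 1  # e.g. 256 -> 8 because 2**8 == 256
    return exponent, side * side


for side in (256, 1024, 2048, 4096):
    exponent, pixels = matrix_info(side)
    print(f"{side} x {side}: side = 2^{exponent}, {pixels:,} pixels")
```

Doubling the side of the matrix quadruples the number of pixels, which is why the larger digital radiography matrices need far more storage than a CT image.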

Bits, Bytes, and Words

In computer language, a single binary digit, 0 or 1, is called a bit. Depending on the microprocessor, a string of 8, 16, 32, or 64 bits is manipulated simultaneously.

The computer uses as many bits as necessary to express a decimal digit, depending on how it is programmed. The 26 characters of the alphabet and other special characters are usually encoded by 8 bits.

Bits often are grouped into bunches of eight called bytes. Computer capacity is expressed by the number of bytes that can be accommodated.
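To make the idea of encoding a character in 8 bits concrete, here is a minimal Python sketch. It assumes the common ASCII character codes, which the text does not name, and the helper `char_to_bits` is hypothetical.

```python
def char_to_bits(ch: str) -> str:
    """Return the 8-bit pattern for a single character (ASCII codes assumed)."""
    code = ord(ch)
    if code > 255:
        raise ValueError("character does not fit in one byte")
    return format(code, "08b")  # pad with leading zeros to 8 bits


for ch in "CT":
    print(ch, "->", char_to_bits(ch))
```

Each character occupies exactly one byte, so a 10-character word would need 10 bytes under this assumed encoding.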

One kilobyte (kB) is equal to 1024 bytes. Note that kilo is not metric in computer use. Instead, it represents $2^{10}$, or 1024. The computers typically used in radiology departments have capacities measured in megabytes (MB) or, more likely, gigabytes (GB), where 1 GB = 1 kB × 1 kB × 1 kB = $2^{10}$ × $2^{10}$ × $2^{10}$ = $2^{30}$ = 1,073,741,824 bytes and 1 GB = 1024 MB.
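The arithmetic behind these binary capacity units can be checked directly. The constant names in this Python sketch are illustrative only.

```python
# Binary capacity units expressed as powers of 2 (names are illustrative).
KB = 2 ** 10   # 1,024 bytes
MB = 2 ** 20   # 1,024 kB
GB = 2 ** 30   # 1,024 MB
TB = 2 ** 40   # 1,024 GB

print(f"1 kB = {KB:,} bytes")
print(f"1 MB = {MB:,} bytes")
print(f"1 GB = {GB:,} bytes")
print(f"1 TB = {TB:,} bytes")

# 1 GB is three factors of 1 kB multiplied together.
print(KB * KB * KB == GB)
```

Each step up the scale multiplies the capacity by 1024 rather than by 1000.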

Depending on the computer configuration, two bytes usually constitute a word. In the case of a 16-bit microprocessor, a word would consist of 16 consecutive bits of information that are interpreted and shuffled about the computer as a unit. Sometimes half a byte is called a “nibble,” and two words is a “chomp”! Each word of data in memory has its own address. In most computers, a 32-bit or 64-bit word is the standard word length.

Computer Programs

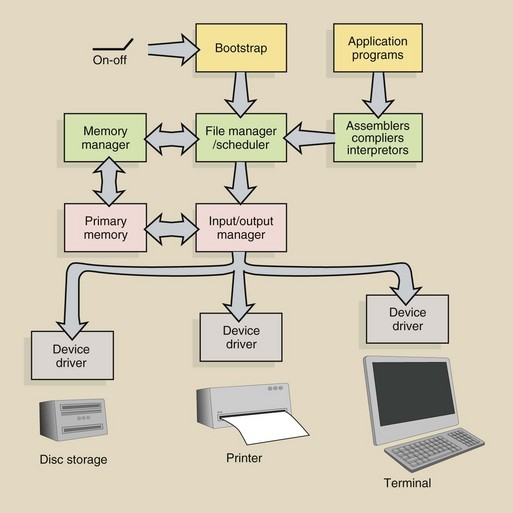

The sequence of instructions developed by a software programmer is called a computer program. It is useful to distinguish two classifications of computer programs: systems software and application programs.

Systems software consists of programs that make it easy for the user to operate a computer to its best advantage.

Application programs are those written in a higher level language expressly to carry out some user function. Most computer programs as we know them are application programs.

Systems Software

The computer program most closely related to the system hardware is the operating system. The operating system is that series of instructions that organizes the course of data through the computer to the solution of a particular problem. It makes the computer’s resources available to application programs.

Commands such as “open file” to begin a sequence or “save file” to store some information in secondary memory are typical of operating system commands. Mac OS, Windows, and Unix are popular operating systems.

Computers ultimately understand only 0s and 1s. To relieve humans from the task of writing programs in this form, other programs called assemblers, compilers, and interpreters have been written. These types of software provide a computer language that can be used to communicate between the language of the operating system and everyday language.

An assembler is a computer program that recognizes symbolic instructions such as “subtract (SUB),” “load (LD),” and “print (PT)” and translates them into the corresponding binary code. Assembly is the translation of a program written in symbolic, machine-oriented instructions into machine language instructions.
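The translation step performed by an assembler can be sketched with a toy example in Python. The 4-bit opcode values and the one-operand instruction format below are invented for illustration and do not correspond to any real processor.

```python
# Hypothetical opcode table: the bit patterns are made up for this example.
OPCODES = {"LD": 0b0001, "SUB": 0b0010, "PT": 0b0011}


def assemble(line: str) -> str:
    """Translate one symbolic instruction, e.g. 'LD 5', into an 8-bit string."""
    mnemonic, operand_text = line.split()
    opcode = OPCODES[mnemonic.upper()]
    operand = int(operand_text)
    if not 0 <= operand <= 15:
        raise ValueError("operand must fit in 4 bits")
    # High 4 bits hold the opcode, low 4 bits hold the operand.
    return format(opcode, "04b") + format(operand, "04b")


program = ["LD 5", "SUB 3", "PT 0"]
for line in program:
    print(f"{line:6s} -> {assemble(line)}")
```

A real assembler also handles labels, addresses, and many more instructions, but the core idea is the same table lookup from symbol to binary code.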

Compilers and interpreters are computer programs that translate an application program from its high-level language, such as Java, BASIC, C++, or Pascal, into a form that is suitable for the assembler or into a form that is accepted directly by the computer. Interpreters make program development easier because they are interactive. Compiled programs run faster because the compiler creates a separate machine language program.

Application Programs

Computer programs that are written by a computer manufacturer, by a software manufacturer, or by the users themselves to guide the computer to perform a specific task are called application programs. Examples are iTunes, Spider Solitaire, and Excel.

Application programs allow users to print mailing lists, complete income tax forms, evaluate financial statements, or reconstruct images from x-ray transmission patterns. They are written in one of many high-level computer languages and then are translated through an interpreter or a compiler into a corresponding machine language program that subsequently is executed by the computer.

The diagram in Figure 14-8 illustrates the flow of the software instructions from turning the computer on to completing a computation. When the computer is first turned on, nothing is in its temporary memory except a program called a bootstrap. This is frozen permanently in ROM. When the computer is started, it automatically runs the bootstrap program, which is capable of transferring other necessary programs off the disc and into the computer memory.

The bootstrap program loads the operating system into primary memory, which in turn controls all subsequent operations. A machine language application program likewise can be copied from the disc into primary memory, where prescribed operations occur. After completion of the program, results are transferred from primary memory to an output device under the control of the operating system.

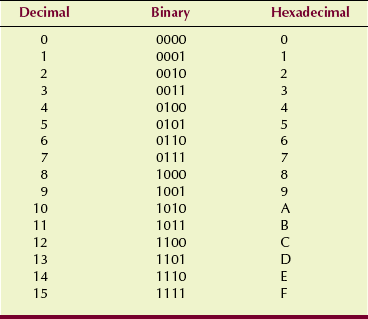

Hexadecimal Number System

The hexadecimal number system is used by assembly level applications. As you have seen, assembly language acts as a midpoint between the computer’s binary system and the user’s human language instructions. The set of hexadecimal numbers is 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, and F. Each of these symbols is used to represent a binary number or, more specifically, a set of four bits. Therefore, because it takes eight bits to make a byte, a byte can be represented by two hexadecimal numbers. The set of hexadecimal numbers corresponds to the binary numbers for 0 to 15, as is shown in Table 14-3.
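The relationship between a byte and its two hexadecimal digits can be illustrated as follows. This is a minimal Python sketch, and `byte_to_hex` is a hypothetical helper name.

```python
HEX_DIGITS = "0123456789ABCDEF"


def byte_to_hex(value: int) -> str:
    """Represent one byte (0 to 255) as two hexadecimal digits."""
    if not 0 <= value <= 255:
        raise ValueError("value must fit in one byte")
    # Split the byte into its upper and lower groups of four bits.
    high, low = divmod(value, 16)
    return HEX_DIGITS[high] + HEX_DIGITS[low]


for value in (0, 15, 165, 255):
    print(f"{value:3d} = {format(value, '08b')} = {byte_to_hex(value)}")
```

Because each hexadecimal digit stands for exactly four bits, the eight-bit pattern 10100101 is written compactly as A5.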

High-level programming languages allow the programmer to write instructions in a form that approaches human language, with the use of words, symbols, and decimal numbers rather than the 1s and 0s of machine language. A brief list of the more popular programming languages is given in Table 14-4. With the use of one of these high-level languages, a set of instructions can be written that will be understood by the system software and will be executed by the computer through its operating system.

TABLE 14-4

| Language | Date Introduced | Description |
| --- | --- | --- |
| FORTRAN | 1956 | First successful programming language; used for solving engineering and scientific problems |
| COBOL | 1959 | Minicomputer and mainframe computer applications in business |
| ALGOL | 1960 | Especially useful in high-level mathematics |
| BASIC | 1964 | Most frequently used with microcomputers and minicomputers; science, engineering, and business applications |
| BCPL | 1965 | Development-stage language |
| B | 1969 | Development-stage language |
| C | 1970 | Combines the power of assembly language with the ease of use and portability of high-level language |
| Pascal | 1971 | High-level, general-purpose language; used for teaching structured programming |
| ADA | 1975 | Based on Pascal; used by the U.S. Department of Defense |
| VisiCalc | 1978 | First electronic spreadsheet |
| C++ | 1980 | Response to complexity of C; incorporates object-oriented programming methods |
| QuickBASIC | 1985 | Powerful high-level language with advanced user features |
| Visual C | 1992 | Visual language programming methods; design environments |
| Visual BASIC | 1993 | Visual language programming methods; design environments; advanced user-friendly features |

FORTRAN

The oldest language for scientific, engineering, and mathematical problems is FORTRAN (FORmula TRANslation). It was the prototype for today’s algebraic languages, which are oriented toward computational procedures for solving mathematical and statistical problems.

Problems that can be expressed in terms of formulas and equations are sometimes called algorithms. An algorithm is a step-by-step process used to solve a problem, much in the way a recipe is used to bake a cake, except that the algorithm is more detailed, that is, it would include instructions to remove the shell from the egg. FORTRAN was developed in 1956 by IBM in conjunction with some major computer users.

BASIC

Developed at Dartmouth College in 1964 as a first language for students, BASIC (Beginner's All-purpose Symbolic Instruction Code) is an algebraic programming language. It is an easy-to-learn, interpreter-based language. BASIC contains a powerful arithmetic facility, several editing features, a library of common mathematical functions, and simple input and output procedures.

QuickBASIC

Microsoft developed BASIC into a powerful programming language that can be used for commercial applications and for quick, single-use programs. QuickBASIC’s advanced features for editing, implementing, and decoding make it an attractive language for professional and amateur programmers.

COBOL

One high-level, procedure-oriented language designed for coding business data processing problems is COBOL (COmmon Business Oriented Language). A basic characteristic of business data processing is the existence of large files that are updated continuously. COBOL provides extensive file-handling, editing, and report-generating capabilities for the user.

Pascal

Pascal is a high-level, general-purpose programming language that was developed in 1971 by Niklaus Wirth of the Federal Institute of Technology at Zürich, Switzerland. A general-purpose programming language is one that can be put to many different applications. Currently, Pascal is the most popular programming language for teaching programming concepts, partly because its syntax is relatively easy to learn and closely resembles that of the English language in usage.

C, C++

C is considered by many to be the first modern “programmer’s language.” It was designed, implemented, and developed by real working programmers and reflects the way they approached the job of programming. C is thought of as a middle-level language because it combines elements of high-level languages with the functionality of an assembler (low-level) language.

In response to the need to manage greater complexity, Bjarne Stroustrup developed C++ in 1980, initially calling it “C with Classes.” C++ contains the entire C language, as well as many additions designed to support object-oriented programming (OOP).

When a program exceeds approximately 30,000 lines of code, it becomes so complex that it is difficult to grasp as a single object. Therefore, OOP is a method of dividing up parts of the program into groups, or objects, with related data and applications, in the same way that a book is broken into chapters and subheadings to make it more readable.
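The idea of grouping related data and operations into an object can be sketched as follows. The example uses Python rather than C++, and the class `ImageMatrix` with its methods is purely hypothetical.

```python
class ImageMatrix:
    """Groups pixel data with the operations that act on it."""

    def __init__(self, rows: int, cols: int) -> None:
        self.rows = rows
        self.cols = cols
        # All pixels start at 0.
        self.pixels = [[0] * cols for _ in range(rows)]

    def set_pixel(self, row: int, col: int, value: int) -> None:
        self.pixels[row][col] = value

    def pixel_count(self) -> int:
        return self.rows * self.cols

    def max_value(self) -> int:
        return max(max(row) for row in self.pixels)


image = ImageMatrix(2, 3)
image.set_pixel(1, 2, 7)
print(image.pixel_count(), image.max_value())
```

The rest of a program can work with the image through these methods without needing to know how the pixel data are stored, much as a reader can use a chapter title without rereading the chapter.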

Visual C++, Visual Basic

Visual programming languages are more recent languages, and they are under continuing development. They are designed specifically for the creation of Windows applications. Although Visual C++ and Visual Basic use their original respective programming language code structures, both were developed with the same goal in mind: to create user-friendly Windows applications with minimal effort from the programmer.

In theory, the most inexperienced programmer should be able to create complex programs with visual languages. The idea is to have the programmer design the program in a design environment without ever really writing extensive code. Instead, the visual language creates the code to match the programmer’s design.

Macros

Most spreadsheet and word processing applications offer built-in programming commands called macros. These work in the same way as commands in programming languages, and they are used to carry out user-defined functions or a series of functions in the application. One application that offers a very good library of macro commands is Excel, a spreadsheet. The user can create a command to manipulate a series of data by performing a specific series of steps.

Macros can be written or they can be designed in a fashion similar to that of visual programming. This process of designing a macro is called recording. The programmer turns the macro recorder on, carries out the steps he or she wants the macro to carry out, and stops the recorder. The macro now knows exactly what the programmer wants implemented and can run the same series of steps repeatedly.
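The recording process can be imitated in a short Python sketch. Real spreadsheet macro recorders work differently in detail; the `MacroRecorder` class here is a hypothetical stand-in that simply remembers each step performed while recording is on and replays the steps later.

```python
class MacroRecorder:
    """Remembers the steps performed while recording and replays them."""

    def __init__(self) -> None:
        self.steps = []
        self.recording = False

    def start(self) -> None:
        self.steps = []
        self.recording = True

    def stop(self) -> None:
        self.recording = False

    def perform(self, step, data):
        """Carry out one step now, and remember it if recording is on."""
        if self.recording:
            self.steps.append(step)
        return step(data)

    def run(self, data):
        """Replay every recorded step, in order, on new data."""
        for step in self.steps:
            data = step(data)
        return data


def double_all(values):
    return [v * 2 for v in values]


recorder = MacroRecorder()
recorder.start()
result = recorder.perform(sorted, [3, 1, 2])
result = recorder.perform(double_all, result)
recorder.stop()

print(result)
print(recorder.run([5, 4]))
```

Once recorded, the same sort-then-double sequence can be applied to any new list of values with a single call.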

Other program languages have been developed for other purposes. LOGO is a language that was designed for children. ADA is the official language approved by the U.S. Department of Defense for software development. It is used principally for military applications and artificial intelligence. Java is a language that was developed in 1995 and has become very useful in web application programming as well as application software. Additionally, HTML (HyperText Markup Language) is the predominant language used to format web pages.

Components

The central processing unit (CPU) in a computer is the primary element that allows the computer to manipulate data and carry out software instructions. Examples of currently available CPUs are the Intel Core i5 and AMD Phenom II. In microcomputers, this is often referred to as the microprocessor. Figure 14-9 is a photomicrograph of the Pentium microprocessor manufactured by the Intel Corporation. The Pentium processor is designed for large, high-performance, multiuser or multitasking systems.

FIGURE 14-9 The width of the conductive lines in this microprocessor chip is 1.5 µm. (Courtesy Intel.)

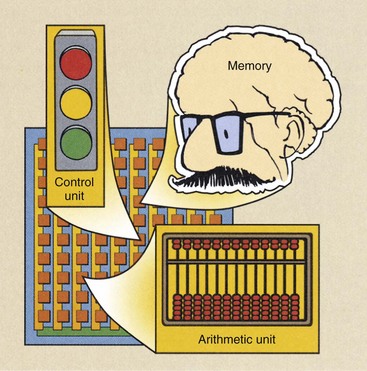

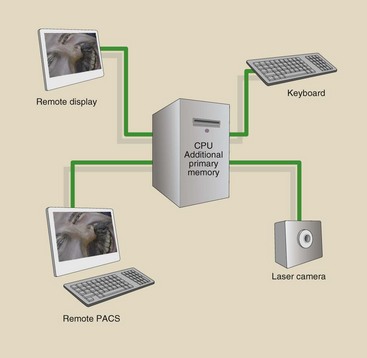

A computer’s processor (CPU) consists of a control unit and an arithmetic/logic unit (ALU). These two components and all other components are connected by an electrical conductor called a bus (Figure 14-10). The control unit tells the computer how to carry out software instructions, which direct the hardware to perform a task. The control unit directs data to the ALU or to memory. It also controls data transfer between main memory and the input and output hardware (Figure 14-11).

FIGURE 14-10 The central processing unit (CPU) contains a control unit, an arithmetic unit, and sometimes memory.

FIGURE 14-11 The control unit is a part of the central processing unit (CPU) that is directly connected with additional primary memory and various input/output devices.

The speed of these tasks is determined by an internal system clock. The faster the clock, the faster is the processing. Microcomputer processing speeds usually are defined in megahertz (MHz), where 1 MHz equals 1 million cycles per second. Today’s microcomputers commonly run at up to several gigahertz (GHz; 1 GHz = 1000 MHz).
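The link between clock frequency and the time available for each cycle is simple arithmetic, sketched here in Python. The function name `cycle_time_ns` is an assumption for this example.

```python
def cycle_time_ns(frequency_hz: float) -> float:
    """Return the duration of one clock cycle in nanoseconds."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1e9 / frequency_hz  # 1 second = 1e9 nanoseconds


MHZ = 1_000_000
GHZ = 1000 * MHZ

for label, freq in (("1 MHz", 1 * MHZ), ("1 GHz", 1 * GHZ), ("3 GHz", 3 * GHZ)):
    print(f"{label}: {cycle_time_ns(freq):.3f} ns per cycle")
```

A 1-MHz clock allows 1000 ns per cycle, whereas a 1-GHz clock allows only 1 ns, which is why a faster clock means faster processing.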

Computers typically measure processing speed as MIPS (millions of instructions per second). Typical speeds range from 1000 MIPS to 160,000 MIPS.

The ALU performs arithmetic or logic calculations, temporarily holds the results until they can be transferred to memory, and controls the speed of these operations. The speed of the ALU is controlled by the system clock.

Memory

Computer memory is distinguished from storage by its function. Whereas memory is more active, storage is more archival. This active storage is referred to as memory, primary storage, internal memory, or random access memory (RAM). Random access means data can be stored or accessed at random from anywhere in main memory in approximately equal amounts of time regardless of where the data are located.

The contents of RAM are temporary, and RAM capacities vary widely in different computer systems. RAM capacity usually is expressed as megabytes (MB), gigabytes (GB), or terabytes (TB), referring to millions, billions, or trillions of characters stored.

Random access memory chips are manufactured with the use of complementary metal-oxide semiconductor (CMOS) technology. These chips are arranged as single in-line memory modules (SIMMs).

The two types of RAM are dynamic RAM (DRAM) and static RAM (SRAM). DRAM chips are more widely used, but SRAM chips are faster. SRAM retains its contents without the periodic refreshing that DRAM requires, as long as power is supplied, but it is more expensive than DRAM and requires more space and power.

Special high-speed circuitry areas called registers are found in the control unit and the ALU. Registers are contained in the processor and hold information that will be used immediately. Main memory is located outside the processor and holds material that will be used “a little bit later.”

Read-only memory (ROM) contains information supplied by the manufacturer, called firmware, that cannot be written on or erased. One ROM chip contains instructions that tell the processor what to do when the system is first turned on and the “bootstrap program” is initiated. Another ROM chip helps the processor transfer information among the screen, the printer, and other peripheral devices to ensure that all units are working correctly. These instructions are called ROM BIOS (basic input/output system). ROM is also one of the factors involved in making a “clone” PC; for instance, to be a true Dell clone, a computer must have the same ROM BIOS as a Dell computer.

Three variations of ROM chips are used in special situations: PROM, EPROM, and EEPROM. PROM (programmable read-only memory) chips are blank chips to which a user, with special equipment, can write programs. After the program is written, it cannot be erased.

EPROM (erasable programmable read-only memory) chips are similar to PROM chips except that the contents are erasable with the use of a special device that exposes the chip to ultraviolet light. EEPROM (electrically erasable programmable read-only memory) can be reprogrammed with the use of special electrical impulses.

The motherboard or system board is the main circuit board in a system unit. This board contains the microprocessor, any coprocessor chips, RAM chips, ROM chips, other types of memory, and expansion slots, which allow additional circuit boards to be added. All main memory is addressed, that is, each memory location is designated by a unique label in which a character of data or part of an instruction is stored during processing. Each address is similar to a post office address that allows the computer to access data at specific places in memory without disturbing the rest of the memory.

A sequence of memory locations may contain steps of a computer program or a string of data. The control unit keeps track of where current program instructions are stored, which allows the computer to read or write data to other memory locations and then return to the current address for the next instruction. All data processed by a computer pass through main memory. The most efficient computers, therefore, have enough main memory to store all data and programs needed for processing.
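The way the control unit steps through addressed memory can be sketched with a toy machine in Python. The four instructions (LOAD, ADD, STORE, HALT) and the memory layout are invented for illustration and do not describe any real processor.

```python
def run(memory: list) -> list:
    """Execute a toy program stored in the same memory as its data."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator: holds the value being worked on
    while True:
        operation, address = memory[pc]
        pc += 1  # advance to the next instruction before executing
        if operation == "LOAD":
            acc = memory[address]
        elif operation == "ADD":
            acc += memory[address]
        elif operation == "STORE":
            memory[address] = acc
        elif operation == "HALT":
            return memory
        else:
            raise ValueError(f"unknown operation: {operation}")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
memory = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    2,             # address 4
    3,             # address 5
    0,             # address 6: the result goes here
]
print(run(memory)[6])
```

The program counter lets the machine read data at addresses 4 and 5, write to address 6, and still return to the correct address for its next instruction.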

Usually, secondary memory is required in the form of compact discs (CDs), digital video discs (DVDs), hard disc drives, and solid-state storage devices. Secondary memory functions similarly to a filing cabinet—you store information there until you need to retrieve it.

After the appropriate file has been retrieved, it is copied into primary memory, where the user works on it. An old version of the file remains in secondary memory while the copy of the file is being edited or updated. When the user is finished with the file, it is taken out of primary memory and is returned to secondary memory (the filing cabinet), where the updated file replaces the old file.

The word file is used to refer to a collection of data or information that is treated as a unit by the computer. Each computer file has a unique name, and PC-based file names have extension names added after a period. For example, .DOC is added by a word processing program to files that contain word processing documents (e.g., REPORT.DOC).

Common file types are program files, which contain software instructions; data files, which contain data, not programs; image files, which contain digital images; audio files, which contain digitized sound; and video files, which contain digitized video images.
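A program can use the extension to decide how to treat a file, as in this Python sketch. Only .DOC comes from the text; the other extensions and the mapping to file types are assumptions chosen for illustration.

```python
import os

# Illustrative mapping; only .DOC is mentioned in the text.
FILE_TYPES = {
    ".EXE": "program file",
    ".DOC": "data file",
    ".JPG": "image file",
    ".WAV": "audio file",
    ".MP4": "video file",
}


def classify(filename: str) -> str:
    """Return the file type suggested by the extension after the period."""
    _, extension = os.path.splitext(filename)
    return FILE_TYPES.get(extension.upper(), "unknown")


for name in ("REPORT.DOC", "chest.jpg", "notes"):
    print(name, "->", classify(name))
```

A file with no extension, or with one the table does not list, is reported as unknown rather than guessed at.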

Storage

To understand storage hardware, it is necessary to understand the terms used to measure the capacity of storage devices. A bit describes the smallest unit of measure, a binary digit 0 or 1. Bits are combined into groups of 8 bits, called a byte.

A byte represents one character, digit, or other value. A kilobyte represents 1024 bytes. A megabyte (MB) is approximately 1 million bytes. A gigabyte (GB) is approximately 1 billion bytes and is used to measure the capacity of hard disc drives and sometimes RAM. A terabyte (TB) is 1024 GB, or approximately 1 thousand billion bytes; higher capacity hard drives are often measured in terabytes.

The most common types of secondary storage devices are CDs, DVDs, Blu-ray Discs, hard discs, and flash drives. Magnetic tape used to be a common storage medium for large computer systems but is now used primarily on large systems for backup and archiving of historical records, such as patient images. The “floppy disc” is also history. CDs, DVDs, and flash drives are today’s common transferable storage devices.

The CD stores data and programs as tiny indentations, or pits, on a disc-shaped, flat piece of Mylar plastic. These pits are read by a laser while the disc is spinning. The CD is removable from the computer and transferable (Figure 14-12).

The most common CD is nearly 5 inches in diameter; however, smaller CDs are also available. Data are recorded on a CD in rings called tracks, which are invisible, closed concentric rings. The density of tracks on a CD is expressed in tracks per inch (TPI). The higher the TPI, the more data a CD can hold.

Each track is divided into sectors, which are invisible sections used by the computer for storage reference. The number of sectors on a CD varies according to the recording density, which refers to the number of bits per inch that can be written to the CD. CDs also are defined by their capacity, which ranges to several GB. A CD drive is the device that holds, spins, reads data from, and writes data to a CD. DVDs and Blu-ray Discs operate in the same manner as CDs but offer higher capacity. All of these devices are commonly known as optical storage devices.

A flash drive, sometimes called a jump drive or jump stick, is the newest of the small portable memory devices (Figure 14-13). The flash drive has a capacity of several GB; it connects through a USB port and transfers data rapidly. The drives operate using solid-state technology and are one of the most durable forms of storage.

FIGURE 14-13 A flash drive is a small, solid-state device that is capable of storing in excess of 1 TB of data.

In contrast to CDs, hard discs are thin, rigid glass or metal platters. Each side of a platter is coated with a recording material that can be magnetized. The platters are tightly sealed inside a hard disc drive (HDD), and data can be recorded on both sides of each platter. HDDs are typically located inside the computer but can also be attached externally.

Another form of internal data storage is a solid-state drive (SSD). These drives typically have a lower capacity than HDDs and are more expensive. However, because they store data using solid-state technology, they allow much faster access to data and are more durable than traditional HDDs.

Compared with CDs and flash drives, HDDs can have thousands of tracks per inch and up to 64 sectors. Storage systems that use several hard discs use the cylinder method to locate data (Figure 14-14). HDDs have greater capacity and speed than optical storage devices and typically greater capacity than SSDs.

FIGURE 14-14 This disc drive reads all formats of optical compact discs and reads, erases, writes, and rewrites to a 650-MB optical cartridge.

A redundant array of independent discs (RAID) system consists of two or more disc drives in a single cabinet that collectively act as a single storage system. RAID systems have greater reliability because if one disc drive fails, others can take over.
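
The idea that another drive can take over after a failure can be illustrated with a toy model. The sketch below assumes simple mirroring (commonly called RAID 1), in which every block is written to every drive; this is only one of several RAID configurations, and the text does not say which one a given system uses. The class name `MirroredArray` and its methods are hypothetical.

```python
class MirroredArray:
    """Toy model of mirroring: every block is written to every healthy drive."""

    def __init__(self, num_drives: int = 2):
        # Each drive is modeled as a dict mapping block number -> data.
        self.drives = [dict() for _ in range(num_drives)]
        self.failed = set()  # indices of drives that have failed

    def write(self, block: int, data: bytes) -> None:
        for index, drive in enumerate(self.drives):
            if index not in self.failed:
                drive[block] = data

    def fail_drive(self, index: int) -> None:
        # Simulate a drive failure and the loss of that drive's contents.
        self.failed.add(index)
        self.drives[index].clear()

    def read(self, block: int) -> bytes:
        # Return the block from the first healthy drive that holds it.
        for index, drive in enumerate(self.drives):
            if index not in self.failed and block in drive:
                return drive[block]
        raise IOError("block unavailable: all copies lost")

array = MirroredArray(num_drives=2)
array.write(0, b"chest image")
array.fail_drive(0)
print(array.read(0))  # b'chest image', served by the surviving drive
```

The cost of this reliability is capacity: with two mirrored drives, only half of the total disc space holds unique data.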

As discussed previously, optical discs include CDs, DVDs, and Blu-ray Discs. A single CD-ROM (compact disc–read-only memory) typically can hold 800 MB of data. CD-ROM drives used to handle only one disc at a time, but now, multidisc drives called jukeboxes can handle up to 2000 CDs, DVDs, or Blu-ray Discs. In an all-digital radiology department, the optical disc jukebox would replace the film file room (Figure 14-15).

Output Devices

Common output devices are display screens and printers. Other devices include plotters, multifunction devices, and audio output devices.

The output device that people use most often is the display screen or monitor. The cathode ray tube (CRT) is a vacuum tube that is used as a display screen in a computer or video display terminal (VDT). Soft copy is the term that refers to the output seen on a display screen.

Flat panel displays (liquid crystal displays [LCDs]) are thinner and lighter and consume less power than CRTs. These displays are made of two plates of glass with a substance between them that can be activated in different ways. Flat panel displays are the most prevalent form of display in radiology departments today.

A terminal is an input/output device that uses a keyboard for input and a display screen for output. Terminals can be dumb or intelligent.

A dumb terminal cannot do any processing on its own; it is used only to input data or receive data from a main or host computer. Airline agents at ticketing and check-in counters usually are connected to the main computer system through dumb terminals.

An intelligent terminal has built-in processing capability and RAM but does not have its own storage capacity.

Printers are another form of output device and are categorized by the manner in which the print mechanism physically contacts the paper to print an image.

Impact printers such as dot matrix and high-speed line printers have direct contact with the paper. Such printers have largely been replaced by nonimpact printers.

The two types of nonimpact printers used with microcomputers are laser printers and ink-jet printers. A laser printer operates similarly to a photocopying machine. Images are created with dots on a drum, are treated with a magnetically charged inklike substance called toner, and then are transferred from drum to paper.

Laser printers produce crisp images of text and graphics, with resolution ranging from 300 dots per inch (dpi) to 1200 dpi and in color. They can print up to 200 text-only pages per minute (ppm) for a microcomputer and more than 100 ppm in full color. Laser printers have built-in RAM chips to store output from the computer; ROM chips that store fonts; and their own small, dedicated processor.

Ink-jet printers also form images with little dots. These printers electrically charge small drops of ink that are then sprayed onto the page. Ink-jet printers are quieter and less expensive and can also print in color. Printing speeds of up to 20 ppm for black text and 10 ppm for color images are possible with even modestly priced ink-jet printers.

Other specialized output devices serve specific functions. For example, plotters are used to create documents such as architectural drawings and maps. Multifunction devices deliver several capabilities such as printing, imaging, copying, and faxing through one unit.

Communications

Communications or telecommunications describes the transfer of data from a sender to a receiver across a distance. The practice of teleradiology involves the transfer of medical images and patient data.

Electric current, radiofrequency (RF), or light is used to transfer data through a physical medium, which may be a cable, a wire, or even the atmosphere (i.e., wireless). Many communications lines are still analog; therefore, a computer needs a modem (modulator/demodulator) to convert digital information into analog. The receiving computer’s modem converts analog information back into digital.

Transmission speed, the speed at which a modem transmits data, is measured in bits per second (bps) or kilobits per second (kbps). In addition to modems, computers require communications software. Often, this software is packaged with the modem, or it might be included as part of the system software.
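
Because transmission speed is expressed in bits per second while file sizes are expressed in bytes, estimating a transfer time requires a factor of 8. The following sketch shows the arithmetic; the function name `transfer_time_seconds` is hypothetical, and the file size and line speeds are assumed values chosen only for illustration. The result is an ideal figure that ignores protocol overhead and latency.

```python
def transfer_time_seconds(file_bytes: int, bits_per_second: float) -> float:
    """Ideal transfer time: total bits divided by the line speed."""
    return file_bytes * 8 / bits_per_second

# Assumed example file: a 16-bit, 2000 x 2500 pixel image (10,000,000 bytes).
image_bytes = 10_000_000

# Assumed line speeds, for illustration only.
dialup_bps = 56_000          # 56 kbps dial-up modem
fast_line_bps = 100_000_000  # 100 Mbps line

print(transfer_time_seconds(image_bytes, dialup_bps) / 60)  # roughly 24 minutes
print(transfer_time_seconds(image_bytes, fast_line_bps))    # under 1 second
```

The contrast between minutes and a fraction of a second for a single image suggests why faster lines matter so much for teleradiology.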

Advances in technology have allowed for the development of faster and faster communication devices. Cable modems connect computers to cable TV systems that offer telecommunication services. Some cable providers offer transmission speeds up to 1000 times faster than a basic telephone line.

Integrated services digital network (ISDN) transmits over regular phone lines up to five times faster than basic modems. Digital subscriber lines (DSLs) transmit at speeds in the middle range of the previous two technologies. DSLs also use regular phone lines. Currently, the fastest available type of digital communication is a fiberoptic line that transmits signals digitally. These lines can range in speed from 5 Mbps to 50 Gbps, which at the fastest is about 1 million times faster than the original dial-up modems used with a telephone line. In 1990, Tim Berners-Lee invented the World Wide Web, which has profoundly connected us and shrunk our planet communications-wise. Telecommunications in the form of teleradiology is changing the way we allocate human resources to improve the speed of interpretation, reporting, and archiving of images and other patient data. Figure 14-16 is a good summary of the increasing speed and capacity of microprocessors designed to support teleradiology and medical image file sizes.

Input

Input hardware includes keyboards, mice, trackballs, touchpads, and source data entry devices. A keyboard includes standard typewriter keys that are used to enter words and numbers and function keys that enter specific commands. Digital fluoroscopy (see Chapter 25) uses function keys for masking, reregistration, and time-interval difference imaging.

Source data entry devices include scanners, fax machines, imaging systems, audio and video devices, electronic cameras, voice-recognition systems, sensors, and biologic input devices. Scanners translate images of text, drawings, or photographs into a digital format recognizable by the computer. Barcode readers, which translate the vertical black-and-white–striped codes on retail products into digital form, are a type of scanner.

An audio input device translates analog sound into digital format. Similarly, video images, such as those from a VCR or camcorder, are digitized by a special video card that can be installed in a computer. Digital cameras and video recorders capture images in digital format that can easily be transferred to the computer for immediate access.

Voice-recognition systems add a microphone and an audio sound card to a computer and can convert speech into digital format. Radiologists use these systems to produce rapid diagnostic reports and to send findings to remote locations by teleradiology.

Sensors collect data directly from the environment and transmit them to a computer. Sensors are used to detect things such as wind speed or temperature.

Human biology input devices detect specific movements and characteristics of the human body. Security systems that identify a person through a fingerprint or a retinal vascular pattern are examples of these devices.

Applications to Medical Imaging

Computers have continued to develop in terms of complexity as well as usability. The PC became available for purchase in the mid-1970s, and in 2003, the U.S. Census Bureau reported that about 60% of U.S. households had at least one PC. This increase in the use of the PC has certainly not been limited to households. It would be difficult to find a radiology department in the United States that does not contain at least one computer. Computers in radiology departments are typically used to store, transmit, and read imaging examinations.

Computers play a large role in digital imaging, and the practice of digital imaging would not be possible without them. A digital image stored in a computer is rectangular in format and made up of small squares called pixels. A typical digital chest x-ray might contain 2000 columns of pixels and 2500 rows of pixels for a total of 5 million pixels. As discussed previously, computers at the most basic level read in binary format. This is the case in a digital image. Each pixel contains a series of 1s and 0s defining the gray scale or shade of that particular point on a digital x-ray image. Each space available for a 1 or 0 is called a bit. A group of 8 bits is called a byte. An x-ray image might be 16 bits (2 bytes), which would mean that each pixel contains a series of 16 1s and 0s. This results in 2^16 (65,536) combinations of 1 and 0, which means that the image is capable of displaying 65,536 different shades of gray.

| Question: | How much storage space do you think a 16-bit 2000 × 2500 pixel x-ray image would take? |

| Answer: | (1 byte/8 bits) × 16 bits/pixel × 2000 × 2500 pixels = 10,000,000 bytes; 10,000,000 bytes × 1 kB/1024 bytes × 1 MB/1024 kB ≈ 9.5 MB |
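
The same arithmetic can be written as a short Python sketch that works for any image size and bit depth. The function names `gray_levels` and `image_size_bytes` are hypothetical, and the calculation covers pixel data only, with 1 MB taken as 1024 × 1024 bytes as in the answer above.

```python
def gray_levels(bits_per_pixel: int) -> int:
    """Number of distinct shades a pixel can represent: 2 to the power of its bit depth."""
    return 2 ** bits_per_pixel

def image_size_bytes(columns: int, rows: int, bits_per_pixel: int) -> int:
    """Storage for the pixel data alone: total bits divided by 8 bits per byte."""
    return columns * rows * bits_per_pixel // 8

size = image_size_bytes(2000, 2500, 16)

print(gray_levels(16))              # 65536
print(size)                         # 10000000
print(round(size / 1024 ** 2, 1))   # 9.5 (MB)
```

Doubling the bit depth doubles the storage requirement, whereas doubling both the number of rows and the number of columns quadruples it.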

In addition to the pixel information contained in the image, a typical x-ray image contains information about the patient, type of examination, place of examination, and so on. This information is stored in the image in what is called the header. The addition of a header requires that the image be stored in a slightly more complex way than just a series of pixels and their associated values. The American College of Radiology along with the National Electrical Manufacturers Association has developed a standard method of image storage for diagnostic medical images. This is known as the Digital Imaging and Communications in Medicine (DICOM) standard.

One problem with digital medical images is that they take up a relatively large amount of storage space and need to be transferred from the examination room to the radiologist and then need to be archived. The picture archiving and communication system (PACS) takes care of all of these tasks. In a typical PACS, digital medical images are stored on a medium that allows for quick access until the examination results are reviewed by a radiologist or other physician. Then the examination results are typically sent for archiving to a cheaper type of storage device that takes longer to access. A PACS typically consists of many different large storage devices, which could be a combination of any of the storage devices previously discussed. Also, images are transferred via a network of usually fiberoptic lines that run throughout the hospital or facility. Having all of these digital images available on a network has made reading medical imaging examination results extremely convenient. Now there is no need for a hard copy of an x-ray film or other study; physicians merely need a fast network connection to obtain digital copies of the study they would like to read. This has led to the practice of teleradiology. Teleradiology is the practice in which radiologists remotely read examination results and write reports. For example, a radiologist might be in Sydney, Australia, and read an examination that was performed in Houston, Texas, and complete his or her diagnosis in the same amount of time as a radiologist who was on site.

Computers are becoming so advanced that many smartphones available today are more powerful than large computers available less than a decade ago. In 2010, more smartphones were sold than PCs (100.9 million vs. 92.1 million). This may further change the practice of medical imaging and medicine as well. Evidence of this can already be seen: in 2011, the Food and Drug Administration approved the first application that allows for the viewing of medical images on a mobile phone.

Summary

The word computer is used as an abbreviation for any general-purpose, stored-program electronic digital device. General purpose means the computer can solve problems. Stored program means the computer has instructions and data stored in its memory. Electronic means the computer is powered by electrical and electronic devices. Digital means that the data are in discrete values.

A computer has two principal parts: the hardware and the software. The hardware is the computer’s nuts and bolts. The software is the computer’s programs, which tell the hardware what to do.

Hardware consists of several types of components, including a CPU, a control unit, an arithmetic unit, memory units, input and output devices, a video terminal display, secondary memory devices, a printer, and a modem.

The basic parts of the software are the bits, bytes, and words. In computer language, a single binary digit, either 0 or 1, is called a bit. Bits grouped in bunches of eight are called bytes. Computer capacity is typically expressed in gigabytes or terabytes.

Computers use a specific language to communicate commands in software systems and programs. Computers operate on the simplest number system of all—the binary system, which includes only two digits, 0 and 1. The computer performs all operations by converting alphabetic characters, decimal values, and logic functions into binary values. Other computer languages allow programmers to write instructions in a form that approaches human language.

Computers have greatly enhanced the practice of medical imaging. Computers have advanced to the point of allowing for the storage, transmission, and interpretation of digital images. This has virtually eliminated the need for hard copy medical images.

1. Define or otherwise identify the following:

2. Name three operations in diagnostic imaging departments that are computerized.

3. The acronyms ASCC, ENIAC, and UNIVAC stand for what titles?

4. What is the difference between a calculator and a computer?

5. How many megabytes are in 1 TB?

6. What are the two principal parts of a computer and the distinguishing features of each?

7. List and define the several components of computer hardware.

8. Define bit, byte, and word as used in computer terminology.

9. Distinguish systems software from applications programs.

10. List several types of computer languages.

11. What is the difference between a CD and a DVD?

12. A memory chip is said to have 256 MB of capacity. What is the total bit capacity?

13. What is high-level computer language?

14. What computer language was the first modern programmers’ language?

15. List and define the four computer processing methods.

16. Calculate the amount of storage space needed for a 32 bit 1024 × 1024 pixel digital image.

17. Describe what teleradiology is.

18. What input/output devices are commonly used in radiology?

The answers to the Challenge Questions can be found by logging on to our website at http://evolve.elsevier.com.