Audit

Audit as part of clinical governance

The relationship between practice research, service evaluation and audit

Structures, processes and outcomes which may be audited

The stages in the audit cycle: standard setting, data collection, comparison with standards, identifying problems, implementing change, re-audit

Introduction: what is audit?

Audit concerns the quality of professional activities and services. Audit is carried out to determine whether best practice is being delivered and, equally importantly, to improve practice. Audit is part of clinical governance (see Ch. 9) – probably the key part – and therefore forms part of the quality improvement work which takes place within all NHS organizations. It can be described as ‘improving the care of patients by looking at what you do, learning from it and if necessary, changing practice’. In England, quality improvement is now part of the QIPP agenda: Quality, Innovation, Productivity and Prevention. This is a major programme of work which aims to transform the NHS by improving quality of care and making efficiency savings.

Audit is based around standards of practice. The hallmark of a professional is that they maintain standards of professional practice, which exist to protect the public from poor-quality services. Audit provides a method of accountability, both to the public and to the regulator, which demonstrates that standards are being met or, if not, that action is being taken to remedy the situation. It also provides managers with information about the quality of the services their staff deliver. Although this may seem somewhat threatening, ultimately the aim of audit is to improve the efficiency and effectiveness of services, to promote higher standards and to improve the outcome for patients. It also allows changes in practice to be evaluated. Therefore it is an essential component of any professional’s work and an integral part of day-to-day practice.

Most healthcare professionals’ activities have an impact on patients, either directly or indirectly, so can be described as a clinical service. Audit of these services is therefore clinical audit. Clinical audit is defined by NICE as ‘a quality improvement process that seeks to improve patient care and outcomes through systematic review of care against explicit criteria and the implementation of change’. All NHS trusts in the UK must support audit, so should have a central audit office which provides training and help in designing audits and collates the results of clinical audits. All NHS staff are expected to participate in clinical audit and the GPhC requires pharmacists to organize regular audits to protect patient and public safety and to improve the professional services provided by pharmacies. Community pharmacists in England must participate in two clinical audits each year, one based on their own practice and one multidisciplinary audit organized by their local primary care organization. Hospital pharmacists are required to be involved in clinical audits of Trust performance in areas of national priority.

There are few instances where pharmacists provide a clinical service to patients in isolation from other healthcare professionals. Providing advice and selling non-prescription medicines are examples of services which could be audited to comply with the requirement. Many services will impact on or be affected by services provided by other professionals, so can be regarded as multidisciplinary. Audit of these clinical services should ideally also be multidisciplinary. Users of services should also be involved in audit whenever possible, perhaps by asking patient representatives to join the audit team. They can provide important insight into what aspects of a service would benefit from audit and can help to set the criteria against which performance will be audited.

Relationship between practice research, service evaluation and audit

It is important to understand the relationship between practice research, service evaluation and audit. Practice research is designed to determine best practice. An example of this would be a randomized controlled trial of pharmacists undertaking a new service compared with normal care. In a controlled trial, patients are often carefully selected using inclusion and exclusion criteria; special documentation and outcome measures are used, which may differ from those used in routine practice; and all aspects of the service being studied must be standardized.

To implement a new service into routine practice, further development will be required. Many aspects of a new service are likely to differ from those used in a research situation and may differ between practice settings. All new services will then need to be evaluated, which may involve determining the views of service providers and users, collecting data on the outcomes for patients who use the service and finding out if publicity is adequate. Changes may be necessary if problems are identified during service evaluation.

Once a service is running smoothly it should then be subject to audit. This will involve setting standards for the service and measuring actual practice against these standards.

Although there are many similarities in the methods used to obtain data for research and for audit, there are important differences. In research, it is important to have controlled studies, to be able to extrapolate the results and to have large enough samples to demonstrate statistical significance of any differences between groups. None of these applies to audit. Audit compares actual practice to a predetermined level of best practice, not to a control. The results of audit apply to a particular situation and should not be extrapolated. Audit can even be applied to a single case; large numbers are not required, so the number included in an audit should be practical, to ensure resources are not wasted in carrying it out.

Types of audit

Audit may be of three types, depending on who undertakes it: self-audit, peer audit and external audit.

Self-audit is undertaken by individuals and is part of a professional work attitude in which critical appraisal of actions taken and outcomes is continuous. While anyone can do self-audit, it is most likely to be used by pharmacists who work in isolation, such as in single-handed community pharmacies. There are many areas where self-audit can be conducted, e.g. smoking cessation services, owing and out of stock items and waste medicines.

Peer audit is undertaken by people within the same peer group, which usually means the same profession. Peer audit involves joint setting of standards by an audit team. For example, pharmacists from several hospitals which provide similar services could get together and audit each other’s service. Another way of doing this is benchmarking – a process of defining a level of care set as a goal to be attained. Here, standards may be set against those identified by a leading centre, such as a teaching hospital. Benchmarking in prescribing may involve the use of prescribing indicators (see Ch. 22) or comparators. A wide range of these has been developed to benchmark or audit good prescribing practice, available from the QIPP section of the DH website.

External audit is carried out by people other than those actually providing the service and so is perceived as threatening by those whose services are being audited. It may be more objective in its criticisms than self or peer audit, but there may be less enthusiasm for corrective action to improve services. If standards are imposed, there is a perceived threat if an individual’s performance is not of the standard required. Involving the providers of services to be audited in deciding what best practice should be and in making improvements makes external audit more acceptable.

Multidisciplinary audit is the most common type of group audit and is usually preferred for clinical audit, but it is essential to ensure that one subgroup is not auditing the activities of another subgroup. This would lead to tensions and be counterproductive. For example, in an audit of doctors’ prescribing errors detected by pharmacists, pharmacists cannot set the standard for an acceptable level of errors without involving the doctors. If they are not part of the audit team, there is little chance of improvement.

Pharmacists are often involved in carrying out audits of clinical practice, for example audit of prescribing against NICE clinical guidelines. NICE produces tools for clinical audit, baseline assessment and self-assessment to help organizations implement NICE guidance and audit their own practice. Pharmacists should work with prescribers in setting local standards for these audits.

In England, a National Clinical Audit and Patient Outcomes Programme, managed by the Healthcare Quality Improvement Partnership, enables national clinical audits to take place. Data are collected locally and pooled, but also fed back to individual Trusts, so that they can identify necessary improvements for patients. Pharmacists may be involved in collecting data for such audits. An example is shown in Table 12.1. This is an example of a clinical audit, for which data were collected from many centres and which has the potential to change practice.

Table 12.1

Audit example: Dementia care in hospitals, conducted as part of the National Clinical Audit and Patient Outcomes Programme

| Aspect of service | Structure/process/outcome | Data collection method |
| --- | --- | --- |
| Service structures, policies, care processes and key staff providing services for people with dementia | Structures, processes | Checklist |
| Medical records of patients with dementia, audited against a checklist of standards covering admission, assessment, care planning/delivery and discharge | Processes | Retrospective case note review, using data collection form |
| Staffing, support and governance | Structures, processes | Checklist |
| Physical environment known to impact on people with dementia | Structures | Checklist |
| Staff awareness of dementia and support offered to patients with dementia | Structures, processes | Questionnaire to staff |
| Carers’/patients’ experience of the support received and perceptions of the quality of care | Outcomes | Questionnaire to carers/patients |
| Quality of care provided to people with dementia | Processes | Data collection through direct observation |

Audit standards were derived from: national reports, guidelines, recommendations of professional bodies and service user/carer organizations

Adapted from report available at: http://www.hqip.org.uk/national-audit-of-dementia.

What is measured in audit?

Three aspects of any service or activity can be audited: structures, processes and outcomes.

Structures are the resources available to help deliver services or carry out activities. Examples are staff, their expertise and knowledge, books, learning materials or training courses, drug stocks, equipment, layout of premises.

Processes are the systems and procedures which take place when carrying out an activity and may include quality assurance procedures and policies and protocols of all types. Examples are: procedures for dealing with patients’ own medicines in hospital, prescribing policies and disease management protocols.

Outcomes are the results of the activity and are arguably the most important aspect. In pharmaceutical audits of activities such as drug procurement, distribution or dispensing, outcomes should be easily identified and measurable. In many clinical audits, some outcomes are relatively easily measured, e.g. changes in parameters such as blood pressure, INR (international normalized ratio) control and serum biochemistry. Surrogate outcomes can also be used, such as the drugs or doses prescribed or patient perceptions of service quality. Outcomes such as health status, attitude or behaviour can also be included, but changes may be very difficult to achieve and to measure.

Any individual audit can examine structures, processes and outcomes individually or together. (See Table 12.1 for an example which involved all three aspects.)

The audit cycle

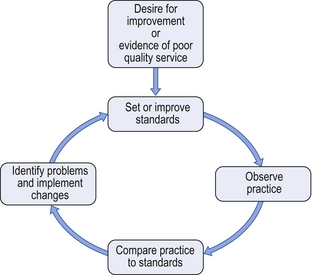

Audit is a continuous process, which follows a cycle of measurement, evaluation and improvement. The basic cycle is shown in Figure 12.1, but audit can also be seen as a spiral in which standards are continuously raised as practice improves.

Before starting an audit, first identify its purpose. This will derive from the desire to improve the quality of the service. For example, the purpose may be ‘to improve the dispensing turnaround time’ or ‘to increase the proportion of patients counselled about their new medicines’. It may be appropriate to conduct a ‘baseline assessment’ to find out if indeed there is a need to improve service quality through audit. This means conducting a small study before setting standards. Once you decide that audit is needed, the audit cycle incorporates setting standards, observing practice (data collection), comparing practice with standards, identifying problems, implementing changes and re-audit.

Although the process is continuous, it is not practicable to audit all activities or services all the time. A baseline assessment helps to decide whether improvements are possible and routine monitoring may be instituted instead of repeat audits to ensure that best practice, once attained, is maintained.

Setting standards

All audits should be based on standards which are widely accepted (i.e. best practice). Examples of useful documents for helping to set standards are the Medicines, Ethics and Practice guide, national service frameworks and clinical guidelines. Several standards are usually set for any individual audit, relating to resources, processes or outcomes.

Because audit is about comparing actual practice to standards of best practice, numerical values are needed. A guideline may suggest a criterion, for example that patients receiving warfarin should be counselled about avoiding aspirin. For this to form a useful standard for audit, it needs to be clarified whether this applies to all patients, i.e. 100%. This numerical value is the target, which, together with the criterion, forms the standard or ‘level of performance’. It is then easy to measure whether this occurs in practice. Many clinical guidelines suggest audit standards and criteria.

A target level of 100% is termed an ideal standard but may not be achievable. The level set may need to be a compromise between what is desirable and what is possible, since resources may be limited. This would be an optimal standard. Using the previous example, it may be considered at the outset that there are insufficient staff to ensure that 100% of patients receiving warfarin could be counselled about avoiding aspirin. A compromise could be that 100% of patients prescribed warfarin for the first time receive this advice. Another type of standard is the minimal standard, which, as its name implies, is the minimum acceptable level of service and is often used in external audits.
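The relationship between criterion, target and standard can be illustrated with a short calculation. The following is a minimal sketch in Python; the `Standard` class, the function names and all the patient counts are hypothetical and serve only to show how measured practice is compared with a numerical target.

```python
from dataclasses import dataclass


@dataclass
class Standard:
    """A standard pairs a criterion with a numerical target."""
    criterion: str      # what should happen
    target_pct: float   # percentage of cases in which it should happen


def compliance_pct(met: int, audited: int) -> float:
    """Percentage of audited cases in which the criterion was met."""
    if audited == 0:
        raise ValueError("no cases were audited")
    return 100.0 * met / audited


def meets_standard(standard: Standard, met: int, audited: int) -> bool:
    """True if measured practice reaches the target level."""
    return compliance_pct(met, audited) >= standard.target_pct


# Ideal standard: the criterion applies to every patient on warfarin.
ideal = Standard(
    "Patients receiving warfarin are counselled about avoiding aspirin", 100.0
)
# Optimal standard: the compromise restricted to first-time prescriptions.
optimal = Standard(
    "Patients prescribed warfarin for the first time are counselled "
    "about avoiding aspirin",
    100.0,
)

# Hypothetical audit results: (number counselled, number audited).
all_patients = (42, 60)
first_time_patients = (12, 12)

print(compliance_pct(*all_patients))                  # 70.0
print(meets_standard(ideal, *all_patients))           # False
print(meets_standard(optimal, *first_time_patients))  # True
```

In this invented example the ideal standard is missed while the optimal standard is met, which is the kind of result that would prompt the team to look at causes before deciding on changes.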

If there are no published guidelines or standards, they will need to be devised. This may involve searching the literature, for example recent journals, textbooks or educational material. Whether devising standards from scratch or making guidelines into standards, it is important that the whole audit team is involved in devising them. This may include doctors, nurses, health visitors, technical staff, receptionists or porters, and patients or their carers. Inclusion avoids the potential feeling of threat which may be created by audit. Once standards have been set, the next stage of audit involves collecting data on actual practice.

Observing practice

Many audits require a simple form onto which data from other sources are transferred. Checklists are often most useful for auditing structures and processes but other types of data may also be needed, while auditing outcomes may require methods such as questionnaires (see Table 12.1). As with any data collection, the information obtained must be able to answer the questions asked, which may be relatively simple, such as ‘What percentage of patients receiving warfarin are counselled?’

It is often useful to incorporate some measure of potential factors which may influence practice within the data collection. So, in addition to finding out whether local guidelines are being used by examining medical records, it is worth issuing a questionnaire to those expected to use the guidelines to find out their views on whether the guidelines are readily available, are in an acceptable format and meet their needs. In an audit of warfarin counselling, it is useful to collect data on how busy the pharmacy is when each patient presents their prescription and how many staff trained to provide advice were available. In other words, the data collection procedures may need to anticipate some potential causes of failing to provide best practice.

Before setting out to devise a data collection form, it is always worth finding out whether a similar audit has been done before, so you can adapt or modify the data collection procedures used. (See NICE or RPS websites for examples of audit documents.) If you do need to design a new procedure, the data collection must fulfil some basic requirements (Box 12.1). First the data collected must be able to address the purpose of the audit. The method of data collection must be valid and reliable. If sampling procedures are used, they too must be appropriate, avoiding bias and must be feasible.

Validity is the extent to which what is measured is what is supposed to be measured. To use the warfarin counselling example, the standard was about advice concerning aspirin. If the only data collected involved the number of patients who were counselled and not what advice they were given about aspirin, these data would be invalid, since they did not measure what they set out to measure.

Reliability is a measure of the reproducibility of the data collection procedure. It can be difficult to achieve reliability in measuring outcomes, so it is important to use recognized measures wherever possible. Reliability may also vary among individuals collecting data, despite their using the same data collection tool. It is important to check this, provide training if necessary and ensure everyone understands what is required.
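One way to check agreement between two data collectors is to have both assess the same set of records and then calculate an agreement statistic. The text does not name a particular statistic; the sketch below assumes Cohen's kappa, a commonly used measure that corrects the observed agreement for the agreement expected by chance. The function name and the ten judgements are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who judged the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must judge the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same answer.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical data: two collectors record whether aspirin advice was
# documented in the same ten patient records.
collector_1 = ["yes"] * 6 + ["no"] * 4
collector_2 = ["yes"] * 5 + ["no"] * 5

print(round(cohen_kappa(collector_1, collector_2), 2))  # 0.8
```

A value close to 1 suggests the data collection tool is being applied consistently; a low value would indicate that further training or clearer definitions are needed before the audit proper.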

Sampling is important in collecting data for audit, because the data should be unbiased and representative of actual practice. It may be that the numbers and time involved are small enough that all examples of the activity are included in data collection procedures. If numbers are large, it may be necessary to include a proportion in the audit. If so, a sampling plan is needed to ensure cases selected are representative. Many different sampling methods could be used, including random (using number tables or computer) or systematic (such as every tenth patient presenting a prescription for warfarin). Another way is to decide in advance that a certain proportion of the total population (a quota) will be sampled, usually ensuring that they will be typical of the population in important characteristics. These techniques require that the total population size within the audit period is known. A large population may need to be stratified into subgroups first before sampling, for example patients with new prescriptions and patients with repeats.
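The random, systematic and stratified approaches described above can be sketched in a few lines of Python using only the standard library. The function names, the prescription numbers and the 10% sampling fraction are hypothetical; the sketch assumes the full list of cases in the audit period is known in advance.

```python
import random


def simple_random_sample(cases, n, seed=None):
    """Choose n cases at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(cases, n)


def systematic_sample(cases, step, start=0):
    """Choose every step-th case, beginning at index start."""
    return cases[start::step]


def stratified_sample(strata, fraction, seed=None):
    """Sample the same fraction at random from each subgroup."""
    rng = random.Random(seed)
    sample = {}
    for name, cases in strata.items():
        # Take at least one case from every non-empty subgroup.
        k = max(1, round(len(cases) * fraction)) if cases else 0
        sample[name] = rng.sample(cases, k)
    return sample


# Hypothetical population: 200 warfarin prescriptions numbered 1 to 200.
prescriptions = list(range(1, 201))

random_20 = simple_random_sample(prescriptions, 20, seed=1)
every_tenth = systematic_sample(prescriptions, step=10, start=9)

# Stratify into new and repeat prescriptions before sampling 10% of each.
strata = {"new": prescriptions[:40], "repeat": prescriptions[40:]}
by_stratum = stratified_sample(strata, fraction=0.1, seed=1)

print(len(random_20))                          # 20
print(every_tenth[:3])                         # [10, 20, 30]
print({k: len(v) for k, v in by_stratum.items()})  # {'new': 4, 'repeat': 16}
```

Fixing the seed makes the random selection reproducible, which is convenient if the sampling plan needs to be documented or repeated at re-audit.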

Since audit is about a particular service or activity, carried out by one or more particular individual professionals, the audit needs to strike a balance between having enough in the sample and not taking too much time. It must be possible, i.e. feasible, to collect the data required to answer the question. It is often necessary to incorporate data collection for audit into routine work, so the time needs to be minimized. Some data for use in audit may already be collected on a routine basis, e.g. data on PMRs or from MUR records. Some pharmacies routinely log the time when prescriptions are handed in and given out, so an audit of turnaround time could easily be carried out using these data. Routinely-collected hospital data on length of stay and number of admissions, discharges and deaths, may be useful.
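As an illustration of reusing routinely logged data, the sketch below calculates turnaround times from hand-in and hand-out times. The log entries, the 15-minute criterion and the function name are hypothetical, and the sketch assumes both times fall on the same day and are recorded in 24-hour `HH:MM` format.

```python
from datetime import datetime
from statistics import median


def turnaround_minutes(handed_in, given_out):
    """Minutes between two same-day times written as HH:MM."""
    fmt = "%H:%M"
    delta = datetime.strptime(given_out, fmt) - datetime.strptime(handed_in, fmt)
    return delta.total_seconds() / 60


# Hypothetical routine log: (time handed in, time given out).
log = [
    ("09:02", "09:14"),
    ("09:30", "09:38"),
    ("10:05", "10:30"),
    ("11:15", "11:25"),
    ("11:40", "11:55"),
]

times = [turnaround_minutes(start, end) for start, end in log]

# Hypothetical criterion: prescription dispensed within 15 minutes.
limit = 15
within_limit_pct = 100.0 * sum(t <= limit for t in times) / len(times)

print(times)             # [12.0, 8.0, 25.0, 10.0, 15.0]
print(median(times))     # 12.0
print(within_limit_pct)  # 80.0
```

Because the data already exist, an audit of this kind adds little to the daily workload beyond the analysis itself.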

Data for audit can be either quantitative or qualitative. Qualitative data can be useful in obtaining opinions about services or for assessing patient outcomes and large numbers are not required. It may be useful to undertake qualitative work to help design a good data collection tool to be used quantitatively, such as a questionnaire targeting larger numbers. Quantitative audit may generate large amounts of data, which require subsequent analysis. Usually only simple descriptive or simple comparative statistics are needed.

Whether the data collected are retrospective or prospective depends to a large extent on the topic of the audit and the data available. Retrospective audit can only be undertaken if good records of activities have been kept. Prospective audits should ensure that the data required are recorded, even if only for the audit period. There is a possibility of practice changing during the audit period simply because the audit is being undertaken. This may not always be a problem if practice is better than usual and if audit is continuous, since the ultimate aim is to improve services. It is more important to be aware of this effect if practice is measured periodically.

In large audits, piloting the data collection tool using a sample similar to those to be included in the audit is a valuable way of finding out if it is suitable. This should avoid discovering too late that there were difficulties in interpretation or that vital information has not been recorded.

Comparing practice to standards

This is the evaluation stage of audit, in which actual practice is compared to best practice. First the data obtained must be analysed and presented. Most audit data require only descriptive analysis, such as percentages, means or medians, along with ranges and standard deviations to show the spread of the data. Comparative statistical tests are useful for comparing two or more subgroups of quantitative data. This could be for different data collection periods (audit cycles) or for subgroups within one audit. Examples where comparison may be useful are three different pharmacies’ prescription turnaround times or the counselling frequencies for patients presenting prescriptions for warfarin for the first time compared to those who have taken it before. The statistical test must be appropriate for the type of data. Chi-square is a non-parametric test suited to categorical data, such as frequencies. For continuous data which are normally distributed, parametric tests such as t-tests can be used. When using statistics, it is important to consider the practical significance of the data. An improvement which is statistically significant may not always be of practical significance and vice versa.
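To show what a simple comparative analysis involves, the sketch below calculates a chi-square statistic for counselling frequencies in two subgroups. All counts are hypothetical. The statistic is calculated by hand, without a continuity correction, and compared with 3.841, the critical value for one degree of freedom at the 5% level; in practice a statistics package would normally be used and would also report a p value.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic for a table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected if subgroup and outcome were unrelated.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Hypothetical audit data: [counselled, not counselled].
first_time = [18, 2]   # patients prescribed warfarin for the first time
repeat = [24, 16]      # patients who have taken warfarin before

pct_first = 100.0 * first_time[0] / sum(first_time)
pct_repeat = 100.0 * repeat[0] / sum(repeat)
statistic = chi_square_statistic([first_time, repeat])

CRITICAL_5PCT_1DF = 3.841  # chi-square critical value, 1 df, 5% level

print(pct_first, pct_repeat)          # 90.0 60.0
print(round(statistic, 2))            # 5.71
print(statistic > CRITICAL_5PCT_1DF)  # True
```

With these invented figures the difference between the subgroups is statistically significant, but the audit team would still need to judge whether a 60% counselling rate for repeat patients matters in practice and what lies behind it.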

Results should be presented to the team or to those involved in collecting audit data, using simple graphics, such as pie charts or bar charts. Data collected for audit purposes relate to the activities of individual professionals and to their effects on patients. It is therefore essential to maintain confidentiality. Permission is required before any information about one individual’s practice is given to other members of the audit team. Managers who may need this sort of information should be part of the audit team. In presenting audit results therefore, it is important to ensure that no individual practitioner or patient can be identified. This is essential if the audit is to improve services, as it will help others to learn and allow comparisons to be made.

When comparing the results of audits between centres, there will most probably be differences – perhaps in staffing levels, population served, case mix and so on – which could account for differences in apparent performance. Any unusual situations which occurred during the audit and which may have affected performance should be highlighted. Also any errors in data collection must be identified, which may mean data have to be excluded from analysis as they could be unrepresentative of what should have happened. It is most important to remember that the results of any audit should not be extrapolated beyond the sample audited. Audit applies to a particular activity, carried out by particular individuals and involving particular patients.

Providing the standards for the audit have been set appropriately, it should be relatively easy to determine whether they have been achieved. Often the most difficult part of audit is finding out why best practice is not being delivered and ensuring that improvement occurs.

Identifying problems

It is of little use simply finding out that a service fails to meet an agreed standard. The underlying causes of failure need to be established and the data collection procedures should have attempted to identify some of these. Suboptimal practice can arise for a variety of reasons, such as inadequate skills or knowledge, poor systems of work or the behaviour of individuals within a team. Each should be examined as a possible contributory factor to disappointing results of an audit. Simple lack of awareness, e.g. of local clinical guidelines, can contribute to their lack of use. Lack of skill may be related to infrequency of carrying out a particular activity or need for training. Both are relatively easily remedied. Both behaviour and the way in which work is organized are more difficult to change. The strategies adopted for effecting change will need to differ depending on which of these underlying causes is present.

Implementing changes

Achieving improvement in practice requires a change in behaviour. Change can be threatening simply because of its novelty. It may also involve increased work and is often resisted. This is why everyone whose work pattern may need to change should be active members of the audit team from the start. Change must be seen as leading to improvement in performance and ultimately patient benefit. The changes proposed to improve practice must be closely tailored to the underlying cause of the suboptimal audit results. They should be specific to the situation which has been audited, rather than general. They should be non-threatening and may need to be introduced gradually. Change may require resources, including time. It may also have other knock-on effects which need to be anticipated. The effect of changes must be monitored, to see whether they have been successful. This can be done by re-audit or by continuous monitoring if routinely collected data can be used.

Re-audit

Sometimes it may be appropriate to reconsider the standards before undertaking a further period of data collection.

Standards which were set too high may always be unattainable, although this may not have been apparent before practice was measured. It is equally possible to have used low standards and to have found they were exceeded. In this case it may be appropriate to raise them, which is a good way of improving practice. Whether or not the standards remain the same, a second period of measuring practice is needed if changes have been implemented, so that the effectiveness of these changes can be determined.

It is always difficult to change behaviour and improvements in practice may be short-lived. It may therefore be necessary to repeat audits at regular intervals to reinforce the desired practice and maintain the improvement in service.

Learning through audit

If the prevailing view of an audit which shows performance to be less than the standard set is that there are lots of reasons which could excuse this result, then little has been learned from undertaking the audit. Evaluating your service may be difficult, but it may also teach you a lot about yourself and the staff with whom you work. For example, it is of little use to suggest that the reason there were so many dispensing errors during the audit was that there was a new locum employed for part of the time. It is much more valuable to consider what information you have available for locums about your dispensing procedures and indeed whether your dispensing procedures are adequate.

If the results of an audit were suboptimal, but much as expected, is this because staff have been willing to accept poor practice in the past? Have staff been aware of the need for improvements in systems but felt unable to suggest changes? Have staff been wanting more training but known that there is no money available to pay for it? All these are hypothetical situations, but they help to illustrate that conducting audit may teach more than just what needs to be done to improve services. In this way, carrying out audit can contribute to continuing professional development and so has benefits both for you and, ultimately, for the patient.

Key Points

Pharmacists need to audit their practice to show that they meet appropriate standards

The main aim of audit must be to improve standards of service and outcomes for patients

There are similarities and differences between audit, service evaluation and practice research

The three main types of audit are self-, peer and external audit

An audit may examine structures, processes or outcomes

Criteria should be formulated into standards for audit which may be ideal, optimal or minimal

Standard setting should involve at least all those involved in delivering the service being audited

Data collection must address the purpose of the audit and have the potential to identify reasons for failure to meet the standard

Sampling must ensure that the data collected in an audit are representative of the total activity

Piloting the data collection tool ensures that it is suitable and comprehensive

Comparison with standards will normally involve very simple descriptive or statistical analysis

Confidentiality must be respected, but outcomes should be shared with all the audit team

Implementing change is a key part of audit

Re-audit tests whether changes have led to improved achievement of standards
