CHAPTER 12

Functional Tests

ELAINE EWING FESS, MS, OTR, FAOTA, CHT*

“Rule #1: When you get a bizarre finding, first question the test.”†

CRITICAL POINTS

▪ Standardized functional tests are statistically proven to measure accurately and appropriately when proper equipment and procedures are used.

▪ In order for a test to be an acceptable measurement instrument, it must include all of the following elements: reliability, validity, statement of purpose, equipment criteria and administration, and scoring and interpretation instructions.

▪ Hand and upper extremity assessment tools fall at varying levels along the reliability and validity continuum and therefore must be selected based on satisfying as many of the required elements as possible.

Objective measurements of function provide a foundation for hand rehabilitation efforts by delineating baseline pathology against which patient progress and treatment methods may be assessed. A thorough and unbiased assessment procedure furnishes information that helps match patients to interventions, predicts rehabilitation potential, provides data from which subsequent measurements may be compared, and allows medical specialists to plan and evaluate treatment programs and techniques. Conclusions gained from functional evaluation procedures guide treatment priorities, motivate staff and patients, and define functional capacity at the termination of treatment. Assessment of function through analysis and integration of data also serves as the vehicle for professional communication, eventually influencing the body of knowledge of the profession.

The quality of assessment information depends on the accuracy, authority, objectivity, sophistication, predictability, sensitivity, and selectivity of the tools used to gather data. It is of utmost importance to choose functional assessment instruments wisely. Dependable, precise tools allow clinicians to reach conclusions that are minimally skewed by extraneous factors or biases, thus diminishing the chances of subjective error and facilitating more accurate understanding. Functional instruments that measure diffusely produce nonspecific results. Conversely, instruments with proven accuracy of measurement yield precise and selective data.

Communication is the underlying rationale for requiring good assessment procedures. The acquisition and transmission of knowledge, both of which are fundamental to patient treatment and professional growth, are enhanced through development and use of a common professional language based on strict criteria for functional assessment instrument selection. The use of “home-brewed” functional evaluation tools that are inaccurate or not validated is never appropriate because their baseless data may misdirect or delay therapy intervention. The purpose of this chapter is twofold: (1) to define functional measurement terminology and criteria and (2) to review current upper extremity functional assessment instruments in relation to accepted measurement criteria. It is not within the scope of this chapter to recommend specific test instruments. Instead, readers are encouraged to evaluate the instruments used in their practices according to accepted instrument selection criteria,1 keeping those that best meet the criteria and discarding those that do not.

Instrumentation Criteria

Standardized functional tests, the most sophisticated of assessment tools, are statistically proven to measure accurately and appropriately when proper equipment and procedures are used. The few truly standardized tests available in hand/upper extremity rehabilitation are limited to instruments that evaluate hand coordination, dexterity, and work tolerance. Unfortunately, not all functional tests meet all of the requirements of standardization.

Primary Requisites

For a test to be an acceptable measurement instrument, it must include all of the following crucial, non-negotiable elements:

Reliability defines the accuracy or repeatability of a functional test. In other words, does the test measure consistently between like instruments; within and between trials; and within and between examiners? Statistical proof of reliability is defined through correlation coefficients. Describing the “parallelness” between two sets of data, correlation coefficients may range from +1.0 to −1.0. Devices that follow National Institute of Standards and Technology (NIST) standards, for example, a dynamometer, usually have higher reliability correlation coefficients than do tests for which there are no governing standards. When prevailing standards such as those from NIST exist for a test, use of human performance to establish reliability is unacceptable. For example, you would not check the accuracy of your watch by timing how long it takes five people to run a mile and then computing the average of their times. Yet, in the rehabilitation arena, this is essentially how reliability of many test instruments has been documented.2

Once a test’s instrument reliability is established, inter-rater and intrarater reliability must be confirmed next. Although instrument reliability is a non-negotiable prerequisite to defining rater reliability, researchers and commercial developers often ignore this critical step, opting instead to move straight to establishing rater reliability with its less stringent, human performance-based paradigms.3 The fallacy of this fatal error seems obvious: if a test instrument is consistent in its inaccuracy, it can produce misleadingly high rater reliability scores that are completely meaningless. For example, if four researchers independently measure the length of the same table using the same grossly inaccurate yardstick, their resultant scores will have high inter-rater and intrarater reliability so long as the yardstick consistently maintains its inherent inaccuracies and does not change (Fig. 12-1). Unfortunately, this scenario has occurred repeatedly with clinical and research assessment tools, involving mechanical devices and paper-and-pencil tests alike.

Figure 12-1 If an inaccurate assessment tool measures consistently and does not change over time, its intrarater and inter-rater reliabilities can be misleadingly high. Despite these deceptively high rater correlations, data collected from using the inaccurate test instrument are meaningless. (Courtesy of Dr. Elaine Ewing Fess.)
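The yardstick example can be sketched numerically. The following is a minimal illustration, not a published protocol: the table lengths, the 20% shortfall of the yardstick, and the small reading errors of the two raters are all hypothetical values chosen for the demonstration.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical true lengths (inches) of five tables.
true_lengths = [36.0, 48.0, 60.0, 72.0, 84.0]

def faulty_yardstick(length):
    # Assumed defect: the yardstick consistently reads 20% short.
    return 0.8 * length

# Two raters use the same faulty yardstick; each adds only small reading errors.
rater_a = [faulty_yardstick(t) + e
           for t, e in zip(true_lengths, [0.1, -0.1, 0.0, 0.1, -0.1])]
rater_b = [faulty_yardstick(t) + e
           for t, e in zip(true_lengths, [-0.1, 0.0, 0.1, -0.1, 0.1])]

inter_rater_r = pearson(rater_a, rater_b)
mean_error = sum(a - t for a, t in zip(rater_a, true_lengths)) / len(true_lengths)

print(f"inter-rater correlation: {inter_rater_r:.5f}")
print(f"mean error of rater A versus true length: {mean_error:.1f} inches")
```

Under these assumptions the two raters agree almost perfectly with each other, yet every measurement is far from the true length. Rater agreement alone therefore says nothing about whether the instrument itself is accurate.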

Validity defines a test’s ability to measure the thing it was designed to measure. Proof of test validity is described through correlation coefficients ranging from +1.0 to −1.0. Reliability is a prerequisite to validity. It makes no sense to have a test that measures authentically (valid) but inaccurately (unreliable). Validity correlation coefficients usually are not as high as are reliability correlation coefficients. Like reliability, validity is established through comparison to a standard that possesses similar properties. When no standard exists, and the test measures something new and unique, the test may be said to have “face validity.” An example of an instrument that has face validity is the volumeter that is based on Archimedes’ principle of water displacement. It is important to remember that volumeters must first be reliable before they may be considered to have face validity. A new functional test may be compared with another similar functional test whose validity was previously established. However, establishing validity through comparison of two new, unknown, tests produces fatally flawed results. In other words, “Two times zero is still zero.” Unfortunately, it is not unusual to find this type of error in functional tests employed in the rehabilitation arena.

Statement of purpose defines the conceptual rationale, principles, and intended use of a test. Occasionally test limitations are also included in a purpose statement. Purpose statements may range from one or two sentences to multiple paragraphs in length depending on the complexity of a test.

Equipment criteria are essential to the reliability and validity of a functional assessment test instrument. Unless absolutely identical in every way, the paraphernalia constituting a standardized test must not be substituted for or altered, no matter how similar the substituted pieces may be. Reliability and validity of a test are determined using explicit equipment. When equipment original to the test is changed, the test’s reliability and validity are rendered meaningless and must be reestablished. For example, if the wooden checkers in the Jebsen Taylor Hand Function Test are replaced with plastic checkers, the test is invalidated.4

Administration, scoring, and interpretation instructions provide procedural rules and guidelines to ensure that testing processes are exactingly conducted and that grading methods are fair and accurate. The manner in which functional assessment tools are employed is crucial to accurate and honest assessment outcomes. Test procedure and sequence must not vary from that described in the administration instructions. Deviations in recommended equipment, procedure, or sequence invalidate test results. A cardinal rule is that assessment instruments must not be used as therapy practice tools for patients. Information obtained from tools that have been used in patient training is radically skewed, rendering it invalid and meaningless. Patient fatigue, physiologic adaptation, test difficulty, and length of test time may also influence results. Clinically this means that sensory testing is done before assessing grip or pinch; rest periods are provided appropriately; and if possible, more difficult procedures are not scheduled early in testing sessions. Good assessment technique should reflect both test protocol and instrumentation requirements. Additionally, directions for test interpretation are essential. Functional tests have specific application boundaries. Straying beyond these clearly defined limits leads to exaggeration or minimization of inherent capacities of tests, generating misguided expectations for staff and patients alike. For example, goniometric measurements pertain to joint angles and arcs of motion. They are not, however, measures of joint flexibility or strength.

Although not a primary instrumentation requisite, a bibliography of associated literature is often included in standardized test manuals. These references contribute to clinicians’ better appreciation and understanding of test development, purpose, and usage.

Secondary Requisites

Once all of the above criteria are met, data collection may be initiated to further substantiate a test’s application and usefulness.

Normative data are drawn from large population samples that are divided, with statistically suitable numbers of subjects in each category, according to appropriate variables such as hand dominance, age, sex, occupation, and so on. Many currently available tests have associated so-called normative data, but they lack some or, more often, all of the primary instrumentation requisites, including reliability; validity; purpose statement; equipment criteria; and administration, scoring, and interpretation instructions. Regardless of how extensive a test’s associated normative information may be, if the test does not meet the primary instrumentation requisites, it is useless as a measurement instrument.5

Tertiary Options

Assuming a test meets the primary instrumentation requisites, other statistical measures may be applied to the data gleaned from using the test. The optional measures of sensitivity and specificity assist clinicians in deciding whether evidence for applying the test is appropriate for an individual patient’s diagnosis.

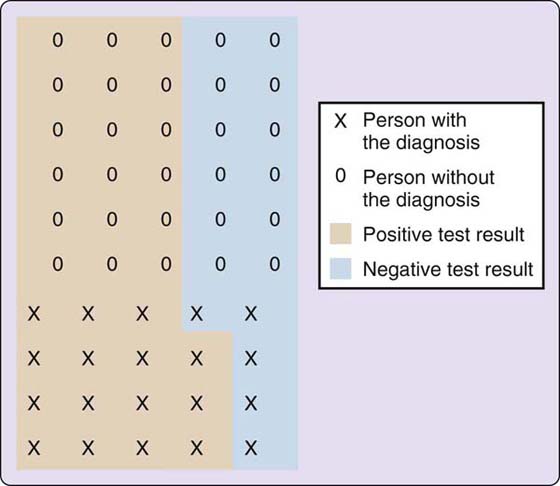

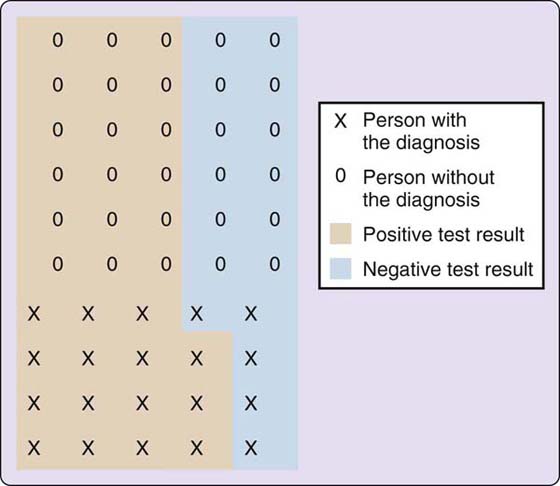

Sensitivity, a statistical measure, defines the proportion of correctly identified positive responses in a subject population. In other words, sensitivity tells, in terms of percentages, how good a test is at properly identifying those who actually have the diagnosis (Fig. 12-2).6 The mnemonic “SnNout” is often associated with Sensitivity, indicating that a Negative test result rules out the diagnosis.7 As an example, out of a population of 50, if a test correctly identifies 15 of the 20 people who have the diagnosis and who test positive (TP), the sensitivity of the instrument is 75.0% (TP/[TP + FN] = sensitivity %).*

Figure 12-2 Hypothetical population (n = 50) used to compute sensitivity, specificity, and predictive values. (Adapted from Loong TW. Understanding sensitivity and specificity with the right side of the brain. BMJ. 2003;327[7417]:716–719.)

Specificity, also a statistical measure, defines the proportion of negative results that are correctly identified in a subject population. Specificity, in the form of a percentage, tells how good a test is at correctly identifying those who do not have the diagnosis (see Fig. 12-2).6 “SpPin,” the mnemonic associated with Specificity, designates that a Positive test result rules in the diagnosis.7 If, out of the same example population of 50 subjects, the test correctly identifies 12 of the 30 people who do not have the diagnosis as testing negative (TN), the specificity of the test is 40.0% (TN/[TN + FP] = specificity %).†

Predictive values define the chances of whether positive or negative test results will be correct. A positive predictive value looks at the true positives and false positives a test generates and defines the odds of a positive test being correct in terms of a percentage.6 Using the examples previously mentioned, 15 of 33 positive test results were true positives (pink), resulting in a positive predictive value of 45.5%. Conversely, a negative predictive value specifies, in terms of percentage, the chances of a negative test result being correct by looking at the true negatives and false negatives it generates. In the previous example, 12 of 17 negative test results were true negatives (blue), for a 70.6% negative predictive value. The advantage of predictive values is that they change as the prevalence of the diagnosis changes. Loong’s 2003 article, “Understanding sensitivity and specificity with the right side of the brain,” is an excellent reference for students and those who are new to these concepts.6
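The four measures can be computed directly from the counts in the sensitivity and specificity examples: 15 true positives and 5 false negatives among the 20 people with the diagnosis, and 12 true negatives and therefore 18 false positives among the 30 people without it. The sketch below is illustrative only; the function names are hypothetical, and the prevalence values in the second loop are assumptions chosen to show how the positive predictive value shifts when sensitivity and specificity are held fixed.

```python
def diagnostic_summary(tp, fn, tn, fp):
    """Return sensitivity, specificity, PPV, and NPV as percentages."""
    return {
        "sensitivity": 100 * tp / (tp + fn),  # TP / (TP + FN)
        "specificity": 100 * tn / (tn + fp),  # TN / (TN + FP)
        "ppv": 100 * tp / (tp + fp),          # TP / (TP + FP)
        "npv": 100 * tn / (tn + fn),          # TN / (TN + FN)
    }

def ppv_at_prevalence(sensitivity, specificity, prevalence):
    """Positive predictive value as a proportion for a given prevalence.

    All arguments are proportions between 0 and 1.
    """
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Counts taken from the 50-subject example in the text.
summary = diagnostic_summary(tp=15, fn=5, tn=12, fp=18)
for name, value in summary.items():
    print(f"{name}: {value:.1f}%")

# Hypothetical prevalences; 0.40 corresponds to 20 of 50 subjects.
for prevalence in (0.10, 0.40, 0.70):
    ppv = ppv_at_prevalence(0.75, 0.40, prevalence)
    print(f"prevalence {prevalence:.2f}: PPV {100 * ppv:.1f}%")
```

Sensitivity and specificity are properties of the test, whereas the predictive values also depend on how common the diagnosis is in the population being tested.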

It is important to remember that sensitivity, specificity, and predictive values have higher accuracy when test instruments meet the primary instrumentation requisites addressed earlier in this section. If a test measures inconsistently or inappropriately, or if instrumentation equipment or procedural guides are disregarded, the test’s respective sensitivity, specificity, and predictive value percentages are compromised.

Additional Considerations

Scale and range of test instruments are also important when choosing measurement tools.

Scale refers to the basic measurement unit of an instrument. Scale should be suitably matched to the intended clinical application. For example, if a dynamometer measures in 10-pound increments and a patient’s grip strength increases by a half a pound per week, it would take 20 weeks before the dynamometer would register the patient’s improvement. The dynamometer in this example is an inappropriate measurement tool for this particular clinical circumstance because its scale is too gross to measure the patient’s progress adequately.

Range involves the scope or breadth of a measurement tool, in other words, the distance between the instrument’s beginning and end values. The range of an instrument must be appropriate to the clinical circumstance in which it will be used. For example, the majority of dynamometers used in clinical practice have a range from 0 to 200 pounds, and yet grip scores of many patients in rehabilitation settings routinely measure less than 30 pounds. Furthermore, the accuracy of most test instruments is diminished in the lowest and highest value ranges. This means that clinicians are assessing clients’ grip strength using the least accurate, lower 15% of available dynamometer range. With 85% of their potential ranges infrequently used, current dynamometers are ill suited to acute rehabilitation clinic needs. However, these same dynamometers are well matched to work-hardening situations where grip strengths more closely approximate the more accurate, midrange values of commercially available dynamometers.
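The arithmetic behind the scale and range examples can be checked with a short sketch. The function names are hypothetical, and the inputs are simply the figures quoted in the two examples (10-pound increments, a half-pound weekly gain, a 0 to 200 pound range, and grip scores below 30 pounds).

```python
def weeks_until_registered(scale_increment_lb, weekly_gain_lb):
    """Weeks of steady gain needed to span one full scale increment."""
    return scale_increment_lb / weekly_gain_lb

def share_of_range_used(highest_typical_score_lb, range_min_lb, range_max_lb):
    """Fraction of the instrument's range covered by typical patient scores."""
    return (highest_typical_score_lb - range_min_lb) / (range_max_lb - range_min_lb)

weeks = weeks_until_registered(scale_increment_lb=10, weekly_gain_lb=0.5)
share = share_of_range_used(highest_typical_score_lb=30, range_min_lb=0, range_max_lb=200)

print(f"weeks before a 10-lb scale registers the change: {weeks:.0f}")
print(f"share of the 0 to 200 lb range in use: {share:.0%}")
```

The same two checks can be run with the increment, range, and expected patient scores of any candidate instrument before it is selected for a given clinical setting.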

Standardized Tests Versus Observational Tests

Through interpretation, standardized tests provide information that may be used to predict how a patient may perform in normal daily tasks. For example, if a patient achieves x score on a standardized test, he may be predicted to perform at an equivalent of the “75th percentile of normal assembly line workers.” Standardized tests allow deduction of anticipated achievement based on narrower performance parameters as defined by the test.

In contrast, observational tests assess performance through comparison of subsequent test trials and are limited to like-item-to-like-item comparisons. Observational tests are often scored according to how patients perform specific test items; that is, independently, independently with equipment, needs assistance, and so forth. “The patient is able to pick up a full 12-ounce beer can with his injured hand without assistance.” Progress is based on the fact that he could not accomplish this task 3 weeks ago. Observational information, however, cannot be used to predict whether the patient will be able to dress himself or run a given machine at work. Assumptions beyond the test item trial-to-trial performance comparisons are invalid and irrelevant. Observational tests may be included in an upper extremity assessment battery so long as they are used appropriately.

Computerized Assessment Instruments

Computerized assessment tools must meet the same primary measurement requisites as noncomputerized instruments. Unfortunately, both patients and medical personnel tend to assume that computer-based equipment is more trustworthy than noncomputerized counterparts. This naive assumption is erroneous and predisposed to producing misleading information. In hand rehabilitation, some of the most commonly used noncomputerized evaluation tools have been or are being studied for instrument reliability and validity (the two most fundamental instrumentation criteria). However, at the time of this writing, none of the computerized hand evaluation instruments have been statistically proven to have intrainstrument and interinstrument reliability compared with NIST criteria. Some have “human performance” reliability statements, but these are based on the fatally flawed premise that human normative performance is equivalent to gold-standard NIST calibration criteria.2-3,5 Who would accept the accuracy of a weight set that had been “calibrated” by averaging 20 “normal” individuals’ abilities to lift the weights? Human performance is not an acceptable criterion for defining the reliability (calibration) of mechanical devices, including those used in upper extremity rehabilitation clinics.

Furthermore, one cannot assume that a computerized version of an instrument is reliable and valid because its noncomputerized counterpart has established reliability. For example, although some computerized dynamometers have identical external components to those of their manual counterparts, internally they have been “gutted” and no longer function on hydraulic systems. Reliability and validity statements for the manual hydraulic dynamometer are not applicable to the “gutted” computer version. Even if both dynamometers were hydraulic, separate reliability and validity data would be required for the computerized instrument.

The inherent complexity of computerized assessment equipment makes it difficult to determine instrument reliability without the assistance of qualified engineers, computer experts, and statisticians. Compounding the problem, stringent federal regulation often does not apply to “therapy devices.” Without sophisticated technical assistance, medical specialists and their patients have no way of knowing the true accuracy of the data produced by computerized therapy equipment.

Instrumentation Summary

Although many measurement instruments are touted as being “standardized,” most lack even the rudimentary elements of statistical reliability and validity,8,9 relying instead on normative statements such as means or averages. These norm-based tests fail to meet even the barest of instrumentation requisites, meaning they can substantiate neither their consistency of measurement nor their ability to measure the entity for which they were designed. Because relatively few evaluation tools fully meet standardization criteria, instrument selection must be predicated on satisfying as many of the previously mentioned requisites as possible.10 Hand/upper extremity assessment tools vary in their levels of reliability and validity according to how closely their inherent properties match the primary instrumentation requisites.

As consumers, medical specialists must require that all assessment tools have appropriate documentation of reliability and validity at the very least. Furthermore, “data regarding reliability [and validity]‡ should be available and should not be taken at face value alone; just because a manufacturer states reliability studies have been done, or a paper concludes an instrument is reliable, does not mean the instrument or testing protocol meets the requirements for scientific design.”11 Purchasing and using assessment tools that do not meet fundamental measurement requisites limits potential at all levels, from individual patients to the scope of the profession.

Functional Assessment Instruments

Handedness

Handedness is an essential component of upper extremity function. Traditionally, the patient self-report has been the most common method of defining hand preference in the upper extremity rehabilitation arena. Although hand preference tests with established reliability and validity have been used by psychologists to delineate cortical dominance for several decades,12,13 knowledge of these tests by surgeons and therapists is relatively limited. Recent studies show that the Waterloo Handedness Questionnaire (WHQ),13-15 a 32-item function-based survey with high reliability and validity, more accurately and more extensively defines hand dominance (Fig. 12-3) than does the patient self-report.16-17 The WHQ is inexpensive, simple and fast to administer, and easy to score. Patients respond positively to it, welcoming its user-friendly format and explicitness of individualized results. Better definition of handedness is important to clinicians and researchers alike, in that it improves treatment focus and outcomes on a day-to-day basis, and, through more precise research studies, it eventually enhances the professional body of knowledge. (Also see the Grip Assessment section of this chapter.)

Figure 12-3 The Waterloo Handedness Questionnaire (WHQ) provides a more accurate definition of handedness than does the traditionally used self-report.

Hand Strength

Grip Assessment

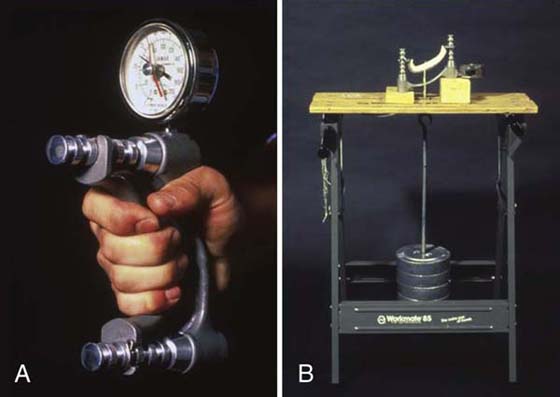

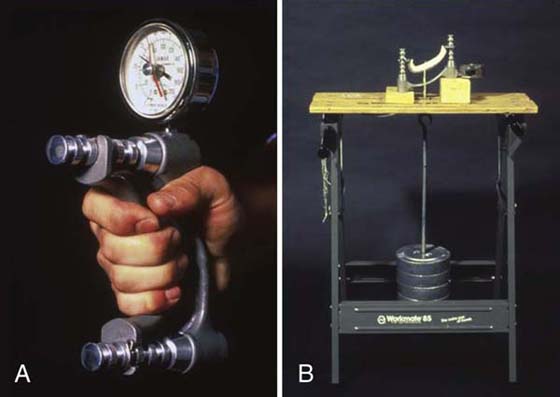

Grip strength is most often measured with a commercially available hydraulic Jamar dynamometer (Fig. 12-4), although other dynamometer designs are available.18-22 Developed by Bechtol23 and recommended by professional societies,24-26 the Jamar dynamometer has been shown to be a reliable test instrument,27 provided calibration is maintained and standard positioning of test subjects is followed.28-34

Figure 12-4 A, The hydraulic Jamar dynamometer has excellent reliability when calibrated correctly. B, A calibration testing station is easy to set up and will pay for itself over time. The most expensive components of the testing station are the F-tolerance test weights. The hook-weight combination is drill-calibrated to match the other weights, eliminating the need to subtract the weight of the hook.

In an ongoing instrument reliability study, of over 200 used Jamar and Jamar design dynamometers evaluated by the author, 51% passed the requisite +0.9994 correlation criterion compared with NIST F-tolerance test weights (government certified high-caliber test weights). Of these (0.9994 and above), 27% needed minor faceplate adjustments to align their read-out means with the mean readings of the standardized test weights. Of 30 brand new dynamometers, 80% met the correlation criterion of +0.9994. Interestingly, two Jamar dynamometers were tested multiple times over more than 12 years with less than a 0.0004 change in correlation, indicating that these instruments do maintain their calibration if carefully used and stored.35
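A calibration check of this kind can be sketched as a correlation between known test weights and the dynamometer read-outs, followed by a comparison of means. This is only an illustration under stated assumptions: the author's exact procedure is not described here, the weights and readings below are hypothetical, and only the +0.9994 criterion comes from the text.

```python
from math import sqrt

CRITERION_R = 0.9994  # correlation criterion quoted in the text

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def check_calibration(test_weights, readings):
    """Return (correlation, mean read-out minus mean test weight)."""
    r = pearson(test_weights, readings)
    mean_offset = sum(readings) / len(readings) - sum(test_weights) / len(test_weights)
    return r, mean_offset

# Hypothetical test weights (pounds) and the corresponding dial readings.
test_weights = [20, 40, 60, 80, 100, 120]
readings = [23.0, 43.5, 62.5, 83.0, 103.5, 122.5]

r, mean_offset = check_calibration(test_weights, readings)
print(f"correlation with test weights: {r:.5f}")
print(f"meets criterion: {r >= CRITERION_R}")
print(f"mean read-out offset: {mean_offset:+.1f} lb")
```

In this hypothetical case the instrument tracks the weights closely enough to meet the correlation criterion, but its read-outs sit about 3 pounds high on average, which is the kind of discrepancy a faceplate adjustment is meant to correct. A high correlation therefore does not by itself show that the read-out means are aligned.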

Test procedure is important. In 1978 and 1983, the American Society for Surgery of the Hand recommended that the second handle position be used in determining grip strength and that the average of three trials be recorded.24,36 In contrast to the recommended mean of three grip trials, one maximal grip is reported to be equally reliable in a small cohort study.37 Deviation from recommended protocol should be undertaken with caution in regard to the mean-of-three-trials criterion, which is commonly utilized in research studies. More information is needed before adopting the one-trial method.

Of importance is the concept that grip changes according to size of object grasped. Normal adult grip values for the five consecutive handle positions consistently create a bell-shaped curve, with the first position (smallest) being the least advantageous for strong grip, followed by the fifth and fourth positions; strongest grip values occur at the third and second handle positions.23,38 If inconsistent handle positions are used to assess patient progress, normal alterations in grip scores may be erroneously interpreted as advances or declines in progress. Fatigue is not an issue for the three-trial test procedure, but may become a factor when recording grip strengths using all five handle positions (total of 15 trials with 3 trials at each position).39 A 4-minute rest period between handle positions helps control potential fatigue effect.40 Although a 1-minute rest between sets was reported to be sufficient to avoid fatigue, this study was conducted using a dynamometer design instrument whose configuration is different from that of the Jamar dynamometer.41 Percent of maximal voluntary contraction (MVC) required is also important in understanding normal grip strength and fatigue.42 For example, it is possible to sustain isometric contraction at 10% MVC for 65 minutes without signs of muscle fatigue.43 Although Young40 reported no significant difference in grip scores between morning and night, his data collection times were shorter compared to those of other investigators who recommend that time of day should be consistent from trial to trial.23,44
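The mean-of-three-trials convention and the effect of handle position can be illustrated with a small sketch. The trial values below are hypothetical and were chosen only to reproduce the bell-shaped pattern described above; the helper name `change_in_grip` is likewise an assumption for this example.

```python
from statistics import mean

# Hypothetical grip trials in pounds: three trials at each of five handle positions.
trials_by_position = {
    1: [62, 60, 61],
    2: [95, 97, 96],
    3: [92, 93, 91],
    4: [80, 82, 81],
    5: [70, 69, 71],
}

# Record the mean of three trials at each handle position.
mean_by_position = {pos: mean(trials) for pos, trials in trials_by_position.items()}
strongest = max(mean_by_position, key=mean_by_position.get)
weakest = min(mean_by_position, key=mean_by_position.get)

def change_in_grip(baseline, follow_up):
    """Difference in mean grip between two sessions at the same handle position.

    Each session is a (handle_position, [trial1, trial2, trial3]) pair.
    """
    base_pos, base_trials = baseline
    next_pos, next_trials = follow_up
    if base_pos != next_pos:
        # Scores from different handle positions are not comparable.
        raise ValueError("sessions used different handle positions")
    return mean(next_trials) - mean(base_trials)

print(mean_by_position)
print(f"strongest position: {strongest}, weakest position: {weakest}")
print(change_in_grip((2, [40, 42, 41]), (2, [46, 47, 45])))
```

Refusing to compare sessions recorded at different handle positions guards against reading the normal position-to-position variation as patient progress or decline.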

Better definition of handedness directly influences grip strength. Using the WHQ, Lui and Fess found a consistent polarization pattern with greater differences between dominant and nondominant grip strengths in normal subjects with WHQ classifications of predominantly left or right preference versus those who were ambidextrous or with slight left or right preferences. This polarization pattern was especially apparent in the second Jamar handle position.45

Norms for grip strength are available,46-49 but several of these studies involve altered Jamar dynamometers or other types of dynamometers.50-54 Independent studies refute the often cited 10% rule for normal subjects,55 with reports finding that the minor hand has a range of equal to or stronger than the major hand in up to 31% of the normal population.54,56,57 The “10% rule” also is not substantiated when the WHQ is used to define handedness.45 Grip has been reported to correlate with height, weight, and age,23,54,56,58 and socioeconomic variables such as participation in specific sports or occupations also influence normal grip.59,60 Grip strength values lower than normal are predictive of deterioration and disability in elderly populations.61-67 It is important to note that the Mathiowetz normative data reported for older adults48 may be “up to 10 pounds lower than they should be.”68 A 2005 meta-analysis by Bohannon and colleagues that combined normative values from 12 studies that used Jamar dynamometers and followed American Society of Hand Therapists (ASHT) recommended testing protocols may be the most useful reference for normative data to date.69 In a 2006 study, Bohannon and coworkers also conducted a meta-analysis for normative grip values of adults 20 to 49 years of age.70

Although grip strength is often used clinically to determine sincerity of voluntary effort, validity of its use in identifying submaximal effort is controversial, with studies both supporting71-76 and refuting its appropriateness.77-82 Niebuhr83 recommends use of surface electromyography in conjunction with grip testing to more accurately determine sincerity of effort. The rapid exchange grip test,84,85 a popular test for insincere effort, has been shown to have problems with procedure and reliability;86,87 and even with a carefully standardized administration protocol, its validity is disputed due to low sensitivity and specificity.86,88,89

Reduced hand grip strength is often reported as an indicator of poor nutritional status. Unfortunately, the majority of the studies supporting this association have been conducted using grip instruments other than the Jamar or Jamar-like dynamometers.90-93 Furthermore, very few of these studies address calibration methods, rendering their results uncertain.

Bowman and associates reported that the presence or absence of the fifth finger flexor digitorum superficialis (FDS) significantly altered grip strength in normal subjects, with grip strength of the FDS-absent group being nearly 7 pounds less than the FDS-common group, and slightly more than 8 pounds less than the FDS-independent group.94

The Jamar’s capacity as an evaluation instrument, the effects of protocol, and the ramification of its use have been analyzed by many investigators over the years with mixed, and sometimes conflicting, results. Confusion is due in large part to the fact that the vast majority of studies reported have relied on nonexistent, incomplete, or inappropriate methods for checking instrument accuracy of the dynamometers used in data collection. A second and more recent development is the ability to better define handedness using the WHQ. Scientific inquiry is both ongoing and progressive as new information is available. Although past studies provide springboards and directions, it is important to understand that all grip strength studies need to be reevaluated using carefully calibrated instruments and in the context of the more accurate definition of handedness provided by the WHQ.

Other grip strength assessment tools need to be ranked according to stringent instrumentation criteria, including longitudinal effects of use and time. Although spring-load instruments or rubber bulb/bladder instruments (Fig. 12-5) may demonstrate good instrument reliability when compared with corresponding NIST criteria, both these categories of instruments exhibit deterioration with time and use, rendering them inaccurate as assessment tools.

Figure 12-5 Rubber or spring dynamometer components deteriorate with use and over time, rendering the dynamometers useless as measurement devices.

Pinch Assessment

Reliability of commercially available pinchometers needs thorough investigation. Generally speaking, hydraulic pinch instruments are more accurate than spring-loaded pinchometers (Fig. 12-6). A frequently used pinchometer in the shape of an elongated C with a plunger dial on top is, mechanically speaking, a single large spring, in that its two ends are compressed toward each other against the counter force of the single center C spring. This design has inherent problems in terms of instrument reliability.95

Figure 12-6 A hydraulic pinchometer (right) is consistently more reliable than the commonly used, elongated-C-spring design pinchometer (left). This is because the C-spring design pinchometer (left) is actually one large spring that is compressed as a patient pinches its two open ends together.

Three types of pinch are usually assessed: (1) prehension of the thumb pulp to the lateral aspect of the index middle phalanx (key, lateral, or pulp to side); (2) pulp of the thumb to pulps of the index and long fingers (three-jaw chuck, three-point chuck); and (3) thumb tip to the tip of the index finger (tip to tip). Lateral is the strongest of the three types of pinch, followed by three-jaw chuck. Tip to tip is a positioning pinch used in activities requiring fine coordination rather than power. As with grip measurements, the mean of three trials is recorded, and comparisons are made with the opposite hand. Better definition of handedness via the WHQ improves understanding of the relative value of dominant and nondominant pinch strength. Casanova and Grunert96 describe an excellent method of classifying pinch patterns based on anatomic areas of contact. In an extensive literature review, they found more than 300 distinct terms for prehension. Their method of classification avoids colloquial usage and eliminates confusion when describing pinch function.
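The recording convention described above (mean of three trials, compared with the opposite hand) can be illustrated with a short sketch. The function name, the kilogram readings, and the percentage-of-opposite-hand summary are assumptions made for illustration only; they are not part of any published protocol.

```python
from statistics import mean


def pinch_summary(involved_trials, opposite_trials):
    """Average three trials per hand and express the involved hand
    as a percentage of the opposite hand (illustrative summary only)."""
    if len(involved_trials) != 3 or len(opposite_trials) != 3:
        raise ValueError("expected exactly three trials per hand")
    involved_mean = mean(involved_trials)
    opposite_mean = mean(opposite_trials)
    if opposite_mean <= 0:
        raise ValueError("opposite-hand mean must be positive")
    percent_of_opposite = 100.0 * involved_mean / opposite_mean
    return involved_mean, opposite_mean, percent_of_opposite


# Hypothetical lateral pinch readings in kilograms.
involved, opposite, percent = pinch_summary([6.0, 6.5, 7.0], [8.0, 8.5, 9.0])
print(f"involved mean: {involved:.1f} kg")
print(f"opposite mean: {opposite:.1f} kg")
print(f"involved as percent of opposite: {percent:.1f}%")
```

Such a summary is only as trustworthy as the pinchometer that produced the readings, so instrument accuracy should be checked before the numbers are interpreted.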

Sensibility Function

Of the five hierarchical levels of sensibility testing ([1] autonomic/sympathetic response, [2] detection, [3] discrimination, [4] quantification, and [5] identification),97,98 only the final two levels include functional assessments. (See Chapter 11 for details regarding testing in the initial three levels.) At this time, no standardized tests are available for these two levels, although their concepts are used frequently in sensory reeducation treatment programs. However, several observation-based assessments are used clinically.

Quantification is the fourth hierarchical level of sensory capacity. This level involves organizing tactile stimuli according to degree. A patient may be asked to rank several object variations according to tactile properties, including, but not limited to, roughness, irregularity, thickness, weight, or temperature. An example of a quantification level functional sensibility assessment, Barber’s series of dowel rods covered with increasingly rougher sandpapers requires patients to rank the dowel rods from smoothest to roughest with vision occluded. For more detail, the reader is referred to Barber’s archived chapter on the companion Web site of this text.

Identification, the final and most complicated sensibility level, involves the ability to recognize objects through touch alone.

The Moberg Picking-Up Test is useful both as an observational test of gross median nerve function and as an identification test. Individuals with median nerve sensory impairment tend to ignore or avoid using their impaired radial digits, switching instead to the ulnar innervated digits with intact or less impaired sensory input, as they pick up and place small objects in a can. This test is frequently adapted to assess sensibility identification capacity by asking patients, without using visual cues, to identify the small objects as they are picked up. A commercial version of the Moberg Picking-Up Test is available. Currently, the Moberg is an observational test only. It meets none of the primary instrumentation requisites, including proof of reliability and validity, and most noncommercial versions, which are assembled from a random assortment of commonly found small objects, lack even the simplest of equipment standards.

Daily Life Skills

Traditionally, the extent to which daily life skills (DLS) are assessed has depended on the type of clientele treated by various rehabilitation centers. For example, facilities oriented toward treatment of trauma injury patients required less extensive DLS evaluation and training than centers specializing in treatment of arthritis patients. However, with current emphasis on patient satisfaction reporting, it is apparent that more extensive DLS evaluation is needed to identify specific factors that are individual and distinct to each patient and patient population.

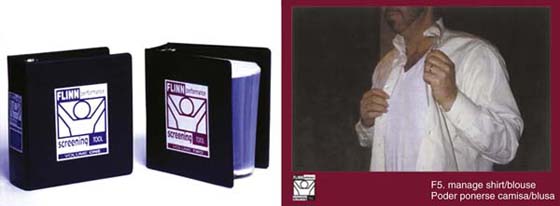

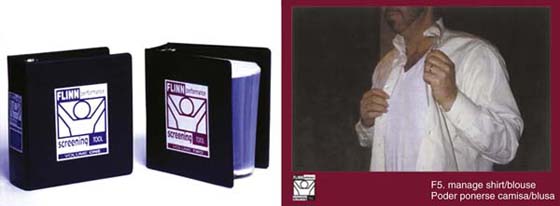

The Flinn Performance Screening Tool (FPST)99 is important because of its excellent test–retest reliability (92% of the items: Kendall’s τ > 0.8 and 97% agreement) and because it continues to be tested and upgraded over time (Fig. 12-7). The FPST allows patients to work independently of the evaluator in deciding what tasks they can and cannot perform. The fact that this test is not influenced by the immediate presence of an administrator is both important and unusual. Busy clinicians may prejudge or assume patient abilities based on previous experience with similar diagnoses, and some patients hesitate to disclose personal issues. With the FPST, the potential for therapist bias is eliminated, and issues such as patient lack of disclosure due to social unease are reduced. Patients peruse and rank the cards on their own without extraneous influence. The FPST consists of three volumes of over 300 laminated daily activity photographs that have been tested and retested for specificity and sensitivity of task. Volume 1 assesses self-care tasks; volume 2 evaluates home and outside activities; volume 3 relates to work activities. This test represents a major step toward defining function in a scientific manner.

Figure 12-7 With a high reliability rating, the Flinn Performance Screening Tool (FPST) consists of a series of laminated photographs of activities of daily living and instrumental activities of daily living tasks from which patients identify activities that are problematic. Patients work independently, thereby avoiding potential evaluator bias or inhibiting societal influence. A, Volumes 1 and 2. B, One of more than 300 laminated photo cards contained in volumes 1–3.
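For readers unfamiliar with the statistics quoted for the FPST, the following sketch shows how Kendall’s τ (the tie-corrected τ-b form) and simple percent agreement might be computed for one item rated on two occasions. The function names and the ratings are hypothetical, and this is not a reproduction of the analysis performed by the test’s developers.

```python
from math import sqrt


def kendall_tau_b(first, second):
    """Kendall's tau-b between two equal-length lists of ordinal ratings."""
    n = len(first)
    if n != len(second) or n < 2:
        raise ValueError("need two equal-length lists with at least two ratings")
    concordant = discordant = ties_first = ties_second = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            dx = first[i] - first[j]
            dy = second[i] - second[j]
            if dx == 0:
                ties_first += 1      # pair tied in the first session
            if dy == 0:
                ties_second += 1     # pair tied in the second session
            if dx != 0 and dy != 0:
                if dx * dy > 0:
                    concordant += 1
                else:
                    discordant += 1
    total_pairs = n * (n - 1) // 2
    denominator = sqrt((total_pairs - ties_first) * (total_pairs - ties_second))
    if denominator == 0:
        raise ValueError("tau-b is undefined when all ratings in a session are tied")
    return (concordant - discordant) / denominator


def percent_agreement(first, second):
    """Percentage of ratings that are identical across the two sessions."""
    matches = sum(1 for a, b in zip(first, second) if a == b)
    return 100 * matches / len(first)


# Hypothetical ratings of one item by five patients, test and retest.
session_1 = [1, 2, 3, 4, 5]
session_2 = [1, 2, 3, 5, 4]
tau = kendall_tau_b(session_1, session_2)
agreement = percent_agreement(session_1, session_2)
print(f"tau-b = {tau:.2f}, agreement = {agreement:.0f}%")
```

Rank correlation and percent agreement answer different questions: the first reflects whether the ordering of ratings is preserved, the second whether the ratings are identical, which is presumably why both are reported.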

Manual Dexterity and Coordination

Tests that assess dexterity and coordination are available in several levels of difficulty, allowing selection of instruments that best suit the needs and abilities of individual patients. As noted earlier, when using a standardized test instrument, it is imperative not to deviate from the method, equipment, and sequencing described in the test instructions. Test calibration, reliability, and validity are determined using very specific items and techniques, and any change in the stipulated pattern renders resultant data invalid and meaningless. Using a standardized test as a teaching or training device in therapy also excludes its use as an assessment instrument, due to skewing of data.

Jebsen Taylor Hand Function Test

Of the tests available, the Jebsen Taylor Hand Function Test100 requires the least amount of extremity coordination, is inexpensive to assemble, and is easy to administer and score. The Jebsen consists of seven subtests: (1) writing, (2) card turning, (3) picking up small objects, (4) simulated feeding, (5) stacking, (6) picking up large lightweight objects, and (7) picking up large heavy objects. Originally developed for use with patients with rheumatoid arthritis,100,101 the Jebsen has been used to assess aging adults,102 hemiplegic persons,103 children,104 and patients with wrist immobilization,105 among others. Jebsen norms are categorized according to maximum time, hand dominance, age, and gender.106 Rider and Linden4 report a statistically significant difference in times with substitution of plastic checkers for the original wooden ones, and a trend of faster times with use of larger paper clips than originally described; either substitution invalidates the test. Equipment for standardized tests cannot be substituted or altered from that of the original test unless the test is restandardized completely with the new equipment. Capacity to measure gross coordination makes the Jebsen test an excellent instrument for assessing individuals whose severity of impairment precludes use of many other coordination tests, which often require very fine prehension patterns. The Jebsen is commercially available, but, before being used, this commercial format must be assessed thoroughly to ensure that it meets the original equipment standards and procedures.

Minnesota Rate of Manipulation Tests

Based on placing blocks into spaces on a board, the Minnesota Rate of Manipulation Tests (MRMT) include five activities: (1) placing, (2) turning, (3) displacing, (4) one-hand turning and placing, and (5) two-hand turning and placing. Originally designed for testing personnel for jobs requiring arm–hand dexterity, the MRMT is another excellent example of a test that measures gross coordination and dexterity, making it applicable to many of the needs encountered in hand/upper extremity rehabilitation. Norms for this instrument are based on more than 11,000 subjects. Unfortunately, some of the commercially available versions of the MRMT are made of plastic. Reliability, validity, and normative data were established on the original wooden version of the test and are not applicable to the newer plastic design. Essentially, the plastic MRMT is an unknown whose reliability, validity, and normative investigation must be established before it may be used as a testing instrument.

Purdue Pegboard Test

Requiring prehension of small pins, washers, and collars, the Purdue Pegboard Test107 evaluates finer coordination than the two previous dexterity tests. Subcategories for the Purdue are (1) right hand, (2) left hand, (3) both hands, (4) right, left, and both, and (5) assembly. Normative data are presented in categories based on gender and job type: male and female applicants for general factory work, female applicants for electronics production work, male utility service workers, among others. Normative data are also available for 14- to 19-year-olds108 and for ages 60 and over.109 Reddon and colleagues110 found a learning curve for some subtests when the Purdue was given five times at weekly intervals, reinforcing the concept that standardized tests should not be used as training devices for patients.

In terms of psychomotor taxonomy, all of the previously described tests assess activities that are classified as skilled movements, with the exception of the Jebsen’s task of picking up small objects with a spoon. Skilled movements involve picking up and manipulation of objects using finger–thumb interaction. At this level, tool usage is not involved in the testing process. In contrast, compound adaptive skills, the next higher psychomotor level, incorporate tool use, a more difficult proficiency.

Crawford Small Parts Dexterity Test

Evaluating compound adaptive skills, the Crawford Small Parts Dexterity Test adds another dimension to hand function assessment by introducing tools into the test protocol. Increasing the level of difficulty, this test requires patients to control implements in addition to their hands and fingers. The Crawford involves use of tweezers and a screwdriver to assemble pins, collars, and small screws on the test board. It relates to activities requiring very fine coordination, such as engraving, watch repair and clock making, office machine assembly, and other intricate skills.

O’Connor Peg Board Test

The O’Connor Peg Board111 test also requires tool manipulation to place small pegs on a board.

Nine-Hole Peg Test

Although frequently used in clinics, the Nine-Hole Peg Test lacks all the primary instrumentation requisites, including the critical elements of proof of reliability and validity. This “test” does have reported normative values, but because it does not meet the primary instrumentation criteria, it is useless as a measurement tool.

With the exception of the Nine-Hole Peg Test, all of the previously mentioned dexterity and coordination tests meet all of the primary and secondary instrumentation requisites. Other hand-coordination and dexterity tests are available. Before being used to assess patients, these tests should be carefully evaluated in terms of the primary, secondary, and optional instrumentation requisites discussed earlier in this chapter to ensure they have been proved to measure appropriately and accurately.

Work Hardening

Work-hardening tests span a wide range in terms of meeting instrumentation criteria, with many falling into the category of specific item or task longitudinal tests designed specifically to meet the needs of an individual patient.

Valpar Component Work Samples

On the sophisticated end of the continuum, the Valpar Work Sample consists of 19 work samples, each of which meets all the criteria for a standardized test. The individual tests may be used alone or in multiple groupings depending on patient requirements. The work samples may also be administered in any order. With the exception of the Valpar Work Samples, many work-hardening tests are not standardized.

Baltimore Therapeutic Equipment Work Simulator

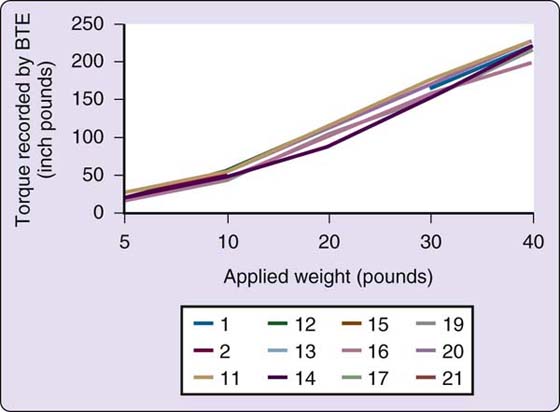

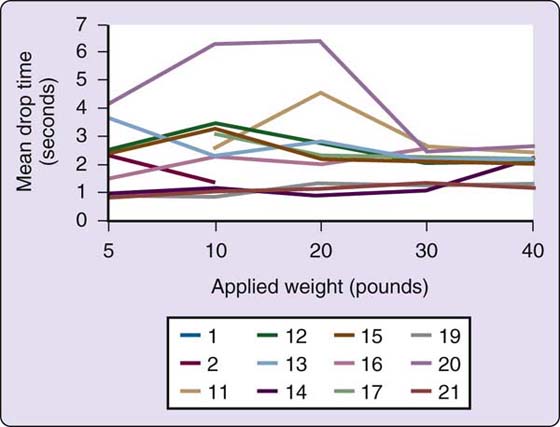

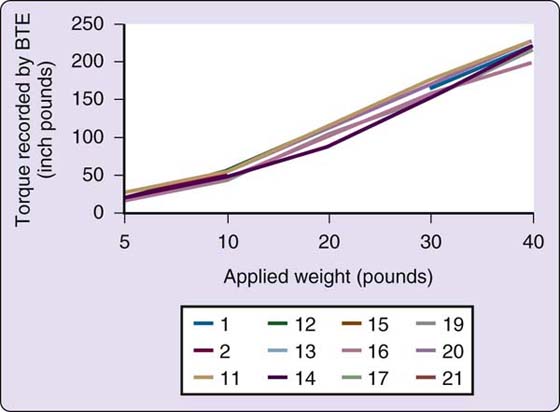

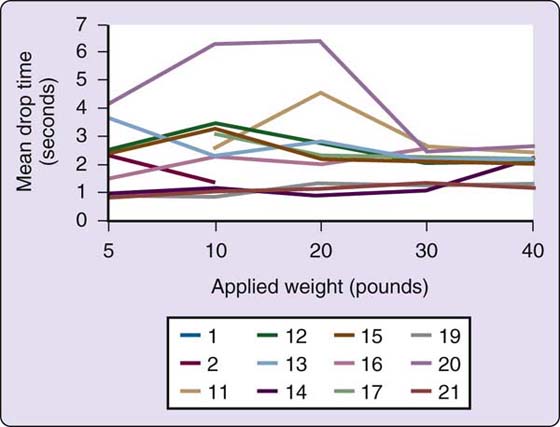

The Baltimore Therapeutic Equipment Work Simulator (BTEWS) employs static and dynamic modes to produce resistance to an array of tools and handles that are inserted into a common exercise head. Although the basic concept of this machine is innovative and the static mode has been shown to be accurate with high reliability correlation coefficients, the dynamic mode has problems producing consistent resistance. Coleman and coworkers found the dynamic mode resistance to vary widely both within machines and between machines, making the Work Simulator inappropriate for assessment when consistent resistance is required (Fig. 12-8).112-114 Because of the fluctuating and unpredictable dynamic mode resistance changes, which are not accurately reflected by the computer printout (Figs. 12-9 and 12-10), caution should be used in allowing patients with acute injuries, geriatric patients, pediatric patients, patients with inflammatory problems such as rheumatoid arthritis, patients with unstable vascular systems, or patients with impaired sensibility to exercise on this machine. Longitudinal pre-BTEWS and post-BTEWS volumetric measurements may be helpful in identifying patients whose inflammatory response to working in the Work Simulator dynamic mode may be progressively increasing.

Figure 12-8 Designed by engineers, this method of testing the consistency of resistance of the Baltimore Therapeutic Equipment Work Simulator dynamic mode is more appropriate and more accurate than static weight suspension or human performance data. With the Work Simulator dynamic mode resistance set at 92% of the applied weights, when the pin is pulled, the wheel begins to rotate one revolution against the preset Simulator resistance and the weights are lowered to the floor in a controlled descent. If the resistance remains constant, the timed descent of the weights remains constant both within and between weight drop trials. If, however, the resistance fluctuates, the timed descent of the weights is inconsistent within and between trials. In many of the Work Simulators, resistance surges produced by the Simulator were sufficiently strong to halt the descent of 20 to 40 pounds of weight in midair. Other times the weights crashed unexpectedly to the floor when Simulator resistance suddenly decreased. (Coleman EF, Renfro RR, Cetinok EM, et al. Reliability of the manual dynamic mode of the Baltimore Therapeutic Equipment Work Simulator. J Hand Ther. 1996;9[3]:223–237.)

Figure 12-9 A composite of the Work Simulators tested, these are the reports generated by the Work Simulator dynamic mode printouts of the resistance (torque) produced by Simulator dynamic modes. The Simulator printouts reported output data that closely mirrored the input resistance set by the engineer researchers. (Coleman EF, Renfro RR, Cetinok EM, et al. Reliability of the manual dynamic mode of the Baltimore Therapeutic Equipment Work Simulator. J Hand Ther. 1996;9[3]:223–237.)

Figure 12-10 In contrast to the printout reports in Figure 12-9, the timed weight drops against Simulator-produced resistance indicate large inconsistencies in resistances created by the various dynamic modes of the Work Simulators. Furthermore, Simulator-generated printout reports did not accurately reflect the actual resistances produced by the Simulator dynamic modes. (Coleman EF, Renfro RR, Cetinok EM, et al. Reliability of the manual dynamic mode of the Baltimore Therapeutic Equipment Work Simulator. J Hand Ther. 1996;9[3]:223–237.)

Interestingly, a review of reliability studies involving the Work Simulator identifies a pattern that is all too often found with mechanical rehabilitation devices, in that multiple studies were conducted and reported using human performance to establish its reliability as an assessment instrument.115-121 Human performance is not an appropriate indicator of accuracy or calibration for mechanical devices using NIST criteria. Furthermore, the recommended method for “calibrating” Work Simulators involves a static process of weight suspension that is incongruous with assessing dynamic mode resistance. Later, when a team of engineers evaluated the consistency of resistance of the dynamic mode according to NIST standards and employing an appropriate dynamic measurement method, reliability and accuracy of the dynamic mode were found to be seriously lacking.113
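The logic of the timed weight-drop check described in Figure 12-8 (constant resistance should yield constant descent times within and between trials) can be sketched as a simple variability calculation. The machine labels, descent times, and the 5% coefficient-of-variation threshold below are all hypothetical assumptions chosen for illustration; they are not values from the Coleman study or from NIST.

```python
from statistics import mean, stdev


def coefficient_of_variation(times):
    """Sample standard deviation of descent times as a percentage of their mean."""
    if len(times) < 2:
        raise ValueError("need at least two timed descents")
    average = mean(times)
    if average <= 0:
        raise ValueError("descent times must be positive")
    return 100.0 * stdev(times) / average


def flag_inconsistent(trials_by_machine, max_cv_percent=5.0):
    """Return machines whose descent-time variability exceeds the
    (arbitrary, illustrative) threshold, with their CV values."""
    flagged = {}
    for machine, times in trials_by_machine.items():
        cv = coefficient_of_variation(times)
        if cv > max_cv_percent:
            flagged[machine] = cv
    return flagged


# Hypothetical descent times in seconds for repeated weight drops.
trials = {
    "machine_a": [4.0, 4.1, 3.9, 4.0],   # steady resistance
    "machine_b": [3.0, 6.5, 4.2, 9.1],   # surging and collapsing resistance
}
flagged = flag_inconsistent(trials)
for machine, cv in flagged.items():
    print(f"{machine}: descent-time CV {cv:.1f}% exceeds threshold")
```

The point of the sketch is that the machine itself is measured against a physical standard (falling weights and a timer) rather than against human performance, which varies for reasons unrelated to the device.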

A second-generation “work simulator,” the BTE Primus, is currently on the market, along with similar machines from other companies. The Primus drops the terminology of “static” and “dynamic” modes, opting instead for isometric, isotonic, and isokinetic resistance and continuous passive motion modes. No NIST-based reliability information for the modes in which patients work against preset resistances is available for any of these second-generation machines including the Primus.122-125 Before the Primus may be used as an assessment instrument, or for that matter, a treatment tool, the Coleman and associates113 engineering methods used to evaluate the BTEWS should be employed to fully identify the consistency of resistance produced by its resistance modes. Unfortunately, the Coleman and associates NIST-based engineering study of the BTEWS is not included in the Primus operator’s manual bibliography.

Assessment of a patient’s potential to return to work is based on a combination of standardized and observational tests, knowledge of the specific work situation, insight into the patient’s motivational and psychological resources, and understanding of the complexities of normal and disabled hands and upper extremities in general. Although its importance has been acknowledged in the past, vocational assessment of the patient with an upper extremity injury is now given a higher priority in most of the major hand rehabilitation centers throughout the country. Treatment no longer ends with achievement of skeletal stability, wound healing, and a plateau of motion and sensibility. This shift in emphasis has been the result, in large part, of the contributions of the Philadelphia Hand Center.

Patient Satisfaction

Testing patient satisfaction has become an integral part of rehabilitation endeavors.126,127 Just as other test instruments must meet primary instrumentation requisites, so too must patient satisfaction assessment tools,128-130 which are often in the format of patient self-report questionnaires.

Current symptom or satisfaction tools used in evaluating patients with upper extremity injury or dysfunction include the Medical Outcomes Study 36-Item Health Survey (SF-36), the Disabilities of the Arm, Shoulder, and Hand (DASH),131-150 the QuickDASH, and the Michigan Hand Outcomes Questionnaire (MHQ).151,152 In contrast to the DASH instruments, the MHQ takes into consideration the important factors of hand dominance and hand/upper extremity cosmesis.151-160 These assessment tools have been extensively tested and meet all the primary instrumentation requisites. They are also available in many languages.

Diagnosis-Specific Tests of Function

Rheumatoid Arthritis

Arthritis literature is replete with dexterity and coordination tests, due in no small part to the numerous research studies involving outcomes of pharmacologic interventions. Some of the more frequently used assessments include the Sequential Occupational Dexterity Assessment (SODA), the Sollerman Test of Hand Grip, the Arthritis Hand Function Test, the Grip Ability Test, the Keitel Function Test, and the previously mentioned Jebsen Taylor Hand Function Test.

In contrast to these sophisticated coordination tests is the ubiquitous use of sphygmomanometers to assess grip strength. Despite a 1999 engineering report by Unsworth and colleagues161 that found “bags of different diameter and volume were seen to give statistically different pressure readings when squeezed by the same subjects,” the blood pressure cuff remains a common tool for evaluating grip strength in contemporary rheumatoid arthritis literature.

Stroke

The Wolf Motor Function Test (WMFT)162-171 assesses motor function in adults with hemiplegia due to stroke or traumatic brain injury. Although the original WMFT had 21 tasks, the adapted WMFT is shortened to 15 functional tasks and “includes items that cover a range of movements that can be evaluated within a realistic time period for both clinical and research purposes.” With coefficients ranging from 0.86 to 0.99, the WMFT-15 has good interrater and intrarater agreement, internal consistency, and test–retest reliability. A video demonstration of the WMFT may be found at www.youtube.com/watch?y=SIJK88NdZM.

Used in constraint-induced movement therapy, the original Motor Activity Log (MAL)172-176 was designed as a structured interview to assess how hemiparetic poststroke individuals use their more involved upper extremity in real-world activities. The MAL is divided into two components: amount of use (AOU) and quality of movement (QOM), during activities of daily living. The MAL is “internally consistent and relatively stable in chronic stroke patients not undergoing intervention.” However, its longitudinal construct validity is in doubt. The Motor Activity Log-14, a shortened version of the MAL, corrects many of the inconsistencies of the original MAL.174

Carpal Tunnel Syndrome

The Severity of Symptoms and Functional Status in Carpal Tunnel Syndrome questionnaire is an outcomes tool that was developed specifically to assess carpal tunnel syndrome symptoms177-183 using a combination of impairment assessment tools, patients’ self-reports of their symptoms, and their abilities to accomplish a few specific functional tasks that are problematic for those suffering from median nerve compression at the wrist.

Summary

Evaluation with instruments that measure accurately and truthfully allows physicians and therapists to correctly identify hand/upper extremity pathology and dysfunction, assess the effects of treatment, and realistically apprise patients of their progress. Accurate assessment data also permit analysis of treatment modalities for effectiveness, provide a foundation for professional communication through research, and eventually influence the scope and direction of the profession as a whole. Because of their relationship to the kind of information obtained, assessment tools should be chosen carefully. The choice of tools directly influences the quality of individual treatment and the degree of understanding between hand specialists. Criteria exist for identifying dependable instruments for accurate measurement when used by different evaluators and from session to session. Unless the results of a “home-brewed” test are statistically analyzed, the test is tried on large numbers of normal subjects, and the results are analyzed again, it is naïve to assume that such a test provides meaningful information. In the future, currently utilized tools may be better understood through checking their reliability and validity levels with bioengineering technology,176 and statisticians may be of assistance in devising protocols that will lead to more refined and accurate information. We as hand specialists have a responsibility to our patients and our colleagues to continue to critique the instruments we use in terms of their capacities as measurement tools. Without assessment, we cannot treat, we cannot communicate, and we cannot progress.

REFERENCES

1. Fess E. Guidelines for evaluating assessment instruments. J Hand Ther. 1995;8:144–148.

2. Fess E. Research for the clinician: human performance—an appropriate measure of instrument reliability? J Hand Ther. 1997;10:46–47.

3. Fess E. Research for the clinician: why trial-to-trial reliability is not enough. J Hand Ther. 1994;7:290.

4. Rider B, Linden C. Comparison of standardized and non-standardized administration of the Jebsen Hand Function Test. 1, 1988.

5. Fess E. Research for the clinician: how to avoid being misled by statements of average. J Hand Ther. 1994;7:193–194.

6. Loong TW. Understanding sensitivity and specificity with the right side of the brain. BMJ. 2003;327(7417):716–719.

7. Sensitivity & Specificity (SnNouts and SpPins). http://www.cebm.utoronto.ca/glossary/spsnPrint.html.

8. Fess E. Research for the clinician: using research terminology correctly—reliability. J Hand Ther. 1988;1(3):109.

9. Fess E. Research for the clinician: using research terminology correctly—validity. J Hand Ther. 1988;1(4):148.

10. Fess EE. The need for reliability and validity in hand assessment instruments. J Hand Surg Am. 1986;11(5):621–623.

11. Bell-Krotoski J. Advances in sensibility evaluation. Hand Clin. 1991;7(3):527–546.

12. McMeekan E, Lishman W. Retest reliabilities and interrelationship of the Annett hand preference questionnaire and the Edinburgh handedness inventory. Br J Psychol. 1975;66:53–59.

13. Steenhuis RE, Bryden MP, Schwartz M, Lawson S. Reliability of hand preference items and factors. J Clin Exp Neuropsychol. 1990;12(6):921–930.

14. Peters M, Murphy K. Cluster analysis reveals at least three, and possibly five distinct handedness groups. Neuropsychologia. 1992;30(4):373–380.

15. Tan U. Normal distribution of hand preference and its bimodality. Int J Neurosci. 1993;68(1-2):61–65.

16. Kersten K, et al. A Correlational Study between Self-Reported Hand Preference and Results of the Waterloo Handedness Questionnaire. New York: Columbia University, Occupational Therapy; 1995.

17. Lui P, Fess E. Establishing hand dominance: self-report versus the Waterloo Handedness Questionnaire. J Hand Ther. 2009;12(3):238–239.

18. Aveque C, Mugnier C, Jeay F, Moutet F. [The evaluation of the Artem grip in its function assessment]. Ann Chir Main Memb Super. 1994;13(5):334–344.

19. Fraser A, Vallow J, Preston A, Cooper RG. Predicting ‘normal’ grip strength for rheumatoid arthritis patients. Rheumatology (Oxford). 1999;38(6):521–528.

20. LaStayo P, Hartzel J. Dynamic versus static grip strength: how grip strength changes when the wrist is moved, and why dynamic grip strength may be a more functional measurement. J Hand Ther. 1999;12(3):212–218.

21. Lindahl OA, Nyström A, Bjerle P, Boström A. Grip strength of the human hand–measurements on normal subjects with a new hand strength analysis system (Hastras). J Med Eng Technol. 1994;18(3):101–103.

22. Walamies M, Turjanmaa V. Assessment of the reproducibility of strength and endurance handgrip parameters using a digital analyser. Eur J Appl Physiol Occup Physiol. 1993;67(1):83–86.

23. Bechtol C. Grip test: use of a dynamometer with adjustable handle spacing. J Bone Joint Surg [Am]. 1954;36:820–824.

24. American Society for Surgery of the Hand. The Hand: Examination and Diagnosis. New York: Churchill Livingstone; 1983.

25. American Society of Hand Therapists. American Society of Hand Therapists Clinical Assessment Recommendations. Chicago: The Society; 1992.

26. Kirkpatrick J. Evaluation of grip loss: a factor of permanent partial disability in California. Ind Med Surg. 1957;26:285–289.

27. Fess E. A method of checking Jamar dynamometer reliability. J Hand Ther. 1987;1(1):28–32.

28. LaStayo P, Chidgey L, Miller G. Quantification of the relationship between dynamic grip strength and forearm rotation: a preliminary study. Ann Plast Surg. 1995;35(2):191–196.

29. Mathiowetz V, Rennells C, Donahoe L. Effect of elbow position on grip and key pinch strength. J Hand Surg Am. 1985;10(5):694–697.

30. O’Driscoll S, Horii E, Ness R, et al. The relationship between wrist position, grasp size, and grip strength. J Hand Surg. 1992;17:169A–177A.

31. Richards LG. Posture effects on grip strength. Arch Phys Med Rehabil. 1997;78(10):1154–1156.

32. Richards LG, Olson B, Palmiter-Thomas P. How forearm position affects grip strength. Am J Occup Ther. 1996;50:133–138.

33. Spijkerman DC, et al. Standardization of grip strength measurements. Effects on repeatability and peak force. Scand J Rehabil Med. 1991;23(4):203–206.

34. Su CY, Lin JH, Chien TH, et al. Grip strength: relationship to shoulder position in normal subjects. Gaoxiong Yi Xue Ke Xue Za Zhi. 1993;9(7):385–391.

35. Fess E. Instrument reliability of new and used Jamar and Jamar design dynamometers. Ongoing study.

36. American Society for Surgery of the Hand. The Hand: Examination and Diagnosis. Aurora, Colorado: The Society; 1978.

37. Coldham F, Lewis J, Lee H. The reliability of one vs. three grip trials in symptomatic and asymptomatic subjects. J Hand Ther. 2006;19(3):318–326; quiz 327.

38. Murray JF, Mills D. Presidential address—American Society for Surgery of the Hand. The patient with the injured hand. J Hand Surg Am. 1982;7(6):543–548.

39. Reddon JR, Stefanyk WO, Gill DM, Renney C. Hand dynamometer: effects of trials and sessions. Percept Mot Skills. 1985;61(3 Pt 2):1195–1198.

40. Young V, Pin P, Kraemer BA, et al. Fluctuation in grip and pinch strength among normal subjects. J Hand Surg Am. 1989;14(1):125–129.

41. Watanabe T, Owashi K, Kanauchi Y, et al. The short-term reliability of grip strength measurement and the effects of posture and grip span. J Hand Surg Am. 2005;30(3):603–609.

42. Jaskolska A, Jaskolski A. The influence of intermittent fatigue exercise on early and late phases of relaxation from maximal voluntary contraction. Can J Appl Physiol. 1997;22(6):573–584.

43. Kahn JF, Favriou F, Jouanin JC, Monod H. Influence of posture and training on the endurance time of a low-level isometric contraction. Ergonomics. 1997;40(11):1231–1239.

44. Ferraz MB, Ciconelli RM, Araujo PM, et al. The effect of elbow flexion and time of assessment on the measurement of grip strength in rheumatoid arthritis. J Hand Surg Am. 1992;17(6):1099–1103.

45. Lui P, Fess E. Comparison of dominant and nondominant grip strength: critical role of the Waterloo Handedness Questionnaire. J Hand Ther. 2009;12(3):238–239.

46. Hanten WP, Chen WY, Austin AA, et al. Maximum grip strength in normal subjects from 20 to 64 years of age. J Hand Ther. 1999;12(3):193–200.

47. Harkonen R, Piirtomaa M, Alaranta H. Grip strength and hand position of the dynamometer in 204 Finnish adults. J Hand Surg Br. 1993;18(1):129–132.

48. Mathiowetz V, Kashman N, Volland G, et al. Grip and pinch strength: normative data for adults. Arch Phys Med Rehabil. 1985;66(2):69–74.

49. Mathiowetz V, Wiemer DM, Federman SM. Grip and pinch strength: norms for 6- to 19-year-olds. Am J Occup Ther. 1986;40(10):705–711.

50. Luna-Heredia E, Martin-Pena G, Ruiz-Galiana J. Handgrip dynamometry in healthy adults. Clin Nutr. 2005;24(2):250–258.

51. Montoye HJ, Lamphiear DE. Grip and arm strength in males and females, age 10 to 69. Res Q. 1977;48(1):109–120.

52. Montpetit RR, Montoye HJ, Laeding L. Grip strength of school children, Saginaw, Michigan: 1899 and 1964. Res Q. 1967;38(2):231–240.

53. Newman DG, Pearn J, Barnes A, et al. Norms for hand grip strength. Arch Dis Child. 1984;59(5):453–459.

54. Schmidt RT, Toews JV. Grip strength as measured by the Jamar dynamometer. Arch Phys Med Rehabil. 1970;51(6):321–327.

55. Sella GE. The hand grip: gender, dominance and age considerations. Eur Med Phys. 2001;37:161–170.

56. Petersen P, Petrick M, Connor H, Conklin D. Grip strength and hand dominance: challenging the 10% rule. Am J Occup Ther. 1989;43(7):444–447.

57. Su CY, Cheng KF, Chien TH, Lin YT. Performance of normal Chinese adults on grip strength test: a preliminary study. Gaoxiong Yi Xue Ke Xue Za Zhi. 1994;10(3):145–151.

58. Backous DD, Farrow JA, Friedl KE. Assessment of pubertal maturity in boys, using height and grip strength. J Adolesc Health Care. 1990;11(6):497–500.

59. De AK, Bhattacharya AK, Pando BK, Das Gupta PK. Respiratory performance and grip strength tests on the basketball players of inter-university competition. Indian J Physiol Pharmacol. 1980;24(4):305–309.

60. De AK, Debnath PK, Dey NK, Nagchaudhuri J. Respiratory performance and grip strength tests in Indian school boys of different socio-economic status. Br J Sports Med. 1980;14(2-3):145–148.

61. Bohannon RW. Hand-grip dynamometry provides a valid indication of upper extremity strength impairment in home care patients. J Hand Ther. 1998;11(4):258–260.

62. Davies CW, Jones DM, Shearer JR. Hand grip—a simple test for morbidity after fracture of the neck of femur. J R Soc Med. 1984;77(10):833–836.

63. Giampaoli S, Ferrucci L, Cecchi F, et al. Hand-grip strength predicts incident disability in non-disabled older men. Age Ageing. 1999;28(3):283–288.

64. Phillips P. Grip strength, mental performance and nutritional status as indicators of mortality risk among female geriatric patients. Age Ageing. 1986;15(1):53–56.

65. Rantanen T, Guralnik JM, Foley D, et al. Midlife hand grip strength as a predictor of old age disability. JAMA. 1999;281(6):558–560.

66. Sunderland A, Tinson D, Bradley L, Hewer RL. Arm function after stroke. An evaluation of grip strength as a measure of recovery and a prognostic indicator. J Neurol Neurosurg Psychiatry. 1989;52(11):1267–1272.

67. Bohannon RW. Grip strength predicts outcome. Age Ageing. 2006;35(3):320; author reply 320.

68. Flood-Joy M, Mathiowetz V. Grip-strength measurement: a comparison of three Jamar dynamometers. J Occup Ther Res. 1987;7(4):235–243.

69. Bohannon RW, Peolsson A, Massy-Westropp N, et al. Reference values for adult grip strength measured with a Jamar dynamometer: a descriptive meta-analysis. Physiotherapy. 2006;92:11–15.

70. Bohannon RW, Peolsson A, Massy-Westropp N. Consolidated reference values for grip strength of adults 20 to 49 years: a descriptive meta-analysis. Isokinet Exer Sci. 2006;14:221–224.

71. Chengalur SN, Smith GA, Nelson RC, Sadoff AM. Assessing sincerity of effort in maximal grip strength tests. Am J Phys Med Rehabil. 1990;69(3):148–153.

72. Gilbert JC, Knowlton RG. Simple method to determine sincerity of effort during a maximal isometric test of grip strength. Am J Phys Med. 1983;62(3):135–144.

73. Goldman S, Cahalan TD, An KN. The injured upper extremity and the Jamar five-handle position grip test. Am J Phys Med Rehabil. 1991;70(6):306–308.

74. Mitterhauser MD, Muse VL, Dellon AL, Jetzer TC. Detection of submaximal effort with computer-assisted grip strength measurements. J Occup Environ Med. 1997;39(12):1220–1227.

75. Seki T, Ohtsuki T. Influence of simultaneous bilateral exertion on muscle strength during voluntary submaximal isometric contraction. Ergonomics. 1990;33(9):1131–1142.

76. Stokes HM, Landrieu KW, Domangue B, Kunen S. Identification of low-effort patients through dynamometry. J Hand Surg Am. 1995;20(6):1047–1056.

77. Ashford RF, Nagelburg S, Adkins R. Sensitivity of the Jamar dynamometer in detecting submaximal grip effort. J Hand Surg Am. 1996;21(3):402–405.

78. Fairfax AH, Balnave R, Adams RD. Variability of grip strength during isometric contraction. Ergonomics. 1995;38(9):1819–1830.

79. Hamilton A, Balnave R, Adams R. Grip strength testing reliability. J Hand Ther. 1994;7(3):163–170.

80. Niebuhr B, Marion R. Voluntary control of submaximal grip strength. Am J Phys Med Rehabil. 1990;69:96–101.

81. Tredgett M, Pimble LJ, Davis TR. The detection of feigned hand weakness using the five position grip strength test. J Hand Surg Br. 1999;24(4):426–428.

82. Gutierrez Z, Shechtman O. Effectiveness of the five-handle position grip strength test in detecting sincerity of effort in men and women. Am J Phys Med Rehabil. 2003;82(11):847–855.

83. Niebuhr BR, Marion R, Hasson SM. Electromyographic analysis of effort in grip strength assessment. Electromyogr Clin Neurophysiol. 1993;33(3):149–156.

84. Hildreth DH, Breidenbach WC, Lister GD, Hodges AD. Detection of submaximal effort by use of the rapid exchange grip. J Hand Surg Am. 1989;14(4):742–745.

85. Joughin K, Gulati P, Mackinnon SE, et al. An evaluation of rapid exchange and simultaneous grip tests. J Hand Surg Am. 1993;18(2):245–252.

86. Taylor C, Shechtman O. The use of the rapid exchange grip test in detecting sincerity of effort, Part I: administration of the test. J Hand Ther. 2000;13(3):195–202.

87. Shechtman O, Taylor C. How do therapists administer the rapid exchange grip test? A survey. J Hand Ther. 2002;15(1):53–61.

88. Shechtman O, Taylor C. The use of the rapid exchange grip test in detecting sincerity of effort, Part II: validity of the test. J Hand Ther. 2000;13(3):203–210.

89. Westbrook AP, Tredgett MW, Davis TR, Oni JA. The rapid exchange grip strength test and the detection of submaximal grip effort. J Hand Surg Am. 2002;27(2):329–333.

90. Heimbürger O, Qureshi AR, Blaner WS, et al. Hand-grip muscle strength, lean body mass, and plasma proteins as markers of nutritional status in patients with chronic renal failure close to start of dialysis therapy. Am J Kidney Dis. 2000;36(6):1213–1225.

91. Castaneda C, Charnley JM, Evans WJ, Crim MC. Elderly women accommodate to a low-protein diet with losses of body cell mass, muscle function, and immune response. Am J Clin Nutr. 1995;62(1):30–39.

92. Pieterse S, Manandhar M, Ismail S. The association between nutritional status and handgrip strength in older Rwandan refugees. Eur J Clin Nutr. 2002;56(10):933–939.

93. Wang AY, Sea MM, Ho ZS, et al. Evaluation of handgrip strength as a nutritional marker and prognostic indicator in peritoneal dialysis patients. Am J Clin Nutr. 2005;81(1):79–86.

94. Bowman P, Johnson L, Chiapetta A, et al. The clinical impact of the presence or absence of the fifth finger flexor digitorum superficialis on grip strength. J Hand Ther. 2003;16(3):245–248.

95. Dunipace KR. Personal communication. 1990.

96. Casanova J, Grunert B. Adult prehension: patterns and nomenclature for pinches. J Hand Ther. 1989;2(4):231–244.

97. LaMotte R. Testing sensibility. Symposium: Assessment of Levels of Cutaneous Sensibility. Carville, LA: United States Public Health Service Hospital; 1980.

98. Fess E. Documentation: essential elements of an upper extremity assessment battery. In: Mackin E, Callahan A, Skirven T, et al, eds. Rehabilitation of the Hand and Upper Extremity. St. Louis: C. V. Mosby; 2002:263–284.

99. Flinn S, Ventura D. The Flinn Performance Screening Tool. Cleveland: Functional Visions; 1997.

100. Jebsen RH, Taylor N, Trieschmann RB, et al. An objective and standardized test of hand function. Arch Phys Med Rehabil. 1969;50(6):311–319.

101. Sharma S, Schumacher HR Jr, McLellan AT. Evaluation of the Jebsen Hand Function Test for use in patients with rheumatoid arthritis [corrected]. Arthritis Care Res. 1994;7(1):16–19.

102. Hackel ME, Wolfe GA, Bang SM, Canfield JS. Changes in hand function in the aging adult as determined by the Jebsen Test of Hand Function. Phys Ther. 1992;72(5):373–377.

103. Spaulding SJ, McPherson JJ, Strachota E, et al. Jebsen Hand Function Test: performance of the uninvolved hand in hemiplegia and of right-handed, right and left hemiplegic persons. Arch Phys Med Rehabil. 1988;69(6):419–422.

104. Taylor N, Sand PL, Jebsen RH. Evaluation of hand function in children. Arch Phys Med Rehabil. 1973;54(3):129–135.

105. Carlson JD, Trombly CA. The effect of wrist immobilization on performance of the Jebsen Hand Function Test. Am J Occup Ther. 1983;37(3):167–175.

106. Stern EB. Stability of the Jebsen-Taylor Hand Function Test across three test sessions. Am J Occup Ther. 1992;46(7):647–649.

107. Tiffin J, Asher EJ. The Purdue Pegboard; norms and studies of reliability and validity. J Appl Psychol. 1948;32(3):234–247.

108. Mathiowetz V, Rogers SL, Dowe-Keval M, et al. The Purdue Pegboard: norms for 14- to 19-year-olds. Am J Occup Ther. 1986;40(3):174–179.

109. Desrosiers J, Bravo G, Hébert R, Dutil E. Normative data for grip strength of elderly men and women. Am J Occup Ther. 1995;49(7):637–644.

110. Reddon JR, Gill DM, Gauk SE, Maerz MD. Purdue Pegboard: test-retest estimates. Percept Mot Skills. 1988;66(2):503–506.

111. Hines M, O’Connor J. A measure of finger dexterity. Personnel J. 1926;4:4–9.

112. Cetinok EM, Renfro RR, Coleman EF. A pilot study of the reliability of the dynamic mode of one BTE Work Simulator. J Hand Ther. 1995;8(3):199–205.

113. Coleman EF, Renfro RR, Cetinok EM, et al. Reliability of the manual dynamic mode of the Baltimore Therapeutic Equipment Work Simulator. J Hand Ther. 1996;9(3):223–237.

114. Dunipace KR. Reliability of the BTE Work Simulator dynamic mode. J Hand Ther. 1995;8(1):42–43.

115. Anderson PA, Chanoski CE, Devan DL, et al. Normative study of grip and wrist flexion strength employing a BTE Work Simulator. J Hand Surg Am. 1990;15(3):420–425.

116. Bhambhani Y, Esmail S, Brintnell S. The Baltimore Therapeutic Equipment Work Simulator: biomechanical and physiological norms for three attachments in healthy men. Am J Occup Ther. 1994;48(1):19–25.

117. Curtis RM, Engalitcheff J Jr. A work simulator for rehabilitating the upper extremity—preliminary report. J Hand Surg Am. 1981;6(5):499–501.

118. Esmail S, Bhambhani Y, Brintnell S. Gender differences in work performance on the Baltimore Therapeutic Equipment Work Simulator. Am J Occup Ther. 1995;49(5):405–411.

119. Kennedy LE, Bhambhani YN. The Baltimore Therapeutic Equipment Work Simulator: reliability and validity at three work intensities. Arch Phys Med Rehabil. 1991;72(7):511–516.

120. Powell DM, Zimmer CA, Antoine MM, et al. Computer analysis of the performance of the BTE Work Simulator. J Burn Care Rehabil. 1991;12(3):250–256.

121. Wilke NA, Sheldahl LM, Dougherty SM, et al. Baltimore Therapeutic Equipment Work Simulator: energy expenditure of work activities in cardiac patients. Arch Phys Med Rehabil. 1993;74(4):419–424.

122. Capodaglio P, Strada MR, Lodola E, et al. Work capacity of the upper limbs after mastectomy. G Ital Med Lav Ergon. 1997;19(4):172–176.

123. Cooke C, Dusik LA, Menard MR, et al. Relationship of performance on the ERGOS Work Simulator to illness behavior in a workers’ compensation population with low back versus limb injury. J Occup Med. 1994;36(7):757–762.

124. Dusik LA, Menard MR, Cooke C, et al. Concurrent validity of the ERGOS Work Simulator versus conventional functional capacity evaluation techniques in a workers’ compensation population. J Occup Med. 1993;35(8):759–767.

125. Kaiser H, Kersting M, Schian HM. [Value of the ERGOS Work Simulator as a component of functional capacity assessment in disability evaluation]. Rehabilitation (Stuttg). 2000;39(3):175–184.

126. Amadio PC. Outcomes research and the hand surgeon. J Hand Surg Am. 1994;19(3):351–352.

127. Barr JT. The outcomes movement and health status measures. J Allied Health. 1995;24(1):13–28.

128. Bieliauskas L, Fastenau PS, Lacy MA, Roper BL. Use of the odds ratio to translate neuropsychological test scores into real-world outcomes: from statistical significance to clinical significance. J Clin Exp Neuropsychol. 1997;19:889–896.

129. Blevins L, McDonald CJ. Fisher’s Exact Test: an easy-to-use statistical test for comparing outcomes. MD Comput. 1985;2(1):15–19, 68.

130. Bridge PD, Sawilowsky SS. Increasing physicians’ awareness of the impact of statistics on research outcomes: comparative power of the t-test and Wilcoxon Rank-Sum test in small samples applied research. J Clin Epidemiol. 1999;52(3):229–235.

131. Amadio PC. Outcomes assessment in hand surgery. What’s new? Clin Plast Surg. 1997;24(1):191–194.

132. Calderon SA, Zurakowski D, Davis JS, Ring D. Quantitative adjustment of the influence of depression on the Disabilities of the Arm, Shoulder, and Hand (DASH) Questionnaire. Hand (N Y). 2009. [Epub ahead of print]

133. Huisstede BM, Feleus A, Bierma-Zeinstra M, et al. Is the Disability of Arm, Shoulder, and Hand Questionnaire (DASH) also valid and responsive in patients with neck complaints? Spine (Phila Pa 1976). 2009;34(4):E130–E138.

134. Rosales RS, Diez de la Lastra I, McCabe S, et al. The relative responsiveness and construct validity of the Spanish version of the DASH instrument for outcomes assessment in open carpal tunnel release. J Hand Surg Eur Vol. 2009;34(1):72–75.

135. Kitis A, Celik E, Aslan UB, Zencir M. DASH Questionnaire for the analysis of musculoskeletal symptoms in industry workers: a validity and reliability study. Appl Ergon. 2009;40(2):251–255.

136. Cheng HM, Sampaio RF, Mancini MC, et al. Disabilities of the Arm, Shoulder and Hand (DASH): factor analysis of the version adapted to Portuguese/Brazil. Disabil Rehabil. 2008;30(25):1901–1909.

137. De Smet L. The DASH Questionnaire and score in the evaluation of hand and wrist disorders. Acta Orthop Belg. 2008;74(5):575–581.

138. Janssen S, De Smet L. Responsiveness of the DASH Questionnaire for surgically treated tennis elbow. Acta Chir Belg. 2008;108(5):583–585.

139. Varju C, Bálint Z, Solyom AI, et al. Cross-cultural adaptation of the Disabilities of the Arm, Shoulder, and Hand (DASH) Questionnaire into Hungarian and investigation of its validity in patients with systemic sclerosis. Clin Exp Rheumatol. 2008;26(5):776–783.

140. Khan WS, Jain R, Dillon B, et al. The “M2 DASH”-Manchester-modified Disabilities of Arm Shoulder and Hand score. Hand (N Y). 2008;3(3):240–244.

141. Dixon D, Johnston M, McQueen M, Court-Brown C. The Disabilities of the Arm, Shoulder and Hand Questionnaire (DASH) can measure the impairment, activity limitations and participation restriction constructs from the International Classification of Functioning, Disability and Health (ICF). BMC Musculoskelet Disord. 2008;9:114.

142. Mousavi SJ, Parnianpour M, Abedi M, et al. Cultural adaptation and validation of the Persian version of the Disabilities of the Arm, Shoulder and Hand (DASH) outcome measure. Clin Rehabil. 2008;22(8):749–757.

143. Abramo A, Kopylov P, Tagil M. Evaluation of a treatment protocol in distal radius fractures: a prospective study in 581 patients using DASH as outcome. Acta Orthop. 2008;79(3):376–385.