Appraising and understanding systematic reviews of quantitative and qualitative evidence

After reading this chapter, you should be able to:

• Understand what systematic reviews are

• Know where to look for systematic reviews

• Understand how systematic reviews are carried out

• Critically appraise a systematic review

• Understand how to interpret the results from systematic reviews

• Understand how systematic reviews can be used to inform practice

A systematic review is a method for systematically locating, appraising and synthesising research from primary studies and is an important means of condensing the research evidence from many primary studies. This chapter will describe systematic reviews of quantitative and qualitative evidence, discuss their advantages and disadvantages and briefly illustrate the use of systematic reviews for different types of clinical questions. The methods for carrying out systematic reviews will be described, and key factors that can introduce bias into reviews will be explained. Using worked examples, we will also demonstrate how to critically appraise a systematic review and how it can be used to guide decision making in practice.

What are systematic reviews?

Due to the massive increase in the volume of health research over the last 50 years or so, it was recognised that some system of synthesising this information was essential. The health literature has a long tradition of using literature or narrative reviews to help readers grasp the breadth of a particular topic. These literature reviews typically provide a reasonably thorough description of a particular topic and refer to many articles that have been published in that particular area. Nearly all students are required to do at least one literature review as part of their studies, so you probably know what literature reviews are all about.

More recently, systematic reviews have been embraced as a more comprehensive and trustworthy means of synthesising the literature.1 Systematic reviews differ from literature reviews in that they are prepared using transparent, explicit and pre-defined methods that are designed to limit bias.2 In contrast to literature reviews, systematic reviews:

• involve a clear definition of eligibility criteria

• incorporate a comprehensive search to identify all potentially relevant studies (although there are qualitative reviewers that opt for a purposeful sample of papers deemed relevant for generating theory)

• use explicit, reproducible and uniformly applied criteria in the selection of studies for the review

• rigorously appraise the risk of bias within individual studies, and

• systematically synthesise the results of included studies.2

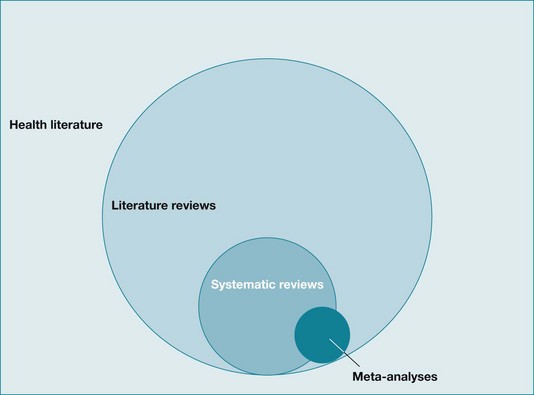

Systematic reviews may summarise the results from quantitative studies, qualitative studies or a combination of quantitative and qualitative studies, depending on the clinical question and the methods used to conduct the review. In a quantitative review the results from two or more individual quantitative studies are typically summarised using a measure of effect, which enables each study's effect size to be statistically combined and compared in what is called a meta-analysis.2 Meta-analyses generally provide a better overall estimate of a clinical effect than looking at the results from individual studies. However, sometimes it is not possible to combine the results of individual studies in a meta-analysis because the interventions or outcomes used in the different studies may be too diverse for it to be sensible to combine them. In these instances the results from these studies have to be synthesised narratively.

It may help you to think about these different types of reviews visually. As you can see in Figure 12.1, literature reviews make up the vast majority of reviews that are found in the overall health literature. Systematic reviews can be considered a subset of literature reviews, and meta-analyses are a smaller set again. Note that the circle representing meta-analyses overlaps both systematic and literature reviews. This is because not all meta-analyses are carried out systematically. For example, it is technically possible for someone to take a collection of articles that report randomised controlled trials from their desk and undertake a meta-analysis of these articles. This would not be considered systematic, as methods were not used to ensure that a systematic search for all relevant articles was conducted. Sometimes the terms ‘systematic review’ and ‘meta-analysis’ are used interchangeably, but in this chapter they are conceptualised as different entities. A systematic review does not need to incorporate a meta-analysis, but a meta-analysis should only be done within the context of a systematic review (although occasionally it is not).

Figure 12.1 The relationships between the health literature, literature reviews, systematic reviews, and meta-analyses.

A review of qualitative studies (also known as qualitative evidence synthesis, QES) aggregates or integrates findings from individual primary qualitative studies into categories or metaphors. The aim is to generate new theory or to advance our understanding of particular phenomena, and so to inform professional practice or policy. In a mixed-methods review, both quantitative and qualitative studies are used in parallel or sequentially.3

Advantages and disadvantages of systematic reviews

By combining the results of similar studies, a well-conducted systematic review can:

• improve the dissemination of evidence2

• hasten the assimilation of research into practice3

• assist in clarifying the heterogeneity of conflicting results between studies4

• establish generalisability of the overall findings2

• improve the understanding of a particular phenomenon or situation.

Systematic reviews are important for health professionals and consumers of health care who are seeking answers to clinical questions, for researchers who are identifying gaps in research and defining future research agendas and for administrators and purchasers who are developing policies and guidelines.2 Although systematic reviews have many advantages, as with any other study type they may be subject to bias, which will be discussed in detail later in this chapter.

Systematic reviews for different types of clinical questions

Systematic reviews can synthesise studies of different designs, depending on the research question being addressed.5 As we saw in Chapter 2 when we examined the hierarchies of evidence for various question types, systematic reviews are at the top of the hierarchy of evidence for questions about the effects of intervention, diagnosis and prognosis. For example, a well-conducted systematic review of randomised controlled trials is generally considered to be the best study design for assessing the effectiveness of an intervention because it identifies and examines all of the evidence about the intervention from randomised controlled trials (providing these are rigorous themselves) that meet pre-determined inclusion criteria.6 This is by far the most common type of systematic review available. However, systematic reviews of quantitative studies may also be carried out to address other types of questions, such as questions about diagnosis and prognosis.

Systematic reviews that focus on questions of prognosis or prediction are best based on a synthesis of cohort studies. Consider the following example in which a systematic review of cohort studies of patients in the subacute phase of ischaemic or haemorrhagic stroke was conducted with the aim of identifying prognostic factors for future place of residence at 6 to 12 months post-stroke.7 To locate relevant cohort studies, the authors searched MEDLINE, EMBASE, CINAHL, Current Contents, Cochrane Database of Systematic Reviews, PsycLIT and Sociological Abstracts, and also checked reference lists, personal archives and guidelines and consulted experts in the field. They then assessed the internal, statistical and external validity of the 10 studies that they had selected for inclusion. Although the review found many factors that were predictive of a patient's future place of residence (for example, low initial functioning in activities of daily living, advanced age, cognitive disturbance, paresis of arm and leg), the authors concluded that there was insufficient evidence concerning possible predictors in the subacute stage of stroke with respect to place of future residence.

Systematic reviews of diagnostic test studies are carried out to determine estimates of test performance and consider variation between studies. This type of systematic review uses different methods to assess study quality and combine results than those used for systematic reviews of randomised controlled trials.8 An example of this type of review is one that used meta-analytic procedures in a review of the accuracy of the Mini-Mental State Examination in the detection of dementia and mild cognitive impairment.9 Thirty-four dementia studies and five mild cognitive impairment studies were included in the meta-analysis. It was concluded that the Mini-Mental State Examination offers modest accuracy with best value for ruling out a diagnosis of dementia in community and primary care.
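To make "estimates of test performance" concrete, the sketch below computes sensitivity and specificity for each study from a 2×2 table of index test result against the reference standard. It is a minimal illustration under stated assumptions: the study names and counts are hypothetical, and it shows only the per-study step. Combining these estimates across studies requires the specialised methods the paragraph above refers to, which are not shown here.

```python
def sensitivity_specificity(tp, fp, fn, tn):
    """Per-study test accuracy from a 2x2 table against the reference standard.

    tp / fn: people WITH the target condition who test positive / negative.
    fp / tn: people WITHOUT the condition who test positive / negative.
    """
    sensitivity = tp / (tp + fn)  # proportion of true cases the test detects
    specificity = tn / (tn + fp)  # proportion of non-cases the test clears
    return sensitivity, specificity


# Hypothetical 2x2 counts (tp, fp, fn, tn) for three studies of one test.
studies = {
    "Study A": (45, 20, 5, 130),
    "Study B": (80, 35, 20, 265),
    "Study C": (27, 12, 3, 58),
}

for name, counts in studies.items():
    sens, spec = sensitivity_specificity(*counts)
    print(f"{name}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Seeing how these values vary from study to study is the "variation between studies" that a diagnostic review has to examine before drawing an overall conclusion.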

Systematic reviews of qualitative studies allow us to have a more comprehensive understanding, for example, of patients' experience in relation to a particular issue. Noyes and colleagues10 state that syntheses of evidence from qualitative research:

explore questions such as how do people experience illness, why does an intervention work (or not), for whom and in what circumstances … what are the barriers and facilitators to accessing health care, or what impact do specific barriers and facilitators have on people, their experiences and behavior? … [Qualitative evidence syntheses] can aid understanding of the way in which an intervention is experienced by all of those involved in developing, delivering or receiving it; what aspect of the intervention they value, or not; and why this is so … [it] can provide insight into factors that are external to an intervention including, for example, the impact of other policy developments, factors which facilitate or hinder successful implementation of a programme, service, or treatment and how a particular intervention may need to be adapted for large-scale roll-out.

Systematic reviews of qualitative studies, often referred to as qualitative evidence syntheses, combine evidence from primary qualitative studies to create new understanding by comparing and analysing concepts and findings from different sources of evidence with a focus on the same topic of interest.10 Qualitative evidence syntheses have gained significant recognition in the field of health care, both as a stand-alone product and as a scientific contribution able to inform, extend, enhance or supplement systematic reviews of effectiveness. Discussion among researchers about how systematic reviews of qualitative research should best be conducted continues. There is even ongoing debate about whether a synthesis of qualitative studies is appropriate and whether it is acceptable to combine studies that use a variety of different methods.11

There are at least 20 different approaches to the synthesis of qualitative studies that have been developed and identified by researchers, including the commonly used meta-ethnographic approach to synthesis, meta-study, thematic synthesis, narrative synthesis, content analysis, cross-study analysis, grounded theory, and framework synthesis.12 Most of these approaches are interpretive in nature. They seek to increase the understanding of events based on the perspectives and experiences of the people studied by combining results of individual studies.

An alternative approach to qualitative evidence synthesis that is rapidly gaining recognition in the field is the meta-aggregative approach to synthesis. It is distinctive in taking an explicitly integrative approach to synthesis, as opposed to the more commonly used interpretive approach. It was designed to model the Cochrane Collaboration's process for performing systematic reviews that summarise the results of quantitative studies, while remaining sensitive to the nature of qualitative research and its traditions.13 In this chapter we will focus on this meta-aggregative approach, as its process and procedures will appeal to most health professionals who are familiar with effectiveness reviews. An interesting illustrative example of this approach can be found in a synthesis of studies on barriers to the implementation of evidence-based practice in health care.14

Methods for combining results from both quantitative and qualitative studies within a single review are generally referred to as mixed-methods reviews. Examples of mixed-method protocols can now be found in the Cochrane Library. An interesting example is a systematic review that examined older people's views, perceptions and experiences of falls prevention interventions.15 The authors of the review searched eight databases using a broad search strategy in order to locate relevant qualitative and quantitative studies. The systematic review contained 24 studies, which consisted of 10 qualitative studies, 1 systematic review, 3 narrative reviews, 3 randomised controlled trials, 3 before/after studies and 4 cross-sectional observational studies. Synthesis of the quantitative studies in this review identified factors that encouraged older people's participation in falls prevention interventions, while the findings from the 10 qualitative studies demonstrated the importance of considering older people's views about falls interventions to ensure that these interventions are properly targeted.

So, contrary to common misconception, systematic reviews are not just limited to syntheses of quantitative studies such as randomised controlled trials. They are a methodology that can be used for many different study designs and research questions. Having pointed this out, the remainder of this chapter will outline the procedure for both an effectiveness review and a qualitative evidence synthesis using the meta-aggregative approach.

Locating systematic reviews

It has been mentioned a number of times already in this book that a substantial difficulty in locating research evidence is the overwhelming quantity of information that is available and the diverse range of journals in which information is published. Unfortunately, systematic reviews are not immune to this problem either. As you saw in Chapter 3, the premier source for systematic reviews of quantitative studies answering clinical questions about intervention effectiveness is the Cochrane Database of Systematic Reviews. These reviews are written by volunteer researchers who work with one of many review groups that are coordinated by The Cochrane Collaboration (www.cochrane.org). Each review group has an editorial team which oversees the preparation and maintenance of the reviews, as well as the application of rigorous quality standards. The Cochrane Collaboration has recently changed its policy towards including qualitative evidence in systematic reviews, with some versions of mixed-method reviews having already been published in the Library. It is expected that the number of these types of reviews will continue to increase.

Another source of reliable information contained within the Cochrane Library is the Database of Abstracts of Reviews of Effects (DARE). This contains abstracts of systematic reviews that have been quality-assessed. Each abstract includes a summary of the review together with a critical commentary about the overall quality. Chapter 3 provided the details of other resources that can be used to locate systematic reviews. These include the Joanna Briggs Institute, PEDro, OTseeker, PsycBITE, speechBITE and the large biomedical databases such as CINAHL and MEDLINE/PubMed (the latter contains a search strategy specifically for locating systematic reviews on the PubMed Clinical Queries screen).

How are systematic reviews conducted?

We believe that it is easier to learn how to appraise a systematic review if you first have an understanding of what is involved in performing a systematic review. Undertaking a systematic review requires a significant time commitment, with estimates varying depending on the number of citations/abstracts involved. Obviously, for some topics there is very little research that has been done, whereas for other topics there is a huge amount of research to find and sort through. To give you an idea, one estimation is that it takes approximately 1139 hours or the equivalent of 6 months full-time work to complete a systematic review.16

We will now explain the basic steps involved in undertaking a systematic review, which are:

1. Define the research question and plan the methods for undertaking the review.

2. Determine the eligibility criteria for studies to be included.

3. Search for potentially eligible studies.

4. Apply eligibility criteria to select studies.

5. Assess the risk of bias in included studies.

6. Extract data from the included studies.

7. Synthesise the data.

8. Interpret and report the results.

9. Update the review at an appropriate time point in the future.

Define the research question and plan the methods for undertaking the systematic review

As with any research study, when conducting a systematic review the first place to start is planning the overall project. Planning also ensures that all aspects important to the scientific rigour of a review are undertaken to reduce the risk of bias. Each stage of the review needs to be thoroughly understood prior to moving on to plan the next stage.

The question needs to be clearly focused, as too broad a question will not produce a useful review. The question should also make sense clinically. Involving consumers, at both the health professional and the patient levels, may also be helpful when developing a plan, as this will ensure that areas of concern such as the types of interventions or outcomes that might not otherwise be considered are addressed.17

Planning a review also requires decisions to be made about the methods for searching, screening, appraisal and synthesis. These decisions should be made before commencing the review itself and ideally written up as a protocol, as this will help to make the whole process systematic and more transparent for all involved.

Determine the eligibility criteria for studies to be included in the review

The use of explicit, pre-defined criteria for including and excluding studies (eligibility criteria) is an important feature of systematic reviews. The eligibility criteria to be defined for, and applied in, a systematic review will depend on the type of review question (for example, whether the review is about effects of intervention or about diagnostic tests or patient experiences) and the population of interest.

Traditionally, eligibility criteria for systematic reviews of the effects of interventions have focused on defining types of participants (that is, people and populations), types of interventions and comparisons and types of studies (that is, the study designs most suitable for answering the review question). Although systematic reviews of the effects of interventions also typically pre-specify types of outcomes (the outcome measures that are important to answer the review question), these usually are not used to include or exclude studies but rather to guide how the findings from the included studies are reported. Generally, systematic reviews of the effects of interventions primarily focus on randomised controlled trials or quasi-randomised controlled trials. However, there are many reviews which include both randomised and non-randomised studies. While these reviews can provide a comprehensive overall picture of the available research, they require the reader to be particularly careful when interpreting the conclusions and to take into account the study types (and their associated strengths/weaknesses) that may have been used to contribute to the conclusion of the review.

Qualitative evidence syntheses generally include all sorts of qualitative study designs, including, for example, phenomenological studies, case studies, grounded theory designs and action research designs. Some review authors choose to exclude opinion papers, editorials and papers without clear methods and findings sections. To date, there is little guidance on how to search for, critically appraise or extract data from these reports, or how to use them in a cross-comparison between papers.10

Search for potentially eligible studies

The search methodology needs to be developed prior to commencing the review and to be clearly explained and reproducible. All components of the question of the review (such as the participants, interventions, comparisons and outcomes) should be considered when developing the search strategy of the review. Synonyms and relevant Medical Subject Heading (MeSH) terms for key components of the search (for example, participants and interventions) should also be incorporated.18 The search should not be limited by publication date, publication format or language unless there is a good reason for doing so (such as an intervention only being available after a certain point in time). Searching should occur across multiple databases, as it has been found that search strategies that are limited to one database do not identify all of the relevant studies.18 The review authors should also ideally contact authors in the field in an attempt to locate other studies that have not already been identified and ongoing or planned studies in the area, and to ask about and obtain written copies of unpublished studies.19 The reason for doing this is to limit a problem called ‘publication bias’ that can occur due to study authors being more likely to submit studies with statistically significant results and journals being more likely to publish studies with positive results than those with negative outcomes.
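The principle of combining synonyms and MeSH terms for each question component can be sketched in code: terms within one component are joined with OR, and the components are then joined with AND so that a record must match every part of the question. This is a minimal sketch, assuming PubMed-style field tags (`[MeSH Terms]` for subject headings, `[tiab]` for title/abstract words); the concepts and terms are hypothetical and are not a validated search strategy.

```python
def concept_block(mesh_terms, free_text):
    """OR together every MeSH heading and free-text synonym for one concept."""
    parts = [f'"{term}"[MeSH Terms]' for term in mesh_terms]
    parts += [f'"{word}"[tiab]' for word in free_text]
    return "(" + " OR ".join(parts) + ")"


def build_query(concepts):
    """AND the concept blocks so a record must match every question component.

    No date, format or language limits are added, in line with the advice
    to avoid such limits unless there is a good reason for them.
    """
    return " AND ".join(concept_block(mesh, text) for mesh, text in concepts)


# Hypothetical question components: (MeSH headings, free-text synonyms).
participants = (["Stroke"], ["stroke", "cerebrovascular accident"])
intervention = (["Exercise Therapy"], ["exercise", "physical training"])

print(build_query([participants, intervention]))
```

The same query string would then be adapted to the syntax of each additional database searched, since a single database is unlikely to identify all relevant studies.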

As an illustration of how important it is that review authors use a comprehensive search strategy, one study found that 35% of all appropriate randomised controlled trials were not located by computerised searching20 and another reported that 10% of suitable trials for systematic reviews were missed when only electronic searching was conducted.21 Greenhalgh and Peacock22 evaluated the appropriateness of standard searching procedures for the retrieval of qualitative research papers and found that only 30% were retrieved through databases and hand-searching. The rest were identified through a snowball procedure of following up on the references of relevant papers and by personal contacts. Hand-searching journals has been identified as important to conducting a high quality systematic review,23 as not all journals are indexed in electronic databases, and relevant articles in indexed journals may still be missed by the search strategy used. It is suggested24 that authors of systematic reviews may hand-search the major journals that publish in the area relevant to the review question, to increase the comprehensiveness of their search strategy. Citation tracking, or use of the references from the studies found, may also help the reviewer to locate further studies on the topic and increase the comprehensiveness of the search. Attempts could also be made to obtain unpublished studies (including masters and doctoral research and conference proceedings) by searching the CENTRAL database in the Cochrane Library and databases of grey literature (e.g. OpenSIGLE, Open System for Information on Grey Literature, www.opensigle.inist.fr). The same applies to reviewers who are conducting a qualitative evidence synthesis, although, as far as we are aware, specialised registers for qualitative research currently do not exist.

Apply eligibility criteria to select studies

Once the search has been completed and potentially relevant studies identified, the next task is to decide which of the studies should be included in the systematic review. Not all articles that are located during the search will be directly relevant to the review question. Eligibility criteria are established to guide the selection of studies to be included in the review. The criteria specify the studies, participants, interventions and outcomes that are to be included. The selection process should then be carried out by two or more authors independently to minimise bias. Titles and abstracts are screened for relevance and eligibility. At this point some citations will be excluded as it will be clear from the title and abstract that they do not meet the review eligibility criteria. For other citations, the decision on whether to include or exclude will be unclear due to insufficient information in the title and abstract. The full text of these potentially relevant studies is then retrieved so that a more detailed evaluation can be done. A final evaluation of the full-text articles is conducted and a decision is made whether to include or exclude the studies. If the review authors are conducting a Cochrane systematic review, studies that are excluded at this stage of the review process are listed in the review along with the reason why they were excluded.24 A flow diagram summarising the study selection processes may also be appended to the review.
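Independent screening by two reviewers produces two sets of decisions that then have to be combined. The sketch below shows one possible reconciliation rule, stated here as an assumption rather than a fixed standard: a record is excluded only when both reviewers exclude it, a disagreement about exclusion is flagged for discussion, and everything else (including records marked "unclear") goes forward for full-text retrieval. The record IDs and decisions are hypothetical; the counts produced are the kind of numbers a flow diagram reports.

```python
from collections import Counter

# Hypothetical title/abstract decisions from two reviewers working independently.
reviewer_a = {"rec1": "include", "rec2": "exclude", "rec3": "unclear", "rec4": "exclude"}
reviewer_b = {"rec1": "include", "rec2": "exclude", "rec3": "include", "rec4": "include"}


def reconcile(a, b):
    """Combine two independent screening decisions for each record.

    Assumed rule: exclude only if BOTH reviewers exclude; flag records where
    exactly one reviewer excludes; send everything else to full-text review.
    """
    if a.keys() != b.keys():
        raise ValueError("Both reviewers must screen the same set of records")
    outcome = {}
    for record in a:
        da, db = a[record], b[record]
        if da == db == "exclude":
            outcome[record] = "exclude"
        elif "exclude" in (da, db):
            outcome[record] = "discuss"  # disagreement: resolve by consensus
        else:
            outcome[record] = "full text"  # include or unclear: retrieve paper
    return outcome


decisions = reconcile(reviewer_a, reviewer_b)
print(decisions)
print(Counter(decisions.values()))  # tallies for a study selection flow diagram
```

Requiring agreement before excluding a record is a deliberately cautious choice: at the title and abstract stage, wrongly excluding a relevant study is usually harder to recover from than retrieving one extra full text.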

Assess the risk of bias in included studies

The next step is to evaluate the validity of the studies that have been included in the review. Assessing the validity, or ‘risk of bias’, of the results from the individual studies is vital to conducting a high quality systematic review. Including studies with a high risk of bias can serve to magnify the impact of that bias, and thus may raise doubts about the reliability of the systematic review's results and conclusions.25 Where such studies are included in the review, it is important that their risk of bias is clearly communicated to the reader. To guard against errors and increase the reliability of the validity assessment, it is recommended that more than one person independently extract data and assess the risk of bias of the included studies.26 Determining the potential for bias in individual studies can be done in a number of different ways, such as using the approach to appraising randomised controlled trials that was explained in Chapter 4 of this book. Since the beginning of 2008, systematic reviews that are carried out through The Cochrane Collaboration have had to use the ‘risk of bias’ tool.26 The risk of bias tool includes seven different domains, which are shown in Table 12.1 along with a description of each. Implementing this tool involves two steps, which are listed after Table 12.1:

TABLE 12.1:

The Cochrane Collaboration's tool for assessing risk of bias in individual studies26

| Domain | Description | Review authors' judgment* |
|---|---|---|
| Random sequence generation | Describe the method used to generate the allocation sequence in sufficient detail to allow an assessment of whether it should produce comparable groups. | Selection bias (biased allocation to interventions) due to inadequate generation of a randomised sequence. |
| Allocation concealment | Describe the method used to conceal the allocation sequence in sufficient detail to determine whether intervention allocations could have been foreseen in advance of, or during, enrolment. | Selection bias (biased allocation to interventions) due to inadequate concealment of allocations prior to assignment. |
| Blinding of participants and personnel (assessments should be made for each main outcome or class of outcomes) | Describe all measures used, if any, to blind study participants and personnel from knowledge of which intervention a participant received. Provide any information relating to whether the intended blinding was effective. | Performance bias due to knowledge of the allocated interventions by participants and personnel during the study. |
| Blinding of outcome assessment (assessments should be made for each main outcome or class of outcomes) | Describe all measures used, if any, to blind outcome assessors from knowledge of which intervention a participant received. Provide any information relating to whether the intended blinding was effective. | Detection bias due to knowledge of the allocated interventions by outcome assessors. |
| Incomplete outcome data (assessments should be made for each main outcome or class of outcomes) | Describe the completeness of outcome data for each main outcome, including attrition and exclusions from the analysis. State whether attrition and exclusions were reported, the numbers in each intervention group (compared with total randomised participants), reasons for attrition/exclusions where reported and any re-inclusions in analyses performed by the review authors. | Attrition bias due to amount, nature or handling of incomplete outcome data. |
| Selective reporting | State how the possibility of selective outcome reporting was examined by the review authors and what was found. | Reporting bias due to selective outcome reporting. |
| Other sources of bias | State any important concerns about bias not addressed in the other domains in the tool. | Bias due to problems not covered elsewhere in the table. |

*Judgment for each domain: ‘Low risk’ of bias, ‘High risk’ of bias, or ‘Unclear risk’ of bias.

Higgins JPT, Altman DG, Sterne JAC, editors. Chapter 8: Assessing risk of bias in included studies. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions. Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Online Available: http://handbook.cochrane.org/

1. extracting information from the original study report about each criterion to describe what was reported to have happened in the study (see description column in Table 12.1); and then

2. making a judgment about the likely risk of bias in relation to each criterion (rated as ‘Low risk’ of bias, ‘High risk’ of bias or ‘Unclear risk’ of bias) (see Review authors' judgment column in Table 12.1).
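The two steps map naturally onto a simple record: for each of the seven domains in Table 12.1, the reviewer stores the supporting information (step 1) and a judgment (step 2). The sketch below validates such a record and then applies one summary rule across "key" domains, similar to the rule suggested in the Cochrane Handbook: high if any key domain is high risk, otherwise unclear if any is unclear, otherwise low. Which domains count as key is the reviewer's decision, and the study data shown are hypothetical.

```python
DOMAINS = (
    "Random sequence generation",
    "Allocation concealment",
    "Blinding of participants and personnel",
    "Blinding of outcome assessment",
    "Incomplete outcome data",
    "Selective reporting",
    "Other sources of bias",
)
JUDGMENTS = {"Low risk", "High risk", "Unclear risk"}


def check_assessment(assessment):
    """Check that every domain has supporting text and an allowed judgment."""
    unknown = set(assessment) - set(DOMAINS)
    missing = [d for d in DOMAINS if d not in assessment]
    if unknown or missing:
        raise ValueError(f"Unknown domains {unknown}, missing domains {missing}")
    for domain, (support, judgment) in assessment.items():
        if not support or judgment not in JUDGMENTS:
            raise ValueError(f"{domain}: incomplete or invalid entry")
    return True


def summarise(assessment, key_domains):
    """Assumed summary rule across the reviewer's chosen key domains."""
    judgments = [assessment[d][1] for d in key_domains]
    if "High risk" in judgments:
        return "High risk"
    if "Unclear risk" in judgments:
        return "Unclear risk"
    return "Low risk"


# Hypothetical assessment: (step 1 support, step 2 judgment) per domain.
study = {
    "Random sequence generation": ("Computer-generated list", "Low risk"),
    "Allocation concealment": ("Method not described", "Unclear risk"),
    "Blinding of participants and personnel": ("Sham device used", "Low risk"),
    "Blinding of outcome assessment": ("Assessors unaware of allocation", "Low risk"),
    "Incomplete outcome data": ("30% lost from one arm only", "High risk"),
    "Selective reporting": ("All protocol outcomes reported", "Low risk"),
    "Other sources of bias": ("None identified", "Low risk"),
}

check_assessment(study)
key = ("Random sequence generation", "Allocation concealment", "Incomplete outcome data")
print(summarise(study, key))
```

Keeping the supporting text next to each judgment preserves the transparency the tool is designed for: a reader can see why a domain was rated as it was, not just the rating.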

For the critical appraisal of qualitative studies, a number of different checklists and frameworks have been developed. Among them are the commonly used CASP checklist and the QARI tool, which were discussed and used in Chapters 10 and 11. The meta-aggregative approach encourages qualitative evidence synthesis review authors to assign an overall judgment to each finding reported in an original article.13 This allows the reviewer to decide which insights are valid enough to be considered for inclusion. These judgments are:

• unequivocal if the evidence is beyond reasonable doubt and includes findings that are factual, directly reported/observed and not open to challenge;

• credible if the evidence, while interpretative, is plausible in light of the data and theoretical framework; or

• unsupported if the findings are not supported by the data and none of the other level descriptors apply.
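As a minimal sketch of how these judgments might be applied, each extracted finding can carry its credibility level, and only findings at an accepted level are passed on to the synthesis. The default of accepting "unequivocal" and "credible" findings while setting aside "unsupported" ones is an assumption made for illustration; the finding texts and study labels are hypothetical.

```python
from dataclasses import dataclass

LEVELS = ("unequivocal", "credible", "unsupported")


@dataclass
class Finding:
    study: str
    text: str
    level: str  # one of LEVELS, assigned by the reviewer


def eligible_for_synthesis(findings, accepted=("unequivocal", "credible")):
    """Return findings whose credibility level is accepted for synthesis.

    Assumption: unsupported findings are set aside by default.
    """
    for f in findings:
        if f.level not in LEVELS:
            raise ValueError(f"{f.study}: unknown level {f.level!r}")
    return [f for f in findings if f.level in accepted]


# Hypothetical findings extracted from three primary studies.
findings = [
    Finding("Study 1", "Staff reported having no protected time to read research", "unequivocal"),
    Finding("Study 2", "Managers were perceived as indifferent to change", "credible"),
    Finding("Study 3", "Low morale explained all resistance to change", "unsupported"),
]

for f in eligible_for_synthesis(findings):
    print(f.study, "-", f.level)
```

Making the accepted levels an explicit parameter keeps the decision visible and reportable, which suits the transparent style of meta-aggregation.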

Extract data from the included studies

Generally, the data that are collected from quantitative research papers concern participant details, intervention details, outcome measures used, results of the study and the study methodology.24 For qualitative research studies, the data to be extracted include setting, participant details, methodology and methods used, phenomenon of interest, cultural and geographical information, and findings of the study (such as the themes, metaphors or categories reported). Standardised data collection tools or processes are typically established regardless of the type of review so that the data are collected in a consistent manner and are relevant to the research question.
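A standardised data collection tool can be as simple as a fixed set of fields that every reviewer completes for every study. The sketch below encodes the items listed above as two Python dataclasses and adds a check for fields left empty, so that gaps are followed up consistently. The field names and the example record are hypothetical; a real review would define its form in the protocol.

```python
from dataclasses import dataclass, fields


@dataclass
class QuantitativeExtraction:
    study_id: str
    methodology: str
    participants: str
    intervention: str
    outcome_measures: str
    results: str


@dataclass
class QualitativeExtraction:
    study_id: str
    setting: str
    participants: str
    methodology_and_methods: str
    phenomenon_of_interest: str
    cultural_and_geographical_context: str
    findings: list  # themes, metaphors or categories as reported by the authors


def incomplete_fields(record):
    """Names of fields left empty, so missing data can be followed up."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


# Hypothetical record with the results not yet extracted.
trial = QuantitativeExtraction(
    study_id="Trial 01",
    methodology="Parallel-group randomised controlled trial",
    participants="120 adults in outpatient rehabilitation",
    intervention="Supervised exercise vs usual care",
    outcome_measures="Walking speed at 12 weeks",
    results="",
)
print(incomplete_fields(trial))
```

Using the same structure for every study is what makes the extracted data consistent and comparable at the synthesis stage.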

Synthesise the data

Before we explain what review authors do at this stage of the process, you first need to understand the principles of meta-analysis and meta-aggregation in more detail. We will deal with both, starting with the principles for conducting a meta-analysis. As we mentioned at the beginning of this chapter, a meta-analysis pools the data from all studies in a systematic review that answer the same clinical question and produces an overall summary effect. The rationale behind a meta-analysis is that, because the samples of individual studies are combined, the overall sample size is increased, which improves the power of the analysis as well as the precision of the estimates of the effects of the intervention.18
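The gain in precision can be seen in a small worked example. The sketch below uses inverse-variance weighting, a standard fixed-effect method in which each study is weighted by 1/SE², so larger and more precise studies count for more. The three mean differences and standard errors are hypothetical, and this is not necessarily the method any particular review used.

```python
import math


def inverse_variance_pool(estimates, std_errors):
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    weights = [1 / se**2 for se in std_errors]  # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1 / total)
    return pooled, pooled_se


# Hypothetical mean differences (and their standard errors) from three trials.
effects = [2.0, 3.0, 2.5]
ses = [1.0, 1.0, 1.0]

pooled, pooled_se = inverse_variance_pool(effects, ses)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"Pooled MD {pooled:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Each trial alone has a standard error of 1.0, while the pooled standard error is about 0.58, so the pooled confidence interval is noticeably narrower than that of any single trial.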

When review authors plan a systematic review, the process by which they will conduct the meta-analysis should be clearly outlined. It has been suggested that the process should include the following steps:27

1. Ensure that the analysis strategy clearly matches the goals of the review.

2. Decide what types of study designs should be included in the meta-analysis.

3. Establish the criteria that will be used to decide whether or not to complete a meta-analysis (see the discussion of when not to complete a meta-analysis, below).

4. Identify what types of outcome data are likely to be found in the studies and outline how each will be managed.

5. Identify in advance what effect measures will be used.

6. Decide the type of meta-analysis that will be used (random effects, fixed effects, or both).

7. Decide how clinical and methodological diversity will be managed, what characteristics would define the heterogeneity and if and how this will be incorporated into the analysis.

8. Decide how risk of bias in included studies will be assessed and addressed in the analysis.

9. Decide on how missing data will be managed.

10. Decide how publication and reporting bias will be managed.

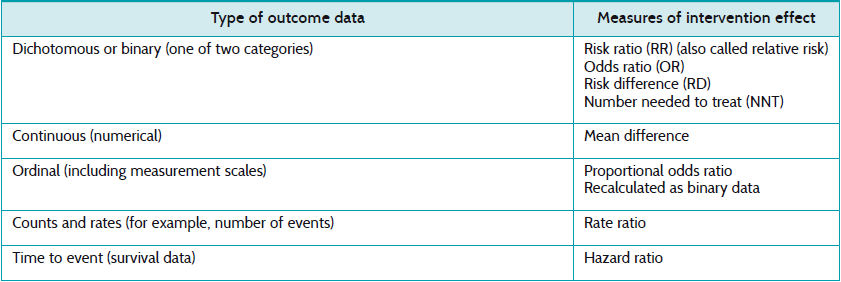

There are two stages to doing a meta-analysis. First, the intervention effect and its confidence interval are calculated for each study. The statistics used depend on the type of outcome data found in the studies; commonly used statistics include odds ratios, risk ratios (also known as relative risks) and mean differences.18 As we saw in Chapter 4 when learning how to interpret the results of randomised controlled trials, if the outcome is dichotomous then odds ratios or risk ratios may be used, whereas for continuous outcomes mean differences may be used.
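The first stage can be sketched in Python using the usual large-sample formulas: ratio measures get their 95% confidence intervals on the log scale, and mean differences get theirs on the original scale. The function names and the example counts are our own, not taken from any included study, and the sketch assumes no zero cells (a study with zero events in a group would need a continuity correction or another method, which is not handled here).

```python
from math import exp, log, sqrt

Z95 = 1.96  # standard normal quantile for a two-sided 95% CI

def risk_ratio(a, n1, c, n2):
    """a events among n1 intervention participants; c events among n2 controls."""
    rr = (a / n1) / (c / n2)
    se_log = sqrt(1/a - 1/n1 + 1/c - 1/n2)        # SE of ln(RR)
    return rr, exp(log(rr) - Z95 * se_log), exp(log(rr) + Z95 * se_log)

def odds_ratio(a, n1, c, n2):
    b, d = n1 - a, n2 - c                          # participants without the event
    or_ = (a * d) / (b * c)
    se_log = sqrt(1/a + 1/b + 1/c + 1/d)           # SE of ln(OR)
    return or_, exp(log(or_) - Z95 * se_log), exp(log(or_) + Z95 * se_log)

def mean_difference(m1, sd1, n1, m2, sd2, n2):
    md = m1 - m2
    se = sqrt(sd1**2 / n1 + sd2**2 / n2)           # SE of the difference in means
    return md, md - Z95 * se, md + Z95 * se

# Made-up trial: 15/100 events with the intervention versus 25/100 with control
print(risk_ratio(15, 100, 25, 100))
print(odds_ratio(15, 100, 25, 100))
print(mean_difference(20, 5, 50, 22, 6, 50))
```

For the made-up counts the risk ratio is 0.6, but its 95% confidence interval (roughly 0.34 to 1.07) includes 1, so this single small trial on its own is imprecise.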

Table 12.2 lists some of the statistical measures of intervention effect that can be used in a meta-analysis for each type of outcome data, as outlined in the Cochrane Handbook for Systematic Reviews of Interventions.27

Table 12.2 Examples of statistical measures of intervention effect that can be used in a meta-analysis

The second stage in a meta-analysis is calculating the overall intervention effect. This is generally a weighted average of the effect estimates from the individual studies, in which each study's weight reflects its sample size and variance. This is described in more detail later in this chapter.
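One common way to form this weighted average is the fixed-effect inverse-variance method, sketched below in Python for ratio measures. Each study is weighted by one over the square of its standard error, so larger, more precise studies count for more. The sketch assumes that each study reports a 95% confidence interval that is symmetric on the log scale, so that the standard error can be recovered from the interval; the function name and the inputs are illustrative only. A random-effects model (step 6 in the list above) would add a between-study variance term to each weight and is not shown here.

```python
from math import exp, log, sqrt

Z95 = 1.96

def pool_fixed_effect(studies):
    """Inverse-variance fixed-effect pooling of ratio measures (RR or OR).

    studies: list of (estimate, ci_low, ci_high) with 95% CIs.
    Returns the pooled estimate, its 95% CI and each study's percentage weight.
    """
    log_effects, weights = [], []
    for est, ci_low, ci_high in studies:
        se = (log(ci_high) - log(ci_low)) / (2 * Z95)   # recover SE of the log ratio from its CI
        log_effects.append(log(est))
        weights.append(1 / se**2)                        # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / total
    se_pooled = 1 / sqrt(total)
    return (exp(pooled),
            exp(pooled - Z95 * se_pooled),
            exp(pooled + Z95 * se_pooled),
            [100 * w / total for w in weights])

# Two made-up studies: a small imprecise one and a larger, more precise one
print(pool_fixed_effect([(0.60, 0.34, 1.07), (0.80, 0.65, 0.98)]))
```

In this example the larger study carries most of the weight (close to 90%), and the pooled confidence interval is narrower than either study's own interval.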

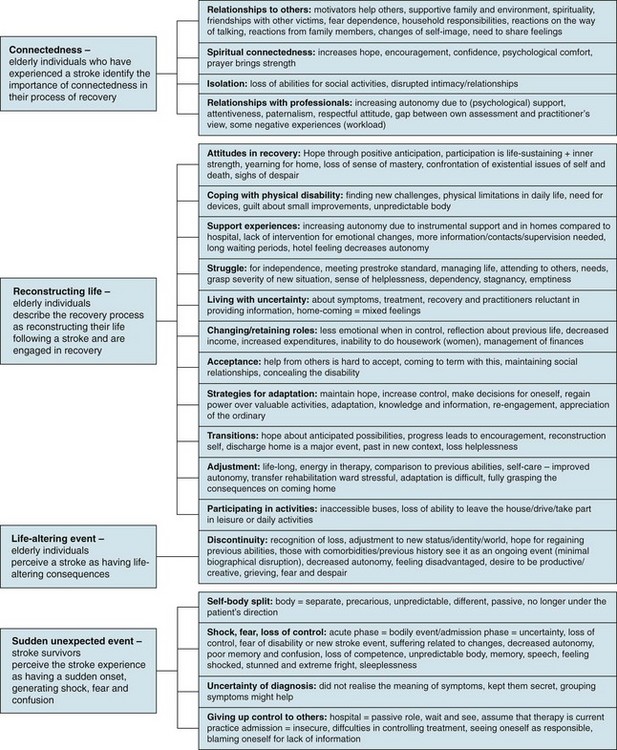

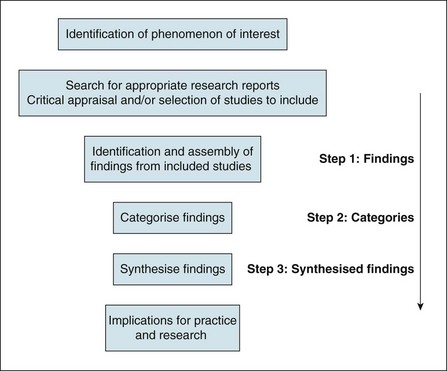

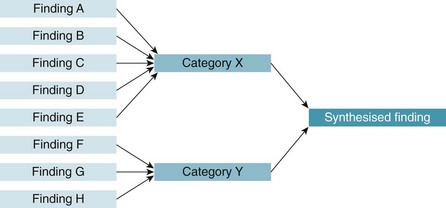

The procedure of meta-aggregation used in qualitative evidence synthesis involves three steps: (1) assembling the findings of studies (variously reported as themes, metaphors or categories); (2) pooling them through further aggregation based on similarity in meaning; and (3) arriving at a set of synthesised statements presented as ‘lines of action’ for practice and policy.12 In extracting the themes identified by the authors of original studies (step 1), the reviewer takes into account the literal descriptions presented in the results sections of the original articles, so that the extracted findings remain representative of the primary literature. Judging similarity of meaning when forming categories (step 2) depends on the reviewer's knowledge and understanding of the included studies. Having read and re-read the articles and extracted the findings from each included study, the reviewer looks for commonality in the themes and metaphors across all studies. Similarity may be conceptual (where a particular theme, metaphor or part thereof is identified across multiple papers) or descriptive (where the terminology associated with a theme or metaphor is consistent across studies). The actual move from findings to categories is similar to the procedures used in primary qualitative research methods (such as constant comparative analysis and thematic analysis). However, whereas the end result of a primary qualitative research project is a particular framework, matrix or conceptual model, the end result of a meta-aggregation is a set of declamatory statements or ‘lines of action’ (step 3).12 An adapted version of the process of meta-aggregation28 is illustrated in Figure 12.2.

Figure 12.2 Process of meta-aggregative synthesis. Adapted with permission from the Joanna Briggs Institute.28

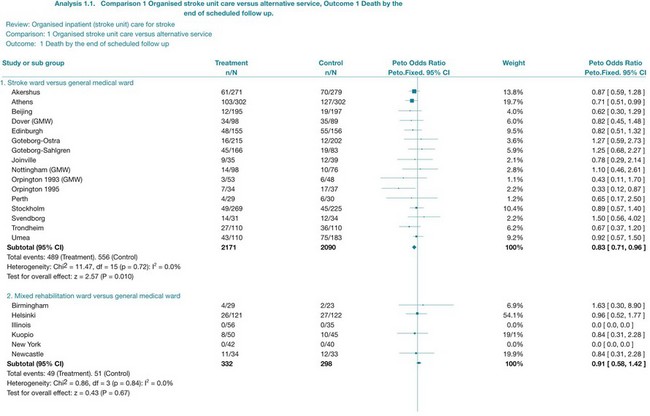

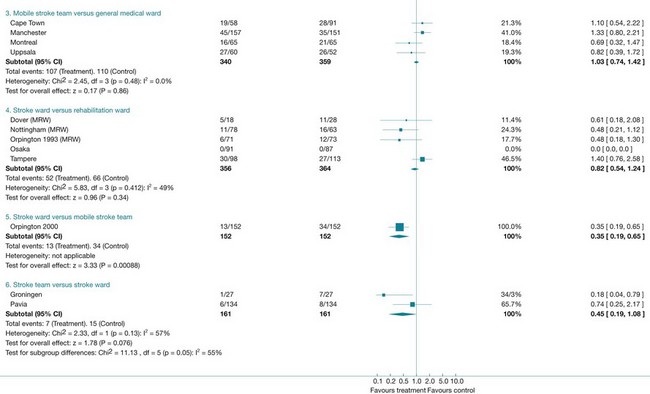
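The judgments about credibility, categories and synthesised statements are interpretive and are made by the reviewer, not by software. What a program can do is keep the audit trail that links each synthesised statement back to its categories and to the original findings, which is the kind of record that tools such as QARI are designed to support. The Python sketch below is a hypothetical data structure, not the QARI or SUMARI data model. The names are our own, and the rule that unsupported findings are left out of the synthesis is an assumption based on the credibility levels described earlier.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum

class Credibility(Enum):
    UNEQUIVOCAL = "unequivocal"
    CREDIBLE = "credible"
    UNSUPPORTED = "unsupported"

@dataclass
class Finding:
    study: str
    text: str                  # the original authors' theme or metaphor, kept verbatim (step 1)
    credibility: Credibility   # reviewer's judgment of the finding
    category: str              # assigned by the reviewer on similarity of meaning (step 2)

def meta_aggregate(findings, statements):
    """Link each synthesised statement (step 3) to its categories and their findings.

    statements maps a reviewer-written statement to the names of the categories it
    draws on. Unsupported findings are left out (an assumed inclusion rule).
    """
    categories = defaultdict(list)
    for finding in findings:
        if finding.credibility is not Credibility.UNSUPPORTED:
            categories[finding.category].append(finding)
    return {
        statement: {name: categories[name] for name in names if name in categories}
        for statement, names in statements.items()
    }

findings = [
    Finding("Study 1", "placeholder finding 1", Credibility.UNEQUIVOCAL, "Category A"),
    Finding("Study 2", "placeholder finding 2", Credibility.CREDIBLE, "Category A"),
    Finding("Study 3", "placeholder finding 3", Credibility.UNSUPPORTED, "Category B"),
]
trail = meta_aggregate(findings, {"Synthesised statement 1": ["Category A", "Category B"]})
for statement, cats in trail.items():
    for name, items in cats.items():
        print(statement, "<-", name, "<-", [f.study for f in items])
```

Here Category B drops out because its only finding was judged unsupported, and the statement remains traceable to the two studies behind Category A.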

Interpret and report the results

The results of a systematic review are presented in the text, and the results of a meta-analysis are also displayed visually using a forest plot. A forest plot combines several tree plots (explained in Chapter 4 and in Figure 4.3) in one figure. It displays both the information from the individual studies included in the review (such as each study's intervention effect and its confidence interval) and an estimate of the overall effect (see Figure 12.4 later in the chapter). In some systematic reviews it is not appropriate to combine the data statistically because of heterogeneity (that is, the studies are not similar enough in one or more respects); forest plots may still be presented, but without an overall estimate (summary statistic). Forest plots are useful because they allow readers to visually assess the amount of variation among the studies included in the review.18
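To make the layout of a forest plot concrete, the Python sketch below draws a crude text version. Each row is one study, with its point estimate (o) and confidence interval (-) on a log scale, the vertical line of no effect at 1 (| or +), and the overall estimate as a diamond. The function, its axis range and the numbers are illustrative assumptions. The study values are made up, and the overall row is simply their fixed-effect pooled result, rounded.

```python
from math import log

def text_forest_plot(studies, overall=None, axis_min=0.1, axis_max=10.0, width=41):
    """Draw ratio estimates with 95% CIs on a log scale, one text row per study.

    studies: list of (label, estimate, ci_low, ci_high); overall: the same tuple or None.
    """
    log_min, log_max = log(axis_min), log(axis_max)

    def column(x):
        # Clamp to the axis range, then map log(x) linearly onto columns 0..width-1.
        x = min(max(x, axis_min), axis_max)
        return round((log(x) - log_min) / (log_max - log_min) * (width - 1))

    null_col = column(1.0)                       # the vertical line of no effect
    rows = [(study, "o") for study in studies]
    if overall is not None:
        rows.append((overall, "◆"))              # summary estimate drawn as a diamond
    lines = []
    for (label, est, ci_low, ci_high), marker in rows:
        cells = [" "] * width
        for c in range(column(ci_low), column(ci_high) + 1):
            cells[c] = "-"                       # the confidence interval
        cells[null_col] = "+" if cells[null_col] == "-" else "|"
        cells[column(est)] = marker              # the point estimate
        lines.append(f"{label:<10}{''.join(cells)}  {est:.2f} [{ci_low:.2f}, {ci_high:.2f}]")
    return "\n".join(lines)

print(text_forest_plot(
    [("Study A", 0.60, 0.34, 1.07), ("Study B", 0.80, 0.65, 0.98)],
    overall=("Overall", 0.77, 0.64, 0.94),
))
```

Text output is coarse. For example, Study B's interval ends at 0.98, yet it appears to touch the line of no effect, so the numbers printed at the end of each row are the ones to rely on.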

How do you interpret a forest plot?

Under a previous convention, risk ratios and odds ratios less than 1 indicated that the experimental intervention was more effective than the control. On a forest plot, this meant that effect estimates to the left of the vertical line (the line of no effect) implied that the intervention was beneficial. This convention is no longer used, because the position of the effect estimates on the forest plot depends on the nature of the outcome (that is, whether the outcome is a benefit or a harm). Instead, when interpreting a forest plot you should look for descriptors on the horizontal axis that indicate which side of the line of no effect is associated with benefit from the intervention.29 We will take a closer look at interpreting a forest plot towards the end of this chapter, when we look at the results from a specific systematic review.
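The reasoning behind those axis descriptors can be written out as a small decision rule. For a harmful outcome such as death, a ratio below 1 favours the intervention. For a beneficial outcome such as independence, a ratio above 1 does. The Python sketch below is our own illustration of that logic for ratio measures with a 95% confidence interval; the function name and wording of the results are hypothetical.

```python
def interpret_ratio(estimate, ci_low, ci_high, outcome_is_harmful):
    """Say which side of the line of no effect (1.0) a ratio estimate falls on.

    For a harmful outcome a ratio below 1 favours the intervention;
    for a beneficial outcome a ratio above 1 does.
    """
    if ci_low <= 1.0 <= ci_high:
        return "CI includes 1: no clear evidence of a difference"
    below_one = ci_high < 1.0
    favours_intervention = below_one if outcome_is_harmful else not below_one
    return "favours intervention" if favours_intervention else "favours control"

# The same numbers mean opposite things depending on the outcome
print(interpret_ratio(0.8, 0.7, 0.9, outcome_is_harmful=True))    # e.g. death
print(interpret_ratio(0.8, 0.7, 0.9, outcome_is_harmful=False))   # e.g. independence
```

This is why the position of a result on the plot cannot be read correctly without knowing the outcome and checking the axis labels.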

Not all systematic reviews can undertake a meta-analysis, particularly when there is excessive heterogeneity. This means that the studies are not similar enough to be combined, for example because their interventions or outcomes are too different. In a systematic review of family therapy for depression, for instance, three trials were found, but their results were not combined because the studies were very heterogeneous in terms of the interventions, participants and measurement instruments involved.30 These differences mean that pooling the results is not appropriate. When this occurs, review authors describe the results of each study separately in a narrative way.
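Statistical heterogeneity is often quantified with Cochran's Q and the I² statistic, which are described in the Cochrane Handbook rather than in this chapter. Q measures how far the individual study results spread around the pooled estimate, and I² expresses the share of that spread that is greater than chance alone would produce. The Python sketch below is a minimal illustration using inverse-variance weights on the log scale; the inputs are made up. Note that these statistics cannot capture clinical differences like those in the family therapy example, where the decision not to pool rested on differences in interventions, participants and instruments.

```python
from math import log

def heterogeneity(log_effects, standard_errors):
    """Cochran's Q, its degrees of freedom and I^2 (%) for study effects on the log scale."""
    weights = [1 / se**2 for se in standard_errors]          # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_effects))
    df = len(log_effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0      # share of variation beyond chance
    return q, df, i2

# Two made-up trials pointing in opposite directions, each fairly precise
print(heterogeneity([log(0.5), log(2.0)], [0.1, 0.1]))
```

Here I² comes out close to 99%, signalling that the two results disagree far more than chance would explain.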

Conclusions that may be reached in systematic reviews include:

1. The results from a review consistently show positive (or negative) effects of an intervention. In this case a review might state that the results (that is, the evidence) are convincing.

2. Where there are conflicting results from studies within the review, the authors might state that the evidence is inconclusive.

3. Some reviews find only a few studies (or sometimes none) that meet their eligibility criteria and conclude that there is insufficient evidence about that particular intervention.

As a reader of systematic reviews it is important that you do not confuse the concept of ‘no evidence’ (or ‘no evidence of an effect’) with ‘evidence of no effect’. In other words, when there is inconclusive evidence, it is incorrect to state that the results of the review show that an intervention has ‘no effect’ or is ‘no different’ from the control intervention.25
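The distinction can be illustrated with the width of a confidence interval for a ratio measure. A wide interval that includes 1 is inconclusive. Only a narrow interval that lies entirely within a range of effects too small to matter supports a claim of no important effect. The Python sketch below is our own illustration, and the 0.9 to 1.1 range for a trivial effect is a hypothetical choice. In practice, that range would have to be justified clinically for the outcome in question.

```python
def classify_ratio_ci(ci_low, ci_high, trivial_low=0.9, trivial_high=1.1):
    """Classify a 95% CI for a ratio measure.

    trivial_low/trivial_high bound a range of effects judged too small to matter;
    the 0.9-1.1 default is a hypothetical choice, not a standard.
    """
    if ci_high < 1.0 or ci_low > 1.0:
        return "evidence of an effect"
    if trivial_low <= ci_low and ci_high <= trivial_high:
        return "evidence of no important effect"
    return "inconclusive (no evidence of an effect, which is not evidence of no effect)"

print(classify_ratio_ci(0.40, 2.50))   # few small trials: wide CI
print(classify_ratio_ci(0.95, 1.05))   # many large trials: narrow CI around 1
```

Both intervals include 1, but only the second one justifies saying that the intervention makes little or no difference.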

For qualitative evidence syntheses, supporting tools such as the System for the Unified Management, Assessment and Review of Information (SUMARI) software and the Qualitative Assessment and Review Instrument (QARI) have been developed. They can help reviewers who are conducting a meta-aggregative synthesis to document each step of their decision process and to link synthesised statements to the findings retrieved from original studies.31

The outcome of a meta-aggregation generally looks like a chart such as the one presented in Figure 12.3.32

Figure 12.3 Meta-aggregative approach to analysis. Reproduced with permission from the Joanna Briggs Institute.32

In meta-synthesis approaches that are interpretive in nature, research findings are more often presented as a web of ideas, theory or conceptual model. Their graphical display is less linear than the meta-aggregative type of presentation of findings that leads to particular lines of action. Two different formulations of a synthesised statement have been proposed that indicate direct action: an if-then structure or a declamatory form.12 Both forms help authors of evidence syntheses to formulate suggestions to health professionals and policy makers on how to move forward with the results of the review. Two examples of the final syntheses derived from already published meta-aggregations are provided below:

• Using the ‘if-then’ structure: a review of caregivers' experiences of providing home-based care for people with HIV in Africa concluded that ‘if formal and informal support structures are available to caregivers providing home-based care to persons with HIV/AIDS, then the challenges and burdens they face may be lessened’.33

• Using the declamatory form: a review that explored the experiences of overseas-trained registered nurses working in Australia suggested that ‘the clash of cultures between overseas nurses and the dominant Australian culture should be addressed in a transition program’.34

These forms emphasise the probability of the claim and lead directly to an operational prediction. In these two examples, the ‘lines of action’ are, respectively, putting in place formal and informal support structures for caregivers, and the ‘inclusion of cultural awareness training in transition programs’. In practice, the synthesised statements are often accompanied by a short narrative summary.35

Critical appraisal of systematic reviews

Now that you have an understanding of what is involved in undertaking a systematic review of quantitative or qualitative studies, let us move on to looking at how to critically appraise systematic reviews. As with other types of studies, not all systematic reviews are carried out using rigorous methods and, therefore, bias may be introduced into the final results and conclusions of the review. A review of 300 systematic reviews found that not all systematic reviews were equally reliable and that their reporting could be improved if authors and journals adhered to widely agreed reporting standards.36 The fact that the evidence you have found to answer your clinical question is a systematic review does not mean that you can automatically trust its results. It is important that you critically appraise systematic reviews to determine whether you can trust their results and conclusions.

Using the three-step critical appraisal process that we have used elsewhere in this book to appraise other types of studies, the three key elements to be considered when appraising a systematic review are: (1) the validity of the review methodology; (2) the magnitude and precision of the intervention effect (or the trustworthiness of the findings for qualitative evidence syntheses); and (3) the applicability of the review to your specific patient or patient population.

There are a number of checklists or tools that can be used to critically appraise systematic reviews, many of which are based on the early Overview Quality Assessment Questionnaire37 and the article on overviews in the users' guide to evidence-based medicine.38 Three commonly used appraisal checklists are the CASP checklist for appraising reviews (casp-uk.net); the Assessment of Multiple Systematic Reviews (AMSTAR), an 11-item tool that has good agreement, reliability, construct validity and feasibility;39 and the criteria proposed by Greenhalgh and Donald.40 Checklists or frameworks for appraising reviews of qualitative as well as quantitative studies are also available.41

Box 12.1 lists key questions that you can ask when critically appraising a systematic review. These questions are adapted from the appraisal criteria suggested by Greenhalgh and Donald.40

Critical appraisal of systematic reviews—a worked example

To help you further understand how to critically appraise and use systematic reviews to inform clinical decisions, we will consider an extended clinical scenario (with two parts), formulate relevant clinical questions, locate a systematic review of quantitative studies and a review of qualitative studies that might address those questions and then critically appraise the reviews. The box describes the clinical scenario that we will use.

The importance of reporting in systematic reviews

Determining the methodological quality or risk of bias of a systematic review depends on how well the review's methods are reported. Based on studies which found that systematic reviews were generally poorly reported,45 an international group developed a guideline for reporting meta-analyses of randomised controlled trials called the QUOROM Statement (QUality Of Reporting Of Meta-analyses).46 In 2009, the guideline was updated to encompass all types of systematic reviews about the effects of interventions, not just those using meta-analyses of randomised controlled trials. The updated guideline is called the Preferred Reporting Items for Systematic Reviews and Meta-Analyses, or the PRISMA statement.47 Because reporting statements improve the quality of reporting of studies,48 an increasing number of journals now recommend, or insist, that review authors use the PRISMA statement when writing their reviews.49

Resolution of clinical scenario

The review of quantitative studies that we have just appraised indicates that stroke units can result in improved outcomes in terms of survival, independence and avoiding institutional care when compared with care that is provided in alternative services. The outcome data from this review, particularly the data that compared stroke-unit care with care in medical wards, could be used by the therapists in our scenario when they are discussing hospital policy with the administrator to argue that people who have had a stroke should be cared for in the stroke unit. Data about the costs involved were not provided in the review; however, the review did find that there was no increase in the length of stay for participants who received care in stroke units. This is only a proxy for cost, and in future studies it would be important to ascertain full details of the costs involved. Discussions with the administrator about the feasibility of implementing a plan to ensure that all people who are admitted to hospital with a stroke are admitted to the stroke unit as soon as possible would also be needed.

From the meta-aggregation, a few important issues can be highlighted that would extend the discussion of stroke-unit care to include aspects of its appropriateness and meaningfulness. The team members should consider how stroke-unit care could optimally support elderly people who are recovering from stroke, given that they often experience fear and feelings of disconnection from friends and family, and that, both while in hospital and after discharge, they will be required to do a huge amount of physical and psychological work. Discussion about ways of giving patients opportunities to talk about their perceptions of improvement and progress may also be warranted.

References

1. Mulrow, C, Cook, D, Davidoff, F. Systematic reviews: critical links in the great chain of evidence. Ann Intern Med. 1997; 126:389–391.

2. Cook, D, Mulrow, C, Haynes, RB. Systematic reviews: synthesis of best evidence for clinical decisions. Ann Intern Med. 1997; 126:376–380.

3. Hannes, K, Van den Noortgate, W. Master thesis projects in the field of education: who says we need more basic research? Educational Research. 2012; 3:340–344.

4. Greenhalgh, T. How to read a paper: papers that summarise other papers (systematic reviews and meta-analyses). BMJ. 1997; 315:672–675.

5. Phillips, B, Ball, C, Sackett, D, et al. Levels of evidence. Oxford: Centre for Evidence-based Medicine; 2009. www.cebm.net/index.aspx?o=1025

6. National Health and Medical Research Council (NHMRC). NHMRC additional levels of evidence and grades for recommendations for developers of guidelines. Canberra: NHMRC; 2009. www.nhmrc.gov.au/_files_nhmrc/file/guidelines/developers/nhmrc_levels_grades_evidence_120423.pdf

7. Meijer, R, Ihnenfeldt, D, van Limbeek, J, et al. Prognostic factors in the subacute phase after stroke for the future residence after six months to one year. A systematic review of the literature. Clin Rehab. 2003; 17:512–520.

8. Irwig, L, Tosteson, A, Gatsonis, C, et al. Guidelines for meta-analyses evaluating diagnostic tests. Ann Intern Med. 1994; 120:667–676.

9. Mitchell, A. A meta-analysis of the accuracy of the mini-mental state examination in the detection of dementia and mild cognitive impairment. J Psychiatr Res. 2009; 43:411–431.

10. Noyes, J, Popay, J, Pearson, A, et al. Chapter 20: Qualitative research and Cochrane reviews. In: Higgins JPT, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration: 201. www.cochrane-handbook.org

11. Goldsmith, M, Bankhead, C, Austoker, J. Synthesising quantitative and qualitative research in evidence-based patient information. J Epidemiol Community Health. 2007; 61:262–270.

12. Hannes, K, Lockwood, C. Pragmatism as the philosophical underpinning of the Joanna Briggs meta-aggregative approach to qualitative evidence synthesis. J Adv Nurs. 2011; 67:1632–1642.

13. Pearson, A. Balancing the evidence: incorporating the synthesis of qualitative data into systematic reviews. JBI Reports. 2004; 2:45–64.

14. Hannes, K, Aertgeerts, B, Goedhuys, J. Obstacles to implementing evidence-based practice in Belgium: a context-specific qualitative evidence synthesis including findings from different health care disciplines. Acta Clinica Belgica. 2012; 67:99–107.

15. McInnes, E, Askie, L. Evidence review on older people's views and experiences of falls prevention strategies. Worldviews Evid Based Nurs. 2004; 1:20–37.

16. Allen, IE, Olkin, I. Estimating the time to conduct a meta-analysis from number of citations retrieved. JAMA. 1999; 282:634–635.

17. Jackson, N, Waters, E. Criteria for the systematic review of health promotion and public health interventions. Health Promot Int. 2005; 20:367–374.

18. Akobeng, AK. Understanding systematic reviews and meta-analysis. Arch Dis Child. 2005; 90:845–848.

19. Bhandari, M, Guyatt, G, Montori, V, et al. Current concepts review. Users guide to orthopedic literature: how to use a systematic review. J Bone Joint Surg. 2002; 84A:1672–1682.

20. Hopewell, S, Clarke, M, Lefebvre, C, et al. Handsearching versus electronic searching to identify reports of randomised trials. Cochrane Database Syst Rev. 2007; (2).

21. Kennedy, G, Rutherford, G. Identifying randomised controlled trials in the journal. Cape Town, South Africa: Presentation at the Eighth International Cochrane Colloquium; 2000.

22. Greenhalgh, T, Peacock, R. Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ. 2005; 331:1064–1065.

23. Armstrong, R, Jackson, N, Doyle, J, et al. It's in your hands: the value of handsearching in conducting systematic reviews. J Pub Health. 2005; 27:388–391.

24. Lefebvre, C, Manheimer, E, Glanville, J. Chapter 6: Searching for studies. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration: 201. http://handbook.cochrane.org/

25. Jüni, P, Altman, D, Egger, M. Systematic reviews in health care: assessing the quality of controlled clinical trials. BMJ. 2001; 323:42–46.

26. Higgins, JPT, Altman, DG, Sterne, JAC. Chapter 8: Assessing risk of bias in included studies. In: Higgins JPT, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration: 201. http://handbook.cochrane.org/

27. Deeks, JJ, Higgins, JPT, Altman, DG. Chapter 9: Analysing data and undertaking meta-analyses. In: Higgins JPT, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration: 201. www.cochrane-handbook.org

28. The Joanna Briggs Institute. Joanna Briggs Institute Reviewers’ Manual. Adelaide: Joanna Briggs Institute; 2008.

29. Schünemann, H, Oxman, A, Higgins, J, et al. Chapter 11: Presenting results and ‘Summary of findings’ tables. In: Higgins J, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration: 201. www.cochrane-handbook.org

30. Henken, H, Huibers, M, Churchill, R, et al. Family therapy for depression. Cochrane Database Syst Rev. 2007; (3).

31. Joanna Briggs Institute. SUMARI User's Manual Version 5.0: System for the Unified Management, Assessment and Review of Information. Adelaide: Joanna Briggs Institute; 2012. www.joannabriggs.edu.au/documents/sumari/SUMARI%20V5%20User%20guide.pdf

32. The Joanna Briggs Institute. Comprehensive Systematic Review Study Guide Module 4. Adelaide: The Joanna Briggs Institute; 2012.

33. McInerney, P, Brysiewicz, P. A systematic review of the experiences of caregivers in providing home-based care to persons with HIV/AIDS in Africa. JBI Library of Systematic Reviews. 2009; 7:130–153.

34. Konno, R. Support for overseas qualified nurses in adjusting to Australian nursing practice: a systematic review. Int J Evid Based Healthc. 2006; 4:83–100.

35. McInnes, E, Wimpenny, P. Using qualitative assessment and review instrument software to synthesise studies on older people's views and experiences of falls prevention. Int J Evid Based Healthc. 2008; 6:337–344.

36. Moher, D, Tetzlaff, J, Tricco, A, et al. Epidemiology and reporting characteristics of systematic reviews. PLoS Med. 2007; 4:e78.

37. Oxman, A, Guyatt, G. Validation of an index of the quality of review articles. J Clin Epidemiol. 1991; 44:1271–1278.

38. Oxman, A, Cook, D, Guyatt, G. Users’ guides to the medical literature: VI. How to use an overview. JAMA. 1994; 272:1367–1371.

39. Shea, B, Hamel, C, Wells, G, et al. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. J Clin Epidemiol. 2009; 62:1013–1020.

40. Greenhalgh, T, Donald, A. Evidence-based health care workbook: understanding research: for individual and group learning. London: BMJ Publishing Group; 2000.

41. Pope, C, Mays, N, Popay, J. Synthesising qualitative and quantitative health research: a guide to methods. Maidenhead (UK): Open University Press; 2007.

42. Stroke Unit Trialists’ Collaboration. Organised inpatient (stroke unit) care for stroke. Cochrane Database Syst Rev. 2007; (4).

43. Lamb, M, Buchanan, D, Godfrey, C, et al. The psychosocial spiritual experience of elderly individuals recovering from stroke: a systematic review. Int J Evid Based Healthc. 2008; 6:173–205.

44. Hannes, K, Lockwood, C, Pearson, A. A comparative analysis of three online appraisal instruments’ ability to assess validity in qualitative research. Qual Health Res. 2010; 20:1736–1743.

45. Sacks, HS, Reitman, D, Pagano, D, et al. Meta-analysis: an update. Mt Sinai J Med. 1996; 63:216–224.

46. Moher, D, Cook, D, Eastwood, S, et al. Improving the quality of reporting of meta-analysis of randomised controlled trials: the QUOROM statement. Lancet. 1999; 354:1896–1900.

47. Liberati, A, Altman, D, Tetzlaff, J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009; 6:e1000100.

48. Simera, I, Moher, D, Hirst, A, et al. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 2010; 8:24.

49. Tao, K, Li, X, Zhou, Q, et al. From QUOROM to PRISMA: a survey of high-impact medical journals’ instructions to authors and a review of systematic reviews in anesthesia literature. PLoS One. 2011; 6:e27611.

50. Kalra, L, Eade, J. Role of stroke rehabilitation units in managing severe disability after stroke. Stroke. 1995; 26:2031–2034.

51. Vemmos, K, Takis, K, Madelos, D, et al. Stroke unit treatment versus general medical wards: long term survival. Cerebrovasc Dis. 2001; 11:8.

52. Leonardi-Bee, J. Presenting and interpreting meta-analyses. RLO, School of Nursing and Academic Division of Midwifery, University of Nottingham, 2007. www.nottingham.ac.uk/nursing/sonet/rlos/ebp/meta-analysis2/index.html

53. Hannes, K. The Joanna Briggs Institute Best Practice Information Sheet: the psychosocial and spiritual experiences of elderly individuals recovering from a stroke. Nurs Health Sci. 2010; 12:515–518.