Language and Thinking Processes

We now are ready to explore the language and thinking of researchers who use the range of designs relevant to health and human service inquiry. As discussed in previous chapters, significant philosophical differences exist between experimental-type and naturalistic research traditions. Experimental-type designs are characterized by thinking and action processes based in deductive logic and a positivist paradigm in which the researcher seeks to identify a single reality through systematized observation. This reality is understood by reducing it to its parts, observing and measuring the parts, and then examining the relationship among these parts. Ultimately, the purpose of research in the experimental-type tradition is to predict what will occur in one part of the universe by knowing and observing another part.

Unlike experimental-type design, the naturalistic tradition is characterized by multiple ontological and epistemological foundations. However, naturalistic designs share common elements that are reflected in their languages and thinking processes. Researchers in the naturalistic tradition base their thinking in inductive and abductive logic and seek to understand phenomena within the context in which they are embedded. Thus, the notion of multiple realities and the attempt to characterize holistically the complexity of human experience are two elements that pervade naturalistic approaches.

Mixed-method designs draw on and integrate the language and thinking of both traditions.

Now we turn to the philosophical foundations, language, and criteria for scientific rigor for experimental-type and naturalistic traditions. “Rigor” is a term used in research that refers to procedures that enhance and are used to judge the integrity of the research design. As you read this chapter, compare and contrast the two traditions and consider the application and integration of both.

Experimental-type language and thinking processes

Within the experimental-type research tradition, there is consensus about the adequacy and scientific rigor of action processes. Thus, all designs in the experimental-type tradition share a common language and a unified perspective as to what constitutes an adequate design. Although there are many definitions of research design across the range of approaches, all types of experimental-type research share the same fundamental elements and a single, agreed-on meaning (Box 8-1).

In experimental-type research, design is the plan or blueprint that specifies and structures the action processes of collecting, analyzing, and reporting data to answer a research question. As Kerlinger stated in his classic definition, design is “the plan, structure, and strategy of investigation conceived so as to obtain answers to research questions and to control variance.”1 “Plan” refers to the blueprint for action or the specific procedures used to obtain empirical evidence. “Structure” represents a more complex concept and refers to a model of the relationships among the variables of a study. That is, the design is structured in such a way as to enable an examination of a hypothesized relationship among variables. This relationship is articulated in the research question. The main purpose of the design is to structure the study so that the researcher can answer the research question.

In the experimental-type tradition, the purpose of the design is to control variance, that is, to restrict or control extraneous influences on the study. By exerting such control, the researcher can state with a degree of statistical assuredness that study outcomes are a consequence of either the manipulation of the independent variable (e.g., true experimental design) or that which was observed and analyzed (e.g., nonexperimental design). In other words, the design provides a degree of certainty that an investigator's observations are not haphazard or random but reflect what is considered to be a true and objective reality. The researcher is thus concerned with developing the optimal design, one that eliminates or controls what researchers refer to as disturbances, variances, extraneous factors, or situational contaminants. The design controls these disturbances or situational contaminants through the implementation of systematic procedures and data collection efforts, as discussed in subsequent chapters. The purpose of imposing control and restrictions on observations of natural phenomena is to ensure that the relationships specified in the research question(s) can be identified, understood, and ultimately predicted.

The element of design is what separates research from the everyday types of observations and thinking and action processes in which each of us engages. Design instructs the investigator to “do this” or “don't do that.” It provides a mechanism of control to ensure that data are collected objectively, in a uniform and consistent manner, with minimal investigator involvement or bias. The important points to remember are that the investigator remains separate from and uninvolved with the phenomena under study (e.g., to control one important potential source of disturbance or situational contaminant) and that procedures and systematic data collection provide mechanisms to control and eliminate bias.

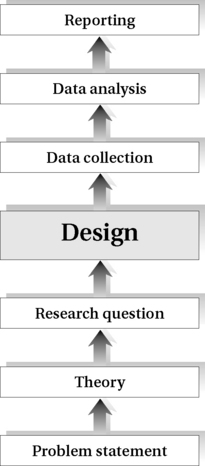

Sequence of Experimental-Type Research

Design is pivotal in the sequence of thoughts and actions of experimental-type researchers (Figure 8-1). It stems from the thinking processes of formulating a problem statement, a theory-specific research question that emerges from scholarly literature, and hypotheses or expected outcomes. Design dictates the nature of the action processes of data collection, the conditions under which observations will be made, and, most important, the type of data analyses and reporting that will be possible.

Do you recall our previous discussion on the essentials of research? In that discussion, we illustrated how a problem statement indicates the purpose of the research and the broad topic the investigator wants to address. In experimental-type inquiry the literature review guides the selection of theoretical principles and concepts of the study and provides the rationale for a research project. This rationale is based on the nature of research previously conducted and the level of theory development for the phenomena under investigation. The experimental-type researcher must develop a literature review in which the specific theory, scope of the study, research questions, concepts to be measured, nature of the relationship among concepts, and measures that will be used in the study are discussed and supported. Thus, the researcher develops a design that builds on both the ideas that have been formulated and the actions that have been conducted in ways that conform to the rules of scientific rigor.

The choice of design is shaped not only by the literature and level of theory development but also by the specific question asked and by resources or practical constraints, such as access to target populations and monetary and staff considerations. There is nothing inherently good or bad about a design. Every research study design has its particular strengths and weaknesses. The adequacy of a design is based on how well the design answers the research question that is posed. That is the most important criterion for evaluating a design. If it does not answer the research question, then the design, regardless of how rigorous it may appear, is not appropriate. It is also important to identify and understand the relative strengths and weaknesses of each design element. Methodological decisions are purposeful and should be made with full recognition of what is gained and what is not by implementing each design element.

Structure of Experimental-Type Research

Experimental-type research has a well-developed language that sets clear rules and expectations for the adequacy of design and research procedures. As you see in Table 8-1, nine key terms structure experimental-type research designs. Let us examine the meaning of each.

TABLE 8-1

Key Terms in Structuring Experimental-Type Research

| Term | Definition |
| --- | --- |
| Concept | Symbolically represents observation and experience |
| Construct | Represents a model of relationships among two or more concepts |
| Conceptual definition | Concept expressed in words |
| Operational definition | How the concept will be measured |
| Variable | Operational definition of a concept assigned numerical values |
| Independent variable | Presumed cause of the dependent variable |
| Intervening variable | Phenomenon that has an effect on study variables |
| Dependent variable | Phenomenon that is affected by the independent variable or is the presumed effect or outcome |
| Hypothesis | Testable statement that indicates what the researcher expects to find |

Concepts

A concept is defined as the words or ideas that symbolically represent observations and experiences. Concepts are not directly observable; rather, what they describe is observed or experienced. Concepts are “(1) tentative, (2) based on agreement, and (3) useful only to the degree that they capture or isolate something significant and definable.”2 For example, the terms “grooming” and “work” are both concepts that describe specific observable or experienced activities in which people engage on a regular basis. Other concepts, such as “personal hygiene” or “sadness,” have various definitions, each of which can lead to the development of different assessment instruments to measure the same underlying concept.

Constructs

As discussed in Chapter 6, constructs are theoretical creations based on observations but cannot be observed directly or indirectly.3 A construct can only be inferred and may represent a larger category with two or more concepts or constructs.

Definitions

The two basic types of definition relevant to research design are conceptual definitions and operational definitions. A conceptual definition, or lexical definition, stipulates the meaning of a concept or construct with other concepts or constructs. An operational definition stipulates the meaning by specifying how the concept is observed or experienced. Operational definitions “define things by what they do.”

Variables

A variable is a concept or construct to which numerical values are assigned. By definition, a variable must have more than one value even if the investigator is interested in only one condition.

There are three basic types of variables: independent, intervening, and dependent. An independent variable “is the presumed cause of the dependent variable, the presumed effect.”4 Thus, a dependent variable (also referred to as “outcome” and “criterion”) refers to the phenomenon that the investigator seeks to understand, explain, or predict. The independent variable almost always precedes the dependent variable and may have a potential influence on it. The independent variable is also referred to as the “predictor variable.” An intervening variable (also called a “confounding” or an “extraneous” variable) is a phenomenon that has an effect on the study variables but that may or may not be the object of the study.

Investigators treat intervening variables differently depending on the research question. For example, an investigator may only be interested in examining the relationship between the independent and dependent variables and may thus statistically control or account for an intervening variable. The investigator would then examine the relationship after statistically “removing” the effect of one or more potential intervening variables. However, the investigator may want to examine the effect of an intervening variable on the relationship between the independent and dependent variables. For this question, the researcher would employ statistical techniques to determine the interrelationships.
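One common way to statistically “remove” the effect of a single intervening variable is the first-order partial correlation. The sketch below is only an illustration of that idea, not a procedure prescribed in this chapter. The variable names and the data are hypothetical and were constructed so that the intervening variable accounts for the entire association.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def partial_correlation(x, y, z):
    """Correlation between x and y after controlling for z."""
    r_xy = pearson(x, y)
    r_xz = pearson(x, z)
    r_yz = pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical data: both study variables are driven by the intervening variable.
intervening = [1, 1, 2, 2, 3, 3, 4, 4]
independent = [1.5, 0.5, 2.5, 1.5, 3.5, 2.5, 4.5, 3.5]
dependent = [1.5, 0.5, 1.5, 2.5, 3.5, 2.5, 3.5, 4.5]

raw = pearson(independent, dependent)
controlled = partial_correlation(independent, dependent, intervening)
print(f"raw correlation: {raw:.2f}")
print(f"after controlling for the intervening variable: {controlled:.2f}")
```

In these constructed data the raw correlation is strong, yet it essentially disappears once the intervening variable is controlled. Real data rarely behave this cleanly, and an investigator interested in the intervening variable itself would examine its relationships with the other variables rather than remove it.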

Hypotheses

A hypothesis is defined as a testable statement that indicates what the researcher expects to find, given the theory and level of knowledge in the literature. A hypothesis is stated in such a way that it will either be verified or falsified by the research process. The researcher can develop either a directional or a nondirectional hypothesis (see Chapter 7). In a directional hypothesis, the researcher indicates whether she or he expects to find a positive relationship or an inverse relationship between two or more variables. A positive relationship is one in which both variables increase and decrease together to a greater or lesser degree.

In an inverse relationship, the variables are associated in opposite directions (i.e., as one increases, the other decreases). An inverse relationship may involve a hypothesis similar to the following:

In this statement, the expectation is that as the variable “employment” increases, difficulty in self-care will decrease.
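The difference between a positive and an inverse relationship can be sketched with the sign of the sample covariance. The function name and the numbers below are hypothetical and serve only to illustrate the employment and self-care expectation described above. They are not data from any study.

```python
def direction_of_relationship(x, y):
    """Classify a relationship as 'positive', 'inverse', or 'none'
    using the sign of the sample covariance."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    if cov > 0:
        return "positive"
    if cov < 0:
        return "inverse"
    return "none"


# Hypothetical observations for five participants.
employment_hours = [0, 10, 20, 30, 40]
self_care_difficulty = [9, 7, 6, 4, 2]

print(direction_of_relationship(employment_hours, self_care_difficulty))
```

The sign shows only the direction of the association in the sample. Whether that association supports the directional hypothesis is a matter for statistical testing.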

Now that we have defined nine key terms that are essential to experimental-type designs, let us review the way they are actually used in research. Experimental-type research questions narrow the scope of the inquiry to specific concepts, constructs, or both. These concepts are then defined through the literature and are operationalized into variables that will be investigated descriptively, relationally, or predictively. The hypothesis establishes an equation or structure by which independent and dependent variables are examined and tested.

Plan of Design

The plan of an experimental-type design requires a set of thinking processes in which the researcher considers five core issues: bias, manipulation, control, validity, and reliability.

Bias

Bias is defined as the potential unintended or unavoidable effect on study outcomes. When bias is present and unaccounted for, the investigator may not be able to fully understand whether the study findings are accurate or reflect sources of bias and thus may misinterpret the results. Many factors can cause bias in a study (Box 8-2). We already discussed one source of bias, the “intervening variable.”

Another source is instrumentation. This involves the ways in which data are obtained in experimental-type approaches. The two major sources of bias from instrumentation are inappropriate data collection procedures and inadequate questions.

In the situation portrayed above, the procedures for data collection are problematic and introduce bias into the study.

Interview questions that elicit a socially correct response and questions that are vague, unclear, or ambiguous introduce a source of bias into the study design.

Sampling is another major source of bias.

Experimental-type designs are characterized by having detailed and elaborate plans and procedures that are established before conducting the study. Thus, any deviation from the original plan for data collection and analysis may be a potential source of bias. The violation of standard procedures is an important point at which bias may be introduced into an experimental-type study.

An essential part of planning a design is to introduce systematic procedures to minimize or eliminate as many sources of bias as possible. As you read subsequent chapters, think about the potential sources of bias. Also, as you conduct your own studies or read and analyze published studies, consider findings and conclusions in light of potential bias.

Manipulation

Manipulation is defined as the action process of maneuvering the independent variable so that the effect of its presence, absence, or degree on the dependent variable can be observed. To the extent possible, experimental-type researchers attempt to isolate the independent and dependent variables and then manipulate or change the condition of the independent variable so that the cause-and-effect relationship between the variables can be examined.

Control

Control is defined as the set of action processes that direct or manipulate factors to achieve an outcome. Control plays a critical role in experimental-type design. By controlling not only the independent variable but also other aspects of the research context, such as how study participants are assigned to groups, the relationship among the study variables can be observed. In experimental-type design, procedures to establish control are implemented to minimize the influences of extraneous variables on the outcome or dependent variable.

Two basic methods of control are typically introduced: random group assignment and the use of a control group. By randomly assigning subjects to one group or the other, the investigator attempts to develop equivalence between the groups, that is, to eliminate subject bias caused by inherent differences that may occur in the two groups.

Another method to enhance control is the use of a control group. A control group is one in which the experimental or comparative condition is absent.
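The two methods of control can be sketched together as a simple random assignment of participants to an experimental group and a control group. This is a minimal illustration under stated assumptions: the function name, the participant identifiers, and the even split are all hypothetical, and actual studies often use more elaborate allocation schemes.

```python
import random


def randomly_assign(participant_ids, seed=None):
    """Shuffle participants and split them into two groups of near-equal size."""
    rng = random.Random(seed)          # a seed makes the allocation reproducible
    shuffled = list(participant_ids)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {
        "experimental": shuffled[:midpoint],
        "control": shuffled[midpoint:],
    }


# Hypothetical participant identifiers.
participants = [f"P{number:02d}" for number in range(1, 21)]
groups = randomly_assign(participants, seed=42)
print(groups["experimental"])
print(groups["control"])
```

Because chance alone decides who enters each group, inherent differences among participants are expected to balance out across groups rather than favor one condition.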

Validity

Validity is a concept that has numerous applications in experimental-type research. However, the concept as it applies to design refers to the extent to which your study answers the research questions and your findings are accurate or reflect the underlying purpose of the study. Although many classifications of validity have been developed, we discuss four fundamental types of validity, based on Campbell and Stanley's classic work.6 These are internal validity, external validity, statistical conclusion validity, and construct validity.

Internal Validity. The ability of the research design to answer the research question accurately is known as internal validity. If a design has internal validity, the investigator can state with a degree of confidence that the reported outcomes are the consequence of the relationship between the independent variable and dependent variable and not the result of extraneous factors. Campbell and Stanley6 identified seven major factors that pose a threat to the researcher's ability to determine whether the observable outcome is a function of the study or the result of external and unintended forces (Box 8-3).

To illustrate these threats to validity, consider a hypothetical example.

Consider how each of the seven threats to internal validity affects your research outcomes.

Another alternative explanation for the observed reduction in risk behavior may be the testing situation itself. Participants who are aware of what is being observed and recorded may answer more cautiously. In other words, the testing procedures may pose yet another threat to the internal validity of the design. The reduction in reported risk behaviors may be a function of participants actively changing or adjusting their behaviors or thoughts on the topic as a consequence of participating in a group discussion with peers. The test itself or mode of data collection may have influenced a change in behavior independent of the effect of participating in the intervention. Therefore, it is difficult to determine whether observed change is a consequence of the test, of the intervention, or both.

This example also illustrates the threat to validity posed by instrumentation. In this example, the instrumentation may not be measuring what was intended. Instead of measuring risk behavior, the instrumentation may be a more accurate indicator of group norms related to sexual decision making.

Another way in which instrumentation poses a threat to a study is through changes that may occur within interviewers over time or with the instrument itself. For example, if you are collecting data regarding weight or blood pressure, any deviation in the calibration of a scale or blood pressure cuff from one testing occasion to the next will pose a significant threat to the validity of the data that are obtained.

Further, interviewer fatigue or any change in the way interviewers pose questions may cause a deviation in responses that will affect how the investigator interprets the findings.

The threat of regression frequently occurs when subjects with extreme scores are selected to participate in a study.

This change in scores, however, may be a consequence of a statistical principle known as “statistical regression toward the mean,” in which extreme scores tend to move toward the mean on repeated testing.
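Regression toward the mean can be demonstrated with a small simulation. The sketch below assumes that each observed score is a stable “true” score plus random measurement error, and that no intervention occurs between the two testing occasions. All names, distributions, and parameter values are hypothetical choices made for illustration.

```python
import random


def simulate_retest(n_people=2000, top_fraction=0.10, seed=0):
    """Select the highest scorers on a first test and return their
    mean score on the first test and on a retest."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(50, 10) for _ in range(n_people)]
    # Each testing occasion adds its own independent measurement error.
    test1 = [score + rng.gauss(0, 10) for score in true_scores]
    test2 = [score + rng.gauss(0, 10) for score in true_scores]

    n_selected = int(n_people * top_fraction)
    ranked = sorted(range(n_people), key=lambda i: test1[i], reverse=True)
    selected = ranked[:n_selected]

    mean_first = sum(test1[i] for i in selected) / n_selected
    mean_retest = sum(test2[i] for i in selected) / n_selected
    return mean_first, mean_retest


mean_first, mean_retest = simulate_retest()
print(f"extreme group, first test: {mean_first:.1f}")
print(f"extreme group, retest:     {mean_retest:.1f}")
```

The selected group scores lower on the retest even though nothing was done to its members, which is why a change in an extreme group cannot be attributed to an intervention without a comparison group.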

Thus, experimental mortality poses a threat to the interpretation of study findings. Numerous interactive effects could also confound the accuracy of the findings.

External Validity. External validity refers to the capacity to generalize findings and develop inferences from the sample to the study population stipulated in the research question. External validity answers the question of “generalizability.”

These factors represent some of the threats to external validity that may occur in a study. Although randomization decreases some of the threats to external validity, even when a sample is randomly selected, threats remain.7 The two threats in Box 8-4 result from the experimental condition, not from the limitations of sample selection. Consider your own behavior when being watched in a simulated situation. Certainly you act differently than you would under similar but unobserved and nonsimulated conditions.

The internal validity and external validity of a study are interrelated. As an investigator attempts to increase the internal validity of a study, the ability to make broad generalizations and inferences to a larger population decreases. That is, as more controls are implemented and extraneous factors are eliminated from a study design, the population to whom findings can be generalized becomes more limited. Thus, although investigators try to enhance the internal validity of a study to ensure that valid and accurate conclusions can be drawn, these efforts may limit the degree of external validity of the findings. It is a balancing act, but the investigator must err on the side of ensuring that the study has internal validity first, then external validity. If a study lacks internal validity, there would be no purpose in generalizing to a larger population. As you read further in this text, look for ways to balance internal validity and the scope of generalization that may be possible.

Statistical Conclusion Validity. Statistical conclusion validity refers to the power of your study to draw statistical conclusions. One aim of experimental-type research is to find relationships among variables and ultimately to predict the nature and direction of these relationships. Support for relationships is contained in the action process of statistical analysis. However, selection of statistical techniques is based on many considerations. Even though the expectation of making errors in determining relationships is built into the theory and practice of statistical analysis, the accuracy and potential of the statistics to support or predict a relationship among variables must be considered as a potential threat to the validity of a design.

Construct Validity. Construct validity addresses the fit between the constructs that are the focus of the study and the way in which these constructs are operationalized. As stated earlier, constructs are abstract representations of what humans observe and experience. The method used to define and measure constructs accurately is in large part a matter of opinion and consensus. It is possible for several factors related to construct validity to confound a study. First, a researcher may define a construct inappropriately.

Poor or incomplete operational definitions can result from incomplete conceptual definitions or from inadequate translation of the construct into an observable one.

Second, when a cause-and-effect relationship between two constructs is determined, it may be difficult to define each construct exclusively (referring to both independent and dependent variables) and to isolate the effects of one on the other.

Reliability

Reliability refers to the stability of a research design. In experimental-type design, rigorous research is shown to be well planned and properly executed when, if repeated under the same circumstances, the design would yield the same results. Investigators frequently replicate or repeat a study in the same population or in different groups to determine the extent to which the findings of one study are accurate in a broader scope. To replicate a study, the procedures, measures, and data analysis techniques must be consistent, well articulated, and appropriate to the research question. Reliability is threatened when a researcher is not consistent and changes a design in midstream, does not articulate procedures in sufficient detail for replication, or does not fully plan a sound design.

Experimental-Type Design Summary

Design is a pivotal concept in the experimental-type research process that indicates both the structure and the plan of the action processes. Experimental-type designs are developed to eliminate bias and the intrusion of unwanted factors that could confound findings and make them less credible. Five primary considerations in the plan of a design are bias, control, manipulation, validity, and reliability. Four types of validity must be considered because threats to each can compromise study outcomes: internal, external, statistical conclusion, and construct.

Experimental-type research is designed to minimize the threats posed by extraneous factors and bias by maximizing control over the research action process. In Chapter 9, we discuss how each type of design in the experimental-type tradition addresses these considerations in structuring and planning a design and using action processes to increase control.

Naturalistic language and thinking processes

Let us now turn our attention to the attributes of naturalistic inquiry and the implications for design with these traditions. Eight elements are common to designs in the naturalistic tradition, as listed in Box 8-5.

Purpose

Designs within the tradition of naturalistic research vary in purpose from developing descriptive knowledge to evolving full-fledged theories about observed or experienced phenomena. As discussed in earlier chapters, naturalistic designs tend to be preferred when no adequate theory exists to explain a human phenomenon or when the investigator believes that existing theory and explanations are not accurate, true, or complete. The structure of naturalistic designs is exploratory, enabling new insights and understandings to be revealed without the imposition of preconceived concepts, constructs, and principles.

Although the specific purpose of a study may differ, all naturalistic designs seek to describe, understand, or interpret daily life experiences and structures within the contexts in which they occur. Naturalistic inquiry has emerged through five phases of development to its current form of action-oriented social criticism, in which grand narratives are replaced by more local, small-scale theories fitted to specific problems and specific situations.9 Thus, the importance of naturalistic inquiry is now widely recognized because of its usefulness in examining and revealing phenomena that can help guide health and human service practitioners to address specific problems that emerge in the clinical context or explain why or how particular programs or interventions benefit individuals.

Context Specificity

As in Tally's Corner, to discover “new truth” rather than impose an existing frame of reference, the investigator proceeding from a naturalistic tradition must travel to the setting where the human phenomena occur or must seek information from the perspective of individuals who experience the phenomena of interest. Because of the revelatory nature of naturalistic research, this form of investigatory action is conducted in a natural context or seeks explanations of the natural context from those who experience it. Naturalistic research is therefore context-specific, and the “knowing” derived from this context is embedded in the context and does not extend beyond it. The designs in the tradition of naturalistic inquiry share this basic attribute of context specificity and development of knowledge that is grounded in or linked to the data that emerge from a particular field.

Complexity and Pluralistic Perspective of Reality

As a result of its underlying epistemology and its inductive and abductive approaches to knowing, naturalistic research assumes a pluralistic perspective of reality that characterizes complexity.

With inductive reasoning, principles emerge from seemingly unrelated information. One of its hallmarks is that the information can be organized differently by each individual who thinks about it. The end result of induction is the development of a complex set of relationships among smaller pieces of information (not the reduction of principles to their parts, as in deductive reasoning). It is therefore possible that the same information may have different meanings or that there may be pluralistic interpretations by different individuals. Adding abduction to this equation ensures that the information will be revisited and reanalyzed at several points, thereby sharpening and rendering the accuracy of the interpretation more credible and trustworthy.

Transferability of Findings

The findings from naturalistic design are specific to the research context. It is not the desired outcome of naturalistic design to generalize findings from a small sample and apply this generalization to a larger group of persons with similar characteristics. You may ask, “Why bother doing a naturalistic study if you cannot use the research beyond the actual scope of the study?” The answer lies in one of the primary purposes of naturalistic research: generating theory. Naturalistic researchers use their methods and findings to generate theory and to reveal the unique meanings of human experience in human environments. Because the investigator assumes that current knowledge does not adequately explain the phenomenon under investigation, the outcome of naturalistic design is the emergence of explanations, principles, concepts, and theories. Liebow illustrated this point well when he stated:

There is no attempt here to describe any Negro men other than those with whom I was in direct immediate association. To what extent this descriptive and interpretive material is applicable to Negro street corner men elsewhere in the city or in other cities or to lower class men generally in this society or any other society is a matter of further and later study. This is not to suggest that we are dealing with unique or even distinctive persons and relationships. Indeed, the weight of the evidence is the other direction.10

In this passage, even though the researcher highlighted the contextual limitations of the knowledge, he also indicated that the principles revealed in his study could have relevance to other arenas. Further, he suggested that additional research is the appropriate means to ascertain the degree of fit, or what Guba12 called the “transferability of findings,” to other similar populations and contexts.

Because phenomena are context bound and, according to naturalistic principles, cannot be understood apart from the context in which they occur, naturalistic inquiry is not concerned with the issue of generalizability or external validity as articulated in experimental-type inquiry. Rather, naturalistic inquiry is concerned with understanding the richness and depth in one context and with the capacity to retain unique meanings that are lost when generalization is a goal.9 The development of “thick”13 and in-depth descriptions and interpretations of different contexts leads to the ability to transfer meanings in one context to another context. In so doing, the researcher is able to compare and contrast contexts and their elements to gain new insights as to the specific context itself.

Flexibility

The experimental-type research design provides the basic structure and plan for the thinking and action processes of the research endeavor. The design must be followed as initially developed. In naturalistic research, however, the opposite is the case; design is a fluid, flexible concept. Design labels such as “ethnography,” “life history,” and “grounded theory” suggest a particular purpose of the inquiry and orientation of the investigator. However, these labels do not refer to the specification of step-by-step procedures, a blueprint for action, or a predetermined structure to the data gathering and analytical efforts.

Rather, a characteristic of naturalistic design is flexibility. An important and expected feature of a naturalistic study is that the procedures and plans for conducting it will change as the research proceeds. The investigator may use the results of preliminary findings as guidance for planning or altering subsequent action processes. Not only do procedures change, but the nature of the research query, the scope of the study, and the manner by which information is obtained are also constantly reformulated and realigned to fit the emerging truths as they are discovered in the inquiry. This flexibility is illustrated more fully in Chapters 17 and 20.

Language

A major shared concern in naturalistic inquiry is understanding language and meanings. This concern with language is not only relevant to the ethnographer, who studies a cultural context in which the language used is different from that of the investigator. Even within the same cultural or language context, people use and understand language differently. For some naturalistic investigators, language, symbols, and ways of expression provide the data through which the investigator comes to understand and derive meaning within each context.14 Investigators proceeding from a postmodern philosophical foundation are particularly concerned with language itself. In contrast to previous philosophies that attribute meaning to symbols, postmodern thinkers suggest that language as a set of symbols does not necessarily have any of the underlying reality that the symbols describe.15

Depending on the philosophical approach, the investigator concerned with language may engage in a rigorous and active analytical process to “translate” the meaning and structure of the context of the studied population into meanings and language structures represented in the investigator's world. In this type of inquiry, the investigator must be careful to represent the meanings and intent of expression accurately when translating them during analysis and reporting. As mentioned, however, not all investigators concerned with language believe that naturalistic inquiry can reveal meaning. Postmodern inquiry aims to “deconstruct” language, or unravel it to illustrate its arbitrary structure and identify its political and purposive usage. For example, a postmodern investigator might examine the text of legislation designed to protect women against domestic violence to uncover the metaphors that help retain men's dominance and political power over women.

Throughout the full range of philosophical approaches that underpin the naturalistic tradition, the description and analysis of language is a primary concern.

Emic and Etic Perspectives

Design structures vary as to their emic or etic orientation. An emic perspective refers to the insider's or informant's way of understanding and interpreting experience. This perspective is phenomenological in that experience is understood as only that which is perceived and expressed by informants. Data gathering and analytical actions are designed to enable the investigator to bring forth and report the voices of individuals as they speak and interpret their unique perceptions of their reality. The concern with the emic perspective is often the motivator for naturalistic inquiry. For example, in her classic work,16 Krefting explained why she decided to study head injury from an ethnographic perspective, highlighting the preference for an emic viewpoint shared by designs in this tradition, as follows:

Until recently, the investigation of health problems was dominated by the outsider perspective, in which important questions of etiology and treatment are identified by the professions. Studies based on this perspective assume that professionals are the authorities on what wellness is and that they alone know what questions ought be asked to investigate methods to promote and maintain wellness while preventing and treating illness… . Ethnographic studies of the experience of illness and disability … consider what these experiences look like from an insider's perspective.16

An etic orientation reflects the structural aspects of a group, or those that are external to it; that is, the etic perspective is held by those who do not belong to the group being investigated but who select an analytical and epistemic lens through which to examine information. Unlike the emic perspective, in which those who experience a phenomenon are considered to be most knowledgeable, the etic perspective assumes that those who do not experience a phenomenon can come to know it by (1) structuring an investigation, (2) selecting a theoretical foundation that expands beyond the group being examined, and (3) interpreting the data through that lens.17 Many investigators integrate an emic with an etic perspective. Typically, investigators will start by using an emic perspective, or a focus on the voices of individuals. Further along in the process, other pieces of information are collected and analyzed to place individual articulation and expression within a social structural or systemic framework. Although she started with an emic consideration, Krefting used a range of data collection strategies to integrate her understandings with an etic viewpoint, as follows:

While gathering data, I was able to review theory and check it for pertinence. There was, then, a constant movement back and forth between the concrete data and the social science concepts that helped explain them.16

Some designs, such as phenomenology and life history, favor only an emic orientation. Frank described her preference for an emic perspective and the purpose of her life history approach as follows:

The life history of Diane DeVries represents a collaborative effort, between the subject and researcher, to produce a holistic, qualitative account that would bear on theoretical issues, but that primarily and essentially would convey a sense of the personal experience of severe congenital disability. The life history, conceived in this way, emerged from a humanistic interest in presenting the voices of people often unheard, yet whose lives were otherwise studied, and acted upon, based on data that are decontextualized and fragmented from the standpoint of the individual.18

Because the investigator enters the inquiry having bracketed, suspended, or let go of any preconceived concepts, the naturalistic researcher defers to the informant or experiencer as the “knower.” This abrogation of power by the investigator to the investigated is characteristic of naturalistic designs, to a greater or lesser degree.

Where the investigator stands regarding an emic or etic perspective shapes the overall design that is chosen, as well as the specific data collection and analytical action processes that emerge within the context of the inquiry.

Gathering Information and Analysis

Analysis in naturalistic designs relies heavily on qualitative data and is an ongoing process throughout data-gathering activities. Thus, in the naturalistic tradition, data gathering and analysis are interdependent processes. In collecting information or data, the investigator engages in an active analytical process. In turn, the ongoing analytical activity frames the scope and direction of further data collection efforts. This interactive, iterative, and dynamic process is characteristic of designs within the tradition of naturalistic inquiry and is explored more fully later in this text.

Naturalistic Design Summary

The purpose of naturalistic inquiry and the nature of the concept of design in this tradition are vastly different from experimental-type research. The language and thinking processes that characterize naturalistic design are based on the notion that knowing is pluralistic and that knowledge derives from understanding multiple experiences. Thus, the thinking process is inductive and abductive; the action processes are dynamic and changing; and these processes are carried out within the actual or theoretical context in which the phenomena of interest occur or are experienced. The outcome of these thinking and action processes is the generation of theory, principles, or concepts that explain human experience in human environments and capture its complexity and uniqueness.

Within any naturalistic design, the investigator can implement the 10 essentials in various ways. Designs range from informant-driven with no predetermined structure to researcher-driven with more structure.

The nature of qualitative inquiry has evolved in its forms, scope, and purposes.9 Naturalistic inquiry is continuing to evolve in its standards, vocabulary, and criteria for design adequacy, and it is becoming a well-respected tradition in its own right as it is increasingly used to answer questions about health and human service practice.

Integrated and mixed-method approaches

As discussed in previous chapters of this book, studies that integrate experimental-type and naturalistic traditions have increased despite skepticism about philosophical inconsistencies. The mixed-method approach for advancing knowledge has recently been referred to as the “third tradition”19 with a foundation in pragmatism.

Mixing methods is a systematic way to address the limitations of each approach, and we therefore support its use whenever possible. As discussed, however, both experimental-type and naturalistic traditions conform to diverse sets of rules, languages, and strategies for rigor. As such, the investigator who proceeds with an integrated approach must heed the important tenets of both traditions as well as the emerging tenets of a third tradition. Because of its nascence and limited epistemological underpinning, we do not yet view a mixed-method approach as a solid tradition in itself. Rather, at this point in its development, we view it as an attempt to integrate experimental-type and naturalistic approaches within a defined and purposive context and to incorporate language and thinking processes from each of the two traditions.

Summary

Experimental-type and naturalistic traditions have distinct language and thinking processes. Experimental-type researchers approach their studies with intact theory and a set of procedures. Procedures are developed and followed to eliminate the potential for factors other than those studied to be responsible for the findings. In contrast, naturalistic language and thinking processes are flexible, fluid, and changing. As the investigator proceeds through the research, the aim of capturing complexity from the perspective of the individuals or phenomena studied is actualized through systematic but dynamic interaction between collecting and analyzing information.

References

1. Kerlinger, F.N. Foundations of behavioral research, ed 2. New York: Holt, Rinehart, & Winston, 1973:279.

2. Wilson, J. Thinking with concepts. Cambridge, Mass: Cambridge University Press, 1966:54.

3. Babbie, E. The practice of social research, ed 12. Belmont, Calif: Wadsworth, 2009.

4. Kerlinger, F.N. Foundations of behavioral research, ed 2. New York: Holt, Rinehart, & Winston, 1973:39.

5. Gitlin, L.N., Winter, L., Corcoran, M., et al. Effects of the home environmental skill-building program on the caregiver-care recipient dyad: six-month outcomes from the Philadelphia REACH Initiative. The Gerontologist. 2003;43:532–546.

6. Campbell, D.T., Stanley, J.C. Experimental and quasi-experimental design. Chicago: Rand McNally, 1963.

7. Neuman, W.L. Social research methods: qualitative and quantitative approaches, ed 5. Boston: Allyn & Bacon, 2003.

8. Babbie, E. The practice of social research, ed 12. Belmont, Calif: Wadsworth, 2009.

9. Denzin, N.K., Lincoln, Y.S. Handbook of qualitative research, ed 3. Thousand Oaks, Calif: Sage, 2003.

10. Liebow, E. Tally's corner. Boston: Little Brown, 1967.

11. Maruyama, M. Endogenous research: the prison project. In: Reason P., Rowan J., eds. Human inquiry: a sourcebook for new paradigm research. New York: Wiley & Sons, 1981.

12. Guba, E.G. Criteria for assessing the trustworthiness of naturalistic inquiries. Educ Commun Technol J. 1981;29:75–92.

13. Geertz, C. Thick description: toward an interpretive theory of culture. In: The interpretation of cultures: selected essays. New York: Basic Books, 1973.

14. Hodge, R., Kress, G. Social semiotics. Ithaca, NY: Cornell University Press, 1988.

15. Silverman, D. Doing qualitative research: a practical handbook, ed 2. Thousand Oaks, Calif: Sage, 2004.

16. Krefting, L. Reintegration into the community after head injury: the results of an ethnographic study. Occup Ther J Res. 1989;9:67–83.

17. Headland, T.N., Pike, K.L., Harris, M. Emics and etics: the insider/outsider debate. Newbury Park, Calif: Sage, 1990.

18. Frank, G. Life history model of adaptation to disability: the case of a congenital amputee. Soc Sci Med. 1984;19:639–645.

19. Tashakkori, A., Teddlie, C. Sage handbook of mixed methods social and behavioral research. Thousand Oaks, Calif: Sage, 2003.

The construct of “function” may be inferred from observing concepts such as grooming, personal hygiene, and work or asking individuals to appraise their function in discrete areas. However, the construct of function is not observable unless it is broken down into its component parts or concepts.
